At DVCon I had a chance to discuss PSS and real-life applications with Tom Anderson (product management director at Cadence). Tom is very actively involved in the PSS working group and is now driving the Cadence offering in this area (Perspec System Verifier), so he has a pretty good perspective on the roots, the evolution and practical experiences with this style of verification.

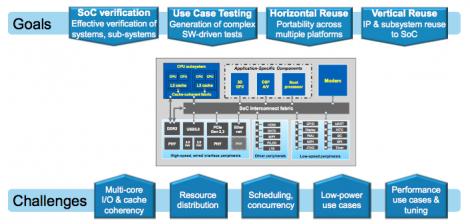

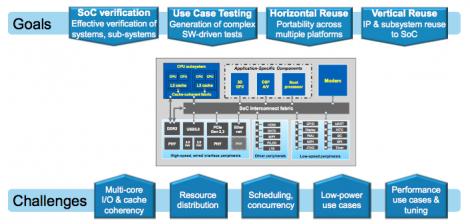

PSS grew out of the need to address an incredibly complex system verification problem, which users vociferously complained was not being addressed by industry-standard test-bench approaches (DVCon 2014 hosted one entertaining example). High on the list of complaints were the challenges of managing software- and use-case-based testing in hardware-centric languages, reusing tests across diverse verification engines and across IP, sub-system and SoC testing, and managing tests with complex constraints, such as varying power configurations, layered on top of all that other complexity. Something new was obviously needed.

Of course, the hope in cases like this is “#1: make it handle all that additional stuff, #2: make it incremental to what I already know, #3: minimize the new stuff I have to learn”. PSS does a pretty good job with #1 and #3 but some folks may feel that it missed on #2 because it isn’t an incremental extension to UVM. But reasonable productivity for software-based testing just doesn’t fit well with being an extension to UVM. Which is not to say that PSS will replace UVM. All that effort you put into learning UVM and constrained-random testing will continue to be valuable for a long time, for IP verification and certain classes of (primarily simulation-based) system verification. PSS is different because it standardizes the next level up in the verification stack, to serve architects, software and hardware experts and even bring-up experts.

That sounds great, but some observers wonder if it is over-ambitious, a nice vision which will never translate to usable products. They're generally surprised to hear that solutions of this type are already in production and have been in active use for a few years; Perspec System Verifier is a great example. These tools predate the standard, so their input isn't exactly PSS, but the concepts are very similar. And as PSS moves towards ratification, vendors are busy syncing up, just as they have in the past for SVA and UVM. Tom told me that officially the standard should be released in the second half of 2017.

How does PSS work? For reasons that aren't important here, the standard allows for two specification languages: DSL and a constrained form of C++. I'm guessing many of you will lean to DSL so I'll base my 10-cent explanation on that language (and I'll call it PSS to avoid confusion). The first important thing to understand is that PSS is a declarative language, unlike most languages you have seen, which are procedural. C and C++ are procedural, as are SV, Java and Python. Conversely, scripting for yacc, Make and HTML is declarative. Procedural languages are strong at specifying exactly "how" to do something. Declarative languages expect a definition of "what" you want to do; they'll figure out how to make that happen by tracing through dependencies and constraints, eventually getting down to leaf-level nodes where they execute little localized scripts ("how") to make stuff happen. If you've ever built a Makefile, this should be familiar.
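To make the Makefile analogy concrete, here's a minimal Python sketch of the declarative pattern. It illustrates the idea only and is not PSS syntax. The rules say *what* each target depends on; the `plan` function works out *how*, by tracing dependencies back from the goal and ordering them so leaf-level recipes run first. All target names and recipe strings are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Declarative part: what each (hypothetical) target needs, not the order to build it.
RULES = {
    "report":   {"deps": ["sim_log"],          "recipe": "summarize sim_log > report"},
    "sim_log":  {"deps": ["test_bin", "rtl"],  "recipe": "run_sim test_bin rtl > sim_log"},
    "test_bin": {"deps": ["test_src"],         "recipe": "compile test_src -o test_bin"},
    "rtl":      {"deps": [],                   "recipe": "fetch rtl"},
    "test_src": {"deps": [],                   "recipe": "generate test_src"},
}


def plan(goal: str, rules: dict) -> list[str]:
    """Work out the 'how': collect everything the goal needs, then order it."""
    graph = {}
    stack = [goal]
    while stack:
        target = stack.pop()
        if target in graph:
            continue
        graph[target] = rules[target]["deps"]
        stack.extend(rules[target]["deps"])
    # static_order() yields each target only after all of its dependencies.
    return list(TopologicalSorter(graph).static_order())


def run(goal: str, rules: dict) -> None:
    """Execute the small, localized leaf-level scripts in dependency order."""
    for target in plan(goal, rules):
        print(f"[{target}] {rules[target]['recipe']}")
```

Calling `run("report", RULES)` prints the five recipes with `test_src` before `test_bin` and `sim_log` last but one; asking for `plan("test_bin", RULES)` touches only the two targets that goal actually needs.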

PSS is declarative and starts with actions which describe behavior. At the simplest level these can be things like receiving data into a UART or DMA-ing from the UART into memory. You can build up compound actions from a graph of simple actions and these can describe multiple scenarios; maybe some steps can be (optionally) performed in parallel, some must be performed sequentially. Actions can depend on resources and there can be a finite pool of resources (determining some constraints).
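Here's a rough Python model of that idea, assuming a compound action is just a set of leaf actions plus "must come before" rules, and that a schedule is a list of steps where actions in the same step run in parallel. It is a brute-force illustration, not how PSS or Perspec represent actions. The action names, resource names and pool sizes are hypothetical; the point is that the size of the resource pool alone decides which parallel combinations are legal.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Action:
    name: str
    needs: tuple = ()  # resource types this action locks while it runs


# Hypothetical leaf actions echoing the UART/DMA example.
UART_RX = Action("uart_rx", ("uart",))
DMA_UART_TO_MEM = Action("dma_uart_to_mem", ("dma_chan",))
DMA_MEM_TO_MEM = Action("dma_mem_to_mem", ("dma_chan",))

# Compound action: the actions it contains and the sequential constraints.
ACTIONS = [UART_RX, DMA_UART_TO_MEM, DMA_MEM_TO_MEM]
MUST_PRECEDE = [(UART_RX, DMA_UART_TO_MEM)]  # can't DMA data before it arrives
POOL = {"uart": 1, "dma_chan": 1}            # finite resource pool (assumed sizes)


def fits_pool(step, pool):
    """True if the actions running together in one step fit in the pool."""
    demand = Counter(r for a in step for r in a.needs)
    return all(demand[r] <= pool.get(r, 0) for r in demand)


def legal_schedules(actions, must_precede, pool):
    """Enumerate every legal schedule: a list of steps, each a set of parallel actions."""
    n = len(actions)
    found = []
    for slots in product(range(n), repeat=n):
        last = max(slots)
        # Only accept step numbers 0..last with no gaps, so each schedule appears once.
        if set(slots) != set(range(last + 1)):
            continue
        slot_of = dict(zip(actions, slots))
        if any(slot_of[a] >= slot_of[b] for a, b in must_precede):
            continue  # a sequential constraint is violated
        steps = [frozenset(a for a in actions if slot_of[a] == s) for s in range(last + 1)]
        if all(fits_pool(step, pool) for step in steps):
            found.append(steps)
    return found
```

With one DMA channel this finds four legal schedules, one of which runs `uart_rx` in parallel with `dma_mem_to_mem`; widening the pool to two channels adds a fifth, where both DMA transfers run together.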

Then you build up higher-level actions around lower-level actions, all the way up to running multiple scenarios of receiving a call, Bluetooth interaction, navigating, power-saving mode-switching and whatever else you have in the kitchen sink. You don't have to figure out scenarios through the hierarchy of actions; just as in constrained random, a tool-specific solver will figure out legal scenarios. Hopefully you begin to get a glimmer of the immense value in specifying behavior declaratively in a hierarchy of models. You specify the behavior for a block and that can be reused and embedded in successively higher-level models with no need for rewrites to lower-level models.
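A toy version of that hierarchy in Python, assuming activities are trees of "seq" (do children in order) and "select" (pick one child) nodes. The lower-level models are written once and embedded unchanged in a higher-level model; `expand` enumerates the scenarios the tree allows, and `solve` is a brute-force random pick standing in for a real constraint solver. Every action name and the low-power rule are hypothetical.

```python
import random
from itertools import product


def seq(*kids):
    """Compound node: run one scenario of each child, in order."""
    return ("seq", list(kids))


def select(*kids):
    """Compound node: pick exactly one child."""
    return ("select", list(kids))


# Lower-level models, written once...
receive_call = seq("modem_wake", "audio_route")
bt_transfer = seq("bt_pair", select("bt_audio", "bt_file"))
navigate = seq("gps_fix", "map_render")
power_switch = select("enter_low_power", "stay_active")

# ...and reused unchanged inside a higher-level model.
phone_day = seq(power_switch, select(receive_call, bt_transfer, navigate))


def expand(node):
    """Yield every flattened scenario (tuple of leaf actions) the tree allows."""
    if isinstance(node, str):
        yield (node,)
        return
    kind, kids = node
    if kind == "select":
        for kid in kids:
            yield from expand(kid)
    else:  # "seq": one scenario from each child, concatenated in order
        for parts in product(*(list(expand(k)) for k in kids)):
            yield tuple(leaf for part in parts for leaf in part)


def legal(scenario):
    # Assumed system-level constraint: no GPS navigation in low-power mode.
    return not ("enter_low_power" in scenario and "gps_fix" in scenario)


def solve(model, rng):
    """Brute-force stand-in for a solver: pick one legal scenario at random."""
    return rng.choice([s for s in expand(model) if legal(s)])
```

Nothing in `bt_transfer` had to change to embed it in `phone_day`; the top-level model allows eight raw scenarios, and the constraint prunes them to seven legal ones for the solver to choose from.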

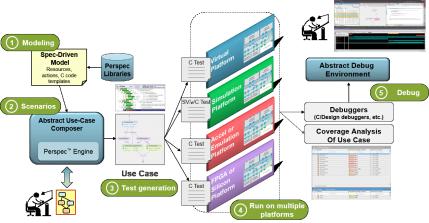

Of course, I skipped over a rather important point in the explanation so far; at some point this must drop down to real actions (like the little scripts on Makefile leaf-nodes). And it must be able to target different verification platforms; where does all that happen? I admit this had me puzzled at first, but Tom clarified for me. I'm going to use Perspec to explain the method, though the basics are standard in PSS. An action can contain an exec body. This could be a piece of SV, or UVM (maybe instantiating a VIP) or C; this is what ultimately will be generated as a part of the test to be run. C might run on an embedded CPU, or in a virtual model connected to the DUT, or may drive a post-silicon host bus adapter. I'm guessing you might have multiple possible exec blocks depending on the target, but I confess I didn't get deeper on this.
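Following my guess above, here is a small Python sketch in which each leaf action carries one exec body per target, and the generator stitches together the bodies for whichever target you choose. The target names (`sim_sv`, `embedded_c`) and the SV/C snippets are hypothetical strings, not real VIP or driver calls, and this is not how Perspec or the PSS standard actually organizes exec blocks.

```python
from dataclasses import dataclass, field


@dataclass
class LeafAction:
    name: str
    # Hypothetical per-target exec bodies: target name -> code snippet to emit.
    exec_bodies: dict = field(default_factory=dict)


uart_rx = LeafAction("uart_rx", {
    "sim_sv": "uart_env.rx_seq.start(uart_env.agent.sequencer);",
    "embedded_c": "uart_receive(UART0, rx_buf, RX_LEN);",
})
dma_copy = LeafAction("dma_copy", {
    "sim_sv": "dma_env.copy_seq.start(dma_env.agent.sequencer);",
    "embedded_c": "dma_start(DMA0, rx_buf, dst_buf, RX_LEN); dma_wait(DMA0);",
})


def generate_test(scenario, target):
    """Emit test text for one scenario by picking each action's body for the target."""
    lines = [f"// generated test for target: {target}"]
    for action in scenario:
        body = action.exec_bodies.get(target)
        if body is None:
            raise ValueError(f"action {action.name!r} has no exec body for {target!r}")
        lines.append(f"// {action.name}")
        lines.append(body)
    return "\n".join(lines)
```

`generate_test([uart_rx, dma_copy], "embedded_c")` yields the C calls in scenario order, while the same scenario with `"sim_sv"` yields the sequence starts; a target with no body (say, a post-silicon adapter) fails loudly instead of producing a partial test.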

So in the Perspec figure above, once you have built a system-level test model with all kinds of possible (hierarchically composed) paths, the Perspec engine can "solve" for multiple scenario instances (each spit out as a separate test), with no further effort on your part. And tests can be targeted to any of the possible verification engines. Welcome to a method that can generate system-level scenarios faster than you could hope to, with better coverage than you could hope to achieve, runnable on whichever engine is best suited to your current objective (maybe you want to take this test from emulation back into simulation for closer debug? No problem, just use the equivalent simulation test.)
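The "solve once, emit many tests, retarget at will" flow can be sketched in a few lines of Python. This assumes a flat model in which each slot picks one option and a rule prunes illegal combinations; brute-force enumeration plus seeded random sampling stands in for a real solver. The slot names, the Bluetooth rule and both output formats are hypothetical.

```python
import random
from itertools import product

# Hypothetical model: each slot picks one option.
SLOTS = {
    "power": ["low_power", "active"],
    "traffic": ["uart_to_mem", "mem_to_mem", "bt_audio"],
    "cpu": ["cpu0", "cpu1"],
}


def legal(scn):
    # Assumed rule: Bluetooth audio is not allowed in low-power mode.
    return not (scn["power"] == "low_power" and scn["traffic"] == "bt_audio")


def all_scenarios():
    """Every legal combination of slot values."""
    keys = list(SLOTS)
    for combo in product(*(SLOTS[k] for k in keys)):
        scn = dict(zip(keys, combo))
        if legal(scn):
            yield scn


def solve(n, seed):
    """Pick n distinct legal scenarios; each one becomes a separate test."""
    return random.Random(seed).sample(list(all_scenarios()), n)


def render(scn, target):
    """Emit the same scenario for a chosen engine (hypothetical output formats)."""
    steps = [scn["power"], f'{scn["traffic"]}@{scn["cpu"]}']
    if target == "simulation":
        return "\n".join(f'run_step("{s}");' for s in steps)
    if target == "emulation":
        return "\n".join(f"emu_step {s}" for s in steps)
    raise ValueError(f"unknown target {target!r}")
```

`solve(4, seed=1)` returns four distinct legal scenarios out of the ten the rule allows, and any one of them can be rendered for emulation and then re-rendered for simulation when you want closer debug; the scenario itself never changes, only the output.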

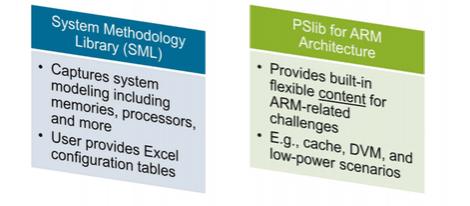

We're nearly there. One last question: where do all those leaf-level actions and exec blocks come from? Are you going to have to build hundreds of new models to use Perspec? Tom thinks that anyone who supplies IPs is going to be motivated to provide PSS models pretty quickly (especially if they also sell a PSS-based solution). Cadence already provides a library for the ARM architecture and an SML (system methodology library) to handle modeling for memories, processors and other components. They also provide a method to model other components starting from simple Excel tables. He anticipates that, as the leading VIP supplier, Cadence will be adding support for many of the standard interfaces and other standard components over time. So you may have to generate PSS models for in-house IP, but it's not unreasonable to expect that IP and VIP vendors will quickly catch up with the rest.

This is well-proven stuff. Cadence already has public endorsements from TI, MediaTek, Samsung and Microsemi. These include customer claims for 10x improvement in test generation productivity (Tom told me the Cadence execs didn’t believe 10x at first – they had to double-check before they’d allow that number to be published.) You can get a much better understanding of Perspec and a whole bunch of info on customer experiences with the approach HERE.
