I’m guessing that more than a few people were mystified (maybe still are) when Cadence acquired OpenEye Scientific, a company known for computational molecular design aimed at medical drug/therapeutics discovery. What could EDA, even SDA (system design automation), and drug discovery possibly have in common? More than you might imagine, but to see why you first need to understand Anirudh’s longstanding claim that Cadence is at heart a computational software company, which I read as meaning expert in big scientific/engineering applications. EDA is one such application, computational fluid dynamics (e.g., for aerodynamics) is another, and computational molecular design (for drug design) is yet another. I sat in on a presentation by Geoff Skillman (VP R&D of Cadence Molecular Sciences, previously OpenEye) at Cadence Live 2024, and what I heard was an eye opener (pun intended) for this hard-core EDA guy.

The dynamics and challenges in drug design

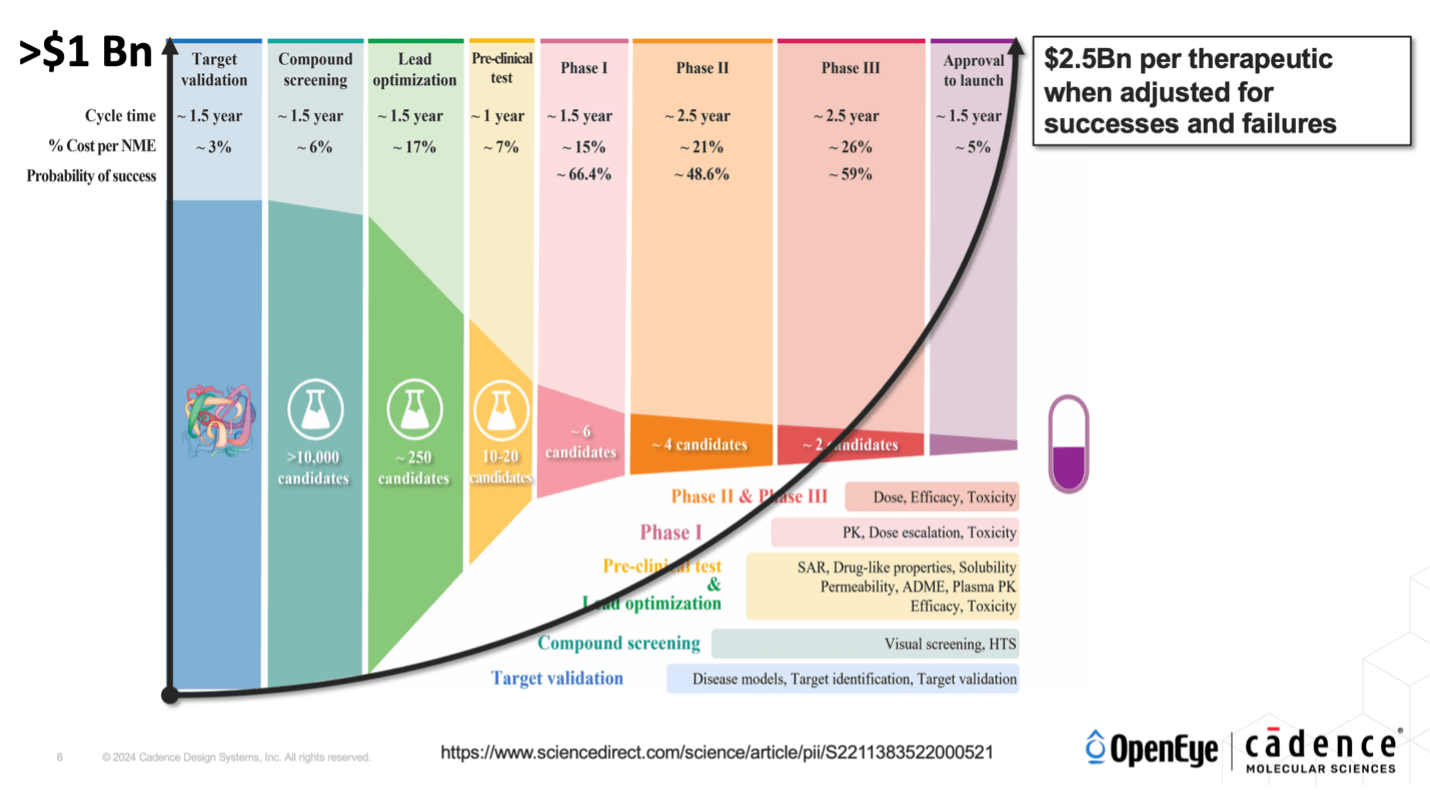

Developing a new drug echoes the path to developing a new semiconductor design, only much worse: a 12-year cycle from start to delivery; a very high fallout rate (90% of trials end in failure); an average $2.5B NRE per successful drug when averaged together with failed trials. I can’t imagine any semiconductor enterprise even contemplating this level of risk.

At least half of that time is consumed in clinical trials and FDA approval, stages we might not want to accelerate (I certainly don’t want to be a guinea pig for a poorly tested drug). The first half starts with discovery among a huge set of possibilities (10^60), screening for basic problems, optimizing, and running experiments in the lab. Unfortunately, biology is not nearly as cooperative as the physics underlying electronic systems. First, it is complex and dynamic, changing for its own reasons and in unforeseen responses to experiments. It has also evolved defenses over millions of years, seeing foreign substances as toxins to be captured and eliminated no matter how well intentioned. Not only must we aim to correct a problem, but we must also trick our way around those defenses to apply the fix.

A further challenge is that calculations in biology are approximate thanks to the sheer complexity and evolving understanding of bio systems. Worse yet, there is always a possibility that far from being helpful, a proposed drug may actually prove to be toxic. Adding experimental analyses helps refine calculations but these too have limited accuracy. Geoff admits that with all this complexity, approximation, and still artisanal development processes, it must seem like molecular science is stuck in the dark ages. But practitioners haven’t been sitting still.

From artisanal design to principled and scalable design

As high-performance computing options opened up in cloud and some supercomputer projects, some teams were able to sample these huge configuration spaces more effectively, refining their virtual modeling processes to a more principled and accelerated flow.

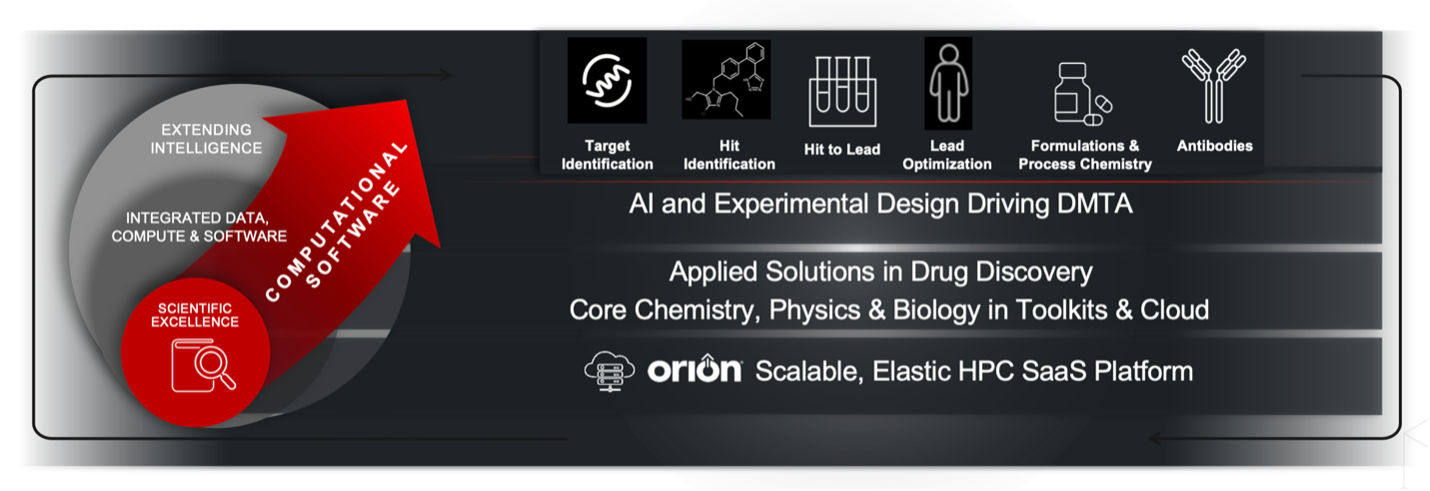

Building on these advances, Cadence Molecular Sciences now maps their approach to the Cadence 3-layer cake structure: their Orion scalable elastic HPC SaaS platform providing the hardware acceleration (and scaling) layer; a wide range of chemistry, physics, and biology tools and toolkits offering principled simulation and optimization over large configuration spaces; and AI/GenAI reinforced with experimental data in support of drug discovery.

At the hardware acceleration layer, they can scale to an arbitrary number of CPUs or GPUs. In the principled simulation/optimization layer they offer options to virtually screen candidate molecules (looking for similarity with experimentally known good options), and to study molecular dynamics, behaviors modeled with quantum mechanics, and free energy predictions (think objective functions in optimization). At the AI layer they can connect to the NVIDIA BioNeMo GenAI platforms, to AI-driven docking (to find the most favored ligand-to-receptor docking options) and to AI-driven optimization toolkits. You won’t be surprised to hear that all these tools/processes are massively compute intensive, hence the need for the hardware acceleration layer of the cake.

Molecular similarity screening is a real strength for Cadence Molecular Sciences, according to Geoff. Screening is the first stage in discovery, based on a widely accepted principle in medicinal chemistry that similar molecules will interact with biology in similar ways. This approach quickly eliminates random-guess molecules, of course, and also molecules with possibly strange behaviors or toxicities. Here they are comparing 3D shape and electrostatics for similarity, and in moving from 32x 3rd generation Xeon cores to 8x H100 GPU cores they have measured over 1100X faster performance and 15X better cost efficiency. When you’re comparing against billions of candidate molecules, that matters.
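For EDA readers who like to see the shape of an algorithm, here is a minimal sketch of similarity screening in general. It is not the Cadence Molecular Sciences implementation: I am assuming each molecule has already been reduced to a fixed-length numeric descriptor (a stand-in for 3D shape and electrostatics), and I am using the continuous Tanimoto coefficient, a common similarity measure in cheminformatics. The function names, the descriptor values, and the 0.7 threshold are all made up for illustration.

```python
from typing import Dict, List, Sequence, Tuple


def tanimoto(a: Sequence[float], b: Sequence[float]) -> float:
    """Continuous Tanimoto coefficient: a.b / (|a|^2 + |b|^2 - a.b)."""
    if len(a) != len(b):
        raise ValueError("descriptor vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    denom = sum(x * x for x in a) + sum(y * y for y in b) - dot
    # Two all-zero descriptors carry no information; treat them as dissimilar.
    return dot / denom if denom else 0.0


def screen(
    query: Sequence[float],
    library: Dict[str, Sequence[float]],
    threshold: float = 0.7,  # hypothetical cutoff, chosen only for this example
) -> List[Tuple[str, float]]:
    """Return (name, score) for library entries at or above the threshold,
    most similar first."""
    scored = [(name, tanimoto(query, desc)) for name, desc in library.items()]
    hits = [(name, score) for name, score in scored if score >= threshold]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Invented descriptors: one experimentally known good molecule and three candidates.
known_active = [1.0, 0.0, 2.0, 1.0]
candidates = {
    "cand_A": [1.0, 0.0, 2.0, 1.0],  # identical to the known active
    "cand_B": [1.0, 1.0, 2.0, 0.0],  # similar
    "cand_C": [0.0, 3.0, 0.0, 0.0],  # unrelated
}

hits = screen(known_active, candidates)
for name, score in hits:
    print(f"{name}: {score:.3f}")
```

Each comparison is independent of every other, which is why this kind of screen spreads so naturally across many CPU or GPU cores when the library runs to billions of entries.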

Geoff also added that through Cadence connections to cloud providers (not so common among biotech companies) they have been able to expand availability to more cloud options. They have also delivered a proof of concept on Cadence datacenter GPUs. For biotech startups this is a big positive since they don’t have the capital to invest in their own large GPU clusters. For bigger enterprises, he hinted at interest in adding GPU capacity to their own in-house datacenters to get around GPU capacity limitations (and, I assume, cloud overhead concerns).

Looking forward

What Geoff described in this presentation centered mostly on the Design stage of the common biotech Design-Make-Test-Analyze loop. What can be done to help with the other stages? BioNeMo includes a ChemInformatics package which could be used to help develop a recipe for the Make stage. The actual making (candidate synthesis), followed by test and analyze would still be in a lab. Yet following this approach, I can understand higher confidence among biotechs/pharmas that a candidate drug is more likely to survive into trials and maybe beyond.

Very cool. There’s lots more interesting information, about the kinds of experiments researchers are running for osteoporosis therapeutics, for oncology drugs and for other targets previously thought to be “undruggable”. But I didn’t want to overload this blog. If you are interested in learning more, click HERE.
