SoC verification has always been an interesting topic for me. Having worked at companies like Zycad, which offered hardware accelerators for logic and fault simulation, I have spent a lot of time thinking about how to reduce the time needed to verify a complex SoC. The bar we always tried to clear was actually simple to articulate: verification is over when you run out of time. The goal was to make the verification process converge BEFORE you ran out of time. A recent announcement from Cadence got my attention with its headline, "Cadence Increases Verification Efficiency up to 5X with Xcelium ML."

I had a chance to chat with Paul Cunningham, corporate vice president and general manager of the System & Verification Group at Cadence, about what this announcement really means to verification engineers. You may recall Paul provided a great overview on machine learning at Cadence – inside, outside and everywhere else. So, I saw this as an opportunity to get the real scoop about the announcement.

The backstory is quite interesting. At a high level, the way to think of this machine learning (ML) enhancement to Xcelium is that it now delivers the same test coverage results in as little as one fifth the time. That time compression has some significant implications. Before going there, I wanted to explore how Cadence increases verification efficiency up to 5X with Xcelium ML.

This all starts with constrained random tests for simulation through a language like SystemVerilog. By ordering the tests correctly, you can get some improvement in simulation throughput. While this approach helps, verification compute still has a tendency to explode. How can you manage this? It turns out the answer is quite complex.
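To make the test-ordering idea concrete, here is a minimal sketch of one common heuristic: greedily run whichever test adds the most new coverage per second of runtime. This is an illustration only, not a description of how Xcelium or any Cadence tool orders tests. The function name `order_tests`, the bin names, and the runtimes are all hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical input format: test name -> (coverage bins it hits, runtime in seconds).
TestInfo = Tuple[Set[str], float]


def order_tests(tests: Dict[str, TestInfo]) -> List[str]:
    """Greedy ordering: repeatedly pick the test with the most NEW bins per second.

    Assumes every runtime is greater than zero.
    """
    remaining = dict(tests)
    covered: Set[str] = set()
    order: List[str] = []
    while remaining:
        def gain(name: str) -> float:
            bins, runtime = remaining[name]
            return len(bins - covered) / runtime

        # sorted() makes tie-breaking deterministic (alphabetical by test name).
        best = max(sorted(remaining), key=gain)
        order.append(best)
        covered |= remaining[best][0]
        del remaining[best]
    return order


example = {
    "a": ({"b1", "b2"}, 10.0),
    "b": ({"b1", "b2", "b3"}, 10.0),
    "c": ({"b4"}, 1.0),
}
ordered = order_tests(example)
```

In this made-up example, the short test `c` runs first because it contributes a new bin almost for free, and test `a` lands last because everything it covers has already been hit by `b`. A real flow would work from measured coverage databases rather than hand-written sets.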

The core focus of the new Xcelium ML is to examine the circuit, identify the relationship between input stimulus and design or functional coverage, and then develop randomized vectors that hit coverage points much more efficiently. This requires a lot of work on the core algorithms of Xcelium. Paul explained that it took a few tries to get it right, but once an approach was identified the resultant reduction in simulation time was very significant.
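The general idea of learning a stimulus-to-coverage relationship and then biasing random generation can be shown with a toy sketch. This is emphatically not the Xcelium ML algorithm, which the article does not disclose. It assumes a single hypothetical stimulus knob (think of a burst length) and a hypothetical coverage bin named `long_burst`, and it estimates hit rates with simple Laplace-smoothed counting rather than a real ML model.

```python
import random
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical record of one past simulation: (knob value used, coverage bins hit).
Observation = Tuple[int, Set[str]]


def learn_weights(
    observations: Iterable[Observation],
    target_bin: str,
    domain: Iterable[int],
) -> Dict[int, float]:
    """Estimate, per knob value, how likely a run is to hit target_bin."""
    trials: Dict[int, int] = defaultdict(int)
    hits: Dict[int, int] = defaultdict(int)
    for value, bins in observations:
        trials[value] += 1
        if target_bin in bins:
            hits[value] += 1
    # Laplace smoothing: untried values get a neutral 0.5 instead of 0,
    # so the generator keeps exploring them.
    return {v: (hits[v] + 1) / (trials[v] + 2) for v in domain}


def biased_stimulus(
    weights: Dict[int, float], count: int, rng: random.Random
) -> List[int]:
    """Draw knob values at random, favoring values that hit the bin before."""
    values = list(weights)
    return rng.choices(values, weights=[weights[v] for v in values], k=count)


history = [
    (1, set()),
    (1, set()),
    (15, {"long_burst"}),
    (15, {"long_burst"}),
]
weights = learn_weights(history, "long_burst", domain=[1, 8, 15])
samples = biased_stimulus(weights, 5, random.Random(0))
```

The stimulus stays random, but the draw is tilted toward knob values that have reached the uncovered bin in the past, so fewer simulation cycles go to vectors that only repeat existing coverage.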

Paul explained that the machine learning problem being addressed requires both an analysis of the input data set (the network) and the stimulus for that network. A “standard” ML problem starts with a known input (e.g., a picture of a cat) and the goal is to analyze and recognize the input. The Xcelium problem requires analysis of the input data set as well as generation of targeted vectors. Without analysis and optimization of the input data, the size of the ML problem becomes the same size as the design itself, and ML inference becomes so slow you lose any performance benefits of the approach. This dual analysis task is a much harder problem. Paul described the work as “true innovation”.

So, what do you do when you can achieve the same coverage in one fifth the time? The answer is actually quite straightforward: you spend the 80 percent of the time you recover finding new bugs in your design. This is great news for the verification engineer.
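The 80 percent figure follows directly from the headline number. As a quick check, let $T$ be the original time to reach a given coverage target and $s$ the speedup (both symbols are introduced here for illustration):

$$
T_{\text{ML}} = \frac{T}{s}, \qquad T - T_{\text{ML}} = \left(1 - \frac{1}{s}\right) T, \qquad s = 5 \;\Rightarrow\; T - T_{\text{ML}} = 0.8\,T
$$

Since the announcement says "up to 5X," 80 percent is the best case. A 2X speedup, for example, would free up 50 percent of the time.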

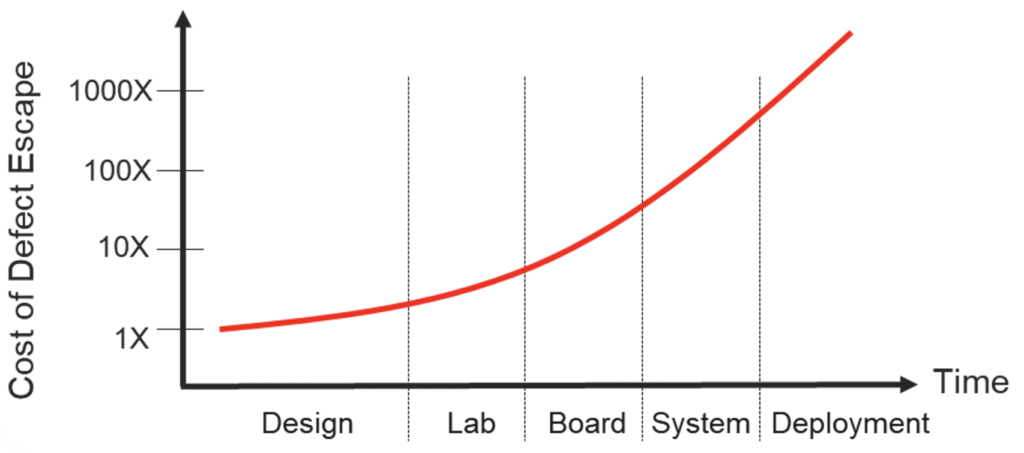

Finding problems earlier is always a win. Anyone who works in EDA knows this intuitively, but finding data to back it up is sometimes difficult. Cadence has found a very useful analysis of this effect from Intrinsix. Here is the graphic. It shines a light on what we all know – finding and fixing bugs earlier in the process is much more cost-effective.

The innovation Cadence has delivered is organic in nature. A bit of genealogy will help explain this:

- NCSim is introduced around 2000

- Incisive adds constrained random, SystemVerilog and UVM

- Xcelium adds multi-core capability from the Rocketick acquisition, high-performance, low-power SystemVerilog capability, incremental compile and save/restart support

- Xcelium ML adds machine learning optimization for efficient randomized vector generation

So, Xcelium ML is built on a series of organically developed innovations. The product will be generally available in Q4 2020. Adoption of Xcelium is quite strong. According to the Cadence earnings call:

“Our Xcelium simulator has been steadily proliferating, with multiple migrations from competitive simulators underway.”

If you want to experience a 5X “time warp” in your next verification cycle, you should definitely check out Xcelium ML. You can learn more about the Xcelium simulator here.

Also Read

Structural CDC Analysis Signoff? Think Again.

Cadence on Automotive Safety: Without Security, There is no Safety

DAC Panel: Cadence Weighs in on AI for EDA, What Applications, Where’s the Data?
