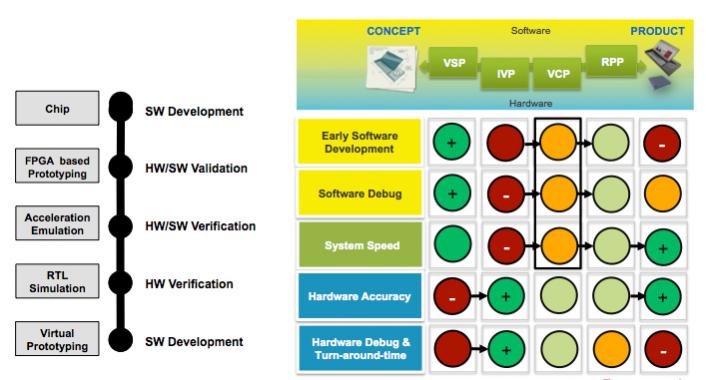

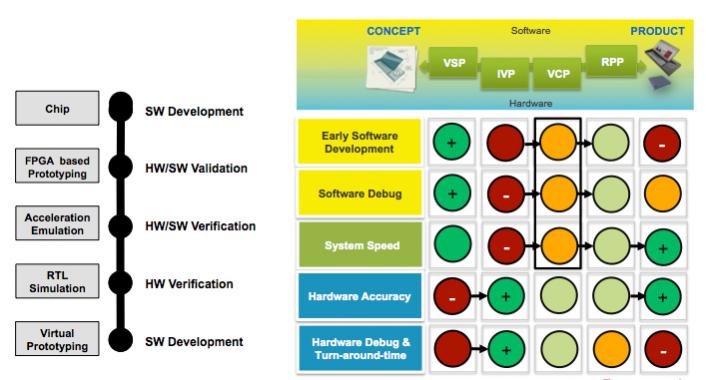

At CDNLive today, Frank Schirrmeister presented a nice overview of Cadence’s verification capabilities. The problem with verification is that you can’t have everything you want. What you really want is very fast runtimes, high fidelity to the hardware, and everything available very early in the design cycle so you can get software developed, integration done and so on. But clearly you can’t verify RTL early in the design cycle, before you’ve written it.

The actual chip back from the fab is completely accurate and fast. But by then it is much too late to start verification, and the only way to fix a hardware bug is to respin the chip. It is also not a great software debug environment, since everything goes through JTAG interfaces.

At the other end of the spectrum, a virtual prototype can be available early, is great for software debug, and runs at reasonable speed. But it can be hard to keep it faithful to the hardware as the hardware is developed and, of course, it doesn’t really help in the hardware verification flow at all.

RTL simulation is great for hardware verification, although it can be on the slow side for large designs. But it is way too slow to help validate or debug embedded software.

Emulation is like RTL simulation, only faster (and more expensive). Hardware fidelity is good, and it is fast enough to be used for some software integration testing, developing device drivers and so on. But obviously the RTL needs to be complete, which means it comes very late in the design cycle.

Building FPGA prototypes is a major investment, especially if the design won’t fit in a single FPGA and so needs to be partitioned. As a result, it can only be done when the RTL is complete (or close to it), meaning it comes very late. In most ways an FPGA prototype is as good as the actual chip, and the debug capabilities are much better for both hardware and software.

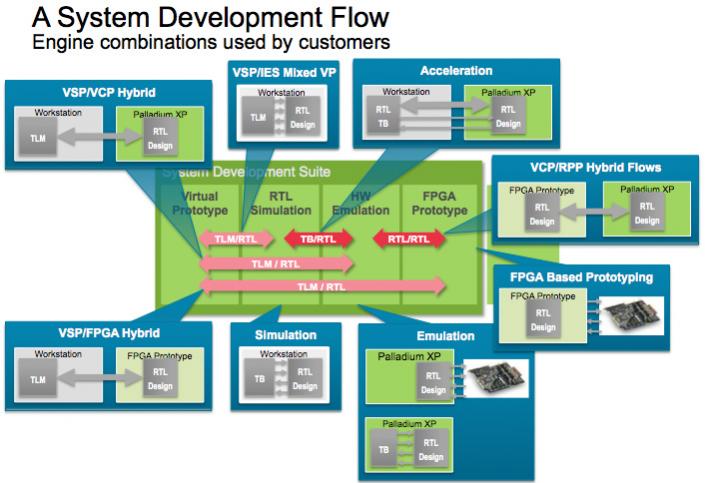

So, as with “better, cheaper, faster; pick any two”, none of these is ideal in all circumstances. Instead, customers are using all sorts of hybrid flows linking two or more of these engines: for example, running the stable part of a design on an FPGA prototype and the part that is not yet finalized on a Palladium emulator, or running transaction-level models (TLM) on a workstation against RTL running in an FPGA prototype.

To make this all work, it needs to be possible to move a design from one environment to another as automatically as possible: the same RTL, the same VIP, and even the ability to pull data from a running simulation and use it to populate another environment. Frank admits it is not all there yet, but it is getting closer.

Now that designs are so large that even RTL simulation isn’t always feasible, these hybrid environments are going to become more common as a way to get the right mix of speed, accuracy, availability and effort.
