Machine learning (ML) is finding its way into many of the tools in silicon design flows, to shorten run times and improve the quality of results. Logic simulation seemed an obvious target for ML, yet it resisted showing clear benefits for a while. I suspect this was because we all assumed the obvious application should be to use ML to refine constrained random tests for higher coverage, which turned out not to be such a great starting point. My understanding of why is that those typical constraints are far too low-level to exhibit meaningful trends in learning. The data is just too noisy.

Interestingly, we had an Innovation in Verification topic some time ago in which (simulation) command-line parameters were used in learning instead. Such parameters represent system-level constraints, ultimately controlling lower-level constraints. That approach was more effective but is not so easy to productize, since such parameters tend to be application-specific. While the idea sounds simple, many state-of-the-art ML solutions end up with ineffective results. The reason is that practical designs involve so many randomizations that finding key control points to steer test sequences, while leaving abundant randomness to stress the design, is a challenge.

Cadence has an innovation to address this challenge and recently posted a TechTalk on the progress that has been made.

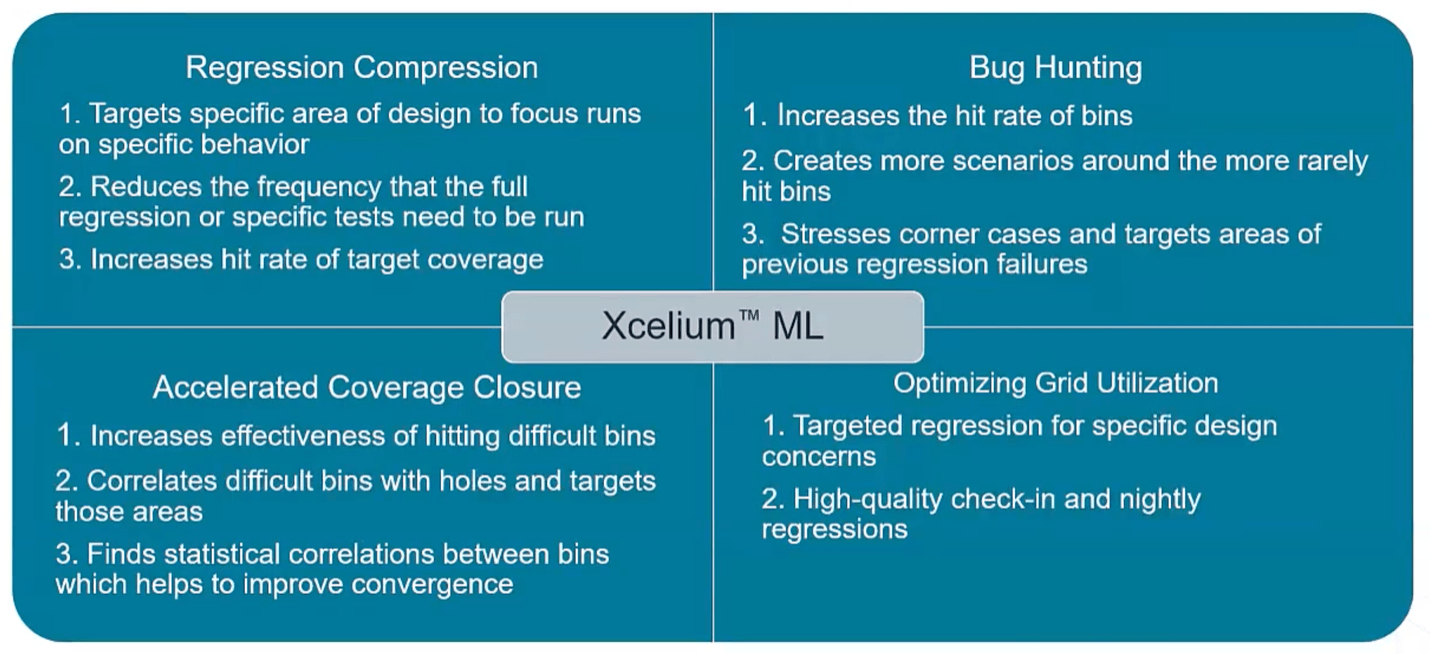

Regression compression and bug hunting

Increasing coverage is important, but just as important is getting to a target coverage faster. Cut that time in half and you have more time in your schedule to find difficult bugs and to further increase coverage. There is an additional benefit: increased focus on rarely hit bins can improve the verification of rare scenarios. If a bug is found in some rare scenario, that area becomes suspect, possibly containing more bugs. Focusing on such scenarios increases the likelihood that additional bugs in that area will be found. Naturally, it will also increase general testing around other bins, potentially improving coverage and bug exposure in those cases as well.

Overall, the tool improves the hit rate in areas that are difficult to hit and significantly improves testing of the environment around challenging areas, where holes may be correlated with targeted bins. A targeted attack on such cases may provide extra benefits in increasing coverage.
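To make the idea of focusing on rarely hit bins concrete, here is a minimal sketch. It is not how Xcelium ML works internally, which is not described here. It simply assumes you have, from a previous regression, the set of coverage bins each test hit, and it ranks tests by how many rare bins they reach. The names `rare_bins`, `rank_for_augmentation` and `max_hits` are hypothetical, chosen only for this illustration.

```python
from collections import Counter


def rare_bins(test_bins: dict[str, set[str]], max_hits: int = 1) -> set[str]:
    """Bins hit by at most `max_hits` tests in the recorded regression."""
    hits = Counter(b for bins in test_bins.values() for b in bins)
    return {b for b, n in hits.items() if n <= max_hits}


def rank_for_augmentation(test_bins: dict[str, set[str]], max_hits: int = 1) -> list[str]:
    """Tests that reach rare bins, ordered by how many rare bins they reach."""
    rare = rare_bins(test_bins, max_hits)
    scored = [(len(bins & rare), name) for name, bins in test_bins.items()]
    # Highest score first; ties broken by test name so the order is stable.
    ranked = sorted(scored, key=lambda s: (-s[0], s[1]))
    return [name for score, name in ranked if score > 0]


# Hypothetical coverage data: test name -> bins hit in the last run.
runs = {
    "t1": {"a", "b"},
    "t2": {"a", "c"},
    "t3": {"a", "b", "d", "e"},
}
print(rank_for_augmentation(runs))
```

In this toy data, bins `c`, `d` and `e` are each hit by only one test, so the tests that reach them are the natural candidates for extra seeds.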

When can you apply learning?

Xcelium ML distinguishes between augmentation runs and optimization runs. Augmentation runs are bug-hunting runs focused on specific areas of the design or on rare bins, with the goal of improving overall verification quality. Optimization runs are those where the regression suite is compressed in order to do the same essential work using a fraction of the resources. Early in a project, while the simulator is still actively learning and the coverage model is not yet mature, the recommendation is to stick to full regressions but use Xcelium ML to augment the regression runs to find bug signatures earlier.

By the middle of the project, the coverage model should be sufficient to start depending on compression plus augmentation for nightly regressions. These runs can be complemented periodically with a full regression, for example, running a full regression once a week and an ML-generated regression nightly. Later in the project you can depend even more on compressed runs with only occasional cross-checks against a full regression.
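The phased recommendation above can be sketched as a small scheduling helper. This is only an illustration: the phase labels, the choice of Sunday for the weekly full regression, and the "first Sunday of the month" cross-check late in the project are all assumptions made for the example, not Cadence guidance.

```python
from datetime import date


def pick_regression(day: date, phase: str) -> str:
    """Choose which regression to launch on a given night.

    `phase` is a hypothetical label: "early", "mid" or "late".
    """
    is_sunday = day.weekday() == 6
    if phase == "early":
        # Coverage model not yet mature: keep full regressions, add augmentation.
        return "full+augmentation"
    if phase == "mid":
        # Assumed cadence: full regression once a week, ML-generated otherwise.
        return "full" if is_sunday else "compressed+augmentation"
    if phase == "late":
        # Assumed cadence: occasional cross-check on the first Sunday of the month.
        return "full" if is_sunday and day.day <= 7 else "compressed+augmentation"
    raise ValueError(f"unknown phase: {phase!r}")


print(pick_regression(date(2024, 1, 7), "mid"))   # 7 January 2024 is a Sunday
print(pick_regression(date(2024, 1, 8), "mid"))
```

How often to cross-check against a full regression is a project decision; the point is only that the mix shifts toward compressed runs as the coverage model matures.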

The results across a representative set of designs are impressive: 3X to 5X compression or better, with negligible loss in coverage, from as little as 0.1% in several instances to a worst case of about 1%. Compression may be lower for test suites that have already been manually optimized, but even in these cases 2X compression is still typical. These results provide good hints on where Xcelium ML will help most. For example, effectiveness has little to do with the design type and more to do with the testbench methodology. In general, the more randomization the testbench supports, the better the results you will see.
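To illustrate the trade-off between compression and coverage loss, here is a toy sketch using a classic greedy set-cover heuristic. This is a swapped-in textbook technique, not Xcelium ML's algorithm, which has to predict the behavior of randomized tests rather than replay fixed coverage sets. The function name `compress_regression`, the `max_loss` parameter and the sample data are all hypothetical.

```python
def compress_regression(test_bins: dict[str, set[str]], max_loss: float = 0.01) -> list[str]:
    """Greedily pick tests until coverage reaches (1 - max_loss) of the full suite."""
    all_bins = set().union(*test_bins.values())
    target = (1.0 - max_loss) * len(all_bins)
    covered: set[str] = set()
    chosen: list[str] = []
    remaining = dict(test_bins)
    while len(covered) < target and remaining:
        # Pick the test adding the most new bins; ties go to the first name alphabetically.
        best = max(sorted(remaining), key=lambda t: len(remaining[t] - covered))
        gain = remaining.pop(best) - covered
        if not gain:
            break  # no remaining test adds coverage
        covered |= gain
        chosen.append(best)
    return chosen


# Hypothetical recorded coverage: test name -> bins hit.
suite = {
    "t1": {"a", "b", "c"},
    "t2": {"b", "c"},
    "t3": {"c", "d"},
    "t4": {"a"},
}
kept = compress_regression(suite)
print(kept, f"compression: {len(suite) / len(kept):.1f}X")
```

On this toy suite, two of the four tests retain all four bins, a 2X compression with no loss. Allowing a larger `max_loss` keeps fewer tests at the cost of dropped bins, which is the same trade-off the reported numbers describe.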

More detail

An excellent technical talk follows the introduction, explaining in more detail how the tool works and how to use it most effectively. One example they explain is a methodology to hit coverage holes.

You can learn more HERE.
