AI is everywhere, or so it seems, though it is often promoted with too little detail to understand the methods behind it. I now look for substance: not trade secrets, but how exactly they are using AI. Matt Graham (Product Engineering Group Director at Cadence) gave a good and substantive tutorial pitch at DVCon, with real examples of goal-centric optimization in verification. Some of these are learning-based; some are simply sensible automation. In the latter class he mentioned test weight optimization: ranking tests by value and perhaps ordering them by their contribution to coverage, pushing the low contributors to the end of the list or dropping them altogether. This is human intelligence applied to automation, just normal algorithmic progress.
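To make "ordering tests by contribution to coverage" concrete, here is a minimal sketch of a generic greedy ordering, not Cadence's implementation. It assumes each test's prior coverage is available as a set of coverage bin names; the function name and data shape are hypothetical. Each step picks the test that adds the most bins not already covered, so tests contributing nothing new sink to the end.

```python
from typing import Dict, List, Set, Tuple


def rank_tests_by_coverage(coverage: Dict[str, Set[str]]) -> List[Tuple[str, int]]:
    """Order tests greedily by the number of *new* coverage bins each adds.

    `coverage` (hypothetical shape) maps a test name to the set of coverage
    bins it hit in a prior run. Returns (test, new_bins) pairs; tests that add
    nothing sink to the end and are candidates for dropping from the regression.
    """
    remaining = dict(coverage)  # shallow copy so the caller's dict is untouched
    covered: Set[str] = set()
    order: List[Tuple[str, int]] = []
    while remaining:
        # Largest marginal gain first; ties broken by test name for a stable order.
        best = min(remaining, key=lambda t: (-len(remaining[t] - covered), t))
        gain = len(remaining[best] - covered)
        covered |= remaining.pop(best)
        order.append((best, gain))
    return order
```

For example, with `{"t_smoke": {"a", "b"}, "t_full": {"a", "b", "c", "d"}, "t_corner": {"e"}, "t_dup": {"a", "c"}}` the order is `t_full` (+4), `t_corner` (+1), then `t_dup` and `t_smoke` (+0 each), which are the candidates to push out of the list. Greedy ordering is the classic approximation for this set-cover-style problem; it is cheap and deterministic, though not guaranteed optimal.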

AI is a bigger change, yet our expectations must remain grounded to avoid disappointment and a repeat of the AI winters of the past. I think of AI as a second industrial revolution. We stopped using an ox to drag a plough through a field and started building steam-driven tractors. The industrial revolution didn’t replace farmers; it made them more productive. Today, AI points to a similar jump in verification productivity. The bulk of Matt’s talk was on these opportunities, some of which are already claimed for the Verisium product.

AI opportunities in simulation

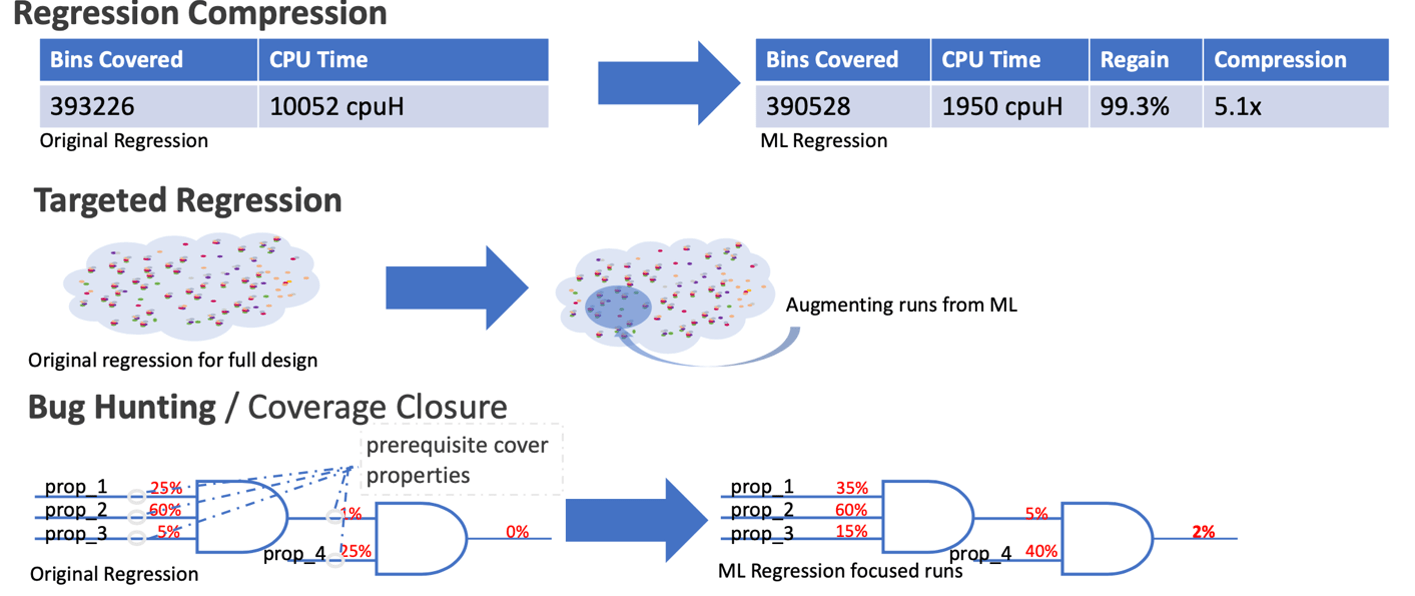

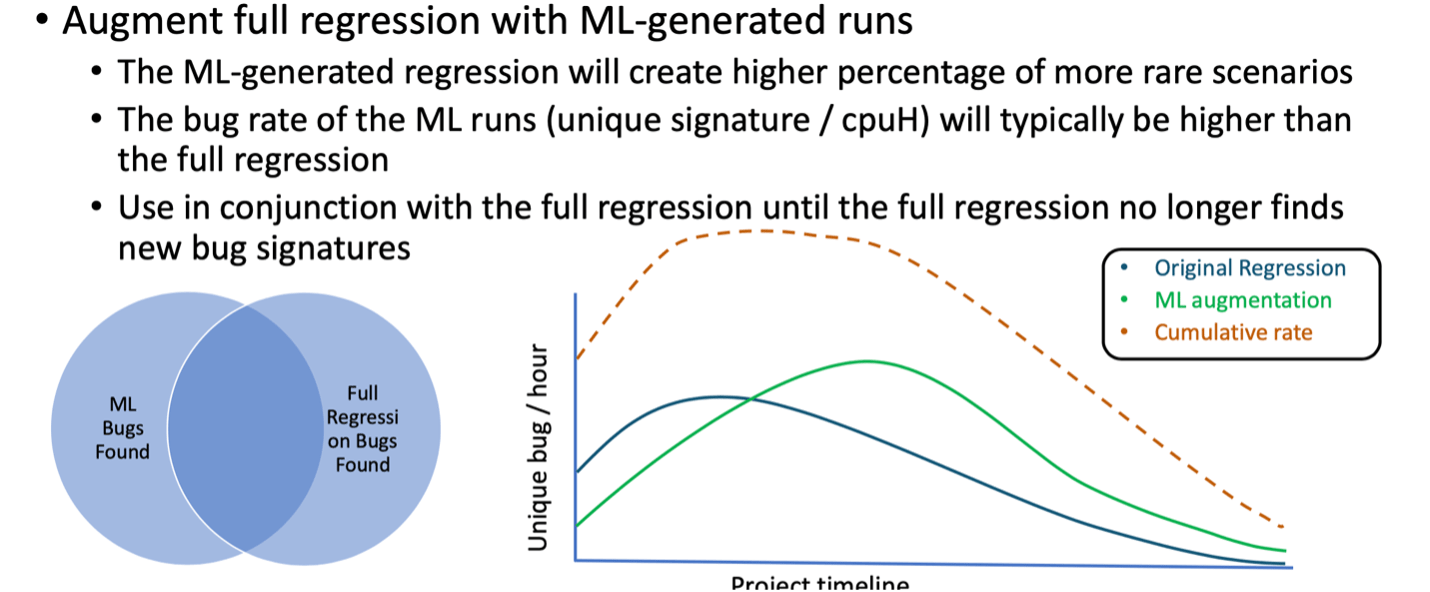

AI can be used to compress regressions by learning from coverage data in earlier runs. It can also be used to increase coverage in lightly covered areas and on lightly covered properties, both of which deserve suspicion as places where unseen bugs may lurk under rocks. Such methods don’t replace constrained random (CR) testing but rather enhance it, increasing the bug exposure rate over CR alone.
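As a rough illustration of steering effort toward lightly covered bins, the sketch below uses simple hit-count statistics as a stand-in for the learned models described in the talk. It assumes a hypothetical history mapping each test to the bins hit by each of its prior seeds. A bin counts as lightly covered if fewer than `threshold` runs hit it, and tests are weighted by how often they hit such bins. The `floor` parameter (also an assumption) keeps every test in the mix, in the spirit of enhancing CR rather than replacing it.

```python
from collections import Counter
from typing import Dict, List, Set


def rare_bin_weights(
    history: Dict[str, List[Set[str]]], threshold: int, floor: float = 0.05
) -> Dict[str, float]:
    """Turn prior-run coverage into test selection weights that favor rare bins.

    `history` (hypothetical shape) maps a test name to the bins hit by each of
    its prior runs (seeds). A bin is "lightly covered" if fewer than `threshold`
    runs hit it. Each test is scored by the average number of lightly covered
    bins per run; weights sum to 1, with `floor` spread uniformly so that
    ordinary constrained-random tests are never starved.
    """
    # In how many runs, across all tests and seeds, was each bin hit?
    counts = Counter(b for runs in history.values() for run in runs for b in run)
    rare = {b for b, n in counts.items() if n < threshold}

    scores = {
        t: sum(len(run & rare) for run in runs) / max(len(runs), 1)
        for t, runs in history.items()
    }
    n, total = len(scores), sum(scores.values())
    if total == 0:
        # Nothing rare was hit: fall back to uniform weights.
        return {t: 1.0 / n for t in scores}
    return {t: floor / n + (1 - floor) * s / total for t, s in scores.items()}
```

One limitation worth noting: bins that were never hit do not appear in the history at all, so a real flow would also need the full coverage model to find holes rather than just thin spots.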

One useful way to approach rare states is to learn from front-end states that naturally, if infrequently, reach those rare states or come close to them. New tests can be synthesized from this learning which, together with regular CR tests, can increase the overall bug discovery rate both early and late in the bug maturation cycle.
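A very simple way to picture "learning on states that lead to rare states" is an empirical conditional probability over prior run traces. The sketch below assumes each run is recorded as a sequence of abstract state labels; the labels (`IDLE`, `FILL`, `FULL`, `OVF`) and the function name are hypothetical, and this frequency estimate stands in for whatever model a real tool uses. For each state seen before the rare state, it estimates how often runs passing through that state went on to reach the rare state.

```python
from collections import defaultdict
from typing import Dict, List


def precursor_scores(traces: List[List[str]], rare_state: str) -> Dict[str, float]:
    """Empirical P(rare_state reached later | state visited earlier in the run).

    Each trace is a hypothetical sequence of abstract state labels from one run.
    High-scoring states are candidate "launch points" for new directed tests.
    """
    visits: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for trace in traces:
        reached = rare_state in trace
        # Only count states seen before the first visit to the rare state.
        cut = trace.index(rare_state) if reached else len(trace)
        for state in set(trace[:cut]):
            visits[state] += 1
            if reached:
                hits[state] += 1
    return {s: hits[s] / visits[s] for s in visits}
```

With traces `["IDLE", "FILL", "FULL", "OVF"]`, `["IDLE", "FILL", "DRAIN"]` and `["IDLE", "DRAIN"]`, `FULL` scores 1.0, `FILL` 0.5, `IDLE` about 0.33 and `DRAIN` 0.0, suggesting that new tests should aim to drive the design into `FULL` and then continue from there.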

AI opportunities in debug

I like to think of debug as the third wall in verification. We’ve made a lot of progress in test generation productivity through reuse (VIP) and test synthesis, though we’re clearly not done yet. And we continue to make progress on verification engines, from virtual platforms to formal and hardware-assisted platforms (also not done yet). But debug remains a stubbornly manual task, consuming a third or more of verification budgets. Debuggers are polished but don’t attack the core manual problems: figuring out where to focus, then drilling down to find root causes. We’re not going to make a big dent until we start knocking down this wall.

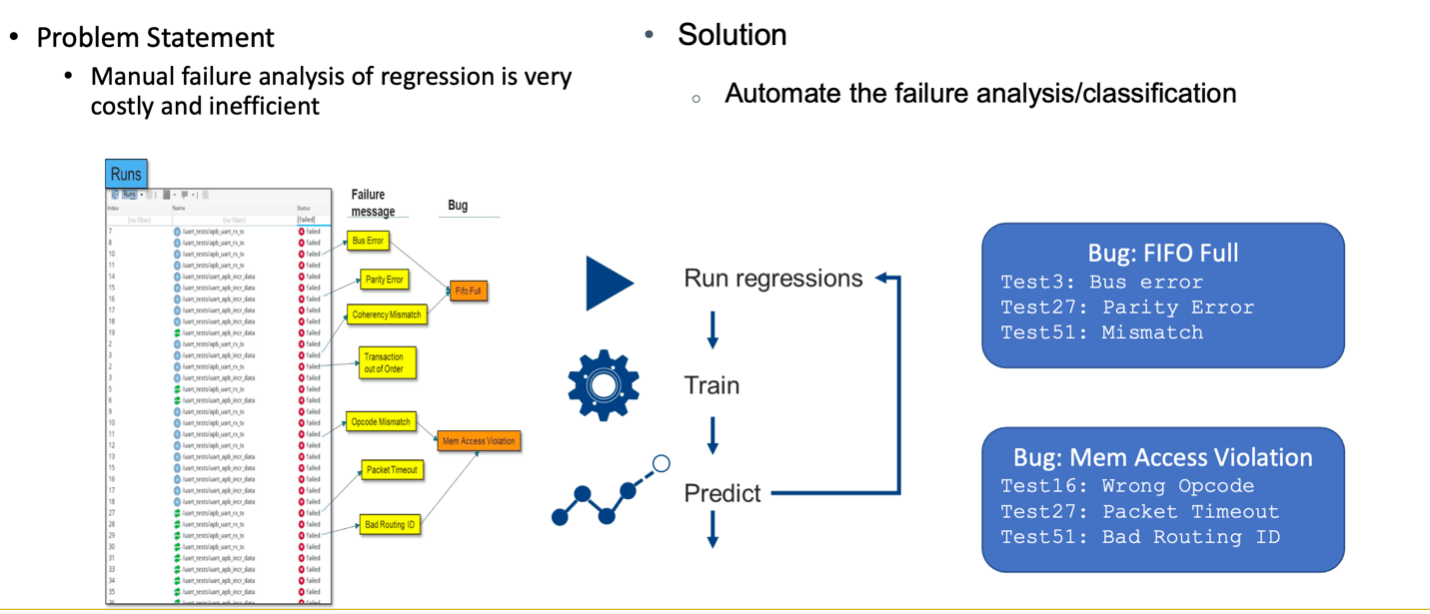

This starts with bug triage. Significant time can be consumed simply by separating a post-regression pile of failures into those that look critical and those that can wait for later analysis, then sub-bucketing them into groups with suspected common causes. Clustering is a natural fit for unsupervised learning, in this case looking at metadata from prior runs. What check-ins were made before the test failed? Who ran it and when? How long did the test run? What was the failure message? What part of the design was responsible for the failure message?
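The sketch below shows the general shape of metadata-based failure clustering. It swaps in a deliberately simple technique, leader clustering with Jaccard similarity over tokens, rather than any vendor's model. The metadata keys `message`, `unit` and `last_checkin` are hypothetical; other fields the article lists (who ran the test, runtime) could be added as extra tokens the same way. Numbers and hex values in messages are masked so that failures differing only in timestamps or data values look alike.

```python
import re
from typing import Dict, List, Set


def signature(failure: Dict[str, str]) -> Set[str]:
    """Reduce one failure's metadata to a set of tokens.

    Numbers and hex values are masked so that 'timeout at 1200ns' and
    'timeout at 3400ns' produce the same tokens. The keys 'message', 'unit'
    and 'last_checkin' are hypothetical field names.
    """
    msg = re.sub(r"0x[0-9a-f]+|\d+", "<num>", failure["message"].lower())
    tokens = set(re.findall(r"[a-z_<>]+", msg))
    tokens.add("unit:" + failure["unit"])
    tokens.add("checkin:" + failure["last_checkin"])
    return tokens


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap of two token sets: |a & b| / |a | b|."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def cluster_failures(failures: List[Dict[str, str]], threshold: float = 0.6) -> List[List[int]]:
    """Leader clustering: a failure joins the first cluster whose leader is at
    least `threshold`-similar to it; otherwise it starts a new cluster.
    Returns clusters as lists of indices into `failures`."""
    leaders: List[Set[str]] = []
    clusters: List[List[int]] = []
    for i, failure in enumerate(failures):
        sig = signature(failure)
        for leader, members in zip(leaders, clusters):
            if jaccard(sig, leader) >= threshold:
                members.append(i)
                break
        else:
            leaders.append(sig)
            clusters.append([i])
    return clusters
```

Two AXI timeouts on the same unit and check-in that differ only in time and port number land in one cluster, while a scoreboard mismatch in a different unit starts its own. The same similarity test against existing leaders is one way to assign failures from a later run to established buckets.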

Matt makes the point that as engineers we can look at a small sample of these factors but are quickly overwhelmed when we have to look at hundreds or thousands of pieces of information. AI in this context is just automation to handle large amounts of relatively unstructured data to drive intelligent clustering. In a later run, when intelligent triage sees a problem that matches an existing cluster with high probability, bucket assignment becomes obvious. An engineer then only needs to pursue the most obvious or easiest failing test in that bucket to a root cause, then re-run the regression in the expectation that all or most of that class of problems will disappear.
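The "debug one representative, then re-run" loop can be sketched in a few lines. This assumes failures have already been bucketed and that each record carries hypothetical `test` and `runtime_s` fields; using shortest runtime as the proxy for "easiest to debug" is an illustrative assumption, not the article's definition. The second function checks how much of each bucket still fails after a fix.

```python
from typing import Dict, List, Set


def plan_debug(buckets: Dict[str, List[dict]]) -> Dict[str, str]:
    """Pick one failure per bucket to debug first.

    Assumption: the shortest-running failure is the quickest to reproduce and
    inspect; ties are broken by test name. Record fields are hypothetical.
    """
    return {
        name: min(fails, key=lambda f: (f["runtime_s"], f["test"]))["test"]
        for name, fails in buckets.items()
        if fails
    }


def still_failing(buckets: Dict[str, List[dict]], rerun_failures: Set[str]) -> Dict[str, float]:
    """After a fix, the fraction of each bucket's tests that still fail on re-run.

    A value near 0.0 suggests the bucket really shared one root cause.
    """
    return {
        name: sum(f["test"] in rerun_failures for f in fails) / len(fails)
        for name, fails in buckets.items()
        if fails
    }
```

A bucket that drops to 0.0 after fixing its representative confirms the clustering; a bucket that stays high suggests it mixed more than one root cause and should be split.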

For the problems you choose to debug, deep waveform analysis can further narrow down a likely root cause by comparing legacy and current waveforms, legacy RTL versus current RTL, or a legacy testbench versus the current testbench. There is even research on AI-driven methods to localize a fault to a file or possibly even to a module (see this for example).
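The simplest form of legacy-versus-current waveform comparison is finding where the two first disagree. The sketch below assumes each waveform is a hypothetical dict mapping a signal name to a time-sorted list of `(time, value)` change events, roughly what one might extract from a VCD dump. Because values hold steady between changes, a divergence can only begin at a change time in either trace, so only those times are checked. Signals present on just one side are ignored here.

```python
from typing import Dict, List, Optional, Tuple

Trace = List[Tuple[int, str]]  # (time, value) change events, sorted by time


def value_at(trace: Trace, t: int) -> Optional[str]:
    """Value held at time t (last change at or before t); None if not yet driven."""
    value = None
    for time, val in trace:
        if time > t:
            break
        value = val
    return value


def first_divergence(
    legacy: Dict[str, Trace], current: Dict[str, Trace]
) -> Optional[Tuple[int, str]]:
    """Earliest (time, signal) where the legacy and current waveforms disagree."""
    best: Optional[Tuple[int, str]] = None
    for sig in sorted(legacy.keys() & current.keys()):
        # A disagreement can only start at a change time in either trace.
        times = sorted({t for t, _ in legacy[sig]} | {t for t, _ in current[sig]})
        for t in times:
            if value_at(legacy[sig], t) != value_at(current[sig], t):
                if best is None or t < best[0]:
                    best = (t, sig)
                break
    return best
```

If `ack` rises at time 20 in the legacy run but at 25 in the current one, the function returns `(20, "ack")`, giving a starting point to trace back through the fan-in of `ack`. Real tools layer far more on top of this, but the earliest divergence is a natural first anchor.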

AI Will Chip Away at Verification Complexity

AI-based verification is a new idea for all of us; no one is expecting a step-function jump into full-blown adoption. That said, there are already promising signs. Orchestrating runs across proof methods appeared early in formal methodologies. Regression optimization for simulation is on an encouraging ramp to wider adoption. AI-based debug is the new kid in this group, showing encouraging results in early adoption, which will no doubt drive further improvements and push debug further up the adoption curve. All of this is inspiring progress toward a much more productive verification future.

You can learn more HERE.
