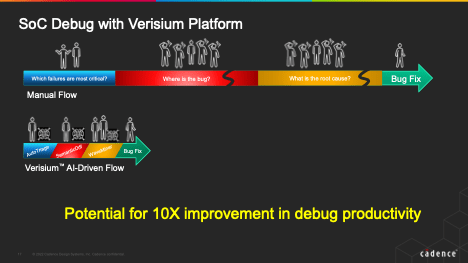

Verification technologies have progressed in almost all domains over the years. We’re now substantially more productive in creating tests for block, SoC and hybrid software/hardware verification. These tests provide better coverage through randomization and formal modeling. And verification engines are faster – substantially faster in hardware accelerators – and higher capacity. We’ve even added non-functional testing, for power, safety and security. But one area of the verification task – debug – has stubbornly resisted meaningful improvements beyond improved integration and ease of use.

This is not an incidental problem; debug now accounts for almost half of verification engineer hours on a typical design. Effective debug depends on expertise and creativity, and these tasks are not amenable to conventional algorithmic solutions. Machine learning (ML) seems an obvious answer; capture all that expertise and creativity in training. But you can’t just bolt ML onto a problem and declare victory. ML must be applied intelligently (!) to the debug cycle. There has been some application-specific work in this direction, but no general-purpose solutions of which I am aware. Cadence has made the first attack I have seen on that bigger goal, with their Verisium™ platform.

The big picture

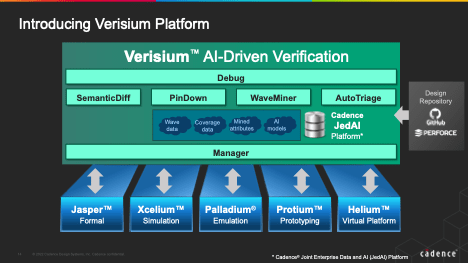

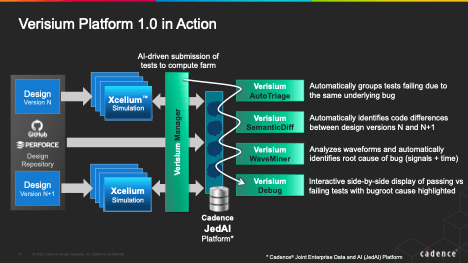

Verisium is Cadence’s name for their new AI-driven verification platform. This subsumes the debug and vManager engines for those tracking product names, but what is most important is that this platform now becomes a multi-run, multi-engine center applying AI and big data methods to learning and debug. Start with the multi-run part. To learn you need historical data; yesterday the simulation was fine, today we have bugs – what changed? There could be clues in intelligent comparison of the two runs. Or in checked-in changes to the RTL, or in changes in the tests. Or in deltas in runs on other engines – formal for example. Maybe even in hints further afield, in synthesis perhaps.

Tapping into that information must start with a data lake repository for run data. Cadence has built a platform for this also, which they call JedAI, for Cadence Joint Enterprise Data and AI Platform. Simulation trace files, log files, even compiled designs go into JedAI. Design and testbenches can stay where they are normally stored (Perforce or GitHub for example). From these Verisium can easily access design revs and check-in data.

Drilling down

Now for the intelligent part of applying ML to all this data in support of much faster debug. Verisium breaks the objective down into four sub-tasks. Bug triage is a time-consuming task for any verification team: grouping bugs with a likely common cause to minimize redundant debug effort. This task is a natural candidate for ML, based on experience from previous runs pointing to similar groupings. AutoTriage provides this analysis.
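To make the triage idea concrete, here is a minimal sketch of grouping failures by likely common cause. It is not how AutoTriage works internally (Cadence has not described that here); it swaps in a simple non-ML stand-in that masks run-specific values in failure messages and buckets tests by the resulting signature. The function names, test names and log messages are all hypothetical.

```python
import re
from collections import defaultdict


def signature(message: str) -> str:
    """Reduce a failure message to a coarse signature.

    Run-specific values (hex addresses, timestamps, counts) are masked so
    that two failures with the same underlying symptom compare equal.
    """
    sig = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)  # mask hex literals first
    sig = re.sub(r"\d+", "<NUM>", sig)                  # then remaining numbers
    return " ".join(sig.lower().split())               # normalize case and spacing


def group_failures(failures):
    """Bucket (test_name, message) pairs by message signature."""
    buckets = defaultdict(list)
    for test_name, message in failures:
        buckets[signature(message)].append(test_name)
    return dict(buckets)


# Hypothetical regression results, invented for illustration.
failures = [
    ("test_dma_burst", "ERROR @ 1200ns: AXI response mismatch at addr 0x4000"),
    ("test_dma_wrap", "ERROR @ 3450ns: AXI response mismatch at addr 0x8F10"),
    ("test_uart_rx", "ERROR @ 90ns: parity check failed on byte 3"),
]

groups = group_failures(failures)
for sig, tests in groups.items():
    print(f"{len(tests)} test(s): {tests} <- {sig}")
```

The two DMA failures land in one bucket, so only one of them needs to be debugged first. A learned approach would presumably go further, grouping failures whose messages differ but whose history suggests a shared cause.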

SemanticDiff identifies meaningful differences between RTL code check-ins, providing another input to ML. WaveMiner performs multi-run bug root-cause analysis based on waveforms. This looks at passing and failing tests across a complete test suite to narrow down which signals and clock cycles are suspect in failures. Verisium Debug then provides a side-by-side comparison between passing and failing tests.
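As a rough illustration of the multi-run waveform idea, the sketch below flags (signal, cycle) points where every passing run agrees on a value and every failing run deviates from it. This is a deliberately naive heuristic of my own, not WaveMiner's algorithm, and it assumes waveforms are already sampled per clock cycle and aligned across runs. The signal names and values are hypothetical.

```python
def suspect_points(passing, failing):
    """Return (signal, cycle) pairs that separate passing from failing runs.

    Each run is a dict mapping signal name -> list of per-cycle values.
    A point is suspect when all passing runs share one value there and
    every failing run shows a different value.
    """
    if not passing or not failing:
        return []  # nothing to compare against
    suspects = []
    for sig, reference in passing[0].items():
        for cycle in range(len(reference)):
            pass_values = {run[sig][cycle] for run in passing}
            if len(pass_values) != 1:
                continue  # passing runs disagree here, so the point is uninformative
            expected = next(iter(pass_values))
            if all(run[sig][cycle] != expected for run in failing):
                suspects.append((sig, cycle))
    return suspects


# Hypothetical sampled waveforms, invented for illustration.
passing = [
    {"valid": [0, 1, 1, 0], "ready": [1, 1, 1, 1]},
    {"valid": [0, 1, 1, 0], "ready": [1, 1, 0, 1]},
]
failing = [
    {"valid": [0, 1, 0, 0], "ready": [1, 1, 1, 1]},
    {"valid": [0, 1, 0, 0], "ready": [1, 1, 0, 1]},
]

print(suspect_points(passing, failing))
```

Note how `ready` at cycle 2 is ignored: it varies among passing runs too, so it does not distinguish failures. Narrowing a full suite down to a handful of such points is what lets debug start closer to the root cause.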

Cadence is already engaging with customers on another component called PinDown, an extension which aims to predict bugs on check-in. This looks both at historical learning and behavioral factors like check-in times to assess likely risk in new code changes.

Putting it all together

First a caveat. Any technique based on ML will return answers based on likelihood, not certainties. The verification team will still need to debug, but they can start closer to likely root causes and can get to resolution much faster, which is a huge advance over the way we have to do debug today. As far as training is concerned, I am told that AutoTriage requires two to three regressions’ worth of data to start to become productive. PinDown bug prediction needs a significant history in the revision control system, but if that history exists, it can train in a few hours. Looks like training is not a big overhead.

There’s a lot more that I could talk about, but I’ll wrap with a few key points. This is the first release of Verisium, and Cadence will be announcing customer endorsements shortly. Further, JedAI is planned to extend to other domains in Cadence. They also plan APIs for customers and other tool vendors to access the same data, acknowledging that mixed vendor solutions will be a reality for a long time 😊

I’m not a fan of overblown product reviews, but here I feel more than average enthusiasm is warranted. If it delivers on half of what it promises, Verisium will be a ground breaker. You should check it out.
