“Who guards the guardians?” This question from Roman times struck me as relevant to this topic. We use constrained random (CR) to get better coverage in simulation. But what ensures that our constrained random testbenches are not themselves wanting, perhaps over-constrained or deficient in other ways? If we are optimizing with a faulty methodology, our coverage may seem strong while in fact being incomplete. Synopsys VCS supports an ML-based capability called Intelligent Coverage Optimization (ICO) to answer precisely this question.

What’s wrong with my CR testbench?

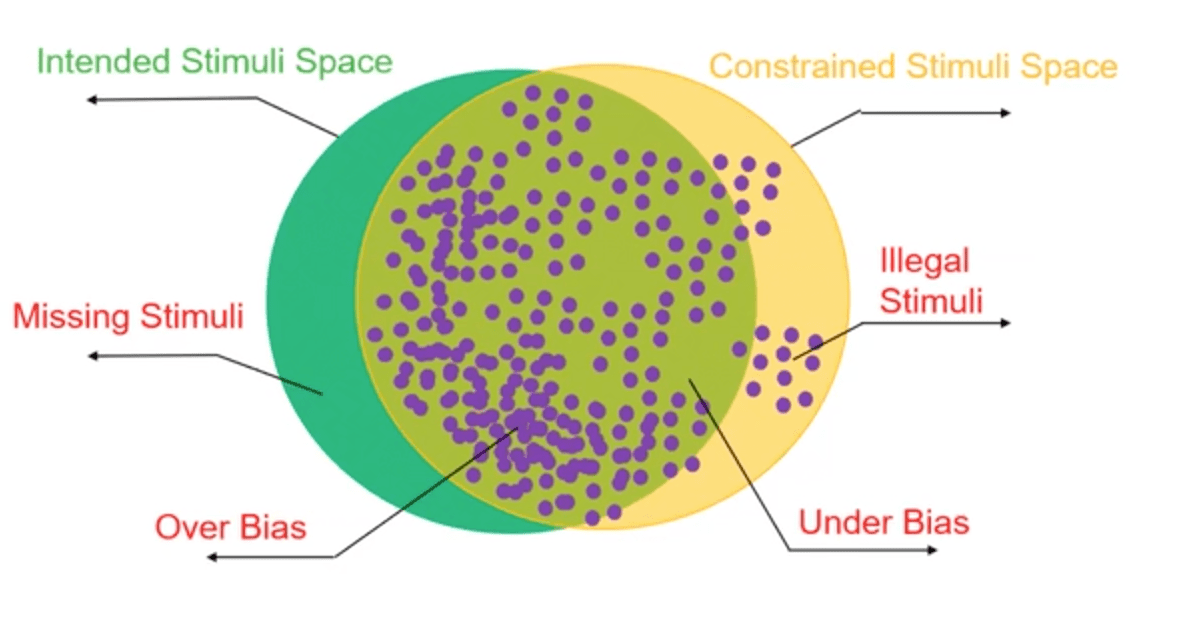

Constrained random methods require constraints that we define. Constraints are a practical but coarse and very low-level way to attempt to map the boundaries of intended functionality. Brilliant though we may be, we are imperfect, which can lead to coverage problems. In the figure above, the green circle represents the testing space we intend to generate. The yellow circle represents the space CR can actually generate given the constraints we have defined. The misalignment between the two indicates multiple testbench (TB) issues. Some parts of the test space that we should cover, we can’t reach (missing stimuli). In other regions we will generate tests that do not correspond to intended behavior (illegal stimuli). Even within the overlap, some parts of the space may be covered too heavily (over bias), and some parts may be under-generated or not generated at all (under bias).
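The four categories above can be made concrete with a toy model. The following is a minimal Python sketch, not anything ICO itself does: the 0 to 15 value range, the two sets, the skewed weights and the 2x / 0.5x bias thresholds are all hypothetical choices made purely for illustration.

```python
import random
from collections import Counter

# Hypothetical toy stimulus space: integer values 0..15.
intended = set(range(4, 12))    # "green circle": values we mean to test
generable = set(range(8, 16))   # "yellow circle": values the constraints allow

missing = intended - generable  # intended but unreachable (missing stimuli)
illegal = generable - intended  # reachable but unintended (illegal stimuli)
overlap = intended & generable  # the region both circles share

# A deliberately skewed generator (assumed weights) that favours value 8.
rng = random.Random(0)
values = sorted(generable)
weights = [20 if v == 8 else 1 for v in values]
hits = Counter(rng.choices(values, weights=weights, k=1000))

# Compare each overlap value against an even share of the 1000 draws.
# The 2x and 0.5x thresholds are arbitrary, chosen only for this sketch.
fair_share = 1000 / len(values)
over_bias = {v for v in overlap if hits[v] > 2 * fair_share}
under_bias = {v for v in overlap if hits[v] < 0.5 * fair_share}

print("missing:   ", sorted(missing))
print("illegal:   ", sorted(illegal))
print("over bias: ", sorted(over_bias))
print("under bias:", sorted(under_bias))
```

In a real testbench the intended space is rarely available as an explicit set, which is why diagnostics on the generated distribution are needed to infer these mismatches.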

Our attempts to improve design coverage therefore depend on exposing these issues early and rectifying them wherever possible. That is the purpose of ICO: to help you build better testbenches for coverage optimization.

How ICO works

This capability is built into the constraints solver in VCS and uses machine learning (specifically reinforcement learning) to improve stimulus quality for better coverage. In the course of this optimization it will also expose testbench bugs. There’s a lot of detail in the link below on controlling this analysis and reviewing results. My main takeaways are that the analysis does not require recompilation and can be reviewed post-run in text or HTML tables, or in Verdi.

Malay Ganai (Synopsys Scientist), who presented the technology, shared one mobile-market user's experience with ICO, comparing non-ICO and ICO analysis directly. The user found 30% more testbench bugs with the ICO analysis and, importantly, found an RTL deadlock which could not be found with non-ICO analysis. They were also able to reduce regression time from 10 days to 6 days for the same functional coverage. Other users report finding critical bugs earlier (thanks to better stimulus coverage), finding more bugs faster and reducing regression times significantly.

AMD experience

AMD presented a more detailed summary of their findings using ICO, again comparing with non-ICO runs. They confirmed that ICO consistently covers more with the same number of seeds. In one striking example, ICO-based analysis reached the same coverage as non-ICO in 15 regressions versus 23, roughly a third fewer. That is a convincing differentiator for ICO.

AMD also gave a talk on improving testbench quality. This comes down to diagnostics for skewed distributions, together with root cause analysis. They used ICO features to resolve under-constraints and over-constraints and to fix constraint inconsistencies. An example might be declaring a constraint variable as int when it should have a more limited bit-width, allowing far too wide a range in randomization and thus affecting coverage and runtime.
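To see why an overly wide declaration hurts, consider a rough Python sketch of the int example. It assumes a hypothetical field whose legal values are 0 to 15 and models the randomizer as a plain uniform draw over the declared range; a real constraint solver behaves differently, so treat the numbers as an illustration of scale only.

```python
import random

def legal_fraction(lo, hi, legal_lo=0, legal_hi=15):
    """Fraction of the declared range [lo, hi] that lies inside the legal range."""
    declared = hi - lo + 1
    legal = max(0, min(hi, legal_hi) - max(lo, legal_lo) + 1)
    return legal / declared

# A 32-bit signed int (the width of SystemVerilog's int) versus a 4-bit field.
wide = legal_fraction(-2**31, 2**31 - 1)
narrow = legal_fraction(0, 15)

# Empirical check with a seeded uniform generator standing in for the solver.
rng = random.Random(1)
draws = 10_000
wide_hits = sum(0 <= rng.randint(-2**31, 2**31 - 1) <= 15 for _ in range(draws))
narrow_hits = sum(0 <= rng.randint(0, 15) <= 15 for _ in range(draws))

print(f"legal fraction, 32-bit int: {wide:.2e}")
print(f"legal fraction, 4-bit field: {narrow:.2f}")
print(f"legal draws out of {draws}: wide={wide_hits}, narrow={narrow_hits}")
```

With the wide declaration almost every unconstrained draw falls outside the values of interest, so seeds and runtime are spent on stimulus that adds nothing to coverage.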

ICO guards the testbench guardians. The testbench measures and helps optimize coverage for the design while ICO measures and helps optimize coverage for the testbench.

You can watch the SNUG presentations for both talks HERE. Register with a SolvNet Plus ID and search for ‘Early Use-cases of VCS ICO (Intelligent Coverage Optimization)’ by Malay Ganai and ‘Accelerate Coverage Closure and Expose Testbench Bugs using VCS ML-Driven Intelligent Coverage Optimization Technology’ by AMD.
