One piece of received wisdom on AI has been that all the innovation starts in the big machine learning/training engines in the cloud, and that some of that innovation might eventually migrate in a reduced or limited form to the edge. In part this reflected the newness of the field. Perhaps it also reflected the need for prepackaged one-size-fits-many solutions for IoT widgets, where designers wanted the smarts in their products but weren’t quite ready to become ML design experts. But now those designers are catching up. They read the same press releases and research we all do, as do their competitors. They want to take advantage of the same advances while sticking to power and cost constraints.

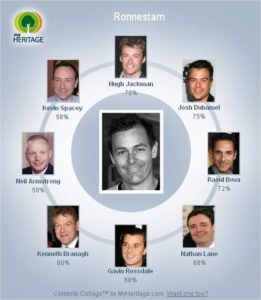

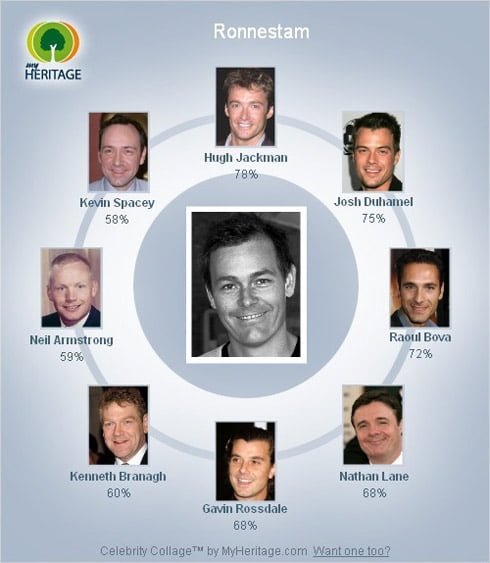

Facial Recognition

AI differentiation at the edge

It’s all about differentiation within an acceptable cost/power envelope. That’s tough to get from prepackaged solutions. Competitors have access to the same solutions, after all. What you really want is a set of algorithm options modeled in the processor as dedicated accelerators, ready to be used, with the ability to layer on your own software-based value-add. You might think there can’t be much you can do here, outside of some admin and tuning. Times have changed. CEVA recently introduced their NeuPro-M embedded AI processor, which allows optimization using some of the latest ML advances, deep into algorithm design.

OK, so more control of the algorithm, but to what end? You want to optimize performance per watt, but the standard metric, TOPS/W, is too coarse. Imaging applications should be measured against frames per second (fps) per watt. For security applications, automotive safety, or drone collision avoidance, recognition times per frame are much more relevant than raw operations per second. So a platform like NeuPro-M, which can in principle deliver up to thousands of fps/W, will handle realistic rates of 30-60 fps at very low power. That’s a real advance on traditional prepackaged AI solutions.
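To make the fps/W arithmetic concrete, here is a minimal sketch. The efficiency value of 1000 fps/W is an assumption chosen only to illustrate "thousands of fps/W"; it is not a measured NeuPro-M figure, and the function name is hypothetical.

```python
def power_watts(target_fps: float, fps_per_watt: float) -> float:
    """Power needed to sustain target_fps at a given efficiency in fps/W."""
    if fps_per_watt <= 0:
        raise ValueError("fps_per_watt must be positive")
    return target_fps / fps_per_watt


# Assumed, illustrative efficiency; not a vendor-published number.
ASSUMED_FPS_PER_WATT = 1000.0

for fps in (30, 60):
    milliwatts = power_watts(fps, ASSUMED_FPS_PER_WATT) * 1000
    print(f"{fps} fps at {ASSUMED_FPS_PER_WATT:.0f} fps/W needs about {milliwatts:.0f} mW")
```

Under that assumption, a realistic camera frame rate costs tens of milliwatts, which is the point of measuring per frame rather than per operation.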

Making it possible

Ultimate algorithms are built by dialing in the features you’ve read about, starting with a wide range of quantization options. The same applies to data type diversity in activations and weights across a range of bit widths. The neural multiplier unit (NMU) optimally supports multiple bit-width options for activations and weights, such as 8×2 or 16×4, and will also support variants like 8×10.
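As a rough illustration of what mixed bit-width arithmetic means, the sketch below quantizes activations and weights to different widths and then does an integer multiply-accumulate. It assumes "8×2" means 8-bit activations with 2-bit weights and uses simple symmetric quantization; both are assumptions for illustration, and none of these function names come from CEVA.

```python
def quantize_symmetric(values, bits):
    """Map floats to signed integers of the given width; return (ints, scale)."""
    if bits < 2:
        raise ValueError("need at least 2 bits for a signed value")
    qmax = 2 ** (bits - 1) - 1          # e.g. 127 for 8 bits, 1 for 2 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak > 0 else 1.0
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale


def quantized_dot(acts, weights, act_bits=8, weight_bits=2):
    """Dot product computed on integers, rescaled back to a float at the end."""
    qa, scale_a = quantize_symmetric(acts, act_bits)
    qw, scale_w = quantize_symmetric(weights, weight_bits)
    acc = sum(a * w for a, w in zip(qa, qw))   # integer multiply-accumulate
    return acc * scale_a * scale_w


acts = [1.0, 0.5, -0.25]
weights = [1.0, -1.0, 1.0]
exact = sum(a * w for a, w in zip(acts, weights))
print("exact:", exact, "8x2 approximation:", quantized_dot(acts, weights))
```

Narrower weights shrink both storage and multiplier cost, at the price of approximation error that depends on how well the weights tolerate coarse levels.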

The processor supports Winograd transforms for efficient convolutions, providing up to 2X performance gain and reduced power with limited precision degradation. Add the sparsity engine to the model for up to 4X acceleration, depending on the quantity of zero values (in either data or weights). Here, the Neural Multiplier Unit also supports a range of data types: fixed point from 2×2 to 16×16, and floating point (and Bfloat) from 16×16 to 32×32.
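For readers who have not met these two techniques, here is a minimal software sketch of each. It shows the textbook 1D Winograd F(2,3) form, which computes two outputs of a 3-tap filter with 4 multiplications instead of 6, and a zero-skipping dot product that counts the multiplies it actually performs. This is an illustration of the general ideas only, not a description of how the NeuPro-M hardware implements them.

```python
def direct_conv_2x3(d, g):
    """Two outputs of a 3-tap filter over 4 inputs: 6 multiplications."""
    return [d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
            d[1] * g[0] + d[2] * g[1] + d[3] * g[2]]


def winograd_f23(d, g):
    """Same two outputs using 4 multiplications (Winograd F(2,3))."""
    # Filter-side terms can be precomputed once per filter.
    g_sum = (g[0] + g[1] + g[2]) / 2
    g_alt = (g[0] - g[1] + g[2]) / 2
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * g_sum
    m3 = (d[2] - d[1]) * g_alt
    m4 = (d[1] - d[3]) * g[2]
    return [m1 + m2 + m3, m2 - m3 - m4]


def sparse_dot(acts, weights):
    """Dot product that skips any pair containing a zero; returns (sum, multiplies)."""
    total, multiplies = 0, 0
    for a, w in zip(acts, weights):
        if a == 0 or w == 0:
            continue                    # nothing to compute for this pair
        total += a * w
        multiplies += 1
    return total, multiplies


print(direct_conv_2x3([1, 2, 3, 4], [1, 0, -1]), winograd_f23([1, 2, 3, 4], [1, 0, -1]))
print(sparse_dot([0, 3, 0, 5], [2, 0, 0, 4]))
```

In 1D the saving is 6 to 4 multiplies; the 2D version for 3×3 filters on 2×2 output tiles needs 16 multiplies instead of 36. The divisions by 2 in the filter terms are one reason Winograd can cost some precision at low bit widths.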

Streaming logic provides options for fixed-point scaling, activation and pooling. The vector processor allows you to add your own custom layers to the model. “So what, everyone supports that”, you might think, but see below on throughput. There is also a set of next-generation AI features including vision transformers, 3D convolution, RNN support, and matrix decomposition.

That’s a lot of algorithm options, all supported by network optimization for your embedded solution through the CDNN framework, to fully exploit the power of your ML algorithms. CDNN is a combination of a network inferencing graph compiler and a dedicated PyTorch add-on tool. This tool prunes the model, optionally supports model compression through matrix decomposition, and adds quantization-aware re-training.
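To give a feel for two of the model-shrinking steps mentioned above, here is a generic sketch of magnitude pruning and of the parameter savings from a low-rank matrix decomposition. This is not the CDNN API, which is not shown in this article; the function names and the 512×512 layer size are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (0 <= sparsity < 1)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    drop_count = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(by_magnitude[:drop_count])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]


def low_rank_params(rows, cols, rank):
    """Parameter counts for a full rows x cols matrix versus factors
    of shape rows x rank and rank x cols."""
    return rows * cols, rank * (rows + cols)


print(magnitude_prune([0.9, -0.1, 0.05, -0.7], sparsity=0.5))

full, factored = low_rank_params(512, 512, rank=64)  # hypothetical layer size
print(f"full: {full} parameters, rank-64 factors: {factored} parameters")
```

Pruning creates the zero values that a sparsity engine can skip, while decomposition reduces the weight count outright; both usually need some retraining to recover accuracy.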

Throughput optimization

In most AI systems, some of these functions might be handled in specialized engines, requiring data to be offloaded and the transform to be loaded back when completed. That’s a lot of added latency (and maybe power compromises), completely undermining performance in your otherwise strong model. NeuPro-M eliminates that issue by connecting all these accelerators directly to a shared L1 cache, sustaining much higher bandwidth than you’ll find in conventional accelerators.

As a striking example, the vector processing unit (VPU), typically used to define custom layers, sits at the same level as the other accelerators. Your algorithms implemented in the VPU benefit from the same acceleration as the rest of the model. Again, no offload and reload are needed to accelerate custom layers. In addition, you can have up to 8 of these NPM engines (all the accelerators, plus the NPM L1 cache). NeuPro-M also offers a significant level of software-controlled bandwidth optimization between the L2 cache and the L1 caches, optimizing frame handling and minimizing the need for DDR accesses.

Naturally, NeuPro-M will also minimize data and weight traffic. For data, accelerators share the same L1 cache. A host processor can communicate data directly with the NeuPro-M L2, again reducing the need for DDR transfers. NeuPro-M compresses and decompresses weights on-chip in transfer with DDR memory. It can do the same with activations.

The proof in fps/W acceleration

CEVA ran standard benchmarks using a combination of algorithms modeled in the accelerators, from native through Winograd, to Winograd+Sparsity, to Winograd+Sparsity+4×4. Both benchmarks showed performance improvements of up to 3X, with power efficiency (fps/W) improving by around 5X for an ISP NN. Compared with CEVA’s earlier-generation NeuPro-S, the NeuPro-M solution delivered smaller area, 4X the performance, and 1/3 of the power.

There is a trend I am seeing more generally to get the ultimate in performance by combining multiple algorithms, which is what CEVA has now made possible with this platform.
