At the DAC show in June I met with folks at Berkeley DA and heard about their Analog Fast SPICE simulator being used inside of the Tanner EDA tools. With the newest release from Tanner called HiPer Silicon version 15.23 you get a tight integration between: Continue reading “Schematic Capture, Analog Fast SPICE, and Analysis Update”

GlobalFoundries Announces 14nm Process

Today GlobalFoundries announced a 14nm process that will be available for volume production in 2014. They are explicitly trying to match Intel’s timeline for the introduction of 14nm. The process is called 14XM for eXtreme Mobility since it is especially focused on mobile. The process will be introduced just one year after 20nm, so an acceleration of about a year over the usual two year process node heartbeat.

The process is actually a hybrid, a low risk approach to getting (most of) the power and performance advantages of 14nm without a lot of the costs. The transistor is a 14nm FinFET but the middle-of-line and back-end-of-line are unchanged from 20nm, specifically the 20nm-LPM process. So in effect it is a 20nm process with 14nm transistors (at 20nm spacing). But this process gets a lot of the advantage of 14nm. It has 56% higher frequency at low operating voltages and 20% higher at high operating voltages. Or the voltage can be reduced by 160-200mV, resulting in a 40-60% increase in battery life.
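As a rough sanity check on that battery-life figure, recall that dynamic switching power scales roughly with the square of the supply voltage at a fixed frequency. The sketch below is only a back-of-envelope model: the 1.0 V nominal supply is my own illustrative assumption (not a GlobalFoundries number), and it ignores leakage and everything else in a phone that drains the battery.

```python
def dynamic_power_ratio(v_nominal, v_drop):
    """New/old dynamic power for P ~ C * V^2 * f, with C and f held fixed."""
    v_new = v_nominal - v_drop
    if v_new <= 0:
        raise ValueError("voltage drop must be smaller than the nominal supply")
    return (v_new / v_nominal) ** 2


def runtime_gain(power_ratio):
    """Fractional increase in run time for a fixed battery energy budget."""
    return 1.0 / power_ratio - 1.0


if __name__ == "__main__":
    V_NOMINAL = 1.0  # volts; assumed for illustration only
    for drop_mv in (160, 200):  # the reduction range quoted in the text
        ratio = dynamic_power_ratio(V_NOMINAL, drop_mv / 1000.0)
        print(f"-{drop_mv} mV: power x{ratio:.2f}, "
              f"run time +{runtime_gain(ratio) * 100:.0f}%")
```

With that assumed 1.0 V starting point, the two ends of the voltage range give roughly 42% and 56% longer run time, which is at least consistent with the quoted 40-60%. A different nominal voltage would shift the result.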

Of course there is not really any area reduction from 20nm since all the metal pitches are the same. But it avoids the cost of having even more layers requiring double patterning and avoids needing to contemplate triple patterning. This is something I’ve been talking about recently, since there is a risk that future processes have higher performance and lower power but not lower cost. In the short term, for existing high-margin products like the iPhone 5, this might not matter much, but in the long run it is the 1000-fold reduction in cost that drives electronics. If we hadn’t had that then we might still be able to make an iPhone in principle, but it would cost as much as a 1995 mainframe of equivalent computing power.

GF have “fin-friendly migration” (FFM) design rules which they reckon should ease the migration of planar designs, especially if they are already in the GF 20nm process that forms the basis for 14XM, since only the transistors have changed.

Test silicon is being run in GlobalFoundries’ Fab 8 in upstate New York. Like other foundries, GF have been working on FinFETs for years even though they have none in a production process. Because the only thing that is new is the 14nm FinFET transistor, early process design kits are already available and customer product tape-outs are expected next year, with volume production in 2014.

For more information on FinFETs (not specifically GlobalFoundries) here is my blog on a talk from Dr Chenming Hu, the inventor of the FinFET. It explains the motivation for going away from planar transistors to FinFETs (or FDSOI, the only alternative technology that seems viable at present).

ReRAM Based Memory Buffers in SSDs

In a paper at the VLSI meeting in Hawaii, Professor Ken Takeuchi described using an ReRAM buffer with an SSD. He points to some major performance gains that one can expect from such a configuration in terms of energy, speed and lifetime. Is this an opportunity for ReRAM that could spur development of the technology? Read more in a post over at www.ReRAM-Forum.com….

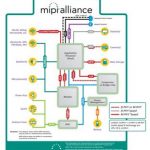

High Speed PHY Interfacing with SSIC, UFS or PCI express in Smartphone, Media tablet and Ultrabook at Lower Power

We recently commented on the announcement from MIPI Alliance and PCI-SIG allowing PCI Express to be used in smartphones, media tablets and Ultrabooks while keeping a decent power consumption, compatible with these mobile devices. The secret sauce is in the High Speed SerDes function selected to interface with these high data bandwidth protocol controllers, like SuperSpeed USB Inter Chip (SSIC) from USB-IF, Universal Flash Storage (UFS) from JEDEC and PCIe from PCI-SIG. This is M-PHY, the second PHY defined by MIPI Alliance: first specified in April 2011 at 1.25 Gbits/s, it was updated in June 2012 to run at 2.9 Gbits/s, and a third generation coming next year will hit up to 5.8 Gbits/s.

You also may read the announcement from the MIPI Alliance: “MIPI® Alliance M-PHY® Physical Layer Gains Dominant Position for Mobile Device Applications”, and see the quote from Joel Huloux, MIPI Alliance Chairman at the end of this post…

To complete the picture, the D-PHY (the first PHY specification defined by the Alliance) was developed primarily to support camera and display applications, and is widely used in systems shipping now. But M-PHY is the function that should be integrated today to cope with data bandwidth demand in the Gb/s range. It can be compared with SATA 6G or PCIe gen-2, but at lower power consumption. Low power is the key word for mobile applications like smartphones and media tablets; it is exactly the feature which explains why ARM based Application Processors dominate mobile systems as strongly as X86 based chipsets dominate the PC world, but not the mobile world. In fact, it would be very nice to have actual figures for the M-PHY power consumption, say at 2.9 Gbit/s on a 28 nm (low power) technology node, and to compare them with PCI Express or USB 3.0 PHY at a similar speed and technology node. I am sure that this information is available “somewhere”; sharing it within the industry could be a good idea…

According to an analyst quoted in the PR from the MIPI Alliance, “MIPI’s D-PHY interface is currently the dominant technology in mobile devices and we anticipate that its M-PHY interface will follow suit.” There is at least one good reason why D-PHY is dominant: if you remember, this function was created to support Display and Camera interfaces. Displays were relying on Low Voltage Differential Signaling (LVDS), a pretty old technology, forcing the use of wide parallel busses at the expense of real estate hungry connectors, so a serial based solution like D-PHY and the Display Serial Interface (DSI) was welcomed. The interface between the Camera Controller IC and the Application Processor varied with the chip supplier, not a comfortable situation for the wireless phone integrator, so the Camera Serial Interface (CSI-2) and D-PHY were also welcomed.

This explains why CSI-2 and DSI were among the first MIPI specifications to be widely adopted. But the Camera Controller chip makers, integrating the controller on the same IC as the image sensor on a mature technology node like 90nm, may have a hard time integrating a multi-Gbit/s SerDes on such a technology. The story is slightly different for the Display controller chip, but I suspect the high voltage needed to drive the display leads to the same situation: mature technology nodes are not high speed SerDes friendly! It may be that D-PHY will still be in use for some time to support Display and Camera interfaces, and this is consistent with the feedback from IP vendors like Synopsys, still seeing demand for D-PHY in 28nm…

Now we can come to the up-to-date M-PHY, which, as wisely noted by a clever analyst, “…will follow suit”. To prepare the quote I made for the MIPI Alliance in the M-PHY related PR, I interviewed several IP vendors, as I thought their answers would be a good indication of how popular the function was. So my question was “How many RFQs did you receive (for M-PHY)?”, as I knew that a question about the number of design-ins, or sales, would not be answered. As they knew that the answers might be published, let’s have a look at the respective answers from Synopsys, Mixel, Cosmic Circuits and Arteris.

Arteris does not sell any PHY IP, but their Low Latency Interface (LLI) IP has to be integrated with M-PHY. Arteris claims that LLI is used in ten projects; if you consider that the specification was issued in February this year, that’s a pretty good adoption rate! Most probably, the fact that LLI allows sharing a single DRAM between AP and Modem, saving the cost of one DRAM, is a good enough incentive! Arteris’ perception of the market is that the AP chip makers prefer using in-house designed M-PHY, at least for the time being. Our perception is that the chip makers adopting LLI are probably the market leaders, and they have dedicated PHY design teams. This may change when the Tier-2 and Tier-3 chip makers also adopt LLI, according to Kurt Shuler, VP Marketing for Arteris.

This remark from Arteris makes the answer from Navraj Nandra, Director of Marketing for PHY IP products with Synopsys, even more interesting, as Synopsys claims to have had 120 RFQs for M-PHY. Let’s make it clear: a Request For Quotation (RFQ) does not mean that a product will be sold in the end. But it is a good indication of the market behavior with respect to M-PHY.

I would like to thank Ganapathy Subramaniam, CEO of Cosmic Circuits, as he was the first to answer (during the week-end), saying that the company has seen 10+ RFQs for the M-PHY. As far as I am concerned, my estimate would be that the M-PHY could generate up to 20 IP sales in 2012, but I may be optimistic if we consider that many of the functions will come from internal sourcing…

The year 2013 could be very interesting to monitor, as numerous functions like PCI Express, UFS and SSIC, on top of the MIPI specific functions like LLI, DigRF v4, DSI-2 or CSI-3, could be integrated, leading to controller IP sales (for the above mentioned IP) and M-PHY IP sales. This could happen not only for chips used in mobile systems, but also in the traditional PC and PC peripherals segments, as wisely noted by Brad Saunders, Chairman/Secretary of the USB 3.0 Promoters Group: “MIPI’s low-power physical layer technology makes it possible for the PC ecosystem to benefit from the SSIC chip-to-chip interface”.

Quote from MIPI Alliance Chairman: “M-PHY has truly become the de-facto standard for mobile device applications requiring a low-power, scalable solution,” said Joel Huloux, Chairman of the Board of MIPI Alliance. “We are pleased to join with our partners at JEDEC, USB IF, and PCI-SIG, and MIPI member companies, to advance solutions that push the envelope in interface technology.”

Eric Esteve – from IPNEST

Synopsys-Springsoft: Almost Done

Synopsys announced today that they had cleared the two main hurdles to acquiring SpringSoft. Remember, SpringSoft is actually a public Taiwanese company so has to fall in line with Taiwanese rules. The first hurdle is that they have obtained regulatory approval in Taiwan for the acquisition (roughly equivalent to FTC approval in the US, I think). And second, over 51% of the outstanding shares of SpringSoft have been tendered (roughly equivalent to voting in favor of the merger: once 51% of the shares have been tendered then Synopsys has to purchase the remaining shares and take over the company). Synopsys expects the deal to close definitively on October 1st next week.

UPDATE: actually Synopsys contacted me to point out that the deal doesn’t actually close on October 1st. They will take control of SpringSoft on that date and will start to consolidate SpringSoft financials into Synopsys financials. The deal will technically close later.

SpringSoft has two product lines that are completely separate, except that they are sometimes purchased by the same company.

The Laker (Laker³ is the latest version) product line is a layout editor with a lot of advanced analog placement and routing capability. Synopsys already had a layout capability. Then they bought Magma who had another, reputedly better one. Then they bought Ciranova who had a well-regarded analog placer. Now with SpringSoft they have yet another. I have no idea which technology will survive or how they will merge all this in the end.

The Verdi (Verdi³ is the latest version) product line is for functional verification. SpringSoft don’t have their own simulation capability but Verdi allows you to manage the verification process and analyze results. Synopsys also have verification environments (not to mention simulators). Again, over time, presumably these technologies will be merged.

They also have some interesting FPGA-based technology called ProtoLink. This allows FPGA-based verification to be done much more effectively by being able to change which signals are probed on the fly without needing to recompile the entire netlist, which for a big design is very slow. I don’t think Synopsys have anything like it.

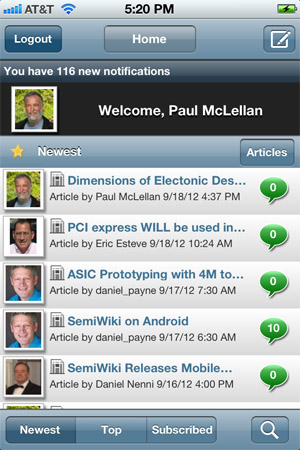

SemiWiki? There’s an App for that

The iPhone version of the SemiWiki App is now available. Download it from the iTunes store, it’s free. The App allows you to look at similar things to the website but much more conveniently adapted to fit the small screen. When you first start up the App you can log in (assuming you are a SemiWiki registered user and why would you not be).

For example, here is the front page of SemiWiki, with all the articles in order. You can, of course, scroll to get to more articles below. And if you tap on any particular article you get to read it, formatted for the small screen.

It only went live today so I’m not going to claim that I’ve had extensive experience of playing with it, but it certainly seems easy to use and everything seemed to work as you would expect.

Daniel Payne did a more detailed explanation of how to navigate within the App here. He was actually discussing the Android version of the App but since they are essentially the same (once you’ve got the App installed) you can read his blog entry and it all applies just the same on iPhone, except that to get to the “8 choice” top menu you tap on the “home” button in the middle of the top row. That will get you to here:

The App is a big advance on just trying to run SemiWiki’s regular website in Safari (or any other browser) where everything is always the wrong size, and it takes more clicks than you would like to navigate around.

It’s on the App Store here.

PCI express WILL be used in Smartphone and Media Tablet… opening infinity of new opportunities

Looking at the various Interface protocols like HDMI, SuperSpeed USB, Universal Flash Storage (UFS) or even SATA integrated in Application Processors for smartphones and media tablets, one extremely powerful Interface protocol, already in use in electronic systems from various market segments from PC, PC Peripherals, Networking, Computing and Embedded to Test Equipment (and I have probably forgotten some), was still banned from Mobile applications, due to the high power consumption of the PCIe PHY layer. As a SemiWiki reader, you are smart and know that I am talking about PCI Express!

The MIPI Alliance has issued a set of specifications (not “standards”, according to the MIPI Alliance terminology) that smartphone users rely on all the time. The most famous are Camera Serial Interface (CSI-2 or CSI-3), Display Serial Interface (DSI, DSI-2) and Low Latency Interface (LLI), which allows sharing the same physical DRAM between the AP and the Modem; the list of functional specifications has become longer and longer since the creation of the MIPI Alliance in 2003. To support physical data exchange, MIPI defines two types of PHY: D-PHY (half-duplex, up to 80 Mb/s) and M-PHY (dual-simplex, meaning the protocol can send and receive data at the same time; first defined in April 2011 at 1.25 Gbits/s, with an update released in June 2012 running at 2.9 Gbits/s and a third generation coming next year that will hit up to 5.8 Gbits/s).
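To put those M-PHY line rates in perspective, remember that they are raw bit rates on the wire. The sketch below converts them to an approximate payload bandwidth per lane, assuming 8b/10b line coding (so 80% efficiency) and ignoring any protocol overhead above the PHY. Both of those are my simplifying assumptions, and the function name and labels are purely illustrative.

```python
# Raw line rates quoted in the text, in Gbit/s per lane.
LINE_RATES_GBPS = {
    "April 2011 specification": 1.25,
    "June 2012 update": 2.9,
    "third generation (next year)": 5.8,
}


def payload_mbytes_per_s(line_rate_gbps, lanes=1, coding_efficiency=0.8):
    """Approximate payload bandwidth in MB/s.

    coding_efficiency=0.8 models 8b/10b coding (8 payload bits carried by
    10 line bits); overhead from the protocol layers above is not modeled.
    """
    payload_bits_per_s = line_rate_gbps * 1e9 * coding_efficiency * lanes
    return payload_bits_per_s / 8 / 1e6


if __name__ == "__main__":
    for label, rate in LINE_RATES_GBPS.items():
        print(f"{label}: {rate} Gbit/s raw, "
              f"about {payload_mbytes_per_s(rate):.0f} MB/s payload per lane")
```

Under these assumptions the three generations work out to about 125, 290 and 580 MB/s per lane; real throughput will be lower once the protocol layered on top (SSIC, UFS, PCIe) adds its own overhead.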

M-PHY is the piece which has allowed MIPI Alliance to close deals with:

- USB-IF, so a SuperSpeed USB (USB 3.0) controller can be interfaced with M-PHY; the “merged” protocol is named SSIC, and allows interfacing two ICs in the system;

- JEDEC, for Universal Flash Storage (UFS) support in mobile applications, allowing the AP to interface with Mass Storage devices;

- And now the PCI-SIG: the organization will define a new variant of its physical link layer software to run the M-PHY.

The adaptation of PCIe protocols to operate over the M-PHY is targeted to be released as an ECN to the PCIe 3.0 Base specification and will achieve full integration into the PCIe 4.0 Base specification upon its release. The initial application of this technology is anticipated to be high-performance wireless communications with other applications based on device design requirements. Future implementations are expected in the handheld device market, including smartphones, tablets and other ultra-low power applications. Implementers of this technology must be members of both PCI-SIG and MIPI Alliance in order to leverage member benefits including access to licensing rights and specification evolutions.

As far as I am concerned, I will probably need to rework this forecast for USB, HDMI, DDRn, PCIe and MIPI!

For those who remember the blog “Interface Protocols USB 3.0, HDMI, MIPI…Winner and Losers in 2011”, the conclusion about PCI Express was: “PCI Express pervasion has been strong in almost every segment (except in Wireless handset, Consumer electronic or Automotive), generating growing IP sales year after year…” Opening the door to PCI Express usage in smartphones and media tablets will create many new opportunities: for example, linking an applications processor to an external 802.11ac controller. Or, when using the NVM Express specification, benefiting from an internal SSD in a media tablet (or smartphone), at a decent cost in terms of link power consumption. These are only a couple of examples, but linking these two worlds (everything mobile on one hand, the multiple applications already developed in the PC world on the other hand) will create an infinity of new possibilities, limited only by your imagination!

Eric Esteve – from IPNEST

See here the quotes from the chairman of MIPI and PCI-SIG:

“We’re excited about the opportunities this collaboration creates for the Mobile industry, MIPI Alliance and its members,” said Joel Huloux, MIPI Alliance Chairman of the Board. “By leveraging our proven M-PHY technology that meets mobile low-power requirements, coupled with the reuse of existing PCIe IP, component and device manufacturers can recoup their investments faster, can drastically reduce the time for product development and validation, and can thus hasten the delivery of innovative solutions to the market.”

“This collaboration brings together decades of PCIe innovation in PCs with the proven technology of the M-PHY specification that meets the low-power needs of handheld devices,” said Al Yanes, chairman and president, PCI-SIG. “As PCs evolve to thin and light platforms and tablets and smartphones take on the role of primary computing devices, consumers demand a seamless, power-efficient user experience. We’re pleased to work with MIPI Alliance to deliver a technology solution that meets these demands.”

ASIC Prototyping with 4M to 96M Gates

I’ve used Aldec tools like their Verilog simulator (Riviera PRO) when teaching a class to engineers at Lattice Semi, so to get an update about the company I spoke with Dave Rinehart recently by phone. A big product announcement by Aldec today is for their ASIC prototyping system with a capacity range of 4 Million to 96 Million gates, called the HES-7. Continue reading “ASIC Prototyping with 4M to 96M Gates”

SemiWiki on Android

This morning I got to try out the new Android app for SemiWiki, something you will benefit from if you’re on the go with an Android phone and want to stay up to date. It’s an intuitive app, so you’ll be up and running within minutes. My first step was to visit the Play Store, search for the app using “Semiwiki”, install it and log in: Continue reading “SemiWiki on Android”

SemiWiki Releases Mobile Apps!

In response to the growing mobile audience and increasing cost of data plans, SemiWiki has uploaded Android and Apple iOS applications. The SemiWiki mobile apps are available to you at no cost and are advertisement free. The Android app is available TODAY but the iOS app is still PENDING Apple approval (up to 60 days) versus Google Android (24 hours or less). Why? Because Apple individually reviews the apps and Google uses crowd sourcing (user reviews) to track app quality. As a result, I will not order my iPhone 5 until the SemiWiki iOS app is approved. Take that Apple!

*** Update: The SemiWiki Apple app is up! And I purchased my iPhone 5 as stated. Very nice device! It will do well!

Additionally, not only does Apple charge $99 PER YEAR to have an app in the Apple Store (versus the one time Android fee of $28 from Google), Apple also requires you to have a Mac with the new OS to validate your app. So I now own a MacBook Air, which is a very nice laptop by the way, but one I do not really need otherwise. Maybe this is one reason why Android is catching Apple?

According to Daniel Payne’s blog “EDA Industry Talks about Smart Phones and Tablets, Yet Their Own Web Sites are Not Mobile-friendly”, SemiWiki is now the first EDA media site with a mobile app. Let’s hope we start a trend here!

SemiWiki Q2 2012 analytics can be found HERE. To expand on that here are some of the mobile analytics:

Q2 2012 SemiWiki numbers:

Unique Visitors: 85,012

Pageviews: 506,316

15.9% of the visits were from mobile devices:

By Device:

Google Analytics also sorts by service provider, operating system, input device, screen resolution, demographics, and behavior. Can you imagine what other types of tracking information Apple, Google, and your service provider have on you? Would you trust Samsung with all of your personal information? Let’s just agree that nothing we do on our mobile devices is private.

My son Ryan and I cannot review our own apps since it would be like my wife asking me if her butt looks big in a new outfit that is not returnable. So I have asked Daniel Payne, our resident Android user, to review the SemiWiki app. As soon as the Apple app is up I will ask Paul McLellan, our resident Apple user, to review it. Please download the Android app if you can and let us know how you like it. It is Version 1.3 and we definitely have some more work to do so be kind if you do a review on the Android site.

Speaking of reviews, no sooner had we uploaded the apps than we got this email, to which we will definitely not respond, but it certainly makes you wonder:

—————————- Original Message —————————-

Subject: Android / iOS reviews offered

From: “sales”

Date: Wed, September 12, 2012 7:39 am

————————————————————————–

Hello Team Semiwiki,

We are reaching out to you to offer our services for android apps/iOS Apps. These services will UNDOUBTEDLY increase your app’s popularity in Google Play store/ iPhone app store.

We offer positive 5 ★★★★★ star review comments from authentic users (no fake , no spam) which will ensure discoverability and reputation for your app.

· Currently we are offering this service at introductory rate of 3 $ per review.

· We can meet upto 100s of review comments for any app.

· No need to pay in advance , we will let you review a sample batch for free.

· Everyone is using this advantage so don’t get left behind , boost your app.

· You can provide exact comments accentuating features of your app.

Please feel free to reach out to us by replying to this email , we look forward to working with you and starting a symbiotic professional relationship.

All payments to be done via Paypal.

-Regard

Team eDigiMark

Also see: SemiWiki on Android and SemiWiki on iPhone