Looking at the recent flow of press releases (PR), it was interesting to see how TSMC has solved a communication dilemma. First, let’s make clear that the #1 silicon foundry has to work with each of the big three EDA companies. As a foundry, you don’t want to lose any customer, so you support every major design flow. Choosing any other strategy would be stupid.

The first PR came on October 12, about the Chip on Wafer on Substrate tape-out. Here is an extract: “TSMC today announced that it has taped out the foundry segment’s first CoWoS™ (Chip on Wafer on Substrate) test vehicle using JEDEC Solid State Technology Association’s Wide I/O mobile DRAM interface… A key to this success is TSMC’s close relationship with its ecosystem partners to provide the right features and speed time-to-market. Partners include: Wide I/O DRAM from SK Hynix; Wide I/O mobile DRAM IP from Cadence Design Systems; and EDA tools from Cadence and Mentor Graphics.”

As you can see, design tools from both Cadence and Mentor are mentioned, and Cadence gets extra credit: the test vehicle is based on the company’s Wide I/O mobile DRAM IP. We will look at Wide I/O in more depth soon on this blog.

Cadence and Mentor? Looks like one is missing!

Then, today, the industry learned that Synopsys has “received TSMC’s 2012 Interface IP Partner of the Year Award for the third consecutive year. Synopsys was selected based on customer feedback, TSMC-9000 compliance, technical support excellence and number of customer tape-outs. Synopsys’ DesignWare Interface IP portfolio includes widely used protocols such as USB, PCI Express, DDR, MIPI, HDMI and SATA that are offered in a broad range of processes from 180 nanometer (nm) to 28nm.”

If you want to know more about the Interface IP market, which was worth over $300 million in 2011, you should take a look at this post.

The TSMC PR about Chip on Wafer on Substrate (CoWoS) shows that Cadence is investing in the memory controller technology of the near future, to be used for 3D-IC in mobile applications. I suggest you read this excellent article from Paul McLellan to understand how CoWoS works from a silicon technology standpoint.

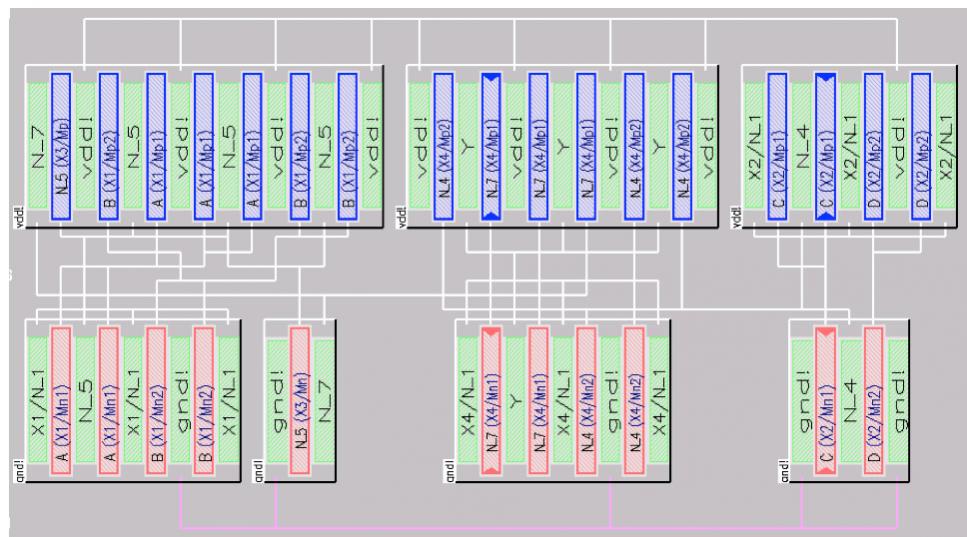

I would rather focus on the Wide I/O memory controller. Here are the key features, as described by Cadence:

Key Features

- Supports Wide I/O DRAM memories compliant with JESD229

- Supports typical 512-bit data interface from SoC to DRAM (4 x 128 bit channels) over TSV at 200MHz offering more than 100Gbit/sec of peak DRAM bandwidth

- Independent controllers for each channel allow optimization of traffic and power on a per-channel basis

- Supports 3D-IC chip stacking using direct chip-to-chip contact

- Supports 2.5D chip stacking using silicon interposer to connect SoC to DRAM

- Priority and quality-of-service (QoS) features

- Flexible paging policy including autoprecharge-per-command

- Two-stage reordering queue to optimize bandwidth and latency

- Coherent bufferable write completion

- Power-down and self-refresh

- Advanced low-power module can reduce standby power by 10x

- Supports single- and multi-port host busses (up to 32 busses with a mix of bus types)

- Priority-per-command (AXI4 QoS)

- BIST algorithm in hardware enables high-speed memory testing and has specific tests for Wide I/O devices

It’s amazing! Over the last ten years, we have seen a massive move from parallel to serial interfaces: think of PCI moving to PCI Express, or PATA being completely replaced by SATA in storage applications in less than five years, and the list is long. With the Wide I/O concept, we see that a massively parallel (512-bit) interface running at 200 MHz (compared with LPDDR3 at 800 MHz DDR) can offer both better bandwidth, up to 17 GB/s, and better power per transfer than an LPDDRn solution.
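The bandwidth figures can be checked with simple arithmetic. The Python sketch below is a back-of-the-envelope calculation, not a model of any real controller. It assumes one transfer per clock for Wide I/O (single data rate) and a 32-bit LPDDR3 channel; the 32-bit width and the function names are assumptions made for illustration, not figures from Cadence or TSMC.

```python
def peak_bandwidth_gbytes(bus_width_bits, clock_mhz, transfers_per_clock=1):
    """Peak bandwidth in GB/s (1 GB = 1e9 bytes), ignoring protocol overhead."""
    bits_per_second = bus_width_bits * clock_mhz * 1e6 * transfers_per_clock
    return bits_per_second / 8 / 1e9


def clock_for_bandwidth_mhz(target_gbytes, bus_width_bits, transfers_per_clock=1):
    """Clock (MHz) needed to reach a target peak bandwidth on a given bus."""
    bits_per_second = target_gbytes * 1e9 * 8
    return bits_per_second / (bus_width_bits * transfers_per_clock) / 1e6


# Wide I/O: 4 channels x 128 bits = 512 bits, 200 MHz, assumed single data rate.
wide_io = peak_bandwidth_gbytes(512, 200)

# LPDDR3: 800 MHz DDR (two transfers per clock); 32-bit channel is an assumption.
lpddr3 = peak_bandwidth_gbytes(32, 800, transfers_per_clock=2)

print(f"Wide I/O, 512 bit @ 200 MHz: {wide_io:.1f} GB/s")
print(f"LPDDR3, 32 bit @ 800 MHz DDR: {lpddr3:.1f} GB/s")
print(f"Clock for 17 GB/s on 512 bits: {clock_for_bandwidth_mhz(17, 512):.1f} MHz")
```

Under these assumptions, 512 bits at 200 MHz gives 12.8 GB/s, which is the 102.4 Gbit/s behind the “more than 100Gbit/sec” in the feature list. Reaching 17 GB/s on the same 512-bit bus would take a clock of about 266 MHz.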

Anything magic here? The higher bandwidth is easily explained: adding enough 64-bit wide busses will allow Wide I/O to pass LPDDR3 performance. The reason why the power per transfer is better is more subtle. Because this is a 3D technology, the connection between the SoC and the DRAM is made in the third (vertical) dimension, as shown in the picture from Qualcomm, so the connection is shorter than any connection made on a board. Moreover, the capacitance (due to the bumping or bonding material and to the track on the PCB) is minimized with a 3D connection. As a result, the power per bit transferred at a given frequency is lower. I did not check how this was computed, but I am not shocked by this result…
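The capacitance argument can be illustrated with the usual first-order estimate of CMOS switching power, P = activity × C × V² × f. The Python sketch below is only that estimate. The two capacitance values, the 1.2 V swing and the 0.5 activity factor are hypothetical placeholders chosen to show the trend; they are not measured data from Qualcomm, Cadence or TSMC.

```python
def dynamic_power_watts(capacitance_farads, voltage_volts, frequency_hz, activity=0.5):
    """First-order switching power of one signal line: activity * C * V^2 * f."""
    return activity * capacitance_farads * voltage_volts ** 2 * frequency_hz


# Hypothetical load capacitances per signal line (illustrative assumptions only).
C_BOARD = 5e-12   # package bump or bond wire plus PCB track
C_3D = 0.5e-12    # short vertical connection between stacked dies

VOLTAGE = 1.2      # assumed I/O swing in volts, same for both cases
FREQUENCY = 200e6  # same toggle frequency for both cases, to isolate capacitance

p_board = dynamic_power_watts(C_BOARD, VOLTAGE, FREQUENCY)
p_3d = dynamic_power_watts(C_3D, VOLTAGE, FREQUENCY)

print(f"Board-level line: {p_board * 1e3:.3f} mW")
print(f"3D-stacked line:  {p_3d * 1e3:.3f} mW")
print(f"Ratio: {p_board / p_3d:.1f}x")
```

At equal voltage and frequency, the power ratio is simply the capacitance ratio, which is why shortening the connection pays off directly in power per bit.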

So, the Wide I/O memory controller looks like a superb new technology developed by Cadence, and the mobile market is healthy enough (an understatement!) to justify introducing it. But, as Qualcomm mentions in the picture above: “Qualcomm want this but also competitive pricing”…

Eric Esteve from IPNEST