In the last few years, IP design has grown significantly compared to the rest of the semiconductor industry. New IP start-ups are opening across the world, particularly in India and China. Amid this rush, I wanted to understand the actual dynamics driving this business and whether all of these IPs follow quality standards. Quality is a must considering that IP is integrated into high-end SoCs. I found a very nice opportunity to talk to Ritesh Saraf, CEO at OmniPhy. OmniPhy develops specialized IPs for top-tier companies, such as SerDes PHYs including HDMI 2.0, Ethernet, USB, PCIe and SATA PHYs.

What I learned from Ritesh is that there are a few major reasons for the growth of the IP business:

a) The number of protocols, their complexity and the required speed of execution have grown. This has forced SoC vendors to source IPs from third parties and integrate them into their SoCs rather than develop everything themselves. Only a few players are developing IPs themselves.

b) Emerging economies like China and India have proved their mettle in making successful IPs at lower cost. Also, there is good availability of talent in these regions: one can find designers with 6-8 years of experience in AMS design, which is generally difficult in the USA. This often tips the scales in a “buy vs. make” decision.

Earlier, SoC vendors were satisfied with off-the-shelf IPs from third-party vendors. In recent times, however, they are also demanding differentiation and customization at a faster pace.

Considering the gold rush towards developing IPs, with new entrants, short cycles and the desire to have them at lower cost, I was concerned about the quality of these IPs: is it being sacrificed somewhere? It was interesting to learn from Ritesh that, to lower the cost of IP, vendors may cut costs somewhere in the development process, the verification process, the tools used for design management and so on. It’s important to have designers experienced with taking designs through production; otherwise there can be failures either before production (initiating re-spins) or later in the field.

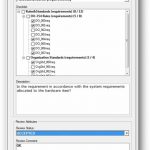

So I wanted to know what OmniPhy does to maintain the quality of their IPs. Ritesh described in detail various aspects of their quality process, such as controlled design management to use the correct versions of cell views in the entire design flow, diligent design reviews, various levels of testing and signing-off through a comprehensive checklist of procedures with extensive rules. They require their designers to have extensive experience in order to make effective decisions during the design process.

Ritesh said that using effective quality tools makes a difference. For digital design, they have been using open source tools such as SVN for design management. But analog designs have a different flow: development and verification proceed hand-in-hand between different designers in the team, and that needs much tighter control of design revisions. AMS designs involve both analog and digital designers, who think differently, so there is a need for an intelligent design management tool that eases the pressure of check-in/check-out synchronization, supports sharing of cell views between designers, and ensures that the correct views are used at higher levels of the design, all while being seamlessly integrated into the AMS design flow. A lot of bugs appear during top-level verification, and procedural diligence is needed to flush them out.

Ritesh further talked about the earlier days of small analog designs (such as an IO or ADC with 10-20 cells), when designers used to manage them manually, something that is not possible today. Not using a good design management (DM) tool is a big risk. OmniPhy has analog designs with thousands of cells, with 20-25 designers working on one design at a time. It’s imperative to have an integrated DM and control solution for analog IP design to ensure quality.

OmniPhy uses ClioSoft’s SOS for design management. SOS is a good vehicle to control the design flow: the verification team does not need to wait; they can check out the DUT from the system, get all the information about the changes (who, what, why…) and continue with the verification process. The tool tracks the changes to be verified and the resolution of all issues. At tape-out time, management can use SOS to freeze the design (i.e., make the completed cells read-only). Any change at that point would be based on management’s decision. In other words, it’s a nice control on creeping elegance! The DM system by ClioSoft provides a greater level of confidence in the state of the design.
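To make the check-in and freeze behavior described above concrete, here is a minimal Python sketch of the idea. It is only a toy model and not the SOS interface: the `Cell` and `Revision` classes, the `freeze` helper, the `approved_by_management` flag and the cell and designer names are all hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Revision:
    number: int
    who: str   # designer who checked the change in
    what: str  # view that changed (schematic, layout, ...)
    why: str   # reason for the change


@dataclass
class Cell:
    name: str
    revisions: list = field(default_factory=list)
    frozen: bool = False

    def check_in(self, who, what, why, approved_by_management=False):
        # After the freeze, a change goes in only with explicit approval.
        if self.frozen and not approved_by_management:
            raise PermissionError(f"{self.name} is frozen for tape-out")
        rev = Revision(len(self.revisions) + 1, who, what, why)
        self.revisions.append(rev)
        return rev

    def changes_since(self, last_verified):
        # What the verification team still has to look at.
        return [r for r in self.revisions if r.number > last_verified]


def freeze(cells):
    # Hypothetical tape-out freeze: mark every cell read-only.
    for cell in cells:
        cell.frozen = True


pll = Cell("pll_top")
pll.check_in("designer_a", "schematic", "initial version")
pll.check_in("designer_b", "layout", "fix DRC violation")
pending = pll.changes_since(1)  # revisions not yet verified
freeze([pll])
```

In this toy model, a late `check_in` on a frozen cell raises an error unless it is explicitly approved, which mirrors the point that any change after the freeze is a management decision.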

Ritesh was kind enough to share some of the screenshots of their actual designs and flows.

[Analog PHY IP – Data Management complexity]

An HDMI 2.0 PHY design like that above has 8-10 schematic designers, 8-10 layout designers and about 4 verification designers.

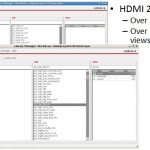

[Data Flow for AMS PHY IP]

The analog design flow uses Cadence Virtuoso and ClioSoft SOS, whereas the digital flow uses the open source SVN version control system. The digital flow is easy to maintain because there is a clear distinction between development and test, but in the case of analog design, an integrated DM is a must.

This is the top-level assembly of a PHY. All custom design views are managed in ClioSoft SOS via DFII (the integration of SOS in Virtuoso). Digital PnR blocks are checked into the same library. The DM system assures that the blocks at the top level have passed through final verification and ensures a stable state of the design data at the top level.
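The kind of check implied here, that every block used at the top level is at a revision that passed final verification, can be sketched in a few lines of Python. This is an illustrative assumption about how such a check could be expressed, not how SOS implements it; the function name, block names and revision numbers are made up.

```python
def unverified_blocks(top_level, verified):
    """Return blocks whose revision used at the top level is not signed off.

    top_level: dict of block name -> revision number used in the top-level assembly
    verified:  dict of block name -> set of revision numbers that passed verification
    """
    return sorted(
        name
        for name, rev in top_level.items()
        if rev not in verified.get(name, set())
    )


# Hypothetical data: pll_top is at revision 12, but only revision 11 was verified.
top_level = {"tx_driver": 7, "pll_top": 12, "digital_pcs": 3}
verified = {"tx_driver": {6, 7}, "pll_top": {11}, "digital_pcs": {3}}

print(unverified_blocks(top_level, verified))  # → ['pll_top']
```

An empty result would mean the top-level data is in a stable, fully verified state.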

Considering this complexity in designing IPs, I asked Ritesh whether the ROI of the DM tool justifies its cost. Ritesh happily said, “It doesn’t cost at all, considering the savings in re-spins. If you talk in monetary terms, it’s just ~2% of our total EDA spend.”
