I got an invite to the SNUG (Synopsys User Group meeting) keynotes this year. I could only make it to the second keynote, but what a treat that was. The speaker was Dr. Peter Stone, professor and chair of CS at UT Austin. He also chaired the inaugural panel for the Stanford 100-year study on AI. This is a guy who knows more about AI than most of us will ever understand. He is also a very entertaining speaker who knows how to do very relatable research and how to communicate it.

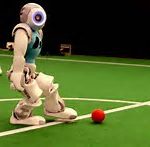

His team’s research is built around a fascinating problem which I expect will generate new discoveries and new directions for research for many years to come. Officially, this is work in his Learning Agents Research Group (LARG); slightly less officially, it is about learning multi-agent reasoning for autonomous robots; but mostly (in this talk at least), it’s about building robot teams to play soccer.

The immediate appeal, of course, is simply watching and speculating on how the robots operate. These little guys play rather slowly, without much in the way of nail-biting moments, but it’s fascinating to watch how they do it, especially the cooperative behavior between robots on the same team and the competitive behavior between teams. When one robot gets the ball, forwards move downfield so they can take a pass. Meanwhile, opponents move toward the player with the ball or drop back upfield to intercept a pass or block shots. The research behind this is so rich and varied that the speaker said he could easily spend an hour presenting any one aspect of what it takes to make this happen. I’m going to touch briefly on a few things he discussed that should strike you immediately.

When we think about AI, we generally think about a single intelligent actor performing a task: recognition, playing Jeopardy or Go, or driving a car. The intelligence needs to adapt in at least some of these cases, but there is little or no need to cooperate except to avoid problems. Robot soccer, however, requires multi-agent reasoning: multiple actors must work together to meet a goal. We talk about cars doing something similar someday, though what I have seen recently still has each car pursuing its own goal, with adjustments to avoid collisions (multi-agent reasoning would focus on team goals such as congestion management).

You might think this could be handled by individual robot intelligence taking care of local needs and a master intelligence handling team strategy, or perhaps through collaborative learning. But master command-center options were disallowed from the outset, and team learning was nixed by the drop-in team challenge, which stipulates that any player can be replaced by a new member the other players have never worked with. Each player must therefore be able to assess, during the game, what its teammates can and cannot do, and plan its actions around that knowledge. Obviously, players also need to adapt as circumstances change. The “master” strategy becomes a collective, emergent plan rather than a supervising intelligence.
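To make the drop-in requirement a little more concrete, here is a minimal Python sketch of one common way an agent can size up an unfamiliar teammate (the research literature calls this setting ad hoc teamwork): keep a belief over a few candidate teammate models and update it with Bayes' rule each time the newcomer acts. The model names, action probabilities, and the pass-probability threshold are all hypothetical; this is an illustration under those assumptions, not LARG's implementation.

```python
"""Minimal sketch: inferring an unfamiliar teammate's behavior during play.

Assumption (hypothetical): the agent keeps a small library of teammate models,
each giving P(action | model) for what the teammate does with the ball.
"""
from typing import Dict

# Hypothetical teammate models: probability of each action when holding the ball.
TEAMMATE_MODELS: Dict[str, Dict[str, float]] = {
    "passer":   {"pass": 0.7, "dribble": 0.2, "shoot": 0.1},
    "dribbler": {"pass": 0.1, "dribble": 0.8, "shoot": 0.1},
    "shooter":  {"pass": 0.1, "dribble": 0.3, "shoot": 0.6},
}


def update_belief(belief: Dict[str, float], action: str) -> Dict[str, float]:
    """Bayes' rule: posterior over teammate models after observing one action."""
    unnormalized = {m: p * TEAMMATE_MODELS[m][action] for m, p in belief.items()}
    total = sum(unnormalized.values())
    return {m: w / total for m, w in unnormalized.items()}


def predicted_pass_probability(belief: Dict[str, float]) -> float:
    """Chance the teammate passes next, averaged over the current belief."""
    return sum(p * TEAMMATE_MODELS[m]["pass"] for m, p in belief.items())


def choose_own_action(belief: Dict[str, float], threshold: float = 0.5) -> str:
    """Run downfield to receive only if a pass looks likely (hypothetical rule)."""
    if predicted_pass_probability(belief) >= threshold:
        return "move_downfield"
    return "support_ball_carrier"


if __name__ == "__main__":
    # No prior experience with the newcomer: start from a uniform belief.
    belief = {m: 1 / len(TEAMMATE_MODELS) for m in TEAMMATE_MODELS}
    print("start", choose_own_action(belief))
    for observed in ["pass", "pass", "dribble", "pass"]:
        belief = update_belief(belief, observed)
        print(observed, {m: round(p, 2) for m, p in belief.items()},
              choose_own_action(belief))
```

With a uniform starting belief the robot stays in support; once it has watched the newcomer pass, the belief shifts toward the "passer" model and the robot starts moving downfield to receive.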

A second consideration is managing the time dimension. In the “traditional” AI examples above, intelligence is applied to analyzing a static (or mostly static) context. There can be a sequence of static contexts, as in board games, but each move is analyzed independently. Autonomous cars (as we hear about them today) may support a little more temporal reasoning, but perhaps only enough to adjust corrective action within a limited time window. Soccer robots, by contrast, must deal with a constantly changing analog state space: they must recognize objects in real time, contend with multiple cooperating and opposing players and a ball somewhere on the field, and cooperatively reason about how to move toward a future objective, scoring a goal while defending their own.

A third consideration is how these agents learn. Again, the “traditional” approach, based on massive, carefully labeled example databases, is impractical for soccer robots. LARG uses guided self-learning through a method called reinforcement learning (RL). Here a robot starts from some policy for making decisions, takes actions, and is rewarded based on the result of each action (possibly through human feedback, which was cited as a way to accelerate learning). This is the reinforcement. Over time, policies improve through closed-loop optimization. An important capability here is understanding sequences of actions, which can be formalized as Markov decision processes (MDPs) with probabilistic state transitions.
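The reward-driven loop described above can be sketched with tabular Q-learning, one standard textbook RL algorithm, on a tiny MDP. Everything about the environment is an invented assumption: a robot on a 1-D strip of cells must reach the ball, a step toward the ball slips with 10% probability (the probabilistic transition), each move costs -1, and reaching the ball pays +10. It illustrates the general technique only, not the specific method LARG uses.

```python
"""Minimal sketch: tabular Q-learning on a toy Markov decision process.

All environment details are hypothetical: cells 0..GOAL on a line, ball at GOAL,
a step toward the ball succeeds with probability 0.9, otherwise the robot slips.
"""
import random
from typing import Dict, Tuple

GOAL = 4
ACTIONS = ("toward_ball", "away_from_ball")
QTable = Dict[Tuple[int, str], float]


def step(state: int, action: str, rng: random.Random) -> Tuple[int, float, bool]:
    """Sample one MDP transition and return (next_state, reward, done)."""
    if action == "toward_ball":
        next_state = state + 1 if rng.random() < 0.9 else state  # 10% slip
    else:
        next_state = max(0, state - 1)
    if next_state == GOAL:
        return next_state, 10.0, True
    return next_state, -1.0, False


def q_learning(episodes: int = 2000, alpha: float = 0.1, gamma: float = 0.95,
               epsilon: float = 0.1, seed: int = 0) -> QTable:
    rng = random.Random(seed)
    q: QTable = {(s, a): 0.0 for s in range(GOAL + 1) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            # Epsilon-greedy: usually follow the current policy, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action, rng)
            # The reward reinforces the action: move Q toward the observed reward
            # plus the discounted value of the best action in the next state.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q: QTable) -> Dict[int, str]:
    """The improved policy: pick the highest-valued action in each non-goal cell."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

After training, the greedy policy moves toward the ball from every cell. Human feedback, as mentioned in the talk, could in the simplest case be folded in as an extra term added to `reward`; that is left out here to keep the sketch small.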

One other component caught my attention. Team soccer is a complex activity; you can’t train it as a single task. In fact, even getting a robot to walk stably is a complex task. So they break complex tasks down into simpler ones, in what they call layered learning. One example he gave was walking fast to the ball and then doing something with it. Apparently you can train a robot to walk quickly as one task, but then it falls over when it gets to the ball. They had to break the larger task into 2-3 layers to manage it effectively.
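The walk-then-handle-the-ball example can be sketched as two learning layers, where the first layer's result is frozen and becomes part of the second layer's problem. The physics constants, the two layer objectives, and the use of a plain grid search as each layer's "learner" are all hypothetical stand-ins chosen so the example stays tiny; this shows the layering idea, not LARG's actual layers or learning algorithms.

```python
"""Minimal sketch of layered learning with two hypothetical layers."""
import math
from typing import Callable, Iterable, Optional

BALL_DISTANCE = 3.0      # metres from start to ball (hypothetical)
MAX_STABLE_WALK = 0.65   # walking faster than this tips the robot over, m/s (hypothetical)
DECEL = 0.25             # braking deceleration, m/s^2 (hypothetical)
MAX_ARRIVAL_SPEED = 0.2  # arriving faster than this makes the robot fall, m/s (hypothetical)


def grid_search(candidates: Iterable[float],
                score: Callable[[float], Optional[float]]) -> Optional[float]:
    """Stand-in learner: return the candidate with the best score (None = failure)."""
    best, best_score = None, -math.inf
    for c in candidates:
        s = score(c)
        if s is not None and s > best_score:
            best, best_score = c, s
    return best


def learn_layer1() -> float:
    """Layer 1: learn the fastest walking speed that stays upright."""
    speeds = [round(0.1 * i, 1) for i in range(1, 11)]
    return grid_search(speeds, lambda v: v if v <= MAX_STABLE_WALK else None)


def approach_time(walk_speed: float, slow_down_at: float) -> Optional[float]:
    """Seconds to reach the ball when braking starts `slow_down_at` metres
    before it, or None if the robot falls over or stops short."""
    arrival_sq = walk_speed ** 2 - 2 * DECEL * slow_down_at
    if arrival_sq < 0:
        return None  # braked to a halt before reaching the ball
    arrival = math.sqrt(arrival_sq)
    if arrival > MAX_ARRIVAL_SPEED:
        return None  # still too fast at the ball: falls over
    cruise = (BALL_DISTANCE - slow_down_at) / walk_speed
    braking = (walk_speed - arrival) / DECEL
    return cruise + braking


def learn_layer2(walk_speed: float) -> Optional[float]:
    """Layer 2: with the Layer 1 gait frozen, learn where to start slowing down."""
    distances = [round(0.05 * i, 2) for i in range(41)]

    def score(d: float) -> Optional[float]:
        t = approach_time(walk_speed, d)
        return None if t is None else -t  # quicker approaches score higher

    return grid_search(distances, score)


if __name__ == "__main__":
    speed = learn_layer1()  # trained first, then frozen
    print("layer 1 walk speed:", speed)
    print("no slowdown:", approach_time(speed, 0.0))  # None: falls at the ball
    print("layer 2 slow-down distance:", learn_layer2(speed))
```

With these made-up numbers, Layer 1 settles on 0.6 m/s, charging at the ball at that speed without braking fails (the robot "falls over"), and Layer 2 picks a 0.65 m slow-down distance. Splitting the problem this way means each layer searches a small, well-defined space instead of one large joint space.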

I should in fairness add that this is not all about teaching robots to play soccer. The speaker and his team are applying what they learn here to practical problems as varied as collaborative traffic control at intersections, building-wide intelligence and trading agents. But what a great way to stimulate that research 😉

You can find much more information HERE and HERE. HERE is one of the many games they have played, and HERE is a fun blooper reel. And there are many more video examples!