It must be a measure of the dim view taken of the automotive industry by Silicon Valley types that, of the 294 slides in Mary Meeker’s annual Trends presentation delivered at this year’s Code Conference, fewer than 10 refer to transportation. The Kleiner Perkins Caufield & Byers partner even managed to avoid using the word “car.”

With the main headline of the much-anticipated report being that the globe has achieved peak smartphone sales, with little new growth to be had, the opportunity to recognize the inexorable upward march of vehicle sales was overlooked. (An analysis of the report and the slides can be found here: https://tinyurl.com/ydbk7qf7 – care of Recode.)

This oversight is especially notable given the report’s focus on data collection. The car has rapidly emerged as the new frontier for data gathering as autonomous vehicle technology begins to take hold. Concerns surrounding data privacy are swirling across the worlds of social media and mobile devices, while car companies are beginning to lay the groundwork for an opt-in culture tuned to the needs of an increasingly connected transportation industry.

Dim view or not, the A-Team – Apple, Alphabet and Amazon – are all keenly interested in exploiting the unexplored world of vehicle data for marketing purposes, with voice-based digital assistants capable of converting the car into a browser on wheels. But leading players in the world of transportation, such as Uber and Tesla, recognize deeper stores of value in using vehicle data to improve transportation experiences, business models and processes.

The car is the ultimate mobile device and it happens to serve the most inefficient business in the world: transportation. Massive amounts of metal are used to move people and goods across crumbling infrastructure at great cost and with relatively low utilization rates (at least of the vehicles themselves).

Upping the efficiency of transportation will require oceans of data, not only to optimize networks for ad hoc, on-demand services such as ride hailing, but also to improve public transportation options and to refine subscription-based vehicle usage models. At the core of transportation inefficiency is the increasingly tenuous vehicle ownership proposition.

In her talk, Meeker does highlight the growing inclination of consumer households to rely on on-demand transportation options in lieu of vehicle ownership. This behavior is reflected in the declining portion of household spending devoted to transportation even as cars continue to become more expensive.

Meeker highlights the shift to cheaper ad hoc transportation options and lauds Uber’s algorithmic acumen. So, she steps up to the edge of the question of potentially declining vehicle ownership but steps back before making the logical leap. It is almost as if cars are irrelevant in her calculations, or at least represent a major blind spot.

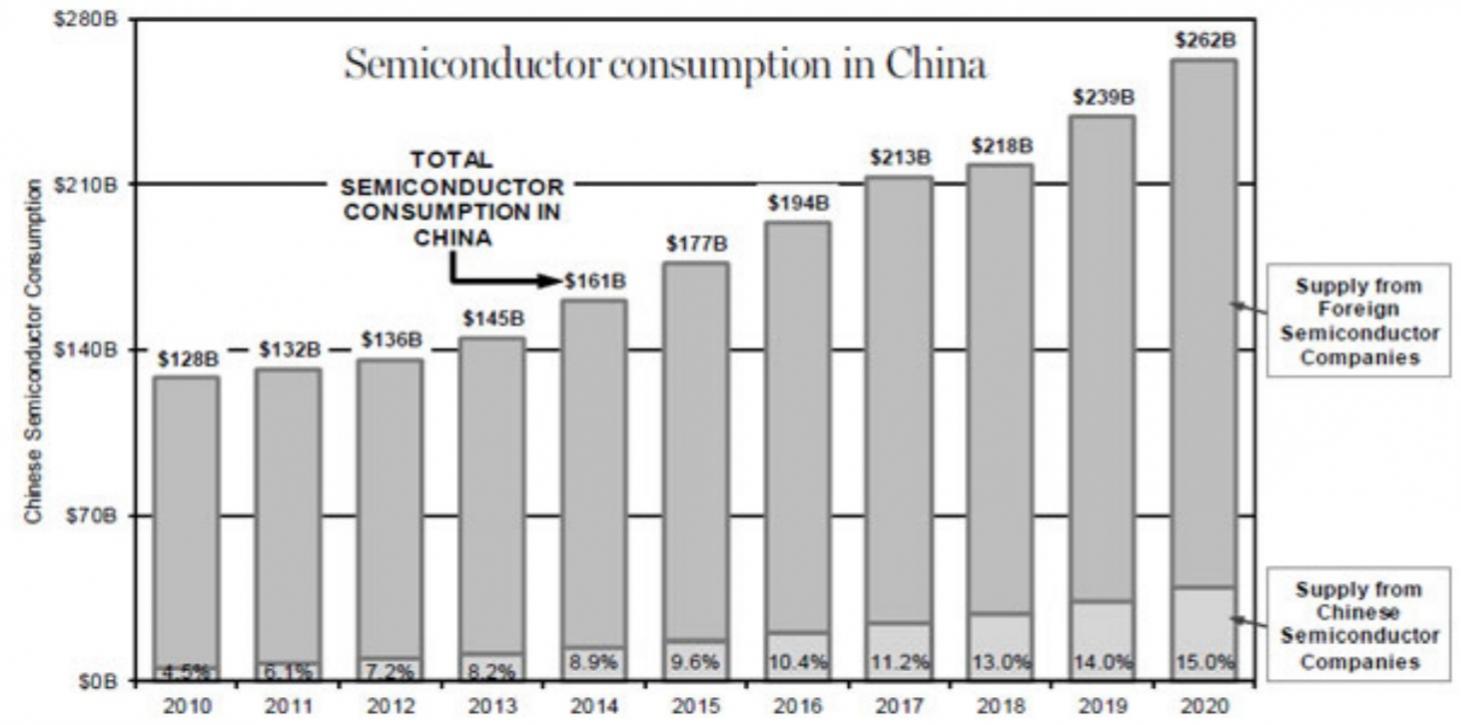

In developed markets such as North America and Europe, vehicle ownership does appear to be in some peril. Ride hailing and car sharing options – along with proliferating public transportation options – are putting pressure on the ownership proposition. In Europe and China, there are active measures to limit the use of cars in traffic-clogged or pollution-choked cities (Europe) or to use licensing limits to make it difficult to obtain a car (China).

In spite of these obstacles, global vehicle sales are forecast to climb at a compound annual rate of 2.5% through at least 2025, rising to more than 112M vehicles annually and on a trajectory to 120M. While the developed world may be bumping up against peak vehicle sales and ownership, the developing world is only just beginning to explore its very own love affair with cars.
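The arithmetic behind that trajectory can be sketched in a few lines. The 2.5% rate and the 112M and 120M volumes come from the forecast above; the function names are illustrative, and the calculation assumes the same compound rate keeps holding past 2025, which the forecast itself does not promise.

```python
import math

def project(volume, rate, years):
    """Compound a starting annual sales volume forward by `years` at `rate`."""
    return volume * (1 + rate) ** years

def years_to_reach(start, target, rate):
    """Years of compound growth needed to climb from `start` to `target`."""
    return math.log(target / start) / math.log(1 + rate)

RATE = 0.025  # 2.5% compound annual growth, from the forecast

# Assumption: growth continues at the same rate once 112M is reached.
years = years_to_reach(112e6, 120e6, RATE)
print(f"{years:.1f} years from 112M to 120M at {RATE:.1%} a year")
```

On those assumptions, the step from 112M to 120M vehicles takes a little under three years of continued growth.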

Data is at the core of preserving vehicle relevance in both worlds. Data is also at the heart of the market disruptions wrought by Uber and Tesla.

Both Uber and Tesla capture transportation-related data. The customer opt-in is more or less a requirement. How do they do it and why do consumers accept it? Because the trade-off is implicit.

Just as Google provides free search in exchange for using your search behavior as a basis for advertising (and for the privilege of manipulating your search results), Uber provides a discounted taxi service in exchange for the data it collects. The data that Uber collects – regarding a substantial proportion of local ad hoc transportation activity – is gold.

By now Uber knows the most popular routes in and around hundreds of major cities around the world. The company knows how to manage the supply and demand of transportation services and also knows a lot about driver and passenger behavior. Uber is in a position to advise municipalities regarding multimodal transportation infrastructure, parking, and traffic and may even be able to advise on congestion mitigation solutions – even though Uber itself is a major contributor to that congestion.

The ocean of data gathered on a daily basis by Tesla is rapidly advancing the company toward the autonomous vehicle future by enabling the company to gather essential information regarding driving behavior and driving conditions. Tesla has also managed to convert the thousands of Tesla drivers using its autopilot feature into human guinea pigs helping Tesla understand when and where humans have to take control of the driving task from the system – and even helping Tesla understand when and why the system fails.

Tesla’s data collection activity is providing the company with advanced electric vehicle insights regarding the number and type of trips taken, the impact of driving behavior on battery performance and the long-term performance of the vehicle batteries. Tesla by now knows far more than any other electric vehicle company about the ideal locations for charging stations and consumer behavior associated with the charging activity.

Just as Uber provides discounted taxi rides in exchange for data, Tesla’s value exchange is in the form of software updates. Tesla will continue to enhance the value of your “ride” as long as you’re still opted into the data sharing proposition – though it’s not as if you have a choice.

Uber and Tesla are creating and delivering value related to the driving experience in exchange for the data they collect. Notably, both companies have eschewed leveraging vehicle data for annoying and intrusive marketing messages.

A quick side note here: Waze is another transportation-centric application that has built its brand on data aggregation. It is worth noting that Waze seems to be losing its way of late, with an increasing emphasis on the very distracting advertising and marketing messages during navigation that Tesla and Uber have skirted.

Some analysts have suggested that the value of vehicle data is such that cars ought to be, or will someday be, free to consumers in exchange for access to the vehicle data. The closest approximation to this value proposition is Hyundai’s WaiveCar program, which allows Hyundai Ioniq drivers to use the car for free as long as they allow WaiveCar to load the car with external advertising signage.

The idea of a free car is no less compelling than the idea of a free smartphone – but neither of these propositions is likely. What is more likely is an exchange of value, in the case of the car, that enhances the safety or efficiency of the driving experience. An example: Tesla just updated its Autopilot software to lengthen the stopping distance associated with the system in order to get back in the good graces of Consumer Reports.

The greater existential problem facing the automotive industry, though, is efficiency. Cars are only used 3%-4% of the time. This fundamental inefficiency is spurring consumers to consider less expensive alternatives, such as Uber, Lyft, Car2Go, etc.
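To put that utilization figure in everyday terms, a back-of-the-envelope conversion helps. The sketch below assumes the 3%-4% share applies to a full 24-hour day; the function name is purely illustrative.

```python
MINUTES_PER_DAY = 24 * 60

def minutes_in_use(utilization):
    """Convert a utilization share (0.03 means 3%) into minutes of use per day."""
    return utilization * MINUTES_PER_DAY

# Assumption: the 3%-4% range is measured against a full 24-hour day.
for share in (0.03, 0.04):
    print(f"{share:.0%} utilization is about {minutes_in_use(share):.0f} minutes per day")
```

Read that way, a typical car is in use for roughly 43 to 58 minutes a day and sits parked for the remaining 23 hours or so.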

Car companies seeking a long-term future beyond selling large volumes of vehicles primarily in emerging markets will do well to shift their focus to leveraging vehicle data to enhance the efficiency of vehicle usage by enabling and supporting networked transportation services such as car sharing, ride hailing and subscription-based “ownership.” These new forms of networked customer engagement will help preserve the relevance of cars in a world of inefficient automobile-centric transportation.