The semiconductor conference season has started out strong, and the premier verification gathering is coming up at the end of this month. SemiWiki bloggers, myself included, will be at the conference covering verification so you don’t have to. Verification is consuming more and more of the design cycle, so I expect this event to be well worth our time. Mentor, of course, is the premier verification company, so first let’s see what they are up to:

Mentor, a Siemens Business, will have experts presenting conference papers and posters, as well as hosting a luncheon, panel, workshop, and tutorial at DVCon 2019, February 25-28, in San Jose, CA. You’ll also find experts on the exhibit floor in booth #1005. Special guest Fram Akiki, VP Electronics Industry at Siemens PLM, will deliver Tuesday’s keynote, “Thriving in the Age of Digitalization”.

KEYNOTE

Thriving in the Age of Digitalization

Tuesday, February 26, 1:30pm-2:30pm

Presented by Fram Akiki, Siemens PLM

Semiconductor technology continues its relentless advance with shrinking geometries and increased capacity stressing design and verification of today’s most demanding System on Chip solutions. While these challenges alone are significant, future challenges are rapidly expanding as market demand for Internet of Things, Automotive electronics and autonomous systems, 5G communication, Artificial Intelligence and Machine Learning technologies – and more – show explosive growth. These advances add significant complications and drive exponential growth in design and verification challenges of these solutions. This exponential development not only expands the market; it sparks new competitive pressures as new companies look to challenge the more established businesses that have long defined the industry. These factors explain why it’s important to have an integrated digitalization strategy to succeed in today’s semiconductor market.

SPONSORED LUNCHEON

A Tale of Two Technologies: ASIC & FPGA Functional Verification Trends

Tuesday, February 26, 12:00pm-1:15pm

The IC/ASIC market in the early to mid-2000s underwent verification process growing pains to address increased design complexity. Similarly, due to increased complexity, we find that today’s FPGA market is being forced to address its processes. What solutions are working? What separates successful projects from less successful ones? And how do you measure success anyway? At this luncheon we will address these and other questions. Please join Mentor, a Siemens Business, as we explore the latest industry trends and what successful projects are doing to address growing complexity.

PANEL

Deep Learning – Reshaping the Industry or Holding to the Status Quo?

Wednesday, February 27, 1:30pm-2:30pm

Participating Companies: Advanced Micro Devices, Babblelabs, Arm, Achronix Semiconductor, NVIDIA

Moderator Jean-Marie Brunet from Mentor, a Siemens Business, will take panelists through various scenarios to determine how AI and deep learning will reshape the semiconductor industry. They will look carefully at the chip design verification landscape to assess whether it’s equipped to handle this new and potentially exciting area. Audience members will be encouraged to bring questions and opinions to ensure a lively and thought-provoking panel session.

WORKSHOP

It’s Been 24 Hours – Should I Kill My Formal Run?

Monday, February 25, 3:30-5:00pm

In this workshop we will show the steps you can take to make an informed decision to forge ahead, or cut your losses and regroup. Specifically, we will describe:

- How you can set yourself up for success before you kick off the run by writing assertions, constraints, and cover properties in a “formal friendly” coding style

- What types of logic in your DUT will likely lead to trouble (in particular, deep state space creators like counters and RAMs), and how to effectively handle them via non-destructive black boxing or remodeling

- Matching the run-time multicore configuration and formal engine specifications to the available compute resources

- Once the job(s) start, how to monitor the formal engines’ “health” in real time

- Confirm the relevance of the logic “pulled in” by your constraints

- Show how a secure mobile app can be employed to monitor formal runs when you are away from your workstation

- Examine whether a run’s behavior is consistent with the expected alignment between the DUT’s structure and the formal engines’ algorithmic strengths

- Leverage all of the above to make the final “continue or start over” decision

TUTORIAL

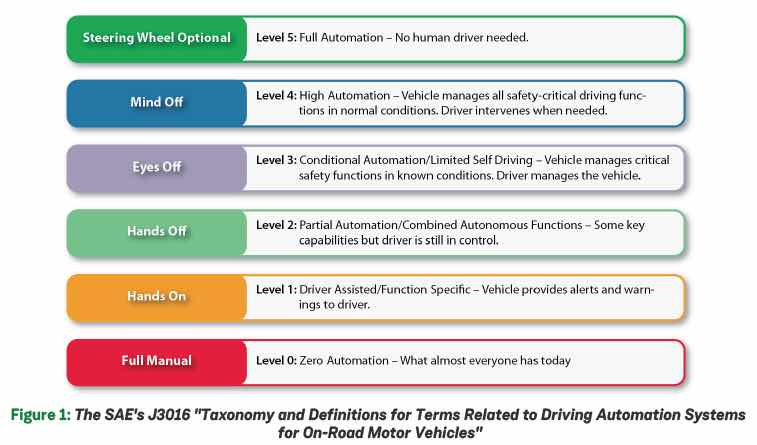

Next Gen System Design and Verification for Transportation

Thursday, February 28, 8:30am-11:30am

In this tutorial, Mentor experts will demonstrate how to use these next-generation IC development practices to build and validate smarter, safer ICs. Specifically, it will look at:

- How to use High-Level Synthesis (HLS) to accelerate the design of smarter ICs

- How to use emulation to provide a digital twin validation platform beyond just the IC

- How to develop functionally safe ICs

CONFERENCE PAPERS

Tuesday, February 26, 3:00pm-4:30pm

5.1 UVM IEEE Shiny Object

5.3 Fun with UVM Sequences – Coding and Debugging

Wednesday, February 27, 10:00am-12:00pm

9.2 A Systematic Take on Addressing Dynamic CDC Verification Challenges

9.3 Using Modal Analysis to Increase Clock Domain Crossing (CDC) Analysis Efficiency and Accuracy

10.1 Unleashing Portable Stimulus Productivity with a PSS Reuse Strategy

10.2 Results Checking Strategies with the Accellera Portable Test & Stimulus Standard

Wednesday, February 27, 3:00pm-4:30pm

11.1 Supply Network Connectivity: An Imperative Part in Low Power Gate-level Verification

12.2 Formal Bug Hunting with “River Fishing” Techniques

EXHIBIT HALL – MENTOR BOOTH #1005

Mentor, a Siemens Business, has pioneered technology to close the design and verification gap to improve productivity and quality of results. Technologies include Catapult® High-Level Synthesis for C-level verification and PowerPro® for power analysis; Questa® for simulation, low-power, VIP, CDC, Formal, and support for UVM and Portable Stimulus; and Veloce® for hardware emulation and system-of-systems verification, unified with the Visualizer™ debug environment.

Join the Mentor booth theater sessions to watch technology experts discuss a broad range of topics including Portable Stimulus, emulation for AI designs, verification signoff with HLS, functional safety, accelerating SoC power analysis, and much more!

Theater sessions include:

- Portable Stimulus from IP to SoC – Achieve More Verification with Questa inFact

- Accelerate SoC Power, Veloce Strato – PowerPro

- Exploring Veloce DFT and Fault Apps

- Mentor Safe IC: ISO 26262 & IEC 61508 Functional Safety

- Adding Determinism to Power in Early RTL Using Metrics

- Scaling Acceleration Productivity beyond Hardware

- Verification Signoff of HLS C++/SystemC Designs

- An Emulation Strategy for AI and ML Designs

- Advanced UVM Debugging

POSTER SESSIONS

Tuesday, February 26, 10:30am-12:00pm

Mentor experts will be presenting the following poster sessions:

- SystemC FMU for Verification of Advanced Driver Assistance Systems

- Transaction Recording Anywhere Anytime

- Multiplier-Adder-Converter Linear Piecewise Approximation for Low Power Graphics Applications

- Verification of Accelerators in System Context

- Introducing your Team to an IDE

- Moving Beyond Assertions: An Innovative Approach to Low-power Checking using UPF Tcl Apps

DVCon is the premier conference for discussion of the functional design and verification of electronic systems. DVCon is sponsored by Accellera Systems Initiative, an independent, not-for-profit organization dedicated to creating design and verification standards required by systems, semiconductor, intellectual property (IP) and electronic design automation (EDA) companies. In response to global interest, in addition to DVCon U.S., Accellera also sponsors events in China, Europe and India. For more information about Accellera, please visit www.accellera.org. For more information about DVCon U.S., please visit www.dvcon.org. Follow DVCon on Facebook at https://www.facebook.com/DvCon or @dvcon_us on Twitter; to comment, please use #dvcon_us.