In the early days of chip design, back in the 1970s, engineers wrote logic equations and then reduced that logic by hand using Karnaugh maps. The first generation of logic synthesis arrived in the early 1980s: it read in a gate-level netlist, performed logic reduction, and output a smaller gate-level netlist. Logic synthesis then added the ability to move a gate-level netlist from one foundry to another. In the late 1980s, logic synthesis allowed RTL designers to write Verilog code and produce a gate-level netlist from it. Ever since, our industry has been searching for a design methodology even more productive than RTL coding, because design entry at a level above RTL could simulate faster, handle larger designs, and reach a wider audience of system-level users who don’t want to be encumbered by the low-level semantics of RTL coding.

High-Level Synthesis (HLS) is now an accepted design paradigm, and engineers at Konica Minolta have been using C++ as their design entry language for several years to design multifunction peripherals, professional digital printers, ultrasound equipment for healthcare, and other products.

The original C++ design flow, built on the Catapult tool from Mentor, a Siemens business, is shown below; its benefits include simulation times 100X faster than RTL:

Even with this kind of C++ flow, there are some extra steps and issues, like:

- Manually inspecting algorithm code takes too much time.

- GCOV code coverage gave no hardware-relevant insight into synthesizable C++ code, and offered no expression, toggle, or functional coverage analysis.

- Manual waivers were required to close coverage.

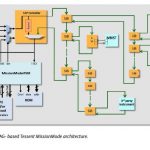

The Catapult family of tools extends beyond C++ synthesis, so more of these tools were added to the flow, highlighted in green below:

Let me explain what some of these boxes are doing in more detail:

- Catapult Design Checker – uncover coding bugs using static and formal approaches.

- Catapult Coverage – hardware-aware C++ coverage analysis.

- Assertion Synthesis – auto-generation of assertions in the RTL.

- SCVerify – creates a smoke test and sets up co-simulation of the C++ and RTL, comparing their outputs for differences.

- Questa CoverCheck – finds unreachable code using formal RTL coverage analysis.

Checking C++ Code

So, this newer flow looks pretty automated, yet there can still be issues. For example, the C++ is untimed while the RTL has a notion of clock cycles, so a mismatch can arise during RTL simulation. This is where Catapult Design Checker comes into play: when run on several Konica Minolta designs, the tool detected some 20 violations of the Array Bound Read (ABR) rule, where an array index is out of bounds. Here’s an ABR violation example:

The fix is to add assertions to the C++ code:

With the C++ assertions in place, any violation shows up during C++ simulation; in addition, Assertion Synthesis adds PSL code, shown below, for use during RTL testing.

Code Coverage

The Catapult Coverage (CCOV) tool understands hardware, while the original GCOV tool doesn’t, so CCOV supports coverage of:

- Statement

- Branch

- Focused Expression

- Index Range

- Toggle

One big question remains, though: how close is C++ coverage to the actual RTL coverage? The SCVerify tool was used on 10 designs to compare statement and branch coverage results, which correlate closely, as shown below, with CCOV averaging 97% statement coverage and 93% branch coverage.

Unreachable Code

Any unreachable code is an issue, so the Questa CoverCheck tool helps identify it and then selectively remove it from the Unified Coverage Database (UCDB). Here’s what an engineer sees after running CoverCheck; the items shown in yellow are unreachable:

Once designers see the unreachable code, they decide whether it is a real bug or can be waived; if an element turns out to be reachable, they create a new test for it.

Closing Coverage

During high-level verification, the LSI engineers work toward coverage goals and can ask the algorithm developers to add more tests. In the future, the algorithm developers could use CCOV to reach code coverage themselves, while the LSI engineers use the remaining Catapult tools to reach RTL closure.

Conclusions

Takashi Kawabe’s team at Konica Minolta has been successfully using Catapult tools in a C++ flow for years, bringing products to market more rapidly than with traditional RTL entry methods. Using the full suite of Catapult tools, they simulate 100X faster in C++ than at the RTL level, and have shown that C++-level signoff is now possible.

The design world has come a long way since the 1970s, and C++-level design and verification is here to stay. Kawabe-san has authored an 11-page white paper on this topic, and you can download it online here.
