A lot of the attention in intelligent systems goes to object detection in still or video images, but there’s another very active area: smart audio. Amazon and Google smart speakers may be the best-known applications, but there are more obvious (and perhaps less novelty-driven) applications in enhancing the hearing devices we already use, such as headphones, earpods and hearing aids, and in adding voice control as a new dimension to human/machine interfaces.

I talked to Jim Steele, VP of technology strategy at Knowles, a company that may not be very familiar to my readers. Knowles has been working in the audio space for around 70 years and is now addressing mobile, hearable and IoT markets. They provide, for example, microphones and smart microphones, audio processors, and components for hearing aids and earpods. Their parts are inside the Amazon Echo, cellphones and many hearing aids. It’s not surprising that they claim “Knowles inside” for many audio experiences.

About 4 years ago, Knowles acquired Audience, a company that specialized in mobile voice and audio processing. Audience technology was already used in a number of brand-name mobile phones, and the company was apparently the first proponent of using multiple microphones together with auditory intelligence (I would guess beamforming, acoustic echo cancellation [AEC], etc.) to suppress background interference in noisy environments. Combining Knowles and Audience technologies provided a pretty rich set of capabilities, which they recently spun into their IA8201 chip, the latest product in their AISonic family of audio edge processors. This is a variant on their IA8508 core, right-sized especially for ultra-low power always-on applications with trigger-word detection.

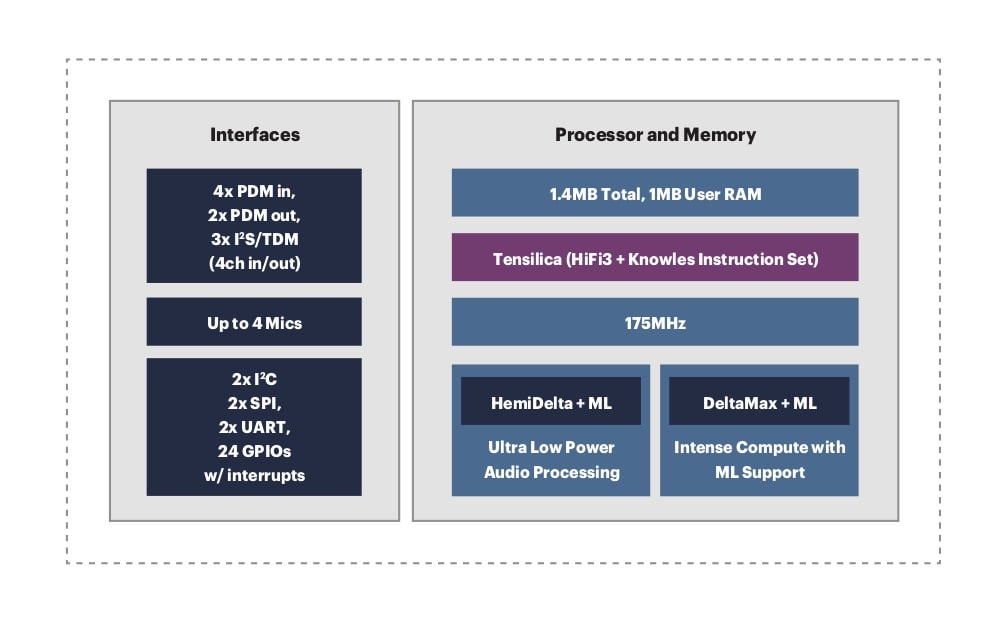

The design (block diagram pictured above) is based on three Tensilica cores, adapted with Knowles customization. The DeltaMax (DMX) core does the heavy lifting in beamforming, AEC, barge-in (you want to give a command while music is playing), noise suppression and multiple other functions. The HemiDelta handles ultra-low power wake-word detection and the Tensilica HiFi 3 core provides audio and voice post processing for leveling, equalization and other functions.
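
To make the barge-in idea a little more concrete: the device knows what music it is playing, so it can estimate how that music shows up at the microphone and subtract it, leaving your command. Below is a minimal sketch of that principle using a textbook normalized LMS (NLMS) adaptive filter. This is an illustration only, not Knowles' implementation, which the company hasn't described at this level; the function name, tap count and step size are all my own assumptions.

```python
def nlms_echo_cancel(reference, mic, num_taps=8, step=0.5, eps=1e-8):
    """Subtract an adaptive estimate of the loudspeaker echo from the mic signal.

    reference: samples sent to the loudspeaker (the music being played)
    mic:       samples picked up by the microphone (echo plus any speech)
    Returns (residual, weights); residual is the mic signal with the
    estimated echo removed. All parameter defaults are illustrative.
    """
    weights = [0.0] * num_taps   # current estimate of the echo path
    history = [0.0] * num_taps   # recent reference samples, newest first
    residual = []
    for ref_sample, mic_sample in zip(reference, mic):
        history = [ref_sample] + history[:-1]
        echo_estimate = sum(w * x for w, x in zip(weights, history))
        error = mic_sample - echo_estimate
        # Normalize the update by the recent reference energy (the "N" in NLMS).
        norm = sum(x * x for x in history) + eps
        weights = [w + step * error * x / norm
                   for w, x in zip(weights, history)]
        residual.append(error)
    return residual, weights
```

Once the filter has converged on the echo path, whatever remains in the residual is (ideally) the talker rather than the music. A production canceller also has to cope with long room echoes and with the talker speaking while the filter is still adapting, which this sketch ignores.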

There’s a lot of technology here packed into a small space. This device supports up to 4 microphones which, together with beamforming and other features, should provide excellent speaker discrimination in most environments. This will be a real boon for the hard of hearing (yes, there are already hearing aids on the market with multiple microphones in each device).
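
For readers who haven't met beamforming before, here is a minimal sketch of the simplest variant, delay-and-sum, for a 4-microphone line array. The idea is that sound from the direction you care about reaches each microphone at a slightly different time; undo those delays and average, and that direction adds up coherently while sound from elsewhere partly cancels. The array spacing, sample rate and function names are assumptions for illustration; the beamformer Knowles actually uses is not described here and is almost certainly more sophisticated.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value at room temperature


def steering_delays(num_mics, spacing_m, angle_deg, sample_rate):
    """Arrival delay at each mic, in whole samples, for a distant source.

    Assumes a uniform linear array; angle_deg is measured from broadside
    (0 = straight ahead). Delays are shifted so the earliest mic is 0.
    """
    delays = []
    for m in range(num_mics):
        tau = m * spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND
        delays.append(round(tau * sample_rate))
    earliest = min(delays)
    return [d - earliest for d in delays]


def delay_and_sum(channels, delays):
    """Time-align each channel by its arrival delay, then average them."""
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
        for n in range(length)
    ]
```

With a hypothetical 5 cm spacing at 16 kHz, `steering_delays(4, 0.05, 30, 16000)` gives `[0, 1, 2, 3]`: each successive microphone hears a source at 30 degrees one sample later. Rounding to whole samples keeps the sketch short; real designs use fractional delays or work in the frequency domain.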

Trigger-word detection can run on as little as 1-2mW, allowing for extended use between charges. That is certainly useful for a battery-operated smart speaker, but also for using your earbuds to make a call through a Bluetooth connection to your phone. Just say “Hey Siri, call my office”. Barge-in lets the command through, the trigger word is recognized and microphones in the earbuds pick up your voice through bone conduction (sounds creepy but that’s the way it works).
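
To put 1-2mW in perspective, here is a quick back-of-the-envelope calculation of how long a battery could keep an always-on listener running. The battery figures are my own assumption (a hypothetical 50mAh earbud cell at a nominal 3.7V), and the sum ignores everything else drawing power, so treat the result as an upper bound on listening time rather than a product spec.

```python
def standby_hours(capacity_mah, voltage_v, load_mw):
    """Hours a battery lasts at a constant load.

    Ignores regulator losses, self-discharge and every other consumer
    in the device, so this is an optimistic upper bound.
    """
    if load_mw <= 0:
        raise ValueError("load_mw must be positive")
    energy_mwh = capacity_mah * voltage_v  # mAh x V = mWh
    return energy_mwh / load_mw


# Hypothetical earbud cell: 50 mAh at 3.7 V (assumed, not a Knowles figure).
for load_mw in (1.0, 2.0):
    hours = standby_hours(50, 3.7, load_mw)
    print(f"{load_mw} mW -> {hours:.1f} hours")
```

Under those assumptions the wake-word listener alone would run for roughly 90 to 185 hours, which is why it can stay on all the time without dominating the power budget.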

Voice recognition for command processing runs on the DMX core, accelerated through 16-way SIMD with an instruction set optimized for machine learning. Knowles can support wake-word training for OEMs, and user-based training is also supported for command words and phrases.

Jim added that applications are not just about voice support. A growing area of interest for these products is contextual awareness: listening for significant sounds like sirens (while you’re driving, when maybe you need to pull over), a baby crying, a dog barking or glass breaking (while you’re not at home). All of these can provide important alert signals. Of course you don’t want to be bothered with false alarms. Jim said that false accepts are down to 1 in 100 (for wake-word also) and can improve with training. Also, for alarm-type signals, OEMs might send a video snippet with the alert to help quickly determine if there is cause for further action.

Jim sees a lot of applications for this device: hearables, home safety and IoT voice-control applications in appliances, TVs and other home automation devices. There is lots of opportunity to get away from annoying control panels or phone apps and move right to the way we want to control these devices, through direct commands. You can learn more HERE.
