One of the interesting tidbits of information to come from Intel’s October earnings call was that Brazil, a country of nearly 200M people, has moved up to the #3 position in terms of PC unit sales. This was a shock to most people and, as usual, was brushed aside by those unfamiliar with what is happening in the emerging markets (i.e., the countries keeping the world out of a true depression). A few days ago I saw an article about Brazil’s economy posted on one of my favorite websites, Carpe Diem. The accompanying chart and the article it comes from should put things in perspective (see Brazil to Surpass U.K. in 2011 to Be No. 6 Economy). Brazil’s economy (GDP) has increased 500% since 2002 and is expected to grow another 40% in the next 4 years. Does this not look like the Moore’s Law parabolic curve with which we are all familiar?
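As a rough check on that forecast, assume the 40% gain compounds evenly over the four years. It then works out to a bit under 9% a year:

$$
(1 + r)^4 = 1.40 \quad\Rightarrow\quad r = 1.40^{1/4} - 1 \approx 0.088
$$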

For the past year, Intel has reiterated on every conference call and at every analyst meeting that it conservatively saw an 11% growth rate for the PC market over the next 4 years. The Wall St. analysts scoffed that Intel was overly optimistic and used data from Gartner and IDC to back them up. Gartner and IDC were, in Intel’s words, not able to accurately count sell-through in the emerging markets. For those of you not familiar with the relationship between Intel and Gartner/IDC, let’s just say Intel NEVER shares processor data with analysts. It is a guessing game at best, so Gartner and IDC put together forecasts that are backward-looking and biased toward the US and Western Europe. If these two regions are flat while the emerging markets are growing, then you get the picture.

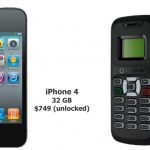

The result of all this is that the understanding of the worldwide PC and Apple markets is skewed toward what sits on analysts’ desks and not what is sold in the hinterlands. Intel knows better than anyone that there are really three distinct markets in the world. There is the Apple growth story playing out in the US and Western Europe, cannibalizing the consumer/retail PC market at a fast clip. Then there is the corporate market, which is tied to the Wintel legacy and is buying PCs at an awesome rate. How do we know? Intel’s strong revenue and gross margins tell us. Finally, there is the emerging market, which runs almost entirely on Intel or AMD processors with some flavor of Windows (real or imaginary). For this market, the iPad and Mac notebooks are too expensive. Given the growth rate of the emerging market economies, the PC will have a strong future.

Considering that Brazil is just surpassing the UK in GDP while having 3 times the population of the UK (so its per-capita income is still roughly a third of the UK’s), one can see several trends. First, income is rising to the point where PCs are affordable. Second, much more demand will come on stream over the next few years from younger countries with rising salaries. Finally, if, as one would expect, LCD prices continue to fall, DVD drives are discarded, and SSDs finally enter the mix as a cheaper alternative to HDDs in the next 24 months, then there is further room for notebooks to move lower in price. A $300 notebook today that trends to $200 and below may result in a new parabolic demand curve. Moore’s Law shows up again in another unexpected place.