Evolving opportunities call for new and improved solutions to handle data, bandwidth and power. Moving forward, what will be the high-growth applications that drive product and technology innovation? The CAGRs for smartphone and data center continue to be very strong and healthy.

Continue reading “FinFET For Next-Gen Mobile and High-Performance Computing!”

Double Digit Growth and 10nm for TSMC in 2016!

Exciting times in Taiwan last week… I met with people from the Taiwanese version of Wall Street. They mostly cover the local semiconductor scene but since that includes TSMC and Mediatek they are interested in the global semiconductor market as well. They also have an insider’s view of the China semiconductor industry which is very complicated.

The big news of course is that TSMC is predicting double digit revenue growth and 10nm is on schedule for production in 2016. What that really means is that Apple will use TSMC 16FFC exclusively for the A10 (iPhone 7) and 10nm will be ready for the A10x. Morris Chang of course predicted this last year when he said TSMC would regain FinFET market leadership in 2016. This also means that TSMC will officially have the process lead in 2016 since Intel has pushed out 10nm until 2017. So congratulations to the hard working people at TSMC, absolutely!

The other big news is that 7nm is also on track. It will be déjà vu 20nm to 16nm for TSMC where 10nm will be a very quick transitional node right into 7nm. 20nm and 16nm used the same fabs which is why 16nm ramped very quickly, one year after 20nm. 10nm and 7nm will also share the same fabs so yes we will see 7nm in 2017 and that means TSMC 7nm will again have the process lead over Intel 10nm. Exciting times for the fabless semiconductor ecosystem!

Given the quick transition of 10nm to 7nm, quite a few companies will skip 10nm and go right to 7nm. Xilinx has already publicly stated this, I’m sure there will be more to follow. SoC companies like Apple, QCOM, and Mediatek that do major product releases every year will certainly use 10nm. I would guess AMD will use 10nm as well to get a jump on Intel. That would really be interesting if AMD released 10nm and 7nm CPUs before Intel. The server market would certainly welcome the competition.

The other interesting news is that Chipworks confirmed that the A9x in the iPad Pro is manufactured using TSMC 16FF+. I have read the reviews of the iPad Pro and have found them quite funny. One very young “Senior Editor” from Engadget, who has zero semiconductor experience and doesn’t even own an iPad Pro, made this ridiculous statement:

“It’s often vaunted that ARM-based chips are more power efficient than those based on Intel’s x86. That’s just not true. ARM and x86 are simply instruction sets (RISC and CISC, respectively). There’s nothing about either set that makes one or the other more efficient.”

I brought my iPad Pro with me to Taiwan and must say it is a very nice tablet. When it first arrived I was a little shocked at how big it actually was but the performance, display, and battery life are absolutely fabulous! I’m comparing it to a Dell Core i7 based laptop and an iPad 2 of course so the bar is pretty low. But it also runs circles around my iPhone 6. Given the size of the A9x (147mm²) versus the A8x (128mm²) I’m wondering if it will be used for the next iPad Air. If so, that would be the tablet of the year for sure.

And can you believe our own Oakland Warriors are 20-0 to start the season which is an NBA record!?!?!?!? GO WARRIORS!!!!!

When Talking About IoT, Don’t Forget Memory

Memory is a big enough topic that it has its own conference, Memcon, which recently took place in October. While I was there covering the event for SemiWiki.com I went to the TSMC talk on memory technologies for the IoT market. Tom Quan, Director of the Open Innovation Platform (OIP) at TSMC was giving the talk. IoT definitely has special needs for memory because of the need for low power, data persistence and security.

Tom Quan started the talk with an informative view of the IoT market. I have heard a lot of IoT overviews, but I listened with a keen ear to learn how TSMC views this market. Since 1991 there have been three big growth drivers for the semiconductor industry. IoT promises to be the fourth.

The first was personal computing which saw 7X growth from 1991 to 2000. Next came mobile handsets demonstrating 9X growth from 1997 to 2007. Last we have smart mobile computing, which lumps together mobile computing, internet, mobile communications, and sensing. This segment grew by 12X from 2007 to 2014. Clearly the IoT is the next big thing and will likely continue this accelerating trend. The conclusion is that IoT will be the next big growth driver for the semiconductor industry. The sum of PCs, smartphones, tablets and IoT is expected to approach 20 billion units by 2018, compared to roughly 8 billion total today. The last year for which there is hard data was 2013, with ~5 billion units.
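To compare these growth multiples on an equal footing it helps to annualize them. The sketch below converts each multiple and period quoted above into a compound annual growth rate. The `cagr` helper and the choice of CAGR as the yardstick are my own, not something from Tom's slides.

```python
def cagr(multiple: float, start_year: int, end_year: int) -> float:
    """Compound annual growth rate implied by a total growth multiple."""
    years = end_year - start_year
    return multiple ** (1.0 / years) - 1.0

# Growth multiples and periods as quoted in the talk.
drivers = {
    "personal computing": (7, 1991, 2000),
    "mobile handsets": (9, 1997, 2007),
    "smart mobile computing": (12, 2007, 2014),
}

rates = {name: cagr(*args) for name, args in drivers.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.1%} per year")
```

Annualized this way, the first two waves come out at roughly 24 to 25 percent per year and the third at a bit over 40 percent, which is the acceleration the talk was pointing to.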

IoT is really an extension of mobile computing. Tom’s talk broke it down into the four umbrella categories of smart wearables, smart cars, smart home and smart city. It will consist of smart devices on smart things. Think of health sensors, gesture and proximity, chemical sensors, positional sensors and more. So where do today’s technologies stand as far as meeting the requirements of the IoT?

Probably every metric for design will be stressed by IoT. Unit volumes will go from the single-digit billions of the PC era to hundreds of billions in the IoT era ahead. Operating times for devices will need to go from hours to years. This in turn demands that the hundreds of watts that PCs used transform into nanowatts for edge sensors and the like. Additionally, new technologies, materials and architectures will need to be developed.

To make these transitions everything will need to be moved forward technologically. The slide below shows how this might work for the wearables segment

A huge part of this will involve embedded memory. Right now SRAM is used primarily for volatile memory. There are a number of solutions for non-volatile memory (NVM), including several future technologies that are very promising. The two major axes for NVM are density (size) and endurance (re-writability). Small sizes that do not need high endurance are things like configuration bits, analog trim info, and calibration data. They work well with one time programmable (OTP) approaches.

Even some boot code can be stored in OTP when it is configured to simulate re-writable memory. However, the number of re-writes will be limited as the non-reusable bit cells are utilized.
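As a rough illustration of why the re-write count is limited, here is a toy model in which every "rewrite" burns a fresh one-time-programmable slot and a read returns the most recently programmed one. The class name, slot count and lookup scheme are hypothetical. This is a sketch of the general idea, not how any particular OTP IP implements it.

```python
class EmulatedRewritableOTP:
    """Toy model: each 'rewrite' burns a fresh one-time-programmable slot."""

    def __init__(self, num_slots: int):
        self._slots = [None] * num_slots  # None means the slot is unprogrammed
        self._next = 0                    # index of the next unused slot

    def write(self, value: bytes) -> None:
        if self._next >= len(self._slots):
            raise RuntimeError("OTP exhausted: no unprogrammed slots left")
        self._slots[self._next] = value   # programming is irreversible
        self._next += 1

    def read(self):
        # The most recently programmed slot holds the current value.
        return self._slots[self._next - 1] if self._next else None

    @property
    def rewrites_left(self) -> int:
        return len(self._slots) - self._next


boot = EmulatedRewritableOTP(num_slots=3)
boot.write(b"v1")
boot.write(b"v2")
```

After two writes into three slots only one update remains, and a fourth write would fail. That is the price of simulating re-writability on cells that can only be programmed once.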

Flash and EEPROM are good for applications that require larger sizes and more re-write endurance. But they come with the penalty of requiring additional layers or special processes.

Tom suggested that magneto-resistive RAM (MRAM) is one technology that shows some promise. It harkens back to the old core memories, but is scaled to nanometer size. Of the two original approaches for MRAM only the spin-transfer torque (STT) technology has been proven to scale well. There are two competing approaches for this: In-Plane and Perpendicular. MRAM using STT is very fast and uses low power. So it looks very promising as a NVM replacement that can also be used for SRAM replacement.

Another area of promise for future NVM solutions is resistive RAM or RRAM which uses the memristor effect in solid state dielectrics. There are several flavors of this technology being researched. But RRAM is not as far along commercially as MRAM is. However, this is an active area of research and the frequently used high-k dielectric HfO2 material has been discovered to work as RRAM.

Advances in NVM will have a huge impact on IoT. Power savings from having readily available persistent storage will open up new application areas. Think of not having to save system RAM when entering sleep. To further save power TSMC continues to add ultra low power processes to its existing process nodes. IoT will be driven in large part by technologies that allow edge node devices to be power sipping.

For more info on TSMC’s Open Innovation Platform look here.

Are FinFETs too Expensive for Mainstream Chips?

One of the most common things I hear now is that the majority of the fabless semiconductor business will stay at 28nm due to the high cost of FinFETs. I wholeheartedly disagree, mainly because I have been hearing that for many years and it has yet to be proven true. The same was said about 40nm since 28nm HKMG was more expensive, which is one of the reasons why 28nm poly/SiON was introduced first.

Continue reading “Are FinFETs too Expensive for Mainstream Chips?”

Who Will Get the Next Bite of Apple Chip Business?

Recent reports have Intel displacing Qualcomm as the modem supplier and TSMC as the foundry for the next Apple A10 SoC. That is if you call this a credible report: Continue reading “Who Will Get the Next Bite of Apple Chip Business?”

Wafer-Level Chip-Scale Packaging Technology Challenges and Solutions

At the recent TSMC OIP symposium, Bill Acito from Cadence and Chin-her Chien from TSMC provided an insightful presentation on their recent collaboration, to support TSMC’s Integrated FanOut (InFO) packaging solution. The chip and package implementation environments remain quite separate. The issues uncovered in bridging that gap were subtle – the approaches that Cadence described to tackle these issues are another example of the productive alliance between TSMC and their EDA partners.

WLCSP Background

Wafer-level chip-scale packaging was introduced in the late 1990’s, and has evolved to provide an extremely high-volume, low-cost solution.

Wafer fabrication processing is used to add solder bumps to the die top surface at a pitch compatible with direct printed circuit board assembly – no additional substrate or interposer is used. A top-level thick metal redistribution layer is used to connect from pads at the die periphery to bump locations. The common terminology for this pattern is a “fan-in design”, as the RDL connections are directed internally from pads to the bump array.

[Ref: “WLCSP”, Freescale Application Note AN3846]

WLCSP surface-mount assembly is now a well-established technology – yet, the fragility of the tested-good silicon die during the subsequent dicing, (wafer-level or tape reel) pick, place, and PCB assembly steps remains a concern.

To protect the die, a backside epoxy can be applied prior to dicing. To further enhance post-assembly attach strength and reliability, an underfill resin with an appropriate coefficient of thermal expansion is injected.

A unique process can be used to provide further protection of the backside and also the four sides of the die prior to assembly – an “encapsulated” WLCSP. This process involves separating and re-placing the die on a (300mm) wafer, which will be used as a temporary carrier.

The development of this encapsulation process has also enabled a new WLCSP offering, namely a “fan-out” pad-to-bump topology.

Chip technology scaling has enabled tighter pad pitch and higher I/O counts, which necessitate a “fan-out” design style to match the less aggressively-scaled PCB pad technology. TSMC’s new InFO design enables a greater diversity of bump patterns. Indeed, it offers flexibility comparable to conventional (non-WLCSP) packaging.

Briefly, the fan-out technology starts by adding an adhesive layer to the wafer carrier. Die are (extremely accurately!) placed on this layer at a precise separation, face-down to protect the active top die surface. A molding compound is applied across the die backsides, then cured. The adhesive layer and original wafer are detached, resulting in a “reconstituted” wafer of fully-encapsulated die embedded in the compound:

(Source: TSMC. Molding between die highlighted in blue. WLCSP fan-out wiring to bumps extends outside the die area.)

This new structure can then be subjected to “conventional” wafer fabrication steps to complete the package:

- addition of dielectric and metal layer(s) to the die top surface

- patterning of metals and (through-molding) vias

- addition of Under Bump Metal, or UBM (optional, if the final RDL layer can be used instead)

- final dielectric and bump patterning

- dicing, with molding in place on all four sides and back

- back-grind to thin the final package

As illustrated in the figure, multi-chip and multi-layer wiring options are supported.

InFO design and verification Cadence tool flow

Cadence described the tool enhancements developed to support the InFO package. The key issue(s) arose from the “chip-like” reconstituted wafer process fabrication.

For InFO physical design, TSMC provides design rules in the familiar verification infrastructure for chip design, using a tool such as Cadence’s PVS. As an example, there are metal fill and metal density requirements associated with the fan-out metal layer(s) that are akin to existing chip design rules, a natural for PVS. (After the final package is backside-thinned, warpage is a major concern, requiring close attention to metal densities.)

Yet, InFO design is undertaken by package designers familiar with tools such as Cadence’s Allegro Package Designer or SiP Layout, not Virtuoso. As a result, the typical data representation associated with package design (Gerber 274X) needs to be replaced with GDS-II.

Continuous arcs/circles and any-angle routing need to be “vectorized” in GDS-II streamout from the packaging tools, in such a manner to be acceptable to the DRC runset – e.g., “no tiny DRC’s after vectorization”.
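To give a feel for what “vectorizing” involves, here is a minimal sketch that replaces a circle with a polygon whose chords stay within a chosen tolerance of the true arc, then snaps the vertices to a layout grid. The function names, the tolerance and the grid value are all illustrative assumptions. This is not Cadence’s algorithm, and a production streamout also has to avoid creating tiny edges when it snaps.

```python
import math

def segments_for_circle(radius: float, tol: float) -> int:
    """Fewest polygon sides so each chord deviates from the arc by at most tol."""
    if tol >= radius:
        return 3
    # Sagitta of a chord spanning angle theta is r * (1 - cos(theta / 2)).
    max_theta = 2.0 * math.acos(1.0 - tol / radius)
    return max(3, math.ceil(2.0 * math.pi / max_theta))

def vectorize_circle(cx, cy, radius, tol, grid=0.001):
    """Return polygon vertices approximating a circle, snapped to a layout grid."""
    n = segments_for_circle(radius, tol)

    def snap(v):
        return round(v / grid) * grid

    return [
        (snap(cx + radius * math.cos(2.0 * math.pi * k / n)),
         snap(cy + radius * math.sin(2.0 * math.pi * k / n)))
        for k in range(n)
    ]

# Hypothetical pad: radius 100 units, chords within 0.1 units of the arc.
pad = vectorize_circle(0.0, 0.0, radius=100.0, tol=0.1)
```

A tighter tolerance gives a smoother outline but more vertices, and more opportunities for small off-grid artifacts that the DRC runset may flag.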

Viewing of PVS-generated DRC errors needs to be integrated into the package design tool environment.

Additionally, algorithms are needed to perforate wide metal into meshes. Routing algorithms for InFO were enhanced. Fan-out bump placements (“ballout”) should be optimized during co-design, both for density and to minimize the number of RDL layers required from the chip pinout.

For electrical analysis of the final design, integration of the InFO data with extraction, signal integrity, and power integrity tools (such as Cadence’s Sigrity) is required.

Cadence will be releasing an InFO design kit in partnership with TSMC, integrated with their APD and SiP products, to enable package designers to work seamlessly with (“chip design-like”) InFO WLCSP data. The bridging of these two traditionally separate domains is pretty exciting stuff.

-chipguy

Cadence Outlines Automotive Solutions at TSMC OIP Event

I used to joke that my first car could survive a nuclear war. It was a 1971 Volvo sedan (142) that was EMP proof because it had absolutely no semiconductors in the ignition system, just points, condensers and a coil. If you go back to the Model T in 1915 you will see that the “on-board electronics” were not that different. However, today’s cars have an ever increasing amount of semiconductor content. Let’s just hope there are no EMP’s anytime soon.

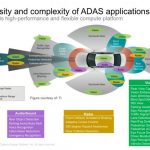

Automotive electronics systems now have enormous requirements for computation, bandwidth and reliability. I attended a talk by Cadence’s Charles Qi at the recent TSMC Open Innovation Platform (OIP) Forum where he outlined the major trends in this area.

By comparison to current needs, the previous bandwidth requirements were quite low. Cars have used simple networks like CAN, but increasing complexity is leading to the adoption of Ethernet standards specifically adapted to automotive needs and environments. The diagram below highlights the kinds and numbers of systems that are going to be built into cars.

As you can tell, there is audio, visual and sensor data that needs to be handled. There are strict requirements for timely delivery of certain data in automotive systems. The umbrella term for this is AVB, or Audio Video Bridging. It is broken down into a set of IEEE standards within 802.1BA. These standards address time synchronization, traffic shaping and priority queuing. Below is a diagram that lists the applicable IEEE standards for automotive communication.

Ethernet offers many advantages. It can run over twisted pair, it can carry power and it is an established standard that will continue to provide legacy support for many years. Charles outlined Cadence’s IP offerings which address the evolving Ethernet communication needs in automotive applications. Here is his slide that summarizes Cadence’s solution.

Many of the systems in the first diagram require significant processing power. Today’s systems already perform Advanced Driver Assistance (ADAS), and will be pushed even further when self driving cars arrive in earnest. Already radar based features include front collision avoidance braking, adaptive cruise control and rear collision detection. Additionally, there are vision and audio/sound based systems that will require computing power.

Cadence is positioning its Tensilica IP as a solution for automotive processing. They have a power sipping architecture that can be fine tuned to specific applications; and the development tools work to create custom compilers and libraries for many of the coding needs encountered in developing systems for emerging automotive standards.

Tensilica also offers customizable DSP cores to further accelerate automotive product development. Their IVP DSP’s are well suited for ADAS development. The IVP DSP offers VLIW and 64 way SIMD. Coming in under 300mW, they offer impressive performance with minimal power draw. One of the most interesting slides was on the problem of pedestrian detection. ADAS systems will need to do this efficiently and reliably, even if the person only shows in as small an area as 64×128 pixels.

Charles also spoke about ISO26262; we will be hearing a lot more about this in the coming years. It is a comprehensive standard for ensuring functional safety starting at the requirements phase and going through implementation. Unlike phones and fitness computers, automotive electronics play a role in life or death situations. Everything designed for automotive applications will need to comply with ISO26262. Here is a slide from Charles’ talk that gives an overview.

Well, I am still waiting for my self driving car. However, it is clear that automobiles will be competing on a lot more than looks and horsepower. In the meantime I look forward to the increased safety and possibly easier driving that things like front collision avoidance braking and adaptive cruise control will offer. For more information on Cadence IP for automotive applications look here at their website.

Xilinx Skips 10nm

At TSMC’s OIP Symposium recently, Xilinx announced that they would not be building products at the 10nm node. I say “announced” since I was hearing it for the first time, but maybe I just missed it before. Xilinx would go straight from the 16FF+ arrays that they have announced but not started shipping to the 7FF process that TSMC currently have scheduled for risk production in Q1 of 2017. TSMC already have yielding SRAM in 7nm and stated that everything is currently on-track.

See also TSMC OIP: What To Do With 20,000 Wafers Per Day although I screwed up the math and it is really over 50,000

I think that there are two reasons for doing this. The first is that TSMC is pumping out nodes very fast. Risk production for 10FF is Q4 of 2015 (which starts next week) and so there are only 6 quarters between 10FF and 7FF if all the schedules hold. I think that makes it hard for Xilinx to get two whole families designed with some of the design work going on in parallel. It costs about $1B to create a whole family of FPGAs in a node. On the business side of things, 10nm would be a short-lived node. The leading edge customers would move to 7nm as soon as it was available so the amount of production business to generate the revenue to pay for it all and make a profit might well be too limited.

I contacted Xilinx and they pretty much confirmed my guess: The simple reason is that our development timelines & product cadence lined up better with 7nm introduction. TSMC has a very competitive process technology and world class foundry services and their timeline for their 7nm introduction lines up well with our needs and plans.

There have been rumors that Intel might skip 10nm too, although the recent rumors are that they will tape out a new 10nm Core M processor early next year. I don’t know of anything much that Intel has said about 7nm, either from a technology or a timing point of view.

See also Intel to Skip 10nm to Stay Ahead of TSMC and Samsung?

See also Intel 10nm delay confirmed by Tick Tock arrhythmia leak-“The Missing Tick”

That brings up the second big reason. All processes with the same number are not the same. TSMC’s 16FF process has the same metal stack (BEOL) as their 20nm process. It is their first FinFET process and so presumably they didn’t want to change too many things at once. Interestingly, Intel made the same decision the other way around at 22nm, where they had their first FinFET process (they call it TriGate) but kept the metal pitch at 80nm so it could still be single patterned. The two derivative TSMC 16nm processes, 16FF+ and 16FFC, have the same design rules and so the same 20nm metal. This limits the amount of scaling from 20nm to 16nm. There is a big difference in speed and power but not so much in density.

See also Scotten Jones’s tables in Who Will Lead at 10nm?

At 10nm Intel has a gate pitch of 55nm and a metal 1 pitch of 38nm (multiplied together gives 2101nm² although I get 2090nm²). TSMC at 10nm has a gate pitch of 70nm and a metal 1 pitch of 46nm, for an area of 3220nm². But perhaps more tellingly, Intel’s 14nm has a gate pitch of 70nm (same as TSMC’s 10nm) and a metal 1 pitch of 52nm, only a little looser than TSMC’s 10nm pitch of 46nm. So another reason Xilinx might skip 10nm is that it would not look good against Altera’s products in 14nm.
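The arithmetic is easy to check. The snippet below multiplies the gate and metal 1 pitches quoted above as a crude density proxy. The dictionary layout and names are mine, and pitch product is only a first-order stand-in for real cell area.

```python
# Pitches in nm as quoted in the text: (gate pitch, metal 1 pitch).
pitches_nm = {
    "Intel 10nm": (55, 38),
    "TSMC 10nm": (70, 46),
    "Intel 14nm": (70, 52),
}

areas_nm2 = {node: gate * m1 for node, (gate, m1) in pitches_nm.items()}
for node, area in areas_nm2.items():
    print(f"{node}: {area} nm^2")

# How much larger the TSMC 10nm pitch product is than Intel's 10nm.
ratio = areas_nm2["TSMC 10nm"] / areas_nm2["Intel 10nm"]
print(f"TSMC 10nm / Intel 10nm: {ratio:.2f}x")
```

The products come out at 2090, 3220 and 3640nm², so by this measure TSMC 10nm is about 1.5 times Intel 10nm and only around 12 percent tighter than Intel 14nm.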

TSMC say that 10nm is about 50% smaller than their 16nm processes. TSMC said that 7FF will be 45% of the area of 10FF. Without any information to go on, it is still clear that Intel’s 7nm will be higher density than TSMC’s. The TSMC 7nm process will probably be close to the Intel 10nm process. This is not necessarily a criticism of anyone. Intel is totally focused on bringing out server microprocessors and can read the riot act to all their designers as to how restrictive their methodology has to be and the designers have to suck it up. TSMC has to accept a much wider range of designs from a broad group of customers that they do not control in the same way.

Intel: you will do designs this way

Intel designers: but…

Intel: you will

Intel designers: OK

TSMC: you will do designs this way

Apple engineers: no we won’t

TSMC: OK
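Going back to the area numbers TSMC quoted, compounding the two scale factors shows what a customer gets by going straight from 16nm to 7nm. This is a back-of-the-envelope sketch using only the two figures in the text. The variable names are mine, and real designs will not scale this cleanly.

```python
# Area scale factors as stated by TSMC in the text.
scale_16_to_10 = 0.50   # 10nm is about 50% smaller than 16nm
scale_10_to_7 = 0.45    # 7FF is 45% of the area of 10FF

scale_16_to_7 = scale_16_to_10 * scale_10_to_7
print(f"7FF area relative to 16nm: {scale_16_to_7:.3f}")
print(f"Density gain from 16nm to 7FF: {1 / scale_16_to_7:.1f}x")
```

On those numbers 7FF is a little under a quarter of the 16nm area, more than a 4x density jump in a single family, which makes waiting six quarters for 7nm look a lot more attractive than a stop at 10nm.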

One wrinkle in all of this is also the Intel acquisition of Altera, Xilinx’s primary competitor. They seem to have been struggling to tape-out their designs in Intel’s 14nm process. If Intel is serious about using FPGAs in the datacenter, especially if they want to put the arrays on the same substrate as the processor, then they will need to get Altera’s fabric into 10nm and then 7nm hot on the heels of the server processors themselves. Xilinx’s worst nightmare would be if they produced a family of arrays in TSMC 10nm (only slightly better than Intel 14nm) and Altera got a family out in Intel’s 7nm which is a generation ahead.

So, Xilinx skipping 10nm and Altera being acquired by Intel with an opaque roadmap makes for an interesting spectator sport.

How to Build an IoT Endpoint in Three Months

It is often said that things go in big cycles. One example of this is the design and manufacturing of products. People long ago used to build their own things. Think of villagers or settlers hundreds of years ago, if they needed something they would craft it themselves. Then came the industrial revolution and two things happened. One is that if you wanted something like furniture or tools you were better off buying them. The other was there was a loss of skills; people ‘forgot’ how to make things. This meant that the ability to create was concentrated in the hands of a few, and individuals had less control over what was available to them.

The maker movement has changed all that. The ability to design and build things has come full circle. Now if you want to design with 3D Printers and Arduino boards you can design a range of things, from simple everyday items to sophisticated appliances. In many ways the Internet of Things was started through this same pathway. People took low cost development systems and tools, added sensors, wireless and often servos to make a wide variety of useful things.

Semiconductor design has followed an analogous path. Early on design teams were small and they built chips that became the components of that era’s products. I remember calling on chip design companies in the late 90’s where it was literally three guys with a Sun workstation running layout software.

That era has ended and it seems that recently the only feasible way to design chips was at places like Nvidia, Intel, Freescale, Marvell, etc. They can apply design teams with hundreds of people to build their products. If you had an idea for a design and did not have the manpower, your idea went un-built.

However, things are changing again. The same market and technology forces that drove the maker movement, and pushed for standardization of building blocks, have spilled over into the internals of chip design. With the need for increased sophistication, the tools for building integrated platforms for IoT have been growing and maturing. We all know the formula by now: MCU, on board NVM, one or more radios, ultra low power, security, interfaces to sensors and a SW development environment to build user applications.

Differentiation is the key to success; product developers know they need to optimize their platform for their specific needs. ARM recently embarked on a project to test out the real world feasibility of having a small team build a custom IoT end point device in a fleeting 3 months. ARM used the TSMC Open Innovation Platform Forum in September to present their results.

ARM Engineering Director Tim Whitfield gave a comprehensive presentation on their experience. The challenge was to go from RTL to GDS in 3 months with 3 engineers. Additionally, there were hard analog RF blocks that needed to be integrated. They went with the ARM mbedOS to make it easy to prototype. They also included standard interfaces like SPI and I2C for easy integration of external sensors.

ARM used their arsenal of building blocks which includes the Cortex M3, Artisan physical IP, mbedOS, Cordio BT4.2, ancillary security hardware and some TSMC IP as well. The radio was the most interesting part of the talk. A lot of things have to be done right to put a radio on the same die as digital. The Cordio radio is partitioned into a hard macro containing all the MS and RF circuitry. In the hard IP there is also real-time embedded firmware and an integrated power management unit (PMU) – critical for effective low power operation. It comes with a Verilog loop-back model for verification. The soft IP for the radio is AMBA-3 32-bit AHB compliant. It is interrupt driven and can operate in master & slave mode with fully asynchronous transmit and receive.

When adding the radio to the design, designers are given guidelines to avoid supply coupling in the bond wires. This is provided by adding 100pF decoupling per supply. They used CMOS process friendly MOM caps. They did receive some guidance from the radio team on how to prevent substrate coupling. They used a substrate guard ring with well-ties. Tim suggested that the guard ring could possibly be delivered as a macro in the future.

They discovered that if there was no cache that 80% of their power would be used for reading the flash and 20% used running application code. So they reduced the power overhead by using caching. Tim sees opportunity to further improve power performance with additional cache enhancements.
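To see how much a cache can help, here is a simple model built on the 80/20 split Tim quoted. It assumes flash read power scales with the cache miss rate and ignores the power the cache itself draws. Both are my simplifications, and the hit rates are hypothetical rather than ARM’s measurements.

```python
FLASH_SHARE = 0.80  # share of power spent reading flash with no cache (from the talk)
CODE_SHARE = 0.20   # share spent running application code

def relative_power(hit_rate: float) -> float:
    """Total power relative to the no-cache baseline for a given cache hit rate.

    Assumes flash read power scales with the miss rate and ignores the
    power drawn by the cache itself; both are simplifying assumptions.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return CODE_SHARE + FLASH_SHARE * (1.0 - hit_rate)

for hit_rate in (0.0, 0.5, 0.9):  # hypothetical hit rates
    print(f"hit rate {hit_rate:.0%}: {relative_power(hit_rate):.2f}x baseline power")
```

Under these assumptions a 90 percent hit rate would cut total power to a bit under a third of the no-cache baseline, which is why further cache enhancements look like the obvious place to keep digging.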

They already taped out in August, and are now waiting for silicon from TSMC in October. That, of course, will be the real test. Whatever lessons learned will be applied to improve the process for customers down the road.

This is certainly just a “little bit more” impressive than a maker getting their Arduino project working. Nonetheless, it is definitely a branch of the same tree. Enabling this kind of integration and customization democratizes product development and will in turn create new opportunities. I look forward to hearing how the first silicon performs.

New Sensing Scheme for OTP Memories

Last week at TSMC’s OIP symposium, Jen-Tai Hsu, Kilopass’s VP R&D, presented A New Solution to Sensing Scheme Issues Revealed.

See also Jen-Tai Hsu Joins Kilopass and Looks to the Future of Memories

He started with giving some statistics about Kilopass:

- 50+ employees

- 10X growth 2008 to 2015

- over 80 patents (including more filed for this new sensing scheme)

- 179 customers, 400 sockets, 10B units shipped

Kilopass’s technology works in a standard process using antifuse, causing a breakdown of the gate-oxide. Since the mechanical damage is so small it is not detectable even by invasive techniques, unlike eFuse technologies where the breaks in the fuse material are clearly visible by inspection. Over the generations of process nodes they have reduced the power by a factor of 10 and reduced the read access time to 20ns. Since the technology scales with the process, the memory can scale as high as 4Mb. It also is low power and instant-on.

Kilopass has focused on 3 major markets:

- security keys and encryption. This only requires Kb of memory. The end markets are set-top box, gaming, SSD, military

- configuration and trimming of analog. This also requires Kb of memory. End markets are power management, high precision analog and MEMS sensors

- microcode and boot code. This requires megabits to tens of megabits. Applications are microcontrollers, baseband and media processors, multi-RF, wireless LAN and more

The diagram above shows how the programming works. There are two transistors per cell. The top one remains a transistor for a 0 (gate isolated from the source/drain) but after programming a 1 the oxide is punched through and the gate has a high resistance short to the drain. Since the actual damage to the gate oxide might occur anywhere (close to the drain or far from it), the resulting resistance is variable.

The traditional way to read the data is as follows. The bitline (WLP) is pre-charged, then the appropriate wordline (WLR) is used for access and the bitline (BL) is sensed and compared against a reference in the sense amp. Depending on whether the “transistor” is a transistor or a resistor, the current will be higher than the reference bitline current or not. If it is higher then a 1 is sensed, lower and a zero. The challenge is to sense the data fast, since the longer the time taken, the clearer the value, but all users want a fast read time. See the diagram below.

Historically this has worked well. In older nodes, the variations are small relative to the drive strengths of the transistors. But increasingly it gets harder to tell the difference between a weak 1 cell and a noisy 0 cell, which risks misreading the value. As a result it can take a long time to sense “to be sure.” As we march down the treadmill of process nodes, like many other things, the variation is getting so large it is approaching the parameters of the device itself. A new approach is needed.
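The traditional comparison can be pictured with a toy model: the sense amp reads a 1 when the cell current is above the reference, and the margin to the reference tells you how hard the cell is to read. The current values here are made up purely for illustration and the function names are mine. This is not Kilopass’s circuit.

```python
def sense_bit(cell_current_ua: float, ref_current_ua: float) -> int:
    """Traditional sensing: a cell current above the reference reads as 1."""
    return 1 if cell_current_ua > ref_current_ua else 0

def sense_margin_ua(cell_current_ua: float, ref_current_ua: float) -> float:
    """Distance from the reference; small margins are the hard-to-read cells."""
    return abs(cell_current_ua - ref_current_ua)

REF_UA = 10.0        # hypothetical reference current
strong_one = 25.0    # programmed cell with a low-resistance breakdown path
weak_one = 11.0      # programmed cell with a high-resistance path
noisy_zero = 9.0     # unprogrammed cell plus leakage and noise

cells = (strong_one, weak_one, noisy_zero)
readings = [sense_bit(i, REF_UA) for i in cells]
margins = [sense_margin_ua(i, REF_UA) for i in cells]
```

All three cells read correctly here, but the weak 1 and the noisy 0 each sit only 1µA from the reference, so a little more variation in either the cell or the reference flips the result.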

The new approach that Kilopass have pioneered adds a couple of steps. Once the word line is used for access, after a delay the bitline reference is shut off. The bit line is sensed and the data latched and then the sense amp is shut off. The new sense amp incorporates the timing circuitry. The whole scheme is more tolerant of process variation and should be suitable for migration all the way to below 10nm. This approach is more immune to ground noise and has greater discrimination between weak 1 and noisy 0. Finally, shutting off the sense amp at the end saves power.

It turns out that this scheme works particularly well with TSMC’s process since their Iref spread is half that of other fabs. The new sensing scheme coupled with the tighter cell means doubling the read speed.