[News] Samsung Reportedly Trials 2nd Gen 3nm Chips, Aims for 60%+ Yield | TrendForce Insights


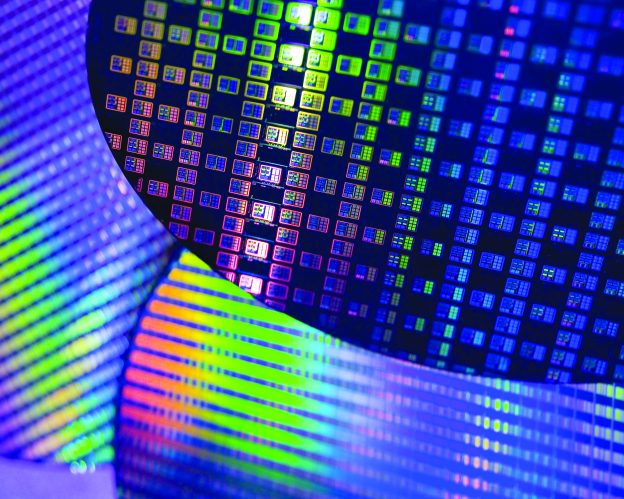

According to industry sources cited by South Korean media outlet The Chosun Daily, Samsung has begun producing prototypes on its second-generation 3nm process and is testing the chips’ performance and reliability. The goal is to achieve a yield rate of over 60% within the next six months.

TSMC and Samsung are both actively vying for customers. Samsung is preparing to commence mass production of the second-generation 3nm GAA architecture in the first half of the year. The key to success in the competition lies in whether Samsung can meet the demands of major clients such as Nvidia, Qualcomm, and AMD while rapidly ramping up production.

Samsung is currently testing the performance and reliability of prototypes for the second-generation 3nm process. The first product is expected to be the application processor (AP) for the soon-to-be-released Galaxy Watch 7, and the process is also expected to be used for the Exynos 2500 chip in next year’s Galaxy S25 series.

If the production yield and performance of the second-generation 3nm process are stable, there is a chance that customers who had previously switched to TSMC may return to Samsung, especially considering Qualcomm’s movements.

According to the report, Qualcomm is collaborating with TSMC on production of the next-generation Snapdragon 8 Gen 3. Additionally, Nvidia’s H200 and B100 and AMD’s MI300X are expected to adopt TSMC’s 3nm process.

Samsung announced in November of last year that it would commence mass production of the second-generation 3nm process in the latter half of 2024. While Samsung has not responded to Chosun’s report regarding the production of prototypes for the second-generation 3nm process, the timeline seems plausible.

However, the report mentions a chip yield rate of 60% without specifying transistor count, chip size, performance, power consumption, or other specifications.

Furthermore, according to Tom’s Hardware’s report, the chip size, performance, and power consumption targets for processors used in smartwatches, mobile phones, and data centers are entirely different. A 60% yield rate for small chips would make commercial use challenging, but for reticle-sized chips, a 60% yield rate would be reasonably acceptable.
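To illustrate why the same 60% figure reads so differently depending on die size, here is a minimal sketch using the simple Poisson yield model, Y = exp(-A × D0), where A is die area and D0 is defect density. This is a textbook approximation, not Samsung's or any foundry's actual yield model. The 50 mm² small-die area is a hypothetical value chosen for illustration and does not come from the report; the reticle-sized die assumes the standard 26 mm × 33 mm maximum exposure field.

```python
import math

# Assumption: standard maximum lithography exposure field (26 mm x 33 mm).
RETICLE_AREA_MM2 = 26 * 33
# Assumption: hypothetical smartwatch-class die area, not taken from the report.
SMALL_DIE_AREA_MM2 = 50


def implied_defect_density(yield_rate, die_area_mm2):
    """Defects per cm^2 implied by a given yield under Y = exp(-A * D0)."""
    area_cm2 = die_area_mm2 / 100.0
    return -math.log(yield_rate) / area_cm2


def poisson_yield(defect_density, die_area_mm2):
    """Expected yield for a die of the given area at the given defect density."""
    area_cm2 = die_area_mm2 / 100.0
    return math.exp(-defect_density * area_cm2)


# Defect density each die size would imply if it yielded 60%.
d0_small = implied_defect_density(0.60, SMALL_DIE_AREA_MM2)
d0_reticle = implied_defect_density(0.60, RETICLE_AREA_MM2)

print(f"60% yield on a {SMALL_DIE_AREA_MM2} mm^2 die implies D0 = {d0_small:.3f} /cm^2")
print(f"60% yield on a {RETICLE_AREA_MM2} mm^2 die implies D0 = {d0_reticle:.3f} /cm^2")

# Cross-check: how each die size would fare at the other's implied defect density.
print(f"Small die at the reticle-implied D0: {poisson_yield(d0_reticle, SMALL_DIE_AREA_MM2):.1%}")
print(f"Reticle die at the small-die-implied D0: {poisson_yield(d0_small, RETICLE_AREA_MM2):.3%}")
```

Under these assumptions, a 60% yield on the small die implies a defect density of roughly 1.0 per cm², while the same yield on a reticle-sized die implies roughly 0.06 per cm². A process clean enough to yield 60% on a reticle-sized die would yield about 97% on the small one, which is why a bare yield figure says little without the die size.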

However, caution is advised in interpreting this report due to the uncertainties surrounding Samsung’s second-generation 3nm process production targets at its semiconductor foundries.

Nonetheless, the commencement of the second-generation 3nm process production is a significant development for both Samsung and the semiconductor industry as a whole.