Effective and efficient functional verification is one of the biggest hurdles for today’s large and complex system-on-chip (SoC) designs. The goal is to verify as close as possible to 100% of the design’s specified functionality before committing to the long and expensive tape-out process for application-specific integrated circuits (ASICs) and full custom chips. Field programmable gate arrays (FPGAs) avoid the fabrication step, but development teams still must verify as much as possible before starting debug in the bring-up lab. Of course, verification engineers want to use the fastest and most comprehensive engines, ranging from lint, static and formal analysis to simulation, emulation and FPGA prototyping.

However, leading-edge engines alone are not enough to meet the high demands of SoC verification. The engines must be linked together into a unified flow with common metrics and dashboards to assess verification progress at every step and determine when to tape out the chip. The execution of all the engines in the flow must be managed to minimize project time, engineering effort and compute resources. Verification management must span the entire project and satisfy the needs of the many types of teams involved. It must also provide high-level reports that help project leaders make critical decisions, including tape-out. This article presents the requirements for effective SoC verification management.

Phases of Verification

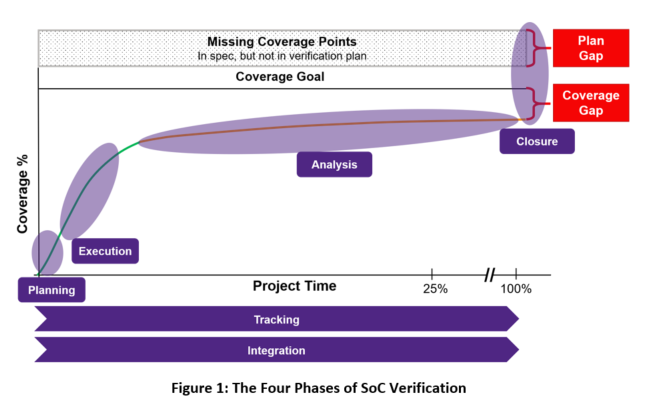

There are four major phases of verification: planning, execution, analysis and closure. As shown in Figure 1, these phases can be viewed as linear across the project’s duration. In practice, the verification team iterates through these phases many times. Identifying verification holes during the analysis phase may lead to additional execution runs or even revisions to the verification plan. Similarly, results that are insufficient to declare verification closure and tape out may ripple back to earlier phases. This is a normal and healthy part of the flow, as verification plans and metrics are refined based on detailed results.

A unified flow and intelligent decision-making throughout the project require tracking verification status and progress in all four phases. Also, there are multiple points where the verification flow must integrate with tools for project and lifecycle management, requirements tracking and management of cloud or grid resources. Figure 1 also shows the two major gaps that must be closed before tape-out. The coverage gap represents the verification targets identified during the planning phase but not yet reached during the execution phase. The plan gap refers to any features in the chip specification not yet included in the verification plan. The verification team must close both gaps as much as possible.
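
Both gaps can be thought of as simple set differences. The sketch below assumes hypothetical string IDs for specification features and coverage targets, and represents the plan as a mapping from each feature to its targets; real verification management tools store far richer data.

```python
def plan_gap(spec_features: set[str], plan: dict[str, set[str]]) -> set[str]:
    """Specification features that have no entry in the verification plan."""
    return spec_features - set(plan)


def coverage_gap(plan: dict[str, set[str]], hit: set[str]) -> set[str]:
    """Planned coverage targets that no execution run has reached yet."""
    planned = set().union(*plan.values())
    return planned - hit


# Hypothetical example data
spec = {"dma_burst", "dma_abort", "irq_mask"}
plan = {"dma_burst": {"burst_len_1", "burst_len_16"}, "irq_mask": {"mask_all"}}
hit = {"burst_len_1", "mask_all"}
print(plan_gap(spec, plan))     # {'dma_abort'}
print(coverage_gap(plan, hit))  # {'burst_len_16'}
```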

Planning Phase Requirements

The verification plan lies at the heart of functional verification. At one time this was a paper document listing the design features and the simulation tests to be written for each feature to verify its proper functionality. The verification engineers wrote the tests, ran them in simulation and debugged any failures. Once the tests passed, they were checked off in the document. When all tests passed, verification was deemed complete and project leaders considered taping out. This approach changed with the availability of constrained-random testbenches, in which there is not always a direct correlation between features and tests.

In an executable verification plan, engineers list the coverage targets that must be reached for each feature rather than explicit tests. They may use constraints to steer groups of tests toward specific features and coverage targets, but the overall approach is far more automated than a traditional verification plan. Coverage targets may exist in the testbench or the design and may include code coverage, functional coverage and assertions. Verification planning also includes deciding which engine will be used for which features. Simulation remains the primary method, but formal analysis, emulation or other engines may be more appropriate for some targets.
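
At its core, an executable plan is a data structure that maps each feature to its coverage targets, with each target tagged by metric type and assigned engine. The schema below is a hypothetical minimal sketch; the class names, field names and target IDs are illustrative assumptions, not any tool's actual format.

```python
from dataclasses import dataclass, field
from enum import Enum


class Engine(Enum):
    SIMULATION = "simulation"
    FORMAL = "formal"
    EMULATION = "emulation"


class Metric(Enum):
    CODE = "code"
    FUNCTIONAL = "functional"
    ASSERTION = "assertion"


@dataclass(frozen=True)
class Target:
    name: str                           # hypothetical target ID, e.g. a covergroup bin
    metric: Metric
    engine: Engine = Engine.SIMULATION  # simulation is the default engine


@dataclass
class Feature:
    name: str
    targets: list[Target] = field(default_factory=list)


def targets_for(plan: list[Feature], engine: Engine) -> list[str]:
    """Collect the names of all targets assigned to one engine, across all features."""
    return [t.name for f in plan for t in f.targets if t.engine is engine]


plan = [
    Feature("fifo_overflow", [
        Target("cg_fifo.full_then_push", Metric.FUNCTIONAL),
        Target("a_no_write_when_full", Metric.ASSERTION, Engine.FORMAL),
    ]),
    Feature("boot_sequence", [
        Target("boot_fsm.all_states", Metric.CODE, Engine.EMULATION),
    ]),
]
print(targets_for(plan, Engine.FORMAL))  # ['a_no_write_when_full']
```

Keeping the engine assignment on each target, rather than on each feature, lets one feature mix simulation-covered bins with formally proven assertions.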

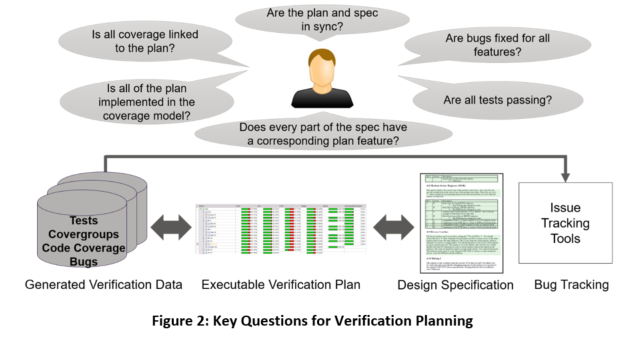

As shown in Figure 2, the verification plan features must be linked directly to content (text, figures, tables, etc.) in the design specification. This reduces the chances of missing features, since specification reviews will reveal any gaps in the verification plan. The plan must be kept in sync with the specification so that the verification team can see whether goals are being met at every phase of the project. The plan is also the springboard for defining milestones for progress toward 100% coverage. Coverage tends to grow quickly early in the project but converges slowly as deep features and corner cases are exercised. Results from all engines must be aggregated intelligently and then back-annotated into the verification plan so that the team has a unified view of achieved coverage and overall verification progress.
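
Back-annotation can be pictured as attaching a per-feature coverage score, next to the feature's specification link, computed from the aggregated hit set. This is a minimal sketch under assumed inputs: the `PlanEntry` type, the `spec_ref` strings and the target IDs are all hypothetical.

```python
from typing import NamedTuple


class PlanEntry(NamedTuple):
    spec_ref: str             # hypothetical link to specification content, e.g. a section ID
    targets: tuple[str, ...]  # coverage targets planned for this feature


def back_annotate(plan: dict[str, PlanEntry], hit: set[str]) -> dict[str, tuple[str, float]]:
    """Annotate each feature with its spec link and the percent of its targets hit.
    A feature with no targets reports 0.0 so that it stands out for review."""
    annotated = {}
    for feature, entry in plan.items():
        reached = sum(1 for t in entry.targets if t in hit)
        pct = 100.0 * reached / len(entry.targets) if entry.targets else 0.0
        annotated[feature] = (entry.spec_ref, pct)
    return annotated


def overall(plan: dict[str, PlanEntry], hit: set[str]) -> float:
    """Plan-wide coverage: reached targets over all distinct planned targets."""
    targets = {t for entry in plan.values() for t in entry.targets}
    return 100.0 * len(targets & hit) / len(targets) if targets else 0.0


plan = {
    "dma_burst": PlanEntry("spec 4.2", ("len_1", "len_4", "len_8", "len_16")),
    "irq_mask": PlanEntry("spec 5.1", ("mask_all",)),
}
hit = {"len_1", "len_4", "mask_all"}  # hypothetical hits aggregated from all engines
print(back_annotate(plan, hit))  # {'dma_burst': ('spec 4.2', 50.0), 'irq_mask': ('spec 5.1', 100.0)}
print(overall(plan, hit))        # 60.0
```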

Execution Phase Requirements

Once the initial plan is ready, the verification team must run many thousands of tests, often on multiple engines, trying to reach all the coverage targets in the plan. This process must be highly automated to minimize the turnaround time (TAT) of each regression run, since regressions are repeated many times over the project. Whenever a bug is fixed, additional functionality is added to the design or the verification environment changes, the regression must be rerun. An efficient solution requires an execution management tool that launches jobs for all verification engines, manages these jobs, monitors progress and tracks results.

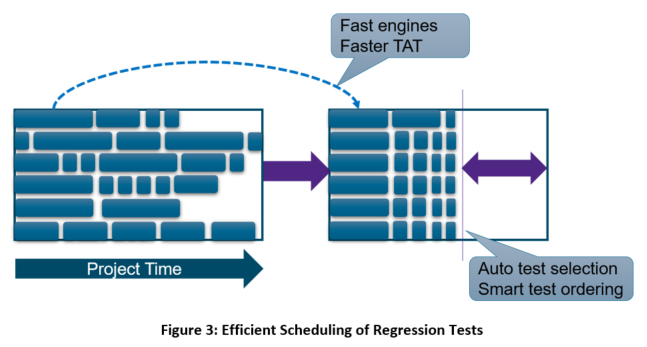

As illustrated in Figure 3, ordering and scheduling of tests are critical to minimize the TAT. Tests must be distributed evenly across all the grid or cloud compute resources so that the regression run uses as much parallelism as possible. Also, the information from each regression run can be used to improve the performance of future runs. The analysis phase must be able to rank tests based on coverage achieved. This information is used by the execution management tool in the next run to skip any redundant tests that did not reach any new coverage targets. This makes regressions shorter and makes better use of compute resources, storage space and verification engine licenses, all of which are managed by the same tool.
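
One classic heuristic for spreading tests evenly is longest-processing-time-first (LPT) list scheduling, driven by runtimes recorded in a previous regression. The sketch below illustrates that general technique under the assumption that per-test runtimes are known in advance; it is not how any particular execution management tool schedules jobs, and a real scheduler must also account for license limits, machine types and job dependencies.

```python
import heapq


def distribute(runtimes: dict[str, float], workers: int) -> list[list[str]]:
    """LPT scheduling: take tests longest first and place each one on the
    worker with the smallest accumulated runtime so far."""
    if workers < 1:
        raise ValueError("need at least one worker")
    heap = [(0.0, w) for w in range(workers)]  # (load, worker index); already a valid heap
    buckets: list[list[str]] = [[] for _ in range(workers)]
    # Longest first; ties broken by test name for a deterministic order.
    for test, rt in sorted(runtimes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, w = heapq.heappop(heap)
        buckets[w].append(test)
        heapq.heappush(heap, (load + rt, w))
    return buckets


# Runtimes in minutes, hypothetically taken from the previous regression run
last_run = {"t_boot": 40.0, "t_dma": 25.0, "t_irq": 20.0, "t_uart": 15.0, "t_gpio": 10.0}
for w, tests in enumerate(distribute(last_run, workers=2)):
    print(w, tests, sum(last_run[t] for t in tests))  # each worker ends up with 55.0 minutes
```

LPT is simple and usually close to balanced, though not always optimal; its value here is that runtime history from each regression directly improves the packing of the next one.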

Coverage data is considered valid only for tests that complete successfully. Of course, tests often fail due to design bugs or errors in the verification environment. To reduce regression runtime, most project teams run regressions with debug options such as dump file generation turned off. The execution management tool must therefore detect each failing test and automatically rerun it with the appropriate debug features enabled. The tool must also collect pass/fail results and coverage metrics so that they can be analyzed in the next phase of verification.
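
The rerun-on-failure policy and the passing-only coverage rule can be sketched as a small loop. The `run` callable stands in for a hypothetical hook into the job launcher, and `fake_run` is made-up stand-in data; neither reflects a real tool's interface.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Result:
    test: str
    passed: bool
    coverage: frozenset[str]  # targets hit during this run
    debug: bool               # True if debug options (e.g. dump files) were enabled


# Hypothetical launcher hook: run(test_name, debug_enabled) -> Result
RunFn = Callable[[str, bool], Result]


def run_regression(tests: list[str], run: RunFn) -> tuple[set[str], list[Result]]:
    """Run every test with debug off, rerun each failure once with debug on,
    and merge coverage only from runs that passed."""
    merged: set[str] = set()
    debug_reruns: list[Result] = []
    for test in tests:
        result = run(test, False)
        if result.passed:
            merged |= result.coverage
        else:
            # Failing runs contribute no coverage; rerun to capture debug data.
            debug_reruns.append(run(test, True))
    return merged, debug_reruns


def fake_run(test: str, debug: bool) -> Result:
    """Stand-in for a real launcher: t2 always fails."""
    outcomes = {"t1": (True, {"a", "b"}), "t2": (False, {"x"}), "t3": (True, {"c"})}
    passed, cov = outcomes[test]
    return Result(test, passed, frozenset(cov), debug)


cov, reruns = run_regression(["t1", "t2", "t3"], fake_run)
print(sorted(cov))                          # ['a', 'b', 'c']
print([(r.test, r.debug) for r in reruns])  # [('t2', True)]
```

In this sketch, coverage from the debug reruns is deliberately not merged; those runs exist only to produce debug data for the analysis phase.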

Analysis Phase Requirements

Once all tests have been run in the execution phase, the verification team must analyze the results to determine what to do next. This consists of two main tasks: debugging the failing tests and aggregating the passing tests’ coverage results. Access to an industry-leading debug solution is critical, including a graphical user interface (GUI) with a range of different views. These must include design and verification source code, hierarchy browsers, schematics, state machine diagrams and waveforms. It must be possible to cross-probe and navigate among the views easily, for example, selecting a signal in source code and seeing its history in a waveform. Once the cause of a test failure is found, the bug in the design or verification environment is fixed, and the fix is verified in the next regression run.

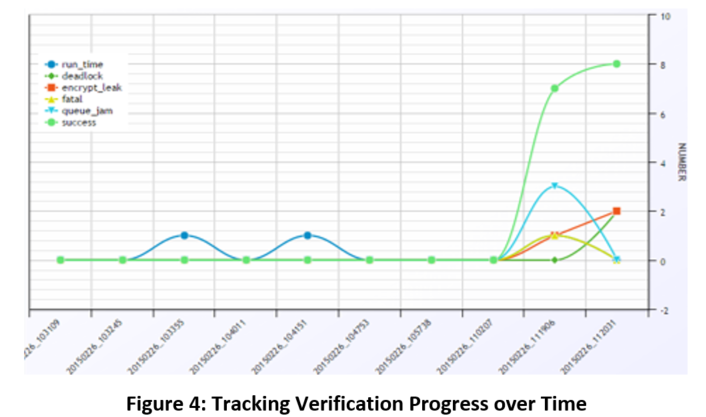

Aggregating the coverage results can be tricky because different verification engines sometimes provide different results. Formal analysis can provide proofs of assertions and determine unreachable coverage targets, which simulation and emulation cannot. The verification team may also define custom coverage metrics that must be aggregated with the standard types of coverage. The aggregation step must be highly automated, producing a unified view of chip coverage. The coverage results and other data from the regression runs must be stored in a database and tracked over time, as shown in Figure 4. Verification trends are a crucial factor in assessing project status, projecting a completion date and making the critical tape-out decision.
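
A simplified view of cross-engine aggregation is a union of hits from every source, with formally unreachable targets removed from the denominator. The sketch below assumes every engine already reports targets under the same names (real tools must map between engine-specific representations). It also flags targets that some engine reports as hit even though formal analysis proved them unreachable, one concrete way engines can disagree.

```python
from typing import NamedTuple


class Summary(NamedTuple):
    raw_pct: float       # hit targets / all targets
    adjusted_pct: float  # hit targets / (all targets - formally unreachable)
    conflicts: set[str]  # hit by some engine yet proven unreachable: investigate


def aggregate(all_targets: set[str],
              hits_by_engine: dict[str, set[str]],
              unreachable: set[str]) -> Summary:
    """Union the hits from every engine (or custom metric source) and
    remove formally unreachable targets from the denominator."""
    hit = set().union(*hits_by_engine.values()) & all_targets
    reachable = all_targets - unreachable
    conflicts = hit & unreachable
    raw = 100.0 * len(hit) / len(all_targets) if all_targets else 0.0
    # If nothing is reachable, there is nothing left to cover.
    adjusted = 100.0 * len(hit & reachable) / len(reachable) if reachable else 100.0
    return Summary(raw, adjusted, conflicts)


targets = {"t1", "t2", "t3", "t4", "t5", "t6"}
hits = {
    "simulation": {"t1", "t2"},
    "emulation": {"t2", "t3"},
}
print(aggregate(targets, hits, unreachable={"t6"}))
# Summary(raw_pct=50.0, adjusted_pct=60.0, conflicts=set())
```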

The analysis phase must include ranking tests by their contribution to coverage, which reduces the TAT for regression runs in the execution phase. Deeper analysis begins as the verification team examines coverage holes: targets that the passing regression tests have not yet reached. The verification engineers should carefully study the unreachable targets identified by formal analysis to ensure that design bugs are not blocking access to some of the design’s functionality. Folding the unreachability results into the aggregated coverage removes those targets from the denominator, which improves the coverage metrics and prevents wasting regression time on targets that cannot be reached. This is another way to reduce execution TAT and make efficient use of cloud and grid resources.
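
Test ranking is commonly approached as a greedy set-cover heuristic: repeatedly pick the test that adds the most not-yet-covered targets. The sketch below shows that generic technique with hypothetical per-test coverage data. It is not any specific tool's ranking algorithm, and it judges redundancy by coverage alone.

```python
def rank_tests(coverage: dict[str, set[str]]) -> tuple[list[tuple[str, int]], list[str]]:
    """Greedy ranking: repeatedly pick the test that adds the most targets not
    yet covered. Tests that add nothing new are reported as redundant."""
    covered: set[str] = set()
    remaining = dict(coverage)
    ranked: list[tuple[str, int]] = []
    while remaining:
        # Largest gain first; ties broken by test name for a deterministic order.
        best = min(remaining, key=lambda t: (-len(remaining[t] - covered), t))
        gain = len(remaining[best] - covered)
        if gain == 0:
            break
        ranked.append((best, gain))
        covered |= remaining.pop(best)
    return ranked, sorted(remaining)


per_test = {
    "t_a": {"c1", "c2", "c3"},
    "t_b": {"c3", "c4"},
    "t_c": {"c1", "c2"},
    "t_d": {"c5"},
}
ranked, redundant = rank_tests(per_test)
print(ranked)     # [('t_a', 3), ('t_b', 1), ('t_d', 1)]
print(redundant)  # ['t_c']
```

Here `t_c` reaches no target that `t_a` does not already cover, so an execution manager following this ranking could skip it in the next regression.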

Closure Phase Requirements

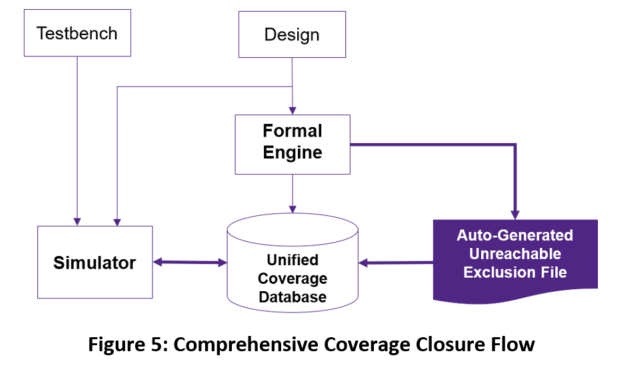

Once the verification engineers have eliminated the coverage targets formally proven unreachable, as shown in Figure 5, they must consider the remaining targets not yet reached by the regression tests. Coverage closure is the process of either reaching these targets or excluding them from consideration. If the verification engineers decide that a coverage target does not need to be reached, they can add it to an exclusions list. The analysis engine must support adaptive exclusions that persist even when the design changes slightly. Reaching a coverage target may involve modifying existing tests, developing additional constrained-random tests, writing directed tests or running more formal analysis.
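
The closure calculation can be sketched as set arithmetic over targets, unreachable targets and exclusions. As an assumption for illustration only, exclusions here are keyed by hypothetical stable target names (a hierarchical path plus bin name) rather than source line numbers, which is one simple way to approximate persistence across small design edits; exclusions that no longer match any target are reported as stale for review. This is not how any specific tool implements adaptive exclusions.

```python
def closure_status(targets: set[str], hit: set[str],
                   unreachable: set[str], exclusions: set[str]) -> tuple[float, set[str], set[str]]:
    """Return (closure percent, open coverage holes, stale exclusions)."""
    stale = exclusions - targets  # no longer match any target after a design change
    considered = targets - unreachable - exclusions
    holes = considered - hit
    pct = 100.0 * len(considered & hit) / len(considered) if considered else 100.0
    return pct, holes, stale


# Hypothetical target names
targets = {"top.dma.len_1", "top.dma.len_16", "top.dma.len_256",
           "top.irq.nested", "top.dbg.scan_mode"}
hit = {"top.dma.len_1", "top.dma.len_16"}
unreachable = {"top.dbg.scan_mode"}                # proven by formal analysis
exclusions = {"top.irq.nested", "top.dma.len_0"}   # len_0 was removed from the design
pct, holes, stale = closure_status(targets, hit, unreachable, exclusions)
print(round(pct, 1), holes, stale)  # 66.7 {'top.dma.len_256'} {'top.dma.len_0'}
```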

Modifying tests usually entails tweaking the constraints to bias the tests toward the unreached parts of the design. The results are more predictable if the closure phase supports a “what-if” analysis, in which the verification engineers can see what effect constraint changes will have on coverage. Ideally, this phase results in 100% coverage for all reachable targets. In practice, not all SoC projects can achieve full coverage within their schedules, so the team sets a lower goal that is typically well above 95%. The goal must be high enough to make managers feel confident in taping out and to minimize the chance of undetected bugs making it to silicon.

Tracking Process Requirements

Effective and efficient SoC verification requires that the verification team and project management be able to observe results during any of the four phases and track these results over time. Information from every regression run and analysis phase should be retained in a database so that both current status and trends over time can be viewed on demand. The verification management flow must generate a wide variety of charts and reports tailored to the diverse needs of the various teams involved in the project.
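
A minimal sketch of run-history tracking, using Python's built-in `sqlite3` module as a stand-in for a verification database. The table layout, the run labels and all the numbers are hypothetical. The shrinking per-run gain in this made-up data mirrors the slow convergence described earlier, which is why a naive linear projection of a completion date tends to be optimistic.

```python
import sqlite3


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS runs ("
        " run_id INTEGER PRIMARY KEY,"
        " label TEXT NOT NULL,"
        " coverage_pct REAL NOT NULL,"
        " failures INTEGER NOT NULL)"
    )
    return conn


def record_run(conn: sqlite3.Connection, label: str, coverage_pct: float, failures: int) -> None:
    conn.execute(
        "INSERT INTO runs (label, coverage_pct, failures) VALUES (?, ?, ?)",
        (label, coverage_pct, failures),
    )
    conn.commit()


def recent_trend(conn: sqlite3.Connection, last_n: int = 5) -> list[tuple[str, float, int]]:
    """Return the last N runs, oldest first."""
    rows = conn.execute(
        "SELECT label, coverage_pct, failures FROM runs ORDER BY run_id DESC LIMIT ?",
        (last_n,),
    ).fetchall()
    return rows[::-1]


def avg_gain_per_run(rows: list[tuple[str, float, int]]) -> float:
    """Average coverage gain per run across the window (0.0 if fewer than 2 runs)."""
    if len(rows) < 2:
        return 0.0
    return (rows[-1][1] - rows[0][1]) / (len(rows) - 1)


conn = open_db()
for label, pct, fails in [("wk1", 60.0, 41), ("wk2", 70.0, 22), ("wk3", 76.0, 9), ("wk4", 79.0, 4)]:
    record_run(conn, label, pct, fails)
window = recent_trend(conn, last_n=3)
print(window)                    # [('wk2', 70.0, 22), ('wk3', 76.0, 9), ('wk4', 79.0, 4)]
print(avg_gain_per_run(window))  # 4.5
```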

Integration Process Requirements

There are many tools used in the SoC development process beyond the verification engines and the verification management solution. Information in the verification database must be exportable to these other tools, and data from them may need to be imported. Many development standards mandate the use of a requirements management and tracking system, so the features in the specification and verification plan must be linked to the project requirements. The verification management solution must also link to project management tools, product lifecycle tools and software that manages cloud and grid resources. Finally, verification engineers must be able to integrate custom tools via an open database and an application programming interface (API).
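
As an illustration of exporting verification data to a requirements tool, the sketch below rolls per-feature coverage up to requirements and serializes the result as JSON. The JSON layout, field names and requirement IDs are hypothetical assumptions; a real integration would follow the target tool's own API or import format. Rating each requirement by its least-covered linked feature is one conservative design choice among several.

```python
import json


def export_traceability(req_links: dict[str, list[str]],
                        feature_pct: dict[str, float]) -> str:
    """Roll feature coverage up to requirements and serialize it as JSON.
    Each requirement is rated by its least-covered linked feature."""
    rows = []
    for req, features in sorted(req_links.items()):
        known = [feature_pct[f] for f in features if f in feature_pct]
        rows.append({
            "requirement": req,
            "features": features,
            "min_feature_pct": min(known) if known else None,
            # Linked features with no coverage data point to a plan gap.
            "features_not_in_plan": [f for f in features if f not in feature_pct],
        })
    return json.dumps(rows, indent=2)


links = {"REQ-2": ["irq_mask"], "REQ-1": ["dma_burst", "dma_abort"]}
pct = {"dma_burst": 50.0, "irq_mask": 100.0}
print(export_traceability(links, pct))
```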

Summary

Verification consumes a significant portion of the time and expense of an SoC development project. The planning, execution, analysis and closure phases must be automated to minimize resource requirements and reduce the project schedule. This article has presented many key requirements for these four phases and two critical processes that span the project. Synopsys has developed a verification management solution that meets all these requirements and is actively used on many advanced chip designs. For more information, download the white paper.
