There is an intriguingly amorphous term in FPGA design circles lately: Quality of Results, or QoR. Fitting a design in an FPGA is just the start – is a design optimal in real estate, throughput, power consumption, and IP reuse? Paradoxically, as FPGAs get bigger and take on bigger signal processing problems, QoR has become a larger concern.

FPGAs started out as logic aggregators, but quickly evolved into signal processing machines because they offer a way to create and connect fast multiply-accumulate blocks without most of the surrounding overhead of a general-purpose machine. Data comes in and is subjected to the same predictable operations compiled – or in modern terms, synthesized – into a machine. As FPGAs have improved in clock speed, fabric throughput, and logic capability, they have outstripped other approaches to signal processing in terms of raw performance.

It would seem at first that there would be few concerns over how an algorithm fits into FPGA logic; after all, the logic is customizable, and designers control what goes where. However, that is only true to a point – FPGAs are not magic. Their specific architectural elements support flexible, but not infinite, programmability. At some point, an infinite range of design choices has to be distilled into finite blocks.

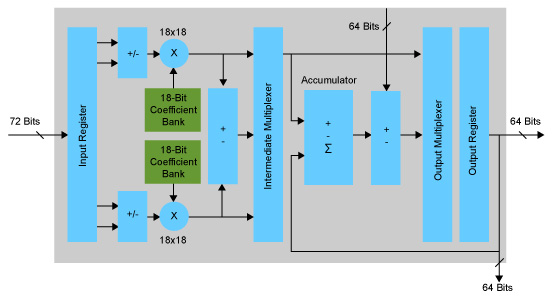

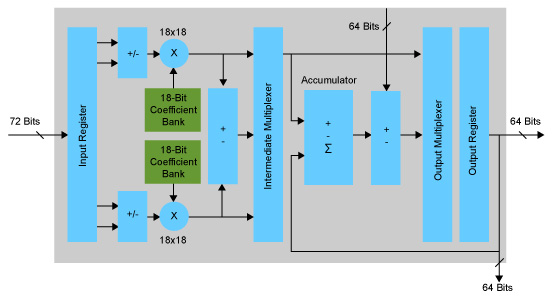

In a recent webinar, Chris Eddington, Sr. Technical Marketing Manager at Synopsys, points out that today’s FPGA devices are huge and complex, with as many as 4000 DSP blocks, each capable of around 1000 modes of operation. While the general intent of DSP blocks is quite similar, their exact capability and programming vary widely between FPGA vendors.

How does a designer map their algorithm to actual logic blocks in an FPGA? Are the right blocks in the right modes in the right places? How are they clocked? Are there flow control issues? How are resources like block RAM utilized? These questions may sound foreign to a designer used to hand-coding, but in the reality of gigantic FPGAs and third-party IP, the chances that things fall into place optimally are getting smaller. Eddington's proposed solution is a high-level synthesis tool with knowledge of multiple FPGA architectures.

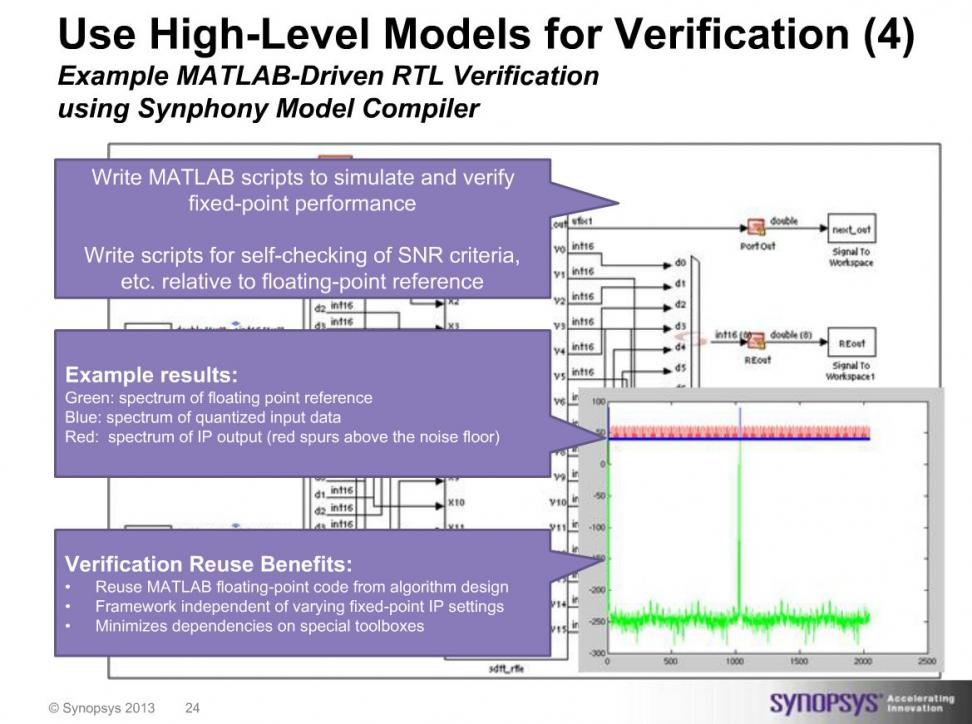

Many developers are modeling systems today in MATLAB, which makes evaluating and tuning the signal processing algorithms very productive. Eddington ran an informal poll of his audience, and a few more than half of those who responded were already doing so. “Even if you have a high-level algorithmic design, there may be many, many choices in mapping,” observes Eddington.

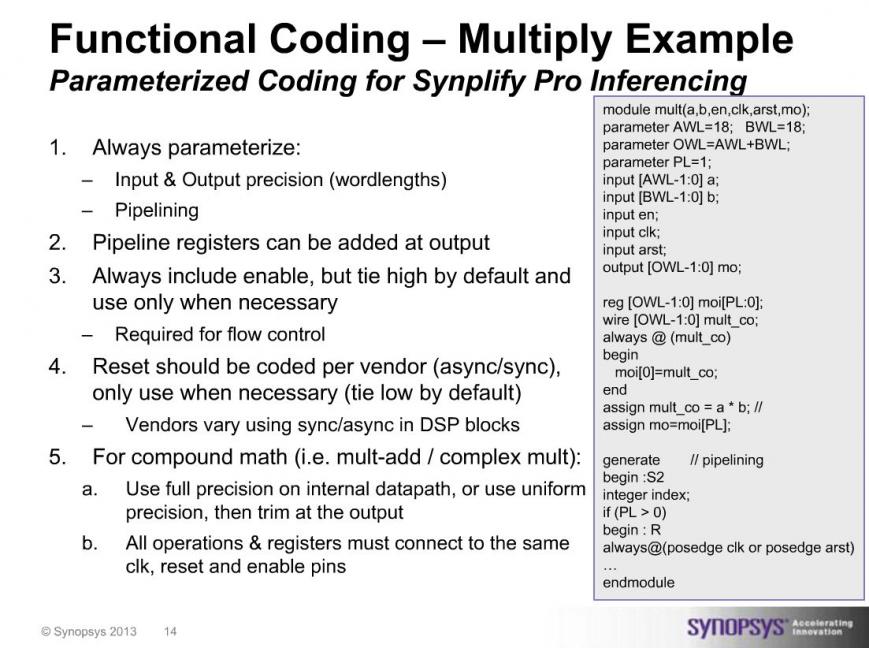

By using high-level synthesizable code that lets the tool infer resources rather than instantiating them explicitly, designers allow synthesis tools to boost both productivity and QoR. With a global view of the on-chip resources and the design, tools can evaluate options and make informed mapping choices. In a simple example of a multiplier, Eddington showed how parameterized code is more readable, more synthesizable, and more portable all at the same time.
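The webinar's multiplier example is written in HDL and is not reproduced here. As a rough illustration of what parameterization buys, the Python sketch below models a signed multiplier whose operand widths are arguments rather than hard-coded constants. The names `signed_range` and `multiply` are hypothetical, chosen only for this sketch. The one fact it relies on is that the product of an `a_bits`-wide and a `b_bits`-wide two's complement value always fits in `a_bits + b_bits` bits.

```python
def signed_range(bits):
    """Return the (min, max) values of a two's complement number of `bits` width."""
    return -(1 << (bits - 1)), (1 << (bits - 1)) - 1


def multiply(a, b, a_bits, b_bits):
    """Bit-width-aware model of a signed multiplier (hypothetical helper).

    Widths are parameters, so the same description serves any operand size;
    a synthesis tool would then decide which device resources implement it.
    Returns the product and the width needed to hold it without overflow.
    """
    for value, bits in ((a, a_bits), (b, b_bits)):
        lo, hi = signed_range(bits)
        if not lo <= value <= hi:
            raise ValueError(f"{value} does not fit in {bits} signed bits")
    return a * b, a_bits + b_bits


product, width = multiply(-8, -16, a_bits=4, b_bits=5)
print(product, width)
```

Because the result width is derived from the operand widths, changing one parameter resizes the whole operation, which is the readability and portability argument in miniature.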

Another example looks at how the MATLAB model represents data in floating or fixed point formats, which may be different from the fixed point mode of operation chosen for the FPGA DSP blocks involved. Mismatches in precision leading to uncontrolled truncation in a pipeline can be a QoR disaster waiting to happen. One capability of Synphony Model Compiler targets the fixed point problem: RTL can be instantiated inside of Simulink, and its fixed point operation simulated and verified using MATLAB scripts with the expected precision. Once verified, that exact same RTL can be synthesized into the FPGA.
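To see why uncontrolled truncation matters, here is a minimal Python sketch of a multiply-accumulate in which each product is requantized before it is added to the accumulator. It is not the Synphony Model Compiler flow. The function names, the 8 fractional bits, and the input values are all assumptions made for illustration.

```python
import math


def to_fixed(value, frac_bits):
    """Quantize a real value to an integer with `frac_bits` fractional bits (round half up)."""
    return math.floor(value * (1 << frac_bits) + 0.5)


def mac_fixed(samples, coeffs, frac_bits, mode):
    """Fixed-point multiply-accumulate with per-product requantization.

    mode="truncate" discards the low bits of each product (floor);
    mode="round" adds half an LSB before discarding them.
    """
    if mode not in ("truncate", "round"):
        raise ValueError("mode must be 'truncate' or 'round'")
    acc = 0
    for x, c in zip(samples, coeffs):
        product = to_fixed(x, frac_bits) * to_fixed(c, frac_bits)  # 2*frac_bits fractional bits
        if mode == "round":
            product += 1 << (frac_bits - 1)
        acc += product >> frac_bits  # arithmetic shift back to frac_bits
    return acc / (1 << frac_bits)


samples = [0.9] * 64
coeffs = [0.9] * 64
reference = sum(x * c for x, c in zip(samples, coeffs))  # floating-point model
truncated = mac_fixed(samples, coeffs, frac_bits=8, mode="truncate")
rounded = mac_fixed(samples, coeffs, frac_bits=8, mode="round")
print(reference, truncated, rounded)
```

With these inputs the floating-point reference is about 51.84, the truncating version returns 51.5, and the rounding version returns 51.75. Truncation always errs in the same direction, so the error grows with the number of accumulated terms, and this is the kind of mismatch between model and hardware that needs to be caught in simulation.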

Eddington goes on to talk about the uses of cycle-accurate C models for simulation, offering as much as a 40x speedup over RTL simulation, and what to look for in high-level IP to help reduce issues. For example, he has a good discussion on using flow control, which helps with mapping storage requirements to FPGA block memory. He also brings in an example of a parallel FFT and how the flow goes from model to verification.

View the entire Synopsys webinar:

High-Throughput FPGA Signal Processing: Trends, Tips & Tricks for QoR and Design Reuse

While the examples invoke the Synopsys tool chain and some of its unique capability, the webinar is very worthwhile in pointing out the general steps to avoid QoR trouble in larger FPGA designs. Experienced designers may think they have good reasons to hand-code some FPGA resources, but as designs get bigger and faster and IP is being reused more, the case for FPGA high-level modeling and synthesis grows stronger.
