Machine learning (ML) is already making its way into EDA tools and flows, but the majority of announcements have been around implementation, especially in guiding toward improved timing and area. This is a pretty obvious place to start; ML is in one sense an optimization technique, trained on prior examples, which should be able to provide further PPA optimization over traditional methods. Conversely, few announcements have been made about ML applications for (functional) verification, perhaps because incremental optimization angles for verification aren’t quite so self-evident, beyond general assertions that “something” ought to be possible. I don’t doubt this situation will change; creative verification tool/flow makers will find ways to apply ML in ways that aren’t so obvious.

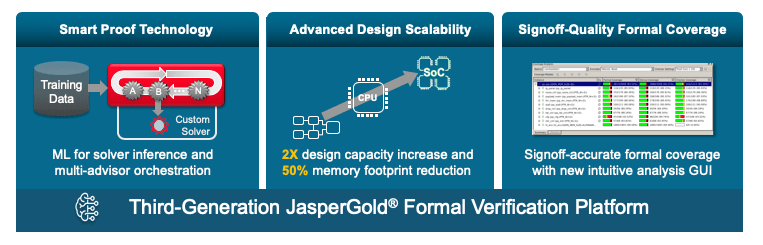

That this is already possible is clear in advances Cadence have made in their new, smart JasperGold Formal Verification Platform. I suspect progress started here first because formal platforms have some things in common with implementation platforms – multiple engines to accomplish a goal in different ways and lots of knobs to control how those engines work. The JasperGold Smart Proof technology exploits these two factors directly. For optimization within an engine (Anirudh calls this ML-inside), the tool provides training data built on 500+ customer designs to parameterize that solver for optimum performance.

There’s also an important optimization in solving across engines. It is common in formal verification to use more than one engine to attempt a problem since engines have different strengths playing to different classes of problem. You could first try one approach and if that doesn’t succeed after some number of cycles, switch to a different approach. But that’s old-school. A newer approach depends on orchestration – a semi-automated method to launch multiple runs, each with a different strategy – to find a best-case outcome as quickly as possible. ML-based orchestration takes this one step further, learning again from runs on those 500+ test cases, how best to optimize that orchestration (Anirudh calls this ML-outside). Orchestration starts with out-of-the-box supervised learning, giving you value from day 1 then adding on-going proof-profiling based on your usage to further improve performance.

What different does this make? Pete Hardee (Dir Product Management at Cadence) tells me that across a representative set of designs, they are seeing ~10X faster properties proven per second, and on a set of known “hard” designs in which it is difficult to get to proofs on many properties, they were able to reduce inconclusive proofs from 46% to 29%.

When you’re king of the hill, you don’t want to rest on just one advance. Pete tells me this new release also compiles 2X faster and in a 2X smaller memory footprint. This has been measured on multiple designs, up to 100M+ gates. Now wait a minute – this is formal, the technology that only works with small designs, right? Pete told me that yes, you’re ultimately going to want to abstract or otherwise reduce to get to a manageable problem for proving, but why force you to do that before you even readin the design? All those steps, including useful analysis (like cone-of-influence) can be done on the build image and you can let the tool take care of the heavy lifting in applying abstraction, etc.

Last and certainly not least, they have put significant work in integrating and simplifying the analysis GUI with aim to support signoff-quality coverage. I’ve talked before how you can integrate formal coverage with dynamic coverage through vManager. They’ve added an all-new coverage analysis GUI in support of the formal side of this, tracking coverage runs versus proof runs, providing easily accessible information on dead-code and over-constraints, a unified view of proof-core and cone-of-influence coverage and a new formal coverage metric looking at both stimuli and checkers.

Not only is Cadence a clear leader in formal, it looks like they’re working hard to stay there. Check out the new updates HERE.

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.