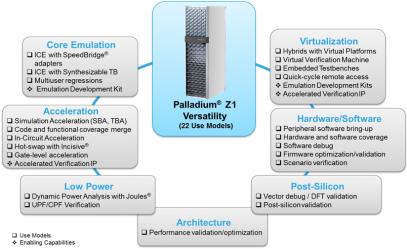

We all know the basic premise of emulation: hardware-assisted simulation running much faster than software-based simulation, with comparable accuracy for cycle-based 0/1 modeling, decently fast setup, and comparably fine-grained debug support. Pretty obvious value for running big jobs with long tests. But emulators tend to be pricey, so you really don’t want them idling waiting for the next big job; how can you leverage that resource so it’s delivering value round the clock? Certainly through virtualization where multiple verification jobs can share a common emulation resource but also by expanding use models beyond the standard “big verification” tasks.

Many of these applications are also familiar – ICE, simulation acceleration, power analysis and co-modeling with software for example. All good use-models in principle, but how are they working out in live projects? I talked with Frank Schirrmeister at DVCon last month to get insight into some customer applications.

I’ll start with simulation acceleration (SA), a use-model where part of the verification task runs in simulation, part runs in emulation and the two parts communicate/synchronize as needed. MicroSemi described their use of this approach at a 2017 DAC session. They had an interesting challenge in moving to an SA configuration since packet-switching within their SoC is controlled by third-party firmware which is often not available during the design phase. They work around this in their UVM testbench (TB) by randomizing packet-switching to cover as many switching scenarios as possible. With this setup, in SA they found a 20X speedup in run-times over pure simulation, not quite as exciting as they expected. They subsequently traced this problem to a high level of communication between the UVM TB and the emulation DUT. Putting a little work into optimizing randomization to lower communication boosted the gain to 40X. As they stepped up design size, they saw even bigger gains. The moral here is that SA can be a big win for simulation workloads if you’re careful to manage communication overhead between the TB and the DUT (which of course should be transaction-based).
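To see why communication matters so much, here is a toy back-of-the-envelope model in Python. It is my own sketch, not anything MicroSemi presented: it assumes the accelerated run-time is simply the DUT time in the emulator plus a fixed wall-clock cost per TB/DUT synchronization. The function name `sa_speedup` and all the numbers are illustrative assumptions.

```python
def sa_speedup(sim_time_s, emu_speedup, n_syncs, sync_cost_s):
    """Estimate end-to-end SA speedup over pure simulation (toy model).

    sim_time_s:   wall-clock time of the pure-simulation run
    emu_speedup:  raw speedup of the DUT once it runs in the emulator
    n_syncs:      number of TB <-> DUT synchronization points in the run
    sync_cost_s:  assumed wall-clock cost of each synchronization
    """
    accelerated_s = sim_time_s / emu_speedup + n_syncs * sync_cost_s
    return sim_time_s / accelerated_s


if __name__ == "__main__":
    # Illustrative numbers only, not measured data.
    chatty = sa_speedup(36000.0, 1000.0, 1_000_000, 0.0015)
    tuned = sa_speedup(36000.0, 1000.0, 500_000, 0.0015)
    print(f"chatty testbench: {chatty:.1f}X")
    print(f"half the syncs:   {tuned:.1f}X")
```

In this model, once synchronization cost dominates, the raw emulator speed barely matters. Halving the number of synchronizations nearly doubles the overall gain, which is the same shape as the 20X to 40X improvement described above.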

Frank also mentioned another interesting acceleration application reported by Infineon. Gate-level simulation is becoming very important for signoff in a number of areas, yet often this is timing-based, where emulation can’t help. But emulation can help in getting through initialization, beyond which interesting problems usually appear. Runs can hot-swap from an emulation start to timing-based simulation, greatly accelerating this signoff analysis. Infineon reported that this mixed flow reduced initialization run-times from 3 days to 45 minutes (roughly a 96X reduction), an obvious win. I would imagine that even in simulation applications where you don’t need timing but you do need 4-state modeling or simply interactive debug, a fast start through emulation would be equally valuable.

At an earlier DAC, Alex Starr of AMD talked about using emulation for power intent verification, by which he meant verifying that the design still works correctly as the design operates in or transitions through many power-state sequences (power-down, power-up, etc.). Alex made the point, common to many power-managed designs today, that verification has to consider all possible sources of power switching and DVFS – firmware-driven, software-driven and hardware-driven – requiring a very complex set of scenarios to be tested. What you want to watch out for is, for example, cases where the CPU gets stuck trying to communicate with a powered-down block, or cases where retention logic states are not correctly restored on power-on.

AMD still does some of this testing in simulation, but where emulation really shines is in being able to run many passes through many power sequences, where simulation might be limited in practice to testing one power sequence. Why is this important? Power state sequencing and mission-mode functionality are largely independent, at least in principle, so to get good coverage across a useful subset of the product of both, you need to run many mission-mode behaviors against many sequences. Alex stressed that being able to run an emulation model against an external C++ stimulus agent gave them confidence that they had reached a level of coverage which would have been impossible to reach in simulation.
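The "product of both" idea can be sketched in a few lines of Python. This is only an illustration of crossing two independent scenario lists and picking a subset that fits a run budget. It is not AMD's flow, and the sequence and test names are hypothetical placeholders.

```python
import itertools
import random

# Hypothetical scenario names, for illustration only.
POWER_SEQUENCES = ["cold_boot", "sleep_wake", "dvfs_step_down", "block_power_gate"]
MISSION_TESTS = ["dma_stream", "cpu_mem_stress", "gfx_render", "io_loopback"]


def cross_scenarios(power_sequences, mission_tests):
    """Return every (power sequence, mission-mode test) pairing."""
    return list(itertools.product(power_sequences, mission_tests))


def pick_subset(scenarios, budget, seed=0):
    """Choose up to `budget` distinct pairings, reproducibly for a given seed."""
    rng = random.Random(seed)
    return rng.sample(scenarios, min(budget, len(scenarios)))


def coverage(run, scenarios):
    """Fraction of the full cross product exercised by the pairings in `run`."""
    return len(set(run) & set(scenarios)) / len(scenarios)


if __name__ == "__main__":
    everything = cross_scenarios(POWER_SEQUENCES, MISSION_TESTS)
    tonight = pick_subset(everything, budget=6)
    print(f"{len(everything)} pairings, covering {coverage(tonight, everything):.0%} tonight")
```

The cross product grows multiplicatively with each list, which is why a platform that can afford many passes reaches coverage that a one-sequence-per-run simulation budget cannot.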

In a different application, when we think of emulation support for firmware we think of development and debug, but Mellanox have used Palladium emulation to help them also profile firmware against the developing hardware. To enable this analysis, they captured instruction pointers, per processor, from their verification runs. Since cycle counts are easily recovered from the run data, they could then run a post process on the emulation results to build the kind of information we normally expect from code profiling (e.g. prof, gprof):

- Map instruction addresses to C code (in the F/W) through e.g. the ELF

- Build a flat profile for each function with how many cycles it consumed, versus line of code

- Build a hierarchical profile showing time consumed by parent/child relationships, versus (hierarchical) lines of code
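The first two steps above can be sketched in Python. This is a minimal illustration under my own assumptions, not Mellanox's actual scripts: the trace is assumed to be a cycle-ordered list of `(cycle, pc)` pairs for one processor, and the symbol table is assumed to be a list of `(start_address, size, name)` tuples already extracted from the ELF by some other tool. All function names here are hypothetical.

```python
import bisect
from collections import Counter


def build_symbol_index(symbols):
    """Sort (start_addr, size, name) tuples and keep the start addresses for bisecting."""
    table = sorted(symbols)
    starts = [start for start, _size, _name in table]
    return starts, table


def lookup(pc, index):
    """Return the name of the function containing `pc`, or None if no symbol covers it."""
    starts, table = index
    i = bisect.bisect_right(starts, pc) - 1
    if i < 0:
        return None
    start, size, name = table[i]
    return name if pc < start + size else None


def flat_profile(trace, index):
    """Charge the cycles between consecutive trace entries to the earlier entry's function.

    The final entry has no successor, so it contributes no cycles.
    """
    cycles = Counter()
    for (cycle, pc), (next_cycle, _next_pc) in zip(trace, trace[1:]):
        cycles[lookup(pc, index) or "<unknown>"] += next_cycle - cycle
    return cycles


if __name__ == "__main__":
    # Made-up symbols and trace, for illustration only.
    index = build_symbol_index([(0x1000, 0x20, "main"), (0x1020, 0x10, "copy_packet")])
    trace = [(0, 0x1000), (5, 0x1024), (12, 0x1004), (15, 0x1008)]
    for name, count in flat_profile(trace, index).most_common():
        print(f"{name:12s} {count} cycles")
```

The hierarchical profile would additionally need call and return tracking to attribute child cycles to their parents, which this sketch leaves out.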

Mellanox noted that they were able to fully profile and optimize their firmware before hardware was available, while also having full visibility down to the cycle level to debug.

I have only touched on a few customer examples here. You can read about a hardware-performance profiling example HERE and another simulation acceleration example HERE. All of these cases highlight ways that Palladium Z1 emulation can be exploited beyond the core use-model (run verification fast). Worth thinking about when you want to maximize the value you can get out of those systems.
