Everyone knows that verification is hard and is consuming an increasing share of time and effort. And everyone should know that system-level verification (SoC plus at least some software and maybe models for other components on a board) is even harder, which is why you see hand-wringing over how incompletely tested these systems are compared to expectations at the IP level.

The problem is not so much the mechanics of verification. We already have good and increasingly well-blended solutions from simulation to emulation to FPGA prototyping. The concept of UVM and constrained random test generation is now being extended to software-driven test generation. There are now tools to enable randomization at the software level, and the developing Portable Stimulus (PS) standard will encourage easy portability of use cases between platforms, so that teams can trade off super-fast analysis against detailed analysis for debug.

The problem at the end of the day is that tools only test what you tell them to test (possibly with some randomization around that). Thanks to the effectively boundless state space of a system-level design, conventional views of test coverage are a non-starter. The burden of deciding how well the design has been tested falls on a (hopefully) well-crafted and vigorously debated verification plan. This has to comprehend not only internally driven and customer-driven requirements but also new standards (ISO 26262, for example) and regulatory requirements (e.g. for medical electronics).

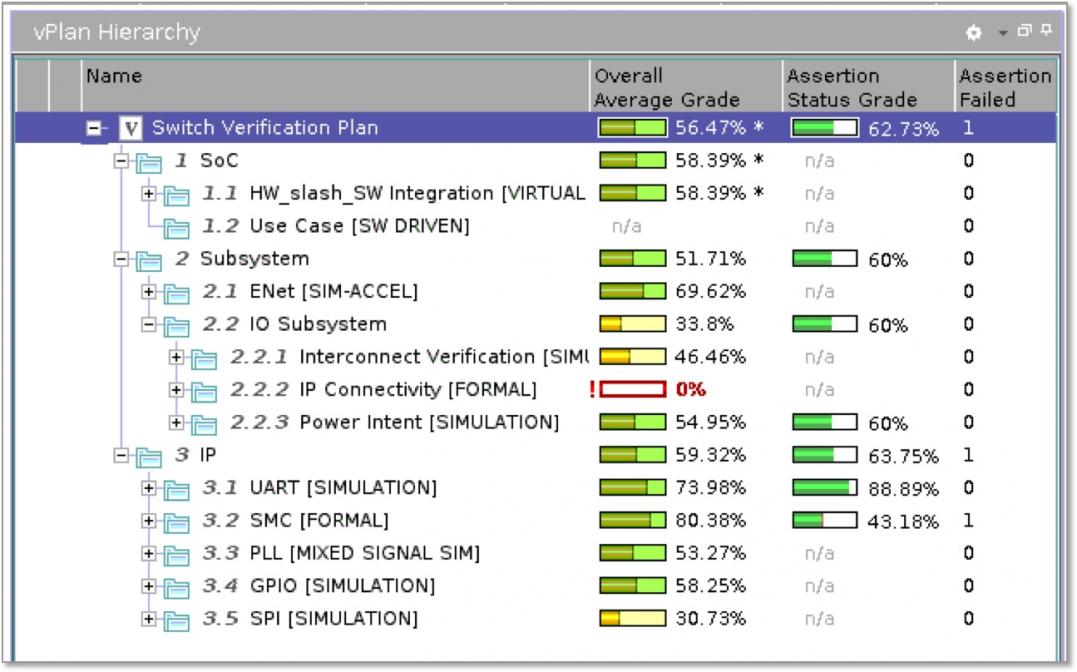

Once you have that verification plan, you can quantify how far you have progressed towards “done” and more accurately predict functional closure. That has to depend on unambiguous metrics across each line in the verification plan: functional metrics, coverage metrics at the software level and hardware level, register and transaction metrics, performance and latency metrics, connectivity metrics for the top level and IOs, safety and security metrics. All of these are quantifiable, unambiguous metrics, covering every aspect of the design. Each of these sections of the agreed-upon plan is then broken down into a long list of sub-items that make up the actual details of the testing. This is metric-driven verification (MDV), now applied at the system level.
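To make the idea of rolling metrics up through a plan concrete, here is a minimal Python sketch. It is purely illustrative: the `PlanItem` class, its fields, the weighting scheme and the numbers are all assumptions made for this example, not the vPlan format or any Cadence API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PlanItem:
    """One line in a hypothetical verification plan (illustrative only)."""
    name: str
    goal: float = 100.0                # target percentage for this item
    measured: Optional[float] = None   # leaf items carry a measured percentage
    weight: float = 1.0                # relative importance within the parent
    children: List["PlanItem"] = field(default_factory=list)

    def score(self) -> float:
        """Progress toward the goal as a percentage, capped at 100."""
        if not self.children:
            measured = self.measured or 0.0   # no data yet counts as 0%
            return min(100.0, 100.0 * measured / self.goal)
        # A parent's score is the weighted average of its children.
        total_weight = sum(child.weight for child in self.children)
        return sum(c.weight * c.score() for c in self.children) / total_weight


# Made-up plan sections and numbers, for illustration.
plan = PlanItem("SoC", children=[
    PlanItem("Functional coverage", measured=80.0),
    PlanItem("Register access", measured=100.0),
    PlanItem("Top-level connectivity", measured=45.0, goal=90.0),
    PlanItem("Latency checks"),  # nothing measured yet
])

for item in plan.children:
    print(f"{item.name:<24} {item.score():5.1f}%")
print(f"{'Overall':<24} {plan.score():5.1f}%")
```

With these made-up numbers the sections score 80%, 100%, 50% and 0%, giving 57.5% overall. A real plan would nest many levels deep and would likely need a more careful weighting policy than a simple average.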

Cadence Incisive vManager provides a platform to define the test plan (vPlan in Cadence terminology) in an executable format, and also provides the means to roll up current stats from each of the verification teams and each of the many sources of verification data. You get an at-a-glance view of how far you have progressed on each coverage goal, and you can drill down wherever you need to understand what remains to be done and perhaps where you need to add staffing to speed up progress.

One very interesting example where new tools are playing a role in getting to coverage closure is the increasing use of formal verification to test for unreachable cases. When you see in vManager that coverage on some aspect has flattened at something below 100%, it may be time to deploy formal proving to see if those last few percent are heavily populated by unreachable states. If so, you can call a halt to further testbench development for that item, because you know you are done.
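The arithmetic behind that decision can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: coverage bins are represented as plain string names, the plateau test (`has_plateaued`, with its `window` and `min_gain` parameters) is a hypothetical heuristic, and the set of unreachable bins is assumed to come from a formal tool's proof results.

```python
from typing import AbstractSet, Sequence


def coverage_pct(all_bins: AbstractSet[str], hit: AbstractSet[str],
                 unreachable: AbstractSet[str] = frozenset()) -> float:
    """Coverage over reachable bins only, as a percentage."""
    if hit & unreachable:
        # A bin cannot be both hit and proven unreachable; flag the conflict.
        raise ValueError(f"bins both hit and unreachable: {sorted(hit & unreachable)}")
    reachable = all_bins - unreachable
    if not reachable:
        return 100.0
    return 100.0 * len(hit & reachable) / len(reachable)


def has_plateaued(history: Sequence[float], window: int = 3,
                  min_gain: float = 0.1) -> bool:
    """True if coverage rose by less than min_gain over the last `window` runs."""
    if len(history) < window + 1:
        return False
    return history[-1] - history[-1 - window] < min_gain


# Made-up data: 50 bins, 47 hit, the last 3 proven unreachable.
all_bins = {f"bin{i}" for i in range(50)}
hit = {f"bin{i}" for i in range(47)}
unreachable = {"bin47", "bin48", "bin49"}

history = [88.0, 93.5, 94.0, 94.0, 94.0, 94.0]  # percent per regression run
if has_plateaued(history):
    print(f"raw:      {coverage_pct(all_bins, hit):.1f}%")
    print(f"adjusted: {coverage_pct(all_bins, hit, unreachable):.1f}%")
```

Here raw coverage is stuck at 94%, but once the three unreachable bins are excluded the adjusted figure is 100%, which is the signal that more testbench work on this item would be wasted.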

While metric-driven verification is not new, Cadence Design Systems pioneered vManager in 2005 to encapsulate these concepts, driving a more automated and verifiable foundation for system signoff. Instead of using spreadsheets, you can roll up coverage from simulation, from static and formal analysis, and from software-driven verification on emulation and prototyping platforms. Cadence plans to continue integrating yet more metrics across this broad spectrum of technologies to further augment this capability.
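As a rough picture of what rolling up coverage from several engines involves, the following Python sketch merges per-engine hit lists into one view. It assumes, hypothetically, that every engine reports hits against the same set of named bins; in practice, aligning names and hierarchies across tools is a large part of the work, and nothing here reflects how vManager stores or merges its data.

```python
from collections import defaultdict
from typing import Dict, Set


def merge_coverage(results: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Map each covered bin to the set of engines that hit it."""
    merged: Dict[str, Set[str]] = defaultdict(set)
    for engine, bins in results.items():
        for bin_name in bins:
            merged[bin_name].add(engine)
    return dict(merged)


# Made-up bin names and per-engine results, for illustration.
plan_bins = {"boot_seq", "dma_burst", "irq_mask", "pwr_down", "ecc_err"}
results = {
    "simulation": {"boot_seq", "dma_burst"},
    "formal": {"dma_burst", "irq_mask"},
    "emulation": {"pwr_down"},
}

merged = merge_coverage(results)
missing = plan_bins - merged.keys()
percent = 100.0 * len(plan_bins & merged.keys()) / len(plan_bins)

print(f"merged coverage: {percent:.1f}%")
print(f"still uncovered: {sorted(missing)}")
```

Keeping track of which engine hit each bin, rather than only taking the union, also shows where engines overlap and where a single engine is carrying an item alone.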
