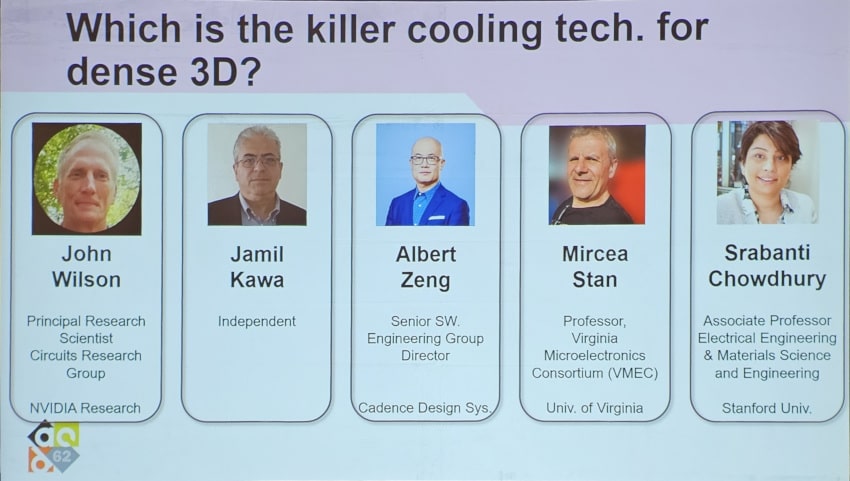

Power densities on chips increased from 50-100 W/cm² in 2010 to 200 W/cm² in 2020, making it a significant challenge to remove and spread heat while keeping chip operation reliable. The DAC 2025 panel discussion on new cooling strategies for future computing featured experts from NVIDIA Research, Cadence, ESL/EPFL, the University of Virginia, and Stanford University. I’ll condense the 90-minute discussion into this blog.

Four techniques to mitigate thermal challenges were introduced:

- Circuit Design, Floor Planning, Place and Route

- Liquid or Cryogenic Cooling

- System-level, Architectural

- New cooling structures and materials

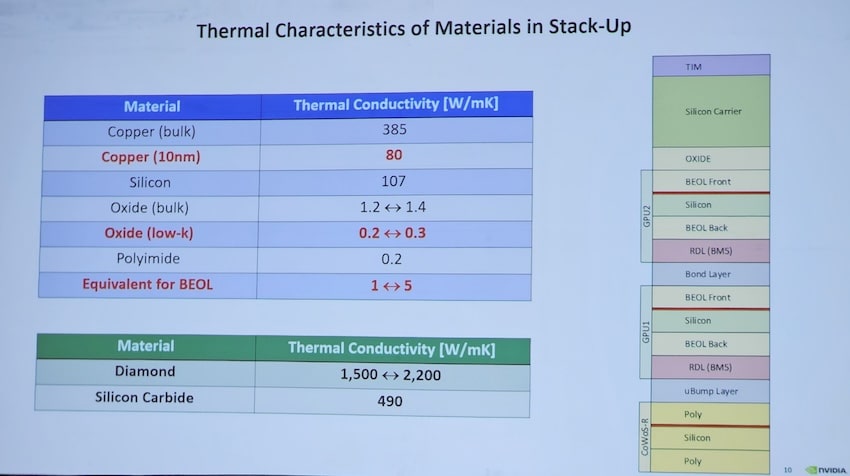

For circuit design, there are temperature-aware floor planning tools, PDN optimization, temperature-aware TSVs, and the use of 2.5D chiplets. Cooling approaches include single-phase cold plates, inter-layer liquid cooling, two-phase cooling, and cryogenic cooling in the 150 K to 70 K range. System-level approaches include advanced task mapping, interleaving memory and compute blocks, and temperature-aware power optimization. The new cooling structures and materials involve diamond, copper nanomesh, and even phase-change materials.

John Wilson from NVIDIA talked about a 1,000X increase in single-chip AI performance in FLOPS over just 8 years, going from the Pascal series to the Blackwell series. Thermal design power has gone from 106 W in 2010 to 1,200 W in 2024. Data centers using Blackwell GPUs use liquid cooling to attain a power usage effectiveness (PUE) of 1.15 to 1.2, providing a 2X reduction in overhead power. At the chip level, heat from small hotspots spreads quickly, while heat from larger hotspots spreads slowly. GPU temperatures depend on the silicon carrier thickness and the type of stacking. Stack-up materials such as diamond and silicon carbide also affect thermal characteristics.
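To make the PUE figures concrete, here is a minimal sketch of the arithmetic. PUE is total facility power divided by IT equipment power, so the overhead (cooling, power conversion, and so on) is the IT power times (PUE - 1). The 1 MW IT load and the air-cooled baseline PUE of 1.4 are assumptions chosen for illustration, not numbers from the panel.

```python
def overhead_power_kw(it_power_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, etc.) implied by a PUE value."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_power_kw * (pue - 1.0)


IT_LOAD_KW = 1000.0        # hypothetical 1 MW IT load (assumption)
ASSUMED_AIR_PUE = 1.4      # hypothetical air-cooled baseline (assumption)

# Overhead at the liquid-cooled PUE range quoted in the talk.
for pue in (1.15, 1.2):
    overhead = overhead_power_kw(IT_LOAD_KW, pue)
    print(f"PUE {pue:.2f}: {overhead:.0f} kW overhead per {IT_LOAD_KW:.0f} kW of IT load")

# Ratio of overhead power between the assumed baseline and PUE 1.2.
ratio = overhead_power_kw(IT_LOAD_KW, ASSUMED_AIR_PUE) / overhead_power_kw(IT_LOAD_KW, 1.2)
print(f"Overhead reduction vs. assumed baseline: {ratio:.1f}X")
```

Under these assumptions, a 2X reduction in overhead corresponds to moving from a PUE of roughly 1.4 down to 1.2.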

One future cooling solution is the silicon microchannel cold plate.

Jamil Kawa said that the energy needs of AI-driven big-data compute farms already exceed our projected power generation capacity through 2035, to the point that Microsoft is reviving the nuclear reactor at Three Mile Island for its data center energy needs. This is not a sustainable path. A lower energy consumption per instruction (or per switching bit) is needed, and cold computing provides this answer even after all cooling costs are taken into account. Some alternative technologies are very energy efficient at cryogenic temperatures, such as Josephson junction-based superconducting electronics operated below 4 K, but they have major limitations, chiefly an area per function more than 1,000 times that of CMOS. Therefore, CMOS technology operated in a liquid nitrogen environment, with an operating range of 77 K to 150 K, is the answer. Operating CMOS in that range allows a much lower VDD (power supply) at iso-performance, which generates much less heat to dissipate. The cooling costs of liquid nitrogen are offset by the dramatically lower total power to be dissipated at iso-performance.

David Atienza, a professor at EPFL, talked about quantum computing, superconducting circuits, and HPC challenges. He said the temperatures for superconducting circuits used in quantum computing are in the low-Kelvin range. Further, for an HPC chip to be feasible, dark silicon is required to reduce power. Microsoft plans to restart the Three Mile Island power plant to power its AI data centers. Liquid nitrogen can be used to lower the temperature and increase the speed of CMOS circuits. Some cold CMOS circuits can run at a VDD of just 350 mV to manage power.
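The lower-VDD argument above follows from the standard first-order model of CMOS dynamic power, P = α·C·V²·f. The sketch below compares two supply voltages at the same clock frequency (iso-performance). The 0.7 V nominal supply, the activity factor, the capacitance, and the frequency are all illustrative assumptions; only the 350 mV figure comes from the talk. Leakage power and the cost of cooling are deliberately left out.

```python
def dynamic_power_w(activity: float, cap_farads: float, vdd: float, freq_hz: float) -> float:
    """First-order CMOS dynamic power: P = alpha * C * V^2 * f."""
    return activity * cap_farads * vdd ** 2 * freq_hz


ACTIVITY = 0.1          # hypothetical switching activity factor
CAP_F = 1e-9            # hypothetical total switched capacitance (1 nF)
FREQ_HZ = 2e9           # hypothetical 2 GHz clock, held constant (iso-performance)
NOMINAL_VDD = 0.7       # hypothetical room-temperature supply voltage
COLD_VDD = 0.35         # the 350 mV cold-CMOS figure mentioned in the talk

p_nominal = dynamic_power_w(ACTIVITY, CAP_F, NOMINAL_VDD, FREQ_HZ)
p_cold = dynamic_power_w(ACTIVITY, CAP_F, COLD_VDD, FREQ_HZ)

print(f"Nominal: {p_nominal:.4f} W, cold: {p_cold:.4f} W")
print(f"Cold / nominal dynamic power: {p_cold / p_nominal:.2f}")
```

Because power scales with the square of VDD, halving the supply at the same frequency cuts dynamic power to about a quarter in this model, which is the headroom that has to pay for the cryogenic cooling.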

Albert Zeng, Sr. Software Engineering Group Director at Cadence, said they have thermal simulation software for the whole stack, starting with Celsius Thermal Solver used on chips, all the way up to the Reality Digital Twin Platform. Thermal analysis started in EDA with PCB and packages and is now extending into 3D chips. In addition to thermal for 3D, there are issues with stress and the impact of thermal on timing and power, which require a multi-physics approach.

Entering any data center requires wearing headphones to protect against the loud noise of the cooling system fans. A system-level analysis of cooling capacity is necessary, as GB200-based data centers require liquid cooling and AI workloads are only increasing over time.

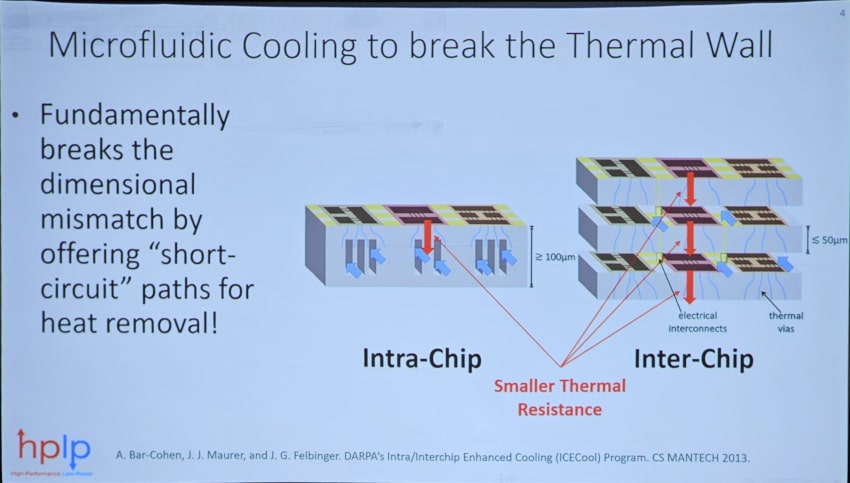

Mircea Stan, a professor from VMEC, presented ideas on limiting heat generation and efficiently removing heat. The 3D-IC approach runs into two limits: a power delivery wall and a thermal wall. Voltage stacking can be used to break the power delivery wall, and microfluidic cooling will help break the thermal wall.
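A rough sketch of why voltage stacking eases power delivery: if the layers are connected in series rather than in parallel, the supply delivers the same total power at N times the voltage and therefore 1/N of the current, so resistive loss in the delivery network drops by N². The layer count, per-layer power, VDD, and network resistance below are hypothetical, and the model assumes perfectly balanced layers and the same delivery resistance in both cases, which real designs do not get for free.

```python
def supply_current_a(layer_power_w: float, vdd: float, layers: int, stacked: bool) -> float:
    """Current drawn from the supply for `layers` identical layers.

    Parallel (conventional): supply voltage is vdd.
    Series (voltage-stacked): supply voltage is layers * vdd.
    """
    total_power = layer_power_w * layers
    supply_voltage = vdd * layers if stacked else vdd
    return total_power / supply_voltage


def delivery_loss_w(current_a: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in the power delivery network."""
    return current_a ** 2 * resistance_ohm


LAYERS = 4              # hypothetical number of stacked dies
LAYER_POWER_W = 100.0   # hypothetical power per die
VDD = 0.8               # hypothetical per-die supply voltage
R_PDN_OHM = 1e-4        # hypothetical delivery resistance (0.1 milliohm)

i_parallel = supply_current_a(LAYER_POWER_W, VDD, LAYERS, stacked=False)
i_stacked = supply_current_a(LAYER_POWER_W, VDD, LAYERS, stacked=True)
loss_parallel = delivery_loss_w(i_parallel, R_PDN_OHM)
loss_stacked = delivery_loss_w(i_stacked, R_PDN_OHM)

print(f"Parallel: {i_parallel:.0f} A, {loss_parallel:.2f} W lost in delivery")
print(f"Stacked:  {i_stacked:.0f} A, {loss_stacked:.2f} W lost in delivery")
```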

VMEC has created an EDA tool called HotSpot 7.0 that models and simulates a die thermal circuit, a microfluidic thermal circuit, and a pressure circuit.
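To give a feel for what a "thermal circuit" means, here is a minimal single-node sketch: the die is one thermal capacitance connected to ambient through one thermal resistance, and temperature plays the role of voltage while power plays the role of current. This is not HotSpot's model or API, which solves much larger networks; the function name and all parameter values are assumptions for illustration.

```python
def simulate_die_temperature(power_w, r_th_k_per_w, c_th_j_per_k,
                             t_ambient_c, dt_s, steps):
    """Explicit-Euler integration of C * dT/dt = P - (T - T_ambient) / R."""
    temp = t_ambient_c
    history = []
    for _ in range(steps):
        heat_out_w = (temp - t_ambient_c) / r_th_k_per_w   # heat leaving via R
        temp += dt_s * (power_w - heat_out_w) / c_th_j_per_k
        history.append(temp)
    return history


# Hypothetical values: 100 W die, 0.2 K/W to ambient, 50 J/K thermal mass.
# The time constant is R * C = 10 s, so 100 s of simulated time reaches steady state.
history = simulate_die_temperature(
    power_w=100.0, r_th_k_per_w=0.2, c_th_j_per_k=50.0,
    t_ambient_c=25.0, dt_s=0.01, steps=10_000,
)

steady_state_c = 25.0 + 100.0 * 0.2   # T_ambient + P * R
print(f"Final simulated temperature: {history[-1]:.2f} C")
print(f"Analytic steady state:       {steady_state_c:.2f} C")
```

The same analogy extends to a network of many nodes, which is where a dedicated tool becomes necessary.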

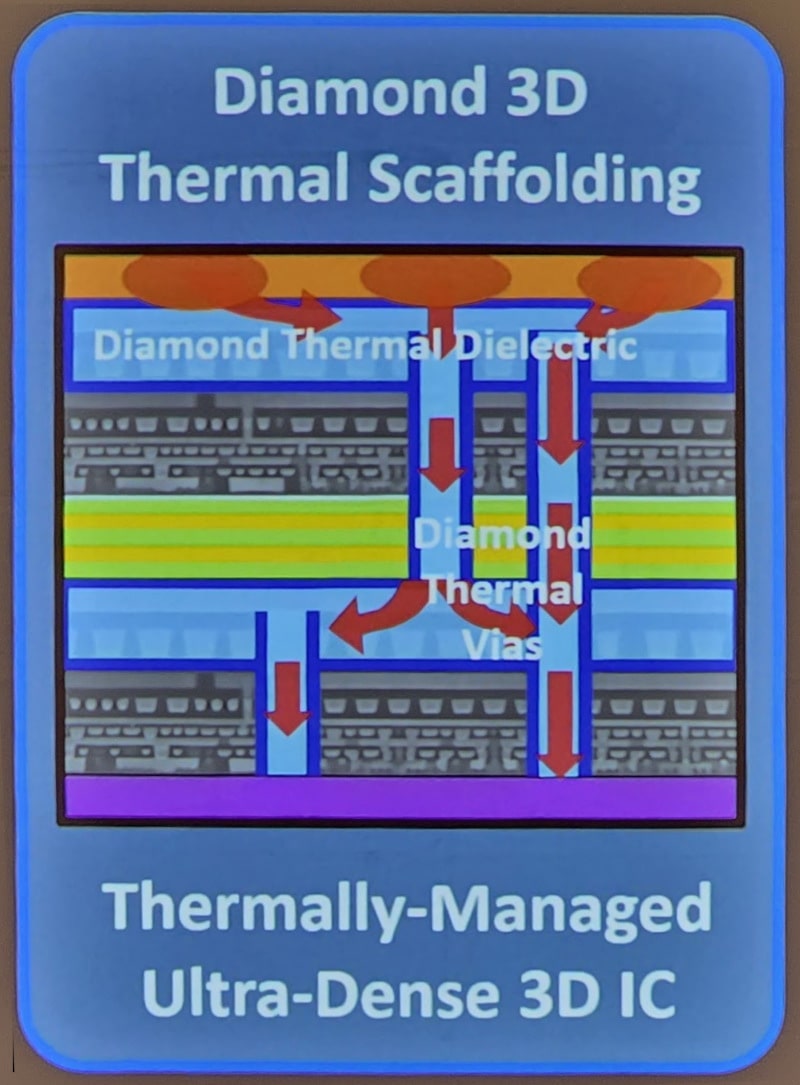

Srabanti Chowdhury from Stanford University talked about maximizing device-level efficiency that scales to the system level. Initially, heat sinks and package fins were used for 2D processors to manage thermal issues. An ideal thermal solution would spread heat within nanometers of any hotspot, both laterally and vertically, and integrate with existing semiconductor processing materials. Their research has shown that diamond 3D thermal scaffolding is a viable technique for 3D-IC thermal management.

Stanford researchers have been developing this diamond thermal dielectric since 2016 and are currently in the proof-of-concept phase.

Q&A

Q: What about security and thermal issues?

A: Jamil – Yes, thermal hacking is a security issue. Attackers use such schemes to read secret data, so side-channel mitigation techniques are used to counter them.

Q: Is there a winning thermal technology?

A: Liquid cooling on the edge is coming, but the overhead of microfluidics has to be justified by its benefit.

Q: Can we do thermal management with AI engines?

A: Albert – We can use AI models to help with early-design power and thermal estimates for ICs. Data centers are designed with models of the servers, and AI models are used to control the workloads.

Q: Can we convert heat into something useful on the chip?

A: Sorry, no. Converting the heat into something useful is not practical, because we cannot convert heat into electricity without generating even more heat.

Q: What about keeping the temperature more constant?

A: Srabanti – The workloads are too variable to moderate the temperature swings. A change in materials to moderate heat is more viable.

Q: What is the future need for thermal management?

A: Jamil – Our energy needs today already exceed our projected energy generation capacity, so the solution is to consume much less energy at a given performance level by operating at a much lower supply voltage and a lower temperature. A study of a liquid nitrogen-cooled GPU at 77 K found cooling costs on par with forced-air cooling, but with a 17% performance advantage.

Q: What about global warming?

A: Liquid nitrogen is at 77 K, a sweet spot to use. Placing a GPU in liquid nitrogen costs about the same as forced-air cooling, but with improved performance.

Q: For PUE metrics, what about the efficiency of servers per workload?

A: Albert – PUE should be measured at full workload capacity.

Q: Have you tried anything cryogenic?

A: John – Not at NVIDIA yet.

