AI and ML techniques are popular topics, yet anyone who wants to design and build an AI accelerator for inferencing faces considerable challenges: the team must understand how to model a neural network in a language like Python, turn that model into RTL, and then verify that the RTL matches the Python model. Researchers from CERN, Fermilab and UC San Diego have made progress in this area by developing hls4ml, an open source Python package for machine learning inference in FPGAs. The promise of this approach is to translate models from machine learning packages into HLS, shortening development time.
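To make the verification step concrete, here is a minimal sketch of checking that hardware-style arithmetic agrees with a floating-point Python model. It assumes 16-bit signed fixed point with 6 integer bits, which is an illustrative choice and not something stated by Siemens or the hls4ml authors. The function names, test data and tolerance are all hypothetical.

```python
def quantize(x, total_bits=16, int_bits=6):
    """Round x onto a signed fixed-point grid and saturate at the range limits.

    The 16/6 bit split is an assumption made for this sketch.
    """
    frac_bits = total_bits - int_bits
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    code = max(lo, min(hi, round(x * scale)))
    return code / scale


def dense_float(x, weights, biases):
    """Reference dense layer in floating point (one weight row per output)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def dense_fixed(x, weights, biases):
    """Same layer with inputs, weights, biases and outputs quantized."""
    xq = [quantize(v) for v in x]
    out = []
    for row, b in zip(weights, biases):
        # Accumulate without intermediate rounding, then round the result once.
        acc = sum(quantize(w) * xi for w, xi in zip(row, xq)) + quantize(b)
        out.append(quantize(acc))
    return out


x = [0.5, -1.25, 2.0]
weights = [[0.1, 0.2, -0.3], [0.7, -0.5, 0.25]]
biases = [0.05, -0.1]

reference = dense_float(x, weights, biases)
emulated = dense_fixed(x, weights, biases)
max_error = max(abs(r - e) for r, e in zip(reference, emulated))
matches = max_error < 0.01  # hypothetical tolerance for this toy layer
```

A real flow would compare the Python model against simulated C++ or RTL output over many input vectors; the sketch only shows the shape of that comparison.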

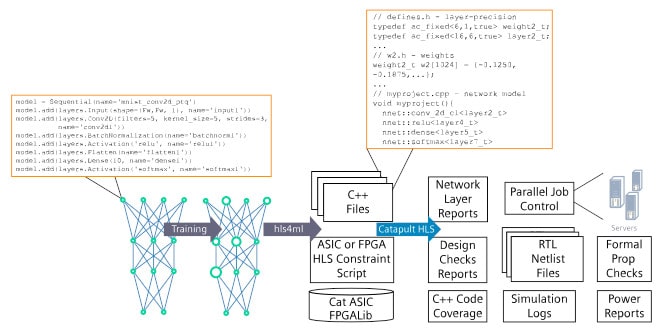

I spoke last week with David Burnette, Director of Engineering for Catapult HLS at Siemens, to learn how they have been working with Fermilab and other contributors over the past two years on extending hls4ml to support both ASIC and FPGA implementations. The new Siemens tool is called Catapult AI NN. It takes in the neural network description as Python code, converts that to C++, and then synthesizes the result into RTL code in Verilog or VHDL.

Data scientists working in AI and ML are apt to use Python for their neural network models, yet they are not experts in C++, RTL or hardware concepts. Manually translating from Python into RTL simply takes too much time, is error prone, and produces code that is not easily updated or changed. Catapult AI NN allows an architect to stay with Python for modeling neural networks, then use automation to create C++ and RTL code quickly. This approach allows a team to explore tradeoffs of power, area and performance in hours or days, not months or years.

One tradeoff that Catapult AI NN exposes is how much parallelism to use in hardware. You could start by asking for the fastest network, which likely results in a larger chip area, or ask for the smallest design, which costs speed. Quick iterations help a project converge on a more optimal AI accelerator.
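The speed versus area tradeoff can be sketched with a first-order model. It assumes a fully connected layer in which each hardware multiplier is shared across `reuse_factor` multiplications, so more sharing means fewer multipliers but more cycles. The function and parameter names are hypothetical, and the numbers are not what Catapult AI NN would report for a real design.

```python
def dense_layer_cost(n_in, n_out, reuse_factor):
    """Return (multipliers, cycles) for one dense layer under a toy model.

    Assumption: each multiplier performs `reuse_factor` multiplications in
    sequence, one per cycle. Adders, memory and control logic are ignored.
    """
    total_mults = n_in * n_out
    if reuse_factor < 1 or total_mults % reuse_factor != 0:
        raise ValueError("reuse_factor must evenly divide n_in * n_out")
    multipliers = total_mults // reuse_factor
    cycles = reuse_factor
    return multipliers, cycles


# Sweep from fully parallel (fastest, largest) to heavily shared (slowest, smallest).
sweep = {rf: dense_layer_cost(64, 32, rf) for rf in (1, 4, 32, 2048)}
for rf, (mults, cycles) in sweep.items():
    print(f"reuse_factor={rf:5d}  multipliers={mults:5d}  cycles={cycles:5d}")
```

Under this model the product of multipliers and cycles stays constant, which is why each design point trades one for the other.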

MNIST is a widely used database of handwritten digits, with 60,000 training images and 10,000 testing images. A Python neural network model can be written to process and classify these images, then run through Catapult AI NN to produce RTL code in just minutes. Design teams that need hardware that performs object classification and object detection will benefit from using this new Python to RTL automation.
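As a rough idea of what such a classifier looks like, here is a minimal pure-Python sketch of a small fully connected network sized for 28x28 MNIST images and 10 digit classes. The hidden layer sizes are hypothetical, the weights are random and untrained, and the input is a blank stand-in image, so the predicted digit carries no meaning. A real model would be built and trained in a framework such as Keras or PyTorch.

```python
import random

# 784 inputs (28 x 28 pixels), two hypothetical hidden layers, 10 digit classes.
LAYER_SIZES = [784, 64, 32, 10]


def init_layers(sizes, seed=0):
    """Create (weights, biases) per layer with small random, untrained weights."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def forward(layers, pixels):
    """Run the network: dense layers with ReLU on all but the last layer."""
    act = pixels
    for i, (weights, biases) in enumerate(layers):
        act = [sum(w * a for w, a in zip(row, act)) + b
               for row, b in zip(weights, biases)]
        if i < len(layers) - 1:
            act = [max(0.0, v) for v in act]
    return act


def classify(layers, pixels):
    """Return the index of the highest output score as the predicted digit."""
    scores = forward(layers, pixels)
    return max(range(len(scores)), key=scores.__getitem__)


def count_parameters(layers):
    """Total number of weights and biases the hardware would need to store."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


layers = init_layers(LAYER_SIZES)
image = [0.0] * 784  # stand-in for one flattened 28x28 image
digit = classify(layers, image)
n_params = count_parameters(layers)
```

Even this small network holds tens of thousands of parameters, which gives a sense of why hand-written RTL for each model revision is impractical.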

Machine learning professionals who are used to tools like TensorFlow, PyTorch or Keras can stay in their favored language domain while automating the hardware implementation with a new tool. When using Catapult AI NN, users can see how their Python neural network parameters correlate to RTL code, read reports on implementation area, measure the performance throughput per layer, and know where their neural network is spending time. To speed up High-Level Synthesis, a user can choose to distribute jobs for hundreds of layers at one time instead of running them sequentially.
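The idea of dispatching per-layer jobs at once rather than one after another can be sketched with Python's standard `concurrent.futures` module. This is only an illustration of the scheduling pattern: `synthesize_layer` is a hypothetical stand-in that sleeps briefly, and nothing here reflects how Catapult AI NN actually launches or distributes its jobs.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def synthesize_layer(name):
    """Hypothetical stand-in for one per-layer synthesis job."""
    time.sleep(0.01)  # pretend to do work
    return name, "done"


def run_sequential(names):
    """Process layers one after another."""
    return [synthesize_layer(n) for n in names]


def run_distributed(names, max_workers=8):
    """Process layers concurrently; map() returns results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(synthesize_layer, names))


layer_names = [f"layer_{i}" for i in range(16)]
results = run_distributed(layer_names)
```

Because each layer's job is independent in this sketch, the concurrent version returns the same results as the sequential one while finishing sooner as the worker count grows.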

Summary

There is a new, quicker path to designing AI accelerators that replaces manual translation from Python neural network code to RTL on the way to an FPGA or ASIC implementation. With Catapult AI NN there’s the promise of quickly moving from neural network models written in Python to C++ and RTL, for both FPGA and ASIC domains. This new methodology enables rapid tradeoffs, so teams can optimize the power, performance and area of their AI accelerators.

Inferencing at the Edge is a popular goal for many product design groups, and this announcement should attract their attention as a way to help meet their stringent goals with less effort, and less time for design and verification. Fermilab has used this approach for particle detector applications, so that their AI experts can create efficient hardware without becoming ASIC designers.

Read the Siemens press release.

Related Blogs

- An Update on HLS and HLV

- A Fresh Look at HLS Value

- HLS in a Stanford Edge ML Accelerator Design

- Computer Vision Design with HLS
