For a long time, memories were the primary technology driver for process development. If you built memories, you got access to cutting-edge process information. If you built other products, this could give you a competitive edge. In many cases, FPGAs are replacing memories as the driver for advanced processes. The technology… Read More

Webinar – FPGA Native Block Floating Point for Optimizing AI/ML Workloads

Block floating point (BFP) has been around for a while but is just now starting to be seen as a very useful technique for performing machine learning operations. It’s worth pointing out up front that bfloat is not the same thing. BFP combines the efficiency of fixed point operations and also offers the dynamic range of full floating… Read More
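To make the idea concrete, here is a minimal Python sketch of block floating point in general, not of any vendor's hardware implementation. It assumes a block of values shares one exponent chosen from the largest magnitude in the block, with each value stored as a small signed integer mantissa. The function names (`bfp_quantize`, `bfp_dequantize`, `bfp_dot`) and the 8-bit mantissa width are illustrative assumptions.

```python
import math

def bfp_quantize(block, mantissa_bits=8):
    """Quantize floats to signed integer mantissas plus one shared exponent."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return [0] * len(block), 0
    # math.frexp returns (m, e) with max_abs == m * 2**e and 0.5 <= m < 1.
    _, e = math.frexp(max_abs)
    # Pick the shared exponent so the largest value fits the mantissa width.
    shared_exp = e - (mantissa_bits - 1)
    scale = 2.0 ** shared_exp
    limit = 2 ** (mantissa_bits - 1) - 1
    # Round to the nearest integer and clamp to the symmetric signed range.
    mantissas = [max(-limit, min(limit, round(x / scale))) for x in block]
    return mantissas, shared_exp

def bfp_dequantize(mantissas, shared_exp):
    """Recover approximate floats from mantissas and the shared exponent."""
    return [m * 2.0 ** shared_exp for m in mantissas]

def bfp_dot(a, b, mantissa_bits=8):
    """Dot product using integer multiply-accumulate on the mantissas."""
    ma, ea = bfp_quantize(a, mantissa_bits)
    mb, eb = bfp_quantize(b, mantissa_bits)
    acc = sum(x * y for x, y in zip(ma, mb))  # pure integer arithmetic
    return acc * 2.0 ** (ea + eb)             # apply both exponents once

if __name__ == "__main__":
    a = [1.0, 0.5, -0.25, 0.125]
    b = [2.0, 2.0, 2.0, 2.0]
    print(bfp_quantize(a))
    print(bfp_dot(a, b))
```

The inner loop of `bfp_dot` works only on integers, which is where the fixed-point efficiency comes from. The exponents are applied once per block, which is what preserves dynamic range across blocks.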

FPGAs in the 5G Era!

FPGAs, today and throughout the history of semiconductors, have played a critical role in design enablement and electronic systems, which is why we included the history of FPGAs in our book “Fabless: The Transformation of the Semiconductor Industry” and added a new chapter in the 2019 edition on the history of Achronix.

In a recent blog… Read More

Network on Chip Brings Big Benefits to FPGAs

The conventional thinking about programmable solutions such as FPGAs is that you have to be willing to make a lot of trade-offs for their flexibility. This has certainly been the case in many instances. Even just getting data across the chip can eat up valuable routing resources and add a lot of overhead. These problems are exacerbated… Read More

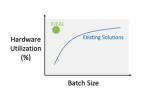

Characteristics of an Efficient Inference Processor

The market opportunities for machine learning hardware are becoming more clearly defined, with the following (rather broad) categories emerging:

- Model training: models are evaluated at the “hyperscale” data center, utilizing either general-purpose processors or specialized hardware, with a typical numeric precision of 32 bits

New Generation of FPGA Based Distributed Accelerator Cards Offer High Performance and Adaptability

We have learned from nature that two characteristics are helpful for success: diversity and adaptability. The same has been shown to be true for computing systems. Things have come a long way from when CPU-centric computing was the only choice. Much heavy lifting these days is done by GPUs, ASICs, and FPGAs, with CPUs in a support … Read More

Achronix Announces New Accelerator Card at Linley Fall Processor Conference – VectorPath

This blog is my third blog from this year’s Linley Fall Processor Conference. The first two blogs focused on edge inference solutions. Achronix’s discussion was much broader than just AI/ML; it was about where FPGAs have been going and culminated with a product announcement preview. I’ll get to the announcement in a moment, … Read More

BittWare PCIe server card employs high throughput AI/ML optimized 7nm FPGA

Back in May I wrote an article on the new Speedster7t from Achronix. This chip brings together Network on Chip (NoC) interconnect, high speed Ethernet and memory connections, and processing elements optimized for AI/ML. Speedster7t is a very exciting new FPGA that can be used effectively to accelerate a wide range of processing… Read More

Efficiency – Flex Logix’s Update on InferX™ X1 Edge Inference Co-Processor

Last week I attended the Linley Fall Processor Conference held in Santa Clara, CA. This blog is the first of three blogs I will be writing based on things I saw and heard at the event.

In April, Flex Logix announced its InferX X1 edge inference co-processor. At that time, Flex Logix announced that the IP would be available and that a chip,… Read More

Free webinar – Accelerating data processing with FPGA fabrics and NoCs

FPGAs have always been a great way to add performance to a system. They are capable of parallel processing and have the added bonus of reprogrammability. Achronix has helped boost their utility by offering on-chip embedded FPGA fabric for integration into SoCs. This has had the effect of boosting data rates through these systems… Read More
