Synopsys recently held a webinar on this topic, led by Gustavo Pimentel, Principal Product Marketing Manager at the company. Going into the webinar, I found myself wondering: why focus on PCIe 5.0 more than six years after the specification’s release in 2019? With the industry buzzing about Edge AI, cloud computing, and high-performance applications, it felt like talking about “old news.” That curiosity turned out to mirror several audience questions in the Q&A session. Another common question was whether it made sense to skip PCIe 5.0 entirely and jump straight to PCIe 6.0.

Gustavo offered clear answers. He explained why, for most applications today, migrating from PCIe 4.0 to PCIe 5.0 is the practical path. PCIe 6.0 is only warranted if a customer’s application absolutely demands it. The discussion covered both design techniques and architectural integration strategies, showing how PCIe 5.0 remains a highly flexible solution for balancing power, performance, area, and latency tradeoffs across industries.

Edge AI: Driving the Next Cycle of Innovation

Edge AI is no longer a futuristic concept; it is driving real change across devices and data centers. By processing data closer to the source, it improves privacy, strengthens security, and delivers faster, more personalized user experiences, all while reducing reliance on the cloud. AI workloads themselves are growing at an unprecedented rate. Estimates suggest that by 2027–2028, about 50% of data center capacity will be AI-driven, up from 20% today. AI model sizes double roughly every four to six months, far outpacing Moore’s Law, and processing demands continue to escalate.
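To put that growth rate in perspective, a quantity that doubles every T months grows by a factor of 2^(12/T) per year. The short sketch below compares the four-to-six-month figure quoted above with a roughly 24-month doubling period, which is the commonly cited approximation of Moore’s Law and is used here only as an illustrative point of comparison.

```python
def annual_growth(doubling_months: float) -> float:
    """Growth factor over 12 months for a quantity that doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)

# Doubling periods in months. The 24-month Moore's Law value is the commonly
# quoted approximation, included only as a point of comparison.
periods = {
    "AI model size (fast case)": 4,
    "AI model size (slow case)": 6,
    "Moore's Law (approx.)": 24,
}

for label, months in periods.items():
    print(f"{label:28s} doubles every {months:2d} months -> x{annual_growth(months):.2f} per year")
```

A four-month doubling works out to 8x per year and a six-month doubling to 4x per year, compared with roughly 1.4x per year for a two-year doubling.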

To handle this explosion of data, devices and systems need interconnects that can keep pace. PCIe 5.0, with its high bandwidth and low latency, is well suited to letting edge SoCs process AI workloads efficiently while staying within strict power and area budgets. Its role is particularly critical in applications where latency, power, and space are all tightly constrained, such as autonomous vehicles, mobile devices, and embedded AI systems.

Why PCIe 5.0 Remains Relevant

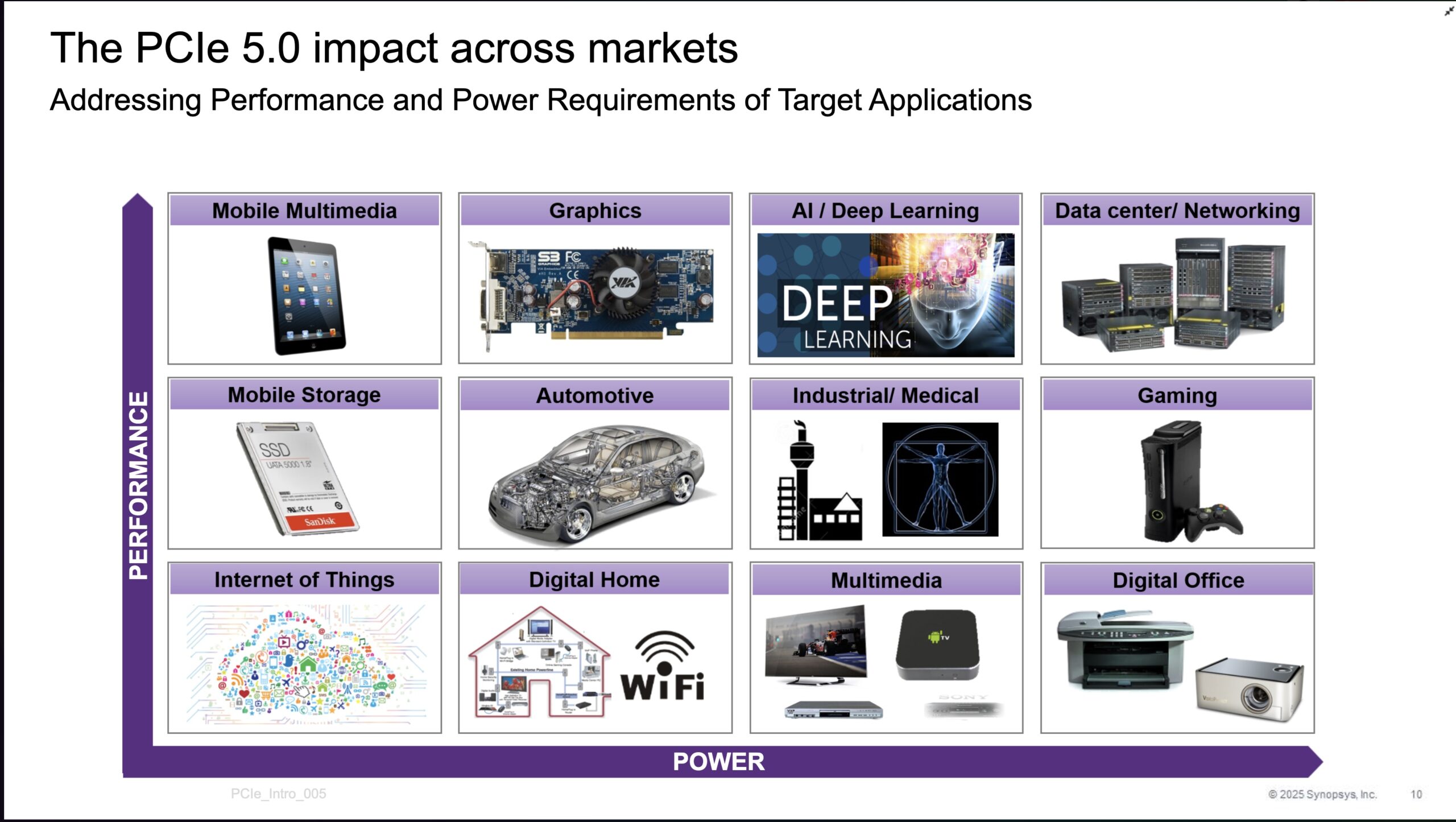

At 32 GT/s per lane, PCIe 5.0 doubles the bandwidth of PCIe 4.0 (16 GT/s) while remaining backward-compatible with previous generations. Its maturity and interoperability make it a dependable choice for designers navigating complex, high-performance systems. PCIe 5.0 serves a diverse range of applications, from high-performance computing and data centers to mobile multimedia, consumer devices, and automotive Edge AI.
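The doubling is easy to check with a little arithmetic. PCIe 4.0 and PCIe 5.0 both use 128b/130b line encoding, so the raw bandwidth per lane in each direction is the transfer rate multiplied by 128/130 and divided by 8 bits per byte. This sketch deliberately ignores packet and flow-control overhead (TLP headers, DLLPs), so achievable payload throughput in a real system is somewhat lower.

```python
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding (used by PCIe 3.0 through 5.0)

def lane_bandwidth_gbytes(gt_per_s: float) -> float:
    """Raw per-lane bandwidth in GB/s per direction after line encoding.

    Ignores TLP/DLLP protocol overhead, so real payload throughput is lower.
    """
    return gt_per_s * ENCODING_EFFICIENCY / 8

def link_bandwidth_gbytes(gt_per_s: float, lanes: int) -> float:
    """Aggregate raw bandwidth in GB/s per direction for a link with `lanes` lanes."""
    return lane_bandwidth_gbytes(gt_per_s) * lanes

for gen, rate in (("PCIe 4.0", 16.0), ("PCIe 5.0", 32.0)):
    per_lane = lane_bandwidth_gbytes(rate)
    x16 = link_bandwidth_gbytes(rate, 16)
    print(f"{gen}: {rate:.0f} GT/s -> {per_lane:.2f} GB/s per lane, "
          f"{x16:.1f} GB/s for x16 (each direction)")
```

At 32 GT/s this gives roughly 3.94 GB/s per lane per direction, or about 63 GB/s for an x16 link, twice the corresponding PCIe 4.0 figures.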

For automotive systems, latency-sensitive workloads demand PCIe 5.0’s high throughput, while consumer electronics often prioritize minimizing footprint and power. In data centers and HPC environments, designers focus on maximizing bandwidth and efficiency. PCIe 5.0 provides the flexibility to achieve the optimal tradeoff in each case, making it a practical, future-ready solution.

Design and Low-Power Techniques

One of the key themes of the webinar was low-power design, essential for both edge devices and energy-efficient HPC systems. PCIe 5.0 includes power states like P1.2, which reduces energy usage while maintaining responsiveness, and P1.2PG, which uses dynamic power gating to further minimize consumption, albeit with slightly longer transitions to active operation.
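The choice between these states can be framed as a simple policy: enter the deeper, power-gated state only when the expected idle period is long enough to pay back its higher transition cost, and only if its slower exit still fits the application’s wake-up budget. The sketch below is a hypothetical policy written for illustration, not Synopsys’s implementation or anything defined by the PCIe specification. The state names come from the webinar, but every power, latency, and energy number is a made-up placeholder, not a datasheet figure.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PowerState:
    name: str
    idle_power_mw: float    # power drawn while parked in this state
    exit_latency_us: float  # time needed to return to active operation
    exit_energy_nj: float   # extra energy spent on the entry/exit transition

# HYPOTHETICAL placeholder values for illustration only; not from any PHY datasheet.
STATES = [
    PowerState("P0 (stay active)", idle_power_mw=100.0, exit_latency_us=0.0, exit_energy_nj=0.0),
    PowerState("P1.2", idle_power_mw=5.0, exit_latency_us=20.0, exit_energy_nj=2_000.0),
    PowerState("P1.2PG", idle_power_mw=0.5, exit_latency_us=40.0, exit_energy_nj=6_000.0),
]

def idle_energy_nj(state: PowerState, idle_us: float) -> float:
    """Energy (nJ) spent over an idle window: residency power plus transition cost."""
    return state.idle_power_mw * idle_us + state.exit_energy_nj  # mW * us = nJ

def pick_state(idle_us: float, wake_budget_us: float) -> PowerState:
    """Lowest-energy state whose exit latency fits within the wake-up budget."""
    allowed = [s for s in STATES if s.exit_latency_us <= wake_budget_us]
    return min(allowed, key=lambda s: idle_energy_nj(s, idle_us))

# (expected idle time in us, wake-up latency budget in us)
for idle_us, budget_us in [(10, 100), (500, 100), (1_000, 100), (1_000, 30)]:
    print(f"idle={idle_us} us, budget={budget_us} us -> {pick_state(idle_us, budget_us).name}")
```

With these placeholder numbers, short idle windows stay active, medium windows favor P1.2, and long windows favor P1.2PG unless the wake-up budget rules out its slower exit.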

Channel length also influences performance. Shorter chip-to-chip and card-to-card channels reduce latency and improve signal integrity, enabling devices to fully exploit PCIe 5.0’s high-speed capabilities.

Migration from PCIe 4.0 illustrates the flexibility PCIe 5.0 offers: designers can increase bandwidth while keeping area and power nearly constant, or reduce lane count and beachfront to save area and energy without sacrificing throughput. These options let PCIe 5.0 meet the widely varying requirements of modern AI and computing workloads.
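The two migration options can be made concrete with lane arithmetic. Because a PCIe 5.0 lane carries twice the raw bandwidth of a PCIe 4.0 lane, a design can either keep its lane count and double throughput, or halve its lanes and keep throughput. The sketch below works through both options for a hypothetical PCIe 4.0 x8 starting point. It counts only raw post-encoding bandwidth and says nothing about the actual area or power of any particular PHY; the helper names are illustrative.

```python
import math

ENCODING_EFFICIENCY = 128 / 130  # 128b/130b, shared by PCIe 4.0 and 5.0
RATE_GT_S = {"PCIe 4.0": 16.0, "PCIe 5.0": 32.0}
VALID_WIDTHS = (1, 2, 4, 8, 16)  # link widths considered in this sketch

def link_gbytes(gen: str, lanes: int) -> float:
    """Raw bandwidth per direction in GB/s, ignoring protocol overhead."""
    return RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8 * lanes

def min_lanes(gen: str, target_gbytes: float) -> int:
    """Smallest link width (from VALID_WIDTHS) on `gen` that meets `target_gbytes`."""
    per_lane = link_gbytes(gen, 1)
    needed = math.ceil(target_gbytes / per_lane - 1e-9)  # small tolerance for float rounding
    for width in VALID_WIDTHS:
        if width >= needed:
            return width
    raise ValueError("target exceeds an x16 link")

baseline = link_gbytes("PCIe 4.0", 8)
print(f"Baseline PCIe 4.0 x8: {baseline:.2f} GB/s")
print(f"Option A, PCIe 5.0 x8 (more bandwidth): {link_gbytes('PCIe 5.0', 8):.2f} GB/s")
lanes = min_lanes("PCIe 5.0", baseline)
print(f"Option B, PCIe 5.0 x{lanes} (fewer lanes): {link_gbytes('PCIe 5.0', lanes):.2f} GB/s")
```

Option A doubles bandwidth on the same eight lanes; option B matches the x8 baseline with four lanes, which is where the area and beachfront savings come from.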

Integration Considerations for Edge SoCs

Incorporating PCIe 5.0 into edge SoCs requires careful planning around cost, time-to-market, reliability, and readiness. The webinar highlighted how integration strategies, alongside careful design techniques, allow PCIe 5.0 to support demanding workloads efficiently. By optimizing lane configuration, channel length, and power management, designers can create systems that balance high bandwidth, low latency, and power efficiency, tailored to specific application domains.

Adoption and Real-World Use

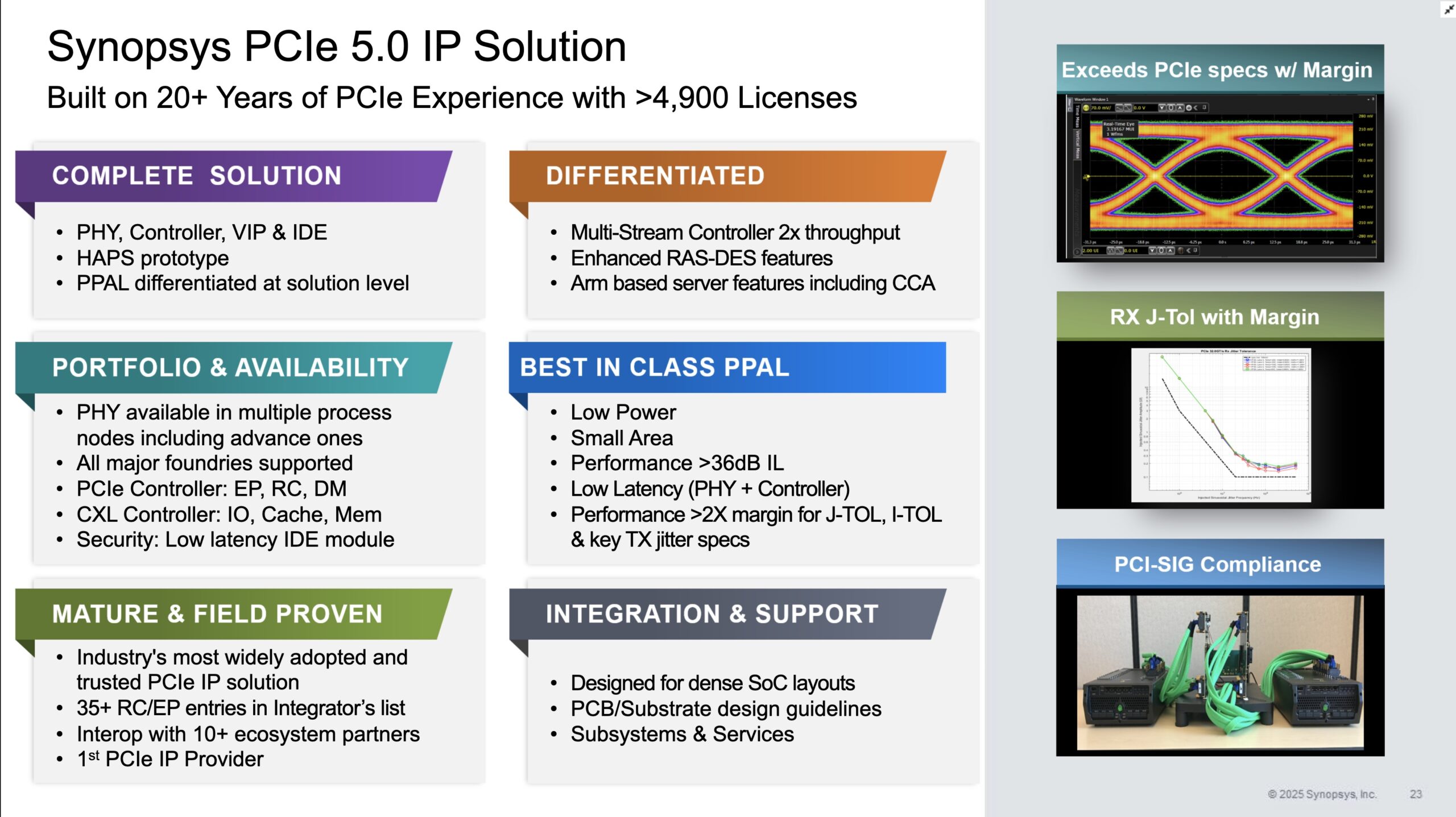

PCIe 5.0 adoption has progressed steadily. The automotive market, in particular, has ramped up faster than others, driven by latency-critical AI workloads and strict reliability requirements. By the end of 2022, PCIe 5.0 was widely deployed in automotive applications. In high-performance computing, standard PCIe 5.0 continues to deliver maximum throughput, while low-power, short-reach variants are increasingly common in edge and embedded devices. Production-proven IP solutions, such as those from Synopsys, have demonstrated broad interoperability and first-pass silicon success, a sign that PCIe 5.0 is both mature and ready for next-generation AI applications.

Audience Questions: Key Insights

The Q&A session addressed several questions that clarified PCIe 5.0’s ongoing relevance. When asked why not skip directly to PCIe 6.0, the answer was clear: PCIe 6.0 requires major changes to PHYs and controllers, which increase area and power significantly, while PCIe 5.0 is already sufficient for the vast majority of use cases. Tradeoff decisions, whether to increase bandwidth while keeping area constant or to reduce area while maintaining bandwidth, depend entirely on the application. Standard PCIe 5.0 supports HPC workloads, while low-power, short-reach variants are increasingly deployed for edge and embedded systems.

Summary

PCIe 5.0 may have been released quite a few years ago, but it remains a critical enabler for Edge AI and high-performance applications. Its combination of maturity, interoperability, high bandwidth, and flexible design tradeoffs makes it a practical choice across markets, from automotive to consumer electronics and data centers. Far from being “old technology,” PCIe 5.0 allows designers to deliver high performance where it matters, while balancing power and area efficiently. For Edge AI, HPC, and embedded applications alike, PCIe 5.0 continues to be a versatile and reliable solution, helping to drive the next cycle of innovation.

To listen to the webinar, visit here.

To learn more about Synopsys PCIe IP Solutions, click here.
