On my last graphics chip design at Intel, the project manager asked me, “So, will this new chip work when silicon comes back?”

My response was, “Yes, but only the parts that we have been able to simulate.”

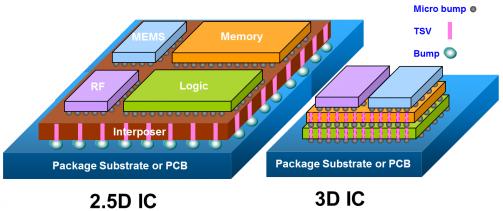

Today, designers of semiconductor IP and SoCs have more than just simulation to ensure that their next design will work in silicon. Formal analysis is an increasingly popular part of functional verification.

DVCon 2012

I received notice that DVCon 2012 is coming up in March and saw a tutorial session called “Using ‘Apps’ to Take Formal Analysis Mainstream.” I wanted to learn more about the tutorial, so I contacted the organizer, Joe Hupcey III of Cadence, and talked with him by phone.

Joe Hupcey III, Cadence

Q: What is an app?

A: An app is a well-documented capability or feature that solves a difficult, discrete problem. An app has to be more efficient to use than the alternative (much as formal can be more efficient than a simulation test bench alone), and it has to be easy enough to use without a PhD in formal analysis.

Q: Who should attend this tutorial?

A: Design and verification engineers who want to quickly and easily take advantage of the exhaustive verification power that formal and assertion-based verification have to offer. Little coaching and documentation is needed to get up to speed. Formal experts can also benefit: those who want to branch out and make all their colleagues more productive. Plus, because the apps tie into the Metric-Driven Verification flow, the contribution made by formal can be mapped to simulation terms.

Q: Does it matter if my HDL is Verilog, VHDL, SystemVerilog or SystemC?

A: All of these languages benefit from formal. Both PSL and SystemVerilog Assertions are discussed and used.

Q: What are the benefits of attending this tutorial?

A: Everyone on the design and verification team gets some value out of formal tools and methodology. We’ll be showing five or six apps that are available for use today. As I noted above, the “apps” approach starts with hard problems where formal, or formal and simulation together, are more efficient than simulation alone, and then structures a solution that’s laser-focused on the problem. There are quite a few apps available today, so if you are a Cadence customer, this tutorial will help you get the most out of the licenses you already have.

…plus, we are hoping to include a bonus guest speaker from a worldwide semiconductor maker who will talk about the app he created for a current project. (The engineer is working with his management to get approval now.)

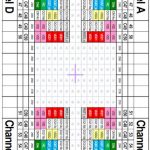

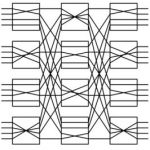

Our lead example is the SoC connectivity app: we show how to validate connectivity throughout the entire SoC, including BIST and low-power mode controls. You could create a test bench and simulate to verify that connectivity is correct, but you couldn’t exhaustively test all combinations. The SoC Connectivity app accepts the connectivity specification as an Excel spreadsheet and turns it into assertions; the formal tool then verifies that the assertions hold for all cases, or finds counterexamples where the design fails. This takes only hours to run, not the weeks that simulation would take. It is also part of the larger Cadence flow: assertion-driven simulation, where formal results are fed into the coverage profile to help improve test metrics, is fairly unique to Cadence.
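The spreadsheet-to-assertion step described above can be sketched in a few lines. This is a minimal illustration, not the Cadence app’s actual input format or output: it assumes the spreadsheet has been exported to CSV with hypothetical columns `source`, `destination`, and an optional `condition` (for example, a BIST or low-power mode enable), and it assumes a clock named `clk`. Each row becomes one SystemVerilog concurrent assertion that a formal tool could then try to prove or refute.

```python
import csv
import io
import re
from typing import List


def row_to_assertion(row: dict, clock: str = "clk") -> str:
    """Turn one connectivity row into a SystemVerilog assertion string.

    Assumes hypothetical columns 'source', 'destination' and an optional
    'condition' under which the connection must hold.
    """
    src = row["source"].strip()
    dst = row["destination"].strip()
    cond = (row.get("condition") or "").strip()
    # Build a legal SVA label from the hierarchical signal names.
    label = "conn_" + re.sub(r"\W", "_", f"{src}__{dst}")
    check = f"({dst} == {src})"
    # With a condition, the connection only has to hold while it is true.
    body = f"({cond}) |-> {check}" if cond else check
    return f"{label}: assert property (@(posedge {clock}) {body});"


def spreadsheet_to_assertions(csv_text: str, clock: str = "clk") -> List[str]:
    """Convert every row of a CSV-exported connectivity table."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row_to_assertion(row, clock) for row in reader]


if __name__ == "__main__":
    # Hypothetical connectivity table; signal names are illustrative only.
    table = (
        "source,destination,condition\n"
        "u_cpu.irq_out,u_intc.irq_in[3],\n"
        "u_bist.scan_out,u_pad.tdo,bist_mode\n"
    )
    for line in spreadsheet_to_assertions(table):
        print(line)
```

The second row, for example, produces an implication guarded by `bist_mode`, so the formal tool only has to prove that connection while that mode is active. A production tool would likely need more than this (for example, connections that pass through registers), which the sketch does not attempt.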

Q: Why should my boss spend the $75?

A: Because these apps can save you design and verification time compared with running simulation alone. Case studies are used to show measured improvements. You can leave the tutorial, go back to work, and start using the formal approaches. The main presenters are experts in each area:

Christopher Komar – Formal Solutions Architect at Cadence Design Systems, Inc.

Dr. Yunshan Zhu – President and CEO, NextOp Software. NextOp has an assertion synthesis tool that reads the test bench and the RTL for the DUT and then creates high-quality assertions rather than a ton of redundant ones. BugScope will be shown along with case studies.

Vigyan Singhal – CEO of Oski Technology, which makes formal apps for both design and verification engineers. He will talk about assertion-based IP.

Source: Oski Technology

Summary

To learn more about formal analysis applied to IP and SoC design, consider attending the half-day tutorial at DVCon on March 1 in San Jose. You’ll hear from people at three different companies: Cadence, NextOp Software, and Oski Technology.

For just $75, you receive the slides on a USB drive, plus coffee and lunch.