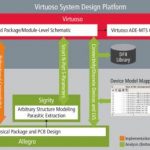

I recently wrote an article on SemiWiki about the integrated Electronic/Photonic Design Automation (EPDA) flow being developed by Cadence Design Systems, Lumerical Solutions and PhoeniX Software, and about how that flow is now expanding into the system level through SiP (system in package) techniques.

Until recently, all demos were done using a theoretical PDK. That changed last week (May 24th, 2017), when Cadence, Lumerical and PhoeniX presented a demonstration of the EPDA flow using a real foundry PDK. The PDK came from AIM Photonics, and the demonstration was given at the AIM Proposers Meeting in Rochester, NY. This is a key milestone for Cadence, Lumerical and PhoeniX Software, as it is the first public demo of the EPDA tool flow with a real foundry PDK.

Other production PDKs are also in the works for the flow, but it’s too early to drop names just yet. Suffice it to say that momentum continues to grow. Cadence’s partnerships with Lumerical for circuit-level photonic simulation and with PhoeniX Software for photonic physical design give it a significant jump start toward bringing PDK support online for the full EPDA flow, as both Lumerical and PhoeniX Software already have extensive PDK support from existing photonics foundries. With only a modest amount of effort, these existing PDKs are now being synced up and used to populate PDKs for the entire EPDA flow.

If you haven’t seen the EPDA flow yet, it will be presented at this year’s Design Automation Conference in a presentation entitled “Capture the Light. An Integrated Photonics Design Solution from Cadence, Lumerical and PhoeniX Software”. The presentation will be given in the DAC Cadence Theater at 10:00 a.m. on Tuesday, June 20th.

The three companies will also be engaging with more engineers at a five-day class entitled ‘Fundamentals of Integrated Photonics Principles, Practice and Applications’. This class is being put on by the AIM Photonics Academy and will take place the last week of July on the MIT campus in Cambridge, MA.

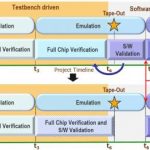

There are also multiple customer engagements underway for the EPDA flow. Again, it’s too early to release those customers’ names. It is, however, these same customers who are now pushing the trio to work on the advanced system-level flow that was alluded to in my last SemiWiki article (see link below).

As part of this effort, Cadence, Lumerical and PhoeniX Software are also planning to host a second photonics summit in early September. Like the 2016 photonics summit, this will be a two-day event hosted at the Cadence campus in San Jose. The first day will focus on technical presentations discussing challenges and progress toward implementing integrated photonics systems. The second day, like last year, will be a hands-on session highlighting progress made toward extending the existing EPDA flow to integrate the full system (electronics, lasers, and photonics) into a common package. Watch for details on how to register for the summit in the coming weeks.

It’s still early days for integrated photonics, but capabilities are rapidly being put into place. If it’s time for you to come up to speed on integrated photonics, I would encourage you to attend one or more of these upcoming events.

See Also: