Having started my career during the minicomputer revolution, I have seen an incredible journey: from computers that consumed entire rooms, to the desktop, and now to supercomputers in our pockets. It makes me chuckle when I hear people complaining about their smartphones when they should be jumping up and down saying, “I have a supercomputer, I have a supercomputer!”

Our book, “Mobile Unleashed”, documents how computing went from desktops to our hands and wrists through the history of ARM and their work with Apple, Qualcomm, and Samsung. As Sir Robin Saxby wrote in the book foreword:

“If you’re not making any mistakes, you’re not trying hard enough. I’ve been quoted as saying that in an interview some years ago and when you find yourself defining a new industry, as we did, you try very hard and you make a lot of mistakes. The key is to make the mistakes only once, learn from them and move on.”

ARM certainly made mistakes, mistakes that the next computing revolutionaries have learned from, which brings us to RISC-V and SiFive.

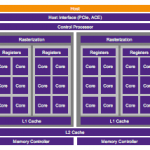

RISC-V (pronounced “risk-five”) is a new instruction set architecture (ISA) that was originally designed to support computer architecture research and education and is now set to become a standard open architecture for industry implementations under the governance of the RISC-V Foundation. The RISC-V ISA was originally developed in the Computer Science Division of the EECS Department at the University of California, Berkeley.

Going up against a monopoly like ARM is not an easy task, but knowing what I know today, I would do it exactly like RISC-V and SiFive: start at the academic level, open source it (Linux versus Microsoft), and move up from there. I’m convinced that some of “the next big things” will come from young minds, so open source and universities are the places to be.

SiFive is the first fabless provider of customized semiconductors based on the free and open RISC-V instruction set architecture. Founded by RISC-V inventors Krste Asanovic, Yunsup Lee and Andrew Waterman, SiFive democratizes access to custom silicon by helping system designers reduce time-to-market and realize cost savings with customized RISC-V based semiconductors.

We started following SiFive last year and published two articles that are worth reading:

RISC-V opens for business with SiFive Freedom

SiFive execs share ideas on their RISC-V strategy

This week SiFive announced a new CEO, Dr. Naveed Sherwani, to take RISC-V to the next level. I was able to chat with Naveed right before the announcement. It is interesting to note that Naveed is an academic with EDA experience (he wrote an EDA textbook, Algorithms for VLSI Physical Design Automation), semiconductor experience (he started the Microelectronics Services Business at Intel and co-founded Brite Semiconductor), and ASIC experience (he was co-founder and CEO of Open Silicon). So who better to lead RISC-V into the design enablement phase of business?

When you talk to Naveed, you will discover all of this experience bundled into a very high-energy and passionate leader. The first question I asked, of course, was, “Why did you join SiFive?” The answer was, “Passion for computer architecture, the impressive list of RISC-V members, the academic connection, the country connection (India, for example, has adopted RISC-V rather than paying billions of dollars to ARM), and the global connection (open source spurs innovation all over the world to benefit mankind).”

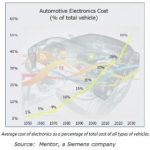

The second question I asked was, “How will they go from the ‘Not ARM’ market to the ‘Better than ARM’ market?” The answer was cost, power, and performance.

The third question I asked was, “How will SiFive make money?” The answer was, “Think Red Hat for semiconductor IP”:

We make buying Coreplex IP easy and hassle-free.

- Choose and customize your Coreplex IP, with open pricing

- Fill out your ordering information

- We’ll electronically send you our straightforward, 7-page license agreement

- It’s that simple

- No long sales calls

- No restrictive NDAs

- No opaque pricing

- Just customize, order, and sign, and you’ll have your IP in several business days

- All of SiFive’s Coreplex IP comes with 1 year of commercial support

According to a recent study by ARM, more than one trillion IoT devices will be built between 2017 and 2035. If ARM is challenged on both technology and price, I would expect that number to be significantly higher, absolutely.