For those of you who missed the NetSpeed Systems and Imagination Technologies webinar, “Alexa, can you help me build a better SoC”, you’ll be happy to hear that the session was recorded and can still be viewed (see link at the bottom of this page). I’ll warn you now, however, that this was a high-bandwidth session packed with information, so much so that I had to listen through it several times to absorb everything. Here’s a condensed version for those looking for the salient points.

SoCs for autonomous automotive applications are some of the most complex ICs designed on the planet. These designs must fuse data from multiple sensors (RADAR, LIDAR, ultrasonic, video, cameras) in real time, while also dealing with ultra-high functional safety and security requirements. Real-time processing of multiple different data streams implies a heterogeneous mixture of CPUs, DSPs, GPUs, ISPs, and dedicated specialty hardware accelerators, all communicating with each other over a sophisticated network-on-chip (NoC) capable of handling coherent memory and coherent I/O access.

NetSpeed Systems and Imagination Technologies partnered to deliver a next-generation autonomous automotive platform that is now used by three of the top four auto-pilot players. NetSpeed Systems delivers the NoC while Imagination Technologies delivers the MIPS I6500-F processor architecture. Their platform enables designers to build a scalable, heterogeneous SoC, using AI software techniques to synthesize optimal hardware while trading off power, performance and area (PPA) against functional safety (FuSa).

Some key items used in this collaboration include:

- NetSpeed’s GEMINI Coherent NoC, certified ASIL-D ready per the ISO 26262 standard.

- NetSpeed’s NocStudio development environment, with machine-learning-based interconnect synthesis that uses NetSpeed’s CRUX (streaming interconnect transport), ORION (AMBA and OCP bridging), GEMINI (NoC coherent cache and I/O management) and PEGASUS (NoC L2, L1, and LLC cache support) template libraries.

- Imagination’s MIPS I6500-F variant of the MIPS I6500 CPU, certified ASIL-B(D) for automotive and IEC 61508 ready for industrial control applications, as a Safety Element out of Context (SEooC).

- Enhanced heterogeneity, using the MIPS IOCU ports and dedicated CPU threads to enable low-latency paths through the L2 cache between hardware accelerators and the CPU.

- FuSa capabilities of the MIPS I6500-F, including ECC across memories, redundant logic and parity protection, time-outs, and logic built-in self-test (LBIST), which checks the hardware both at boot-up and during processing cycles when the CPUs are not busy.

- FuSa capabilities of the NetSpeed NoC synthesis process including path redundancy and guaranteed fail-safe deadlock-free solutions.
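To make “path redundancy” a little more concrete, here is a minimal sketch, assuming a small 2-D mesh of routers and treating two routes as redundant when they share no link. The webinar does not describe NetSpeed’s actual topologies or synthesis algorithm; the helper names `mesh_links`, `shortest_path` and `redundant_pair` are hypothetical.

```python
from collections import deque

def mesh_links(width, height):
    """Adjacency list of a width x height 2-D mesh; routers are (x, y) tuples."""
    links = {}
    for x in range(width):
        for y in range(height):
            nbrs = []
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < width and 0 <= ny < height:
                    nbrs.append((nx, ny))
            links[(x, y)] = nbrs
    return links

def shortest_path(links, src, dst, banned=frozenset()):
    """BFS shortest route that avoids every link in `banned`.

    Links are stored as frozensets of their two end routers, so the
    direction of travel does not matter.
    """
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nbr in links[node]:
            if nbr not in prev and frozenset((node, nbr)) not in banned:
                prev[nbr] = node
                queue.append(nbr)
    return None

def redundant_pair(links, src, dst):
    """Greedy: pick a primary route, ban its links, then look for a backup.

    Returns (primary, backup) when a link-disjoint backup exists, else None.
    """
    primary = shortest_path(links, src, dst)
    if primary is None:
        return None
    used = frozenset(frozenset(hop) for hop in zip(primary, primary[1:]))
    backup = shortest_path(links, src, dst, banned=used)
    return (primary, backup) if backup else None

pair = redundant_pair(mesh_links(3, 3), (0, 0), (2, 2))
print(pair)
print(redundant_pair(mesh_links(3, 1), (0, 0), (2, 0)))  # None: a straight chain has no backup route
```

The greedy remove-and-retry approach can miss a backup that exists, because the primary route it picks first may block the only alternative; a disjoint-paths method such as Suurballe’s algorithm avoids that. The sketch is only meant to show the property being checked.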

What makes this joint solution so fascinating is that it is scalable and programmable, enabling designers to truly customize a SoC to meet their specific requirements. NetSpeed’s NoC can manage up to 64 cache-coherent clusters and 250 I/O-coherent IPs, and it is compatible with the popular ACE, CHI, AXI, AHB, APB and OCP protocols. Similarly, the MIPS I6500 architecture supports cache-coherent arrays of clusters built from multi-threaded CPU cores.

Added to this, the design environment (per the title of the webinar) uses artificial intelligence (AI) techniques to help designers make intelligent PPA/FuSa trade-offs. Once the design has been iterated to meet those trade-offs, NetSpeed’s NocStudio software synthesizes the resulting architecture into RTL code.

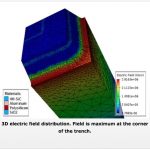

A nice feature of the joint solution is that NocStudio can simulate system data traffic and the interactions between the processing units, the coherent cache and the coherent I/Os. In so doing, NocStudio can score both PPA and FuSa results on a path-by-path basis. Additionally, each NoC path from master to slave is user-configurable in terms of its power, performance (latency and quality of service, or QoS), area and functional safety requirements. NocStudio considers the requirements of each master-slave path along with higher-level constraints such as the expected data traffic on various paths, the physical locations of master-slave paths on the IC, the number of competing paths in the same area and the priority of the paths with respect to desired network redundancy. Based on the specifications for each path, NocStudio’s AI algorithms synthesize and optimize a NoC that meets the requested constraints.
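The per-path model described above can be sketched as plain data plus a check function. This is a minimal illustration, assuming just three constraints per path (a latency budget, a bandwidth floor and a redundancy requirement); the field names, units and the `PathSpec`, `PathResult`, `check_path` and `score_paths` names are hypothetical and do not reflect NocStudio’s input format or its AI-driven optimizer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PathSpec:
    """Hypothetical requirements for one master-slave route."""
    master: str
    slave: str
    max_latency_cycles: int    # performance: worst-case latency budget
    min_bandwidth_gbps: float  # performance: sustained throughput needed
    needs_redundancy: bool     # FuSa: route must have a backup path

@dataclass(frozen=True)
class PathResult:
    """Hypothetical metrics a traffic simulation might report for one route."""
    latency_cycles: int
    bandwidth_gbps: float
    has_redundant_route: bool

def check_path(spec: PathSpec, result: PathResult) -> list[str]:
    """Return the name of every constraint this path violates."""
    violations = []
    if result.latency_cycles > spec.max_latency_cycles:
        violations.append("latency")
    if result.bandwidth_gbps < spec.min_bandwidth_gbps:
        violations.append("bandwidth")
    if spec.needs_redundancy and not result.has_redundant_route:
        violations.append("redundancy")
    return violations

def score_paths(specs, results):
    """Score every path independently, keyed by (master, slave)."""
    return {
        (s.master, s.slave): check_path(s, results[(s.master, s.slave)])
        for s in specs
    }

specs = [
    PathSpec("cpu0", "ddr", max_latency_cycles=40, min_bandwidth_gbps=8.0, needs_redundancy=True),
    PathSpec("isp", "ddr", max_latency_cycles=120, min_bandwidth_gbps=12.0, needs_redundancy=False),
]
results = {
    ("cpu0", "ddr"): PathResult(latency_cycles=35, bandwidth_gbps=9.5, has_redundant_route=False),
    ("isp", "ddr"): PathResult(latency_cycles=90, bandwidth_gbps=12.5, has_redundant_route=False),
}
report = score_paths(specs, results)
print(report)  # {('cpu0', 'ddr'): ['redundancy'], ('isp', 'ddr'): []}
```

Scoring each path on its own means a violation points straight at the master-slave route that needs rework, rather than at the NoC as a whole.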

Many different FuSa trade-offs can be made with NocStudio for each master-slave path. Examples of this include end-to-end parity checks, ECC checks and checksums for packets as well as hop-to-hop checks and the synthesis of redundant paths to compensate for paths that may develop errors. Because NetSpeed Systems and Imagination Technologies have partnered on the solution, the NocStudio environment can also dovetail the parity checks applied by the MIPS I6500-F processor with the checks being done by the NoC, leading to system-level coverage for all components controlled by the MIPS processor.
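The difference between hop-to-hop and end-to-end checks can be illustrated with a small sketch. It assumes per-byte parity on each link and a CRC-32 (standing in for the “checksums for packets” mentioned above) computed once at the source; the function names are hypothetical, and this is not NetSpeed’s actual encoding.

```python
import zlib

def parity_bit(byte: int) -> int:
    """Even-parity bit: 1 when the byte has an odd number of set bits,
    so data plus parity always carries an even count of ones."""
    return bin(byte).count("1") & 1

def add_hop_parity(flit: bytes) -> list[tuple[int, int]]:
    """Attach a parity bit to every byte before it crosses one link."""
    return [(b, parity_bit(b)) for b in flit]

def hop_check(protected) -> bool:
    """Hop-to-hop check: the receiving router recomputes each parity bit."""
    return all(parity_bit(b) == p for b, p in protected)

def make_packet(payload: bytes) -> tuple[bytes, int]:
    """End-to-end protection: the source appends a CRC-32 of the payload."""
    return payload, zlib.crc32(payload)

def end_to_end_check(payload: bytes, crc: int) -> bool:
    """The destination recomputes the CRC-32 and compares."""
    return zlib.crc32(payload) == crc

data, crc = make_packet(b"\x12\x34\x56\x78")

# A bit flipped on a link is caught by that link's parity check.
link = add_hop_parity(data)
link[0] = (link[0][0] ^ 0x01, link[0][1])
print(hop_check(link))  # False

# A bit flipped inside a router, after parity is checked and before it is
# regenerated for the next hop, passes the hop checks but not the CRC.
corrupted = bytes([data[0] ^ 0x80]) + data[1:]
print(hop_check(add_hop_parity(corrupted)))  # True
print(end_to_end_check(corrupted, crc))      # False
```

This is why the two kinds of check complement each other: hop-to-hop parity localizes link faults quickly, while the end-to-end checksum also covers corruption that occurs inside the routers themselves.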

The solution platform generates a plethora of data files and information that can then be used to implement the SoC, including:

- Synthesizable RTL

- Verification checkers, monitors and scoreboards

- Files to aid physical design (block placements in DEF, timing constraints in SDC, clock skew, and physical design scripts to run place and route)

- SoC integration files such as IP-XACT, CPF/UPF, and an architectural manual

- FuSa documents including FMEDA and a Safety manual that can be used for ISO 26262 certification.

The most impressive part of this solution is that it is silicon-proven and in use today by several leading autonomous automotive IC providers, with successful implementations such as the one done by Mobileye (see SemiWiki article).

To dive deeper into what NetSpeed and Imagination Technologies have to offer in this space you can watch the full webinar at this link.

See Also:

NetSpeed Systems

Imagination Technologies