At Intel back in the late 1970s we wanted to know what process corner each DRAM chip and wafer was trending at, so we included a handful of test transistors in the scribe lines between the active die. Having test transistors meant that we could do a quick electrical test at wafer probe time to measure the P and N channel transistor characteristics, providing valuable insight into the specific processing corner. We would’ve loved to have this kind of test transistor data embedded into each die as well.

The increase in transistor count for DRAM chips has been quite dramatic over the years: in 1978 we had 16Kb DRAM capacity while today the technology has reached 16Gb, an increase of roughly 1,000,000X. On the SoC side we see an equally impressive increase, such that a GPU from NVIDIA now contains 21 billion transistors and 5,120 cores using a 12nm process from TSMC. So whether you are designing a CPU, GPU, SoC or a chip for IoT applications, you need to understand how process variability impacts each die and packaged part during operation. Other design concerns include:

- Manufacturability and yield

- Timing, clock speed, power values within spec

- Reliability effects like aging and internal voltage drop

- Avoiding field failures

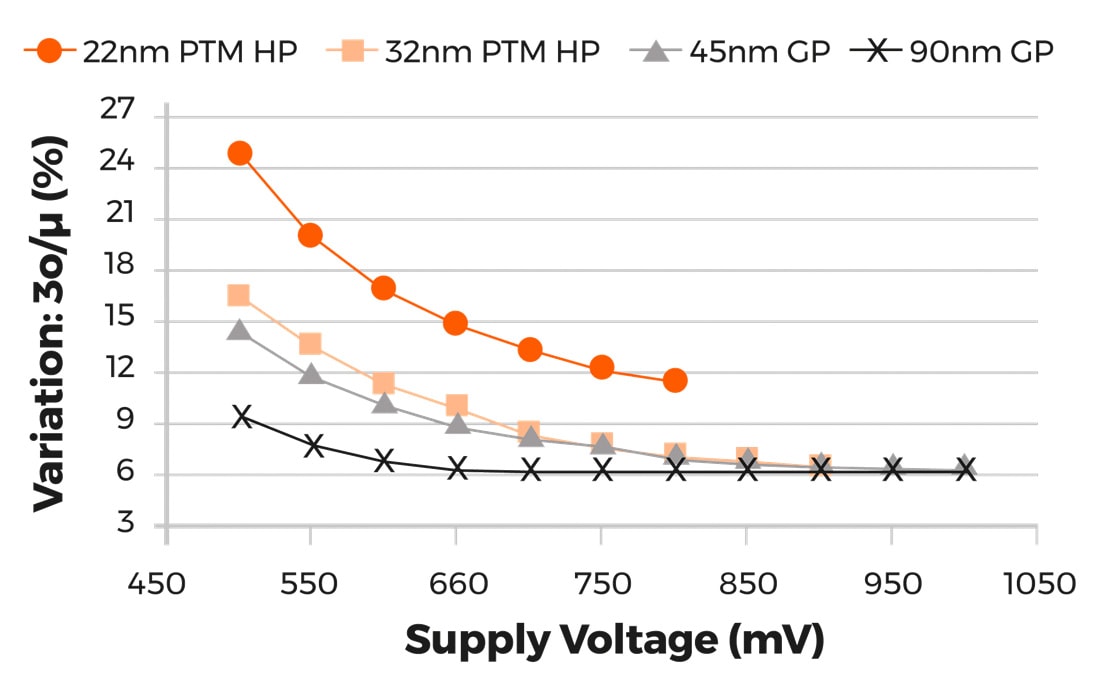

Moore’s Law held up pretty well down to the 28nm node, and below that node the price learning curve hasn’t been as rewarding. Even clock speeds have stalled in the GHz range. Short-channel effects started to hurt leakage current values, limiting battery life and performance, so new transistor approaches emerged like FinFET and SOI. Device variability is a dominant design issue today, meaning that even adjacent transistors on the same die can have different Vt (threshold voltage) values, caused by dopant variations or by silicon stress from proximity to isolation wells (layout-dependent effects). Just take a look at how variations have increased with each smaller geometry node from 90nm down to 22nm:

Switching speed delay variations against supply voltage across process nodes (Source: Moortec)

For a 22nm process node with a 475mV supply level you can expect switching speed delay variations of 25%, while at the more mature process node of 90nm the delay variations are only 9%.

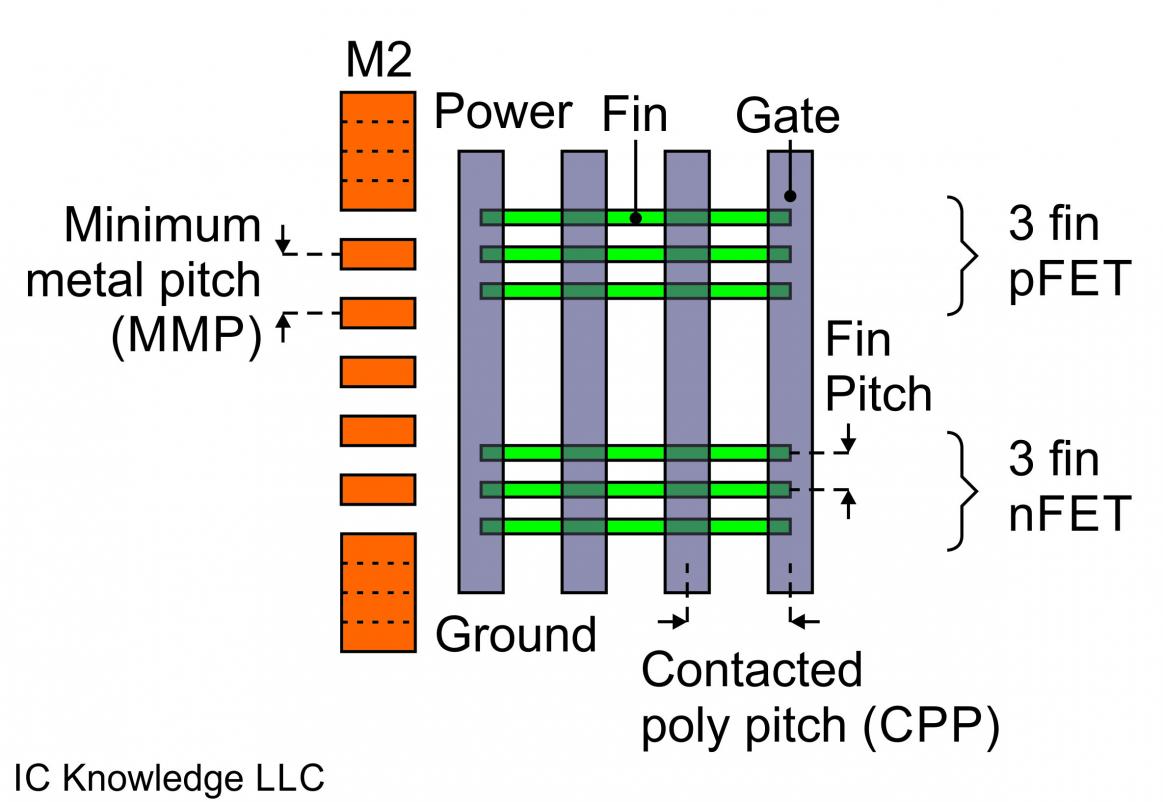

With FinFET technology in use since 22nm there are some new concerns caused by higher current densities like localized heating effects, so designers using 14nm, 10nm, 7nm and 5nm need to be aware of self-heating because it impacts the aging of transistors and the Vt actually begins to shift over time as shown below:

Vth degradation from NBTI and HCI effects (Source: Moortec)

The dark curve above shows a Vdd value of 1.3V being used for transistors, and over a 10 year operating period the nominal Vt value of 0.2V can shift by over 4% due to negative bias temperature instability (NBTI) and hot carrier injection (HCI) effects. That Vt shift can simply slow down your IC or cause it to fail to meet your clock speed specification.
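
To put that percentage in absolute terms, here is a minimal arithmetic sketch using only the figures quoted above (0.2V nominal Vt, a shift of 4%). The function name is made up for illustration, and 4% is treated as a lower bound because the text says "over 4%".

```python
# Figures quoted in the text: nominal Vt of 0.2 V and a shift of "over 4%".
NOMINAL_VT_V = 0.2
SHIFT_FRACTION = 0.04  # treated as a lower bound


def vt_shift_volts(nominal_vt_v: float, shift_fraction: float) -> float:
    """Return the absolute Vt shift in volts for a fractional shift."""
    return nominal_vt_v * shift_fraction


shift_v = vt_shift_volts(NOMINAL_VT_V, SHIFT_FRACTION)
print(f"Vt shift after aging: at least {shift_v * 1e3:.1f} mV")
```

A shift of a few millivolts sounds small, but it comes straight out of whatever timing margin the design had at time zero.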

With lower Vdd values coupled with higher current levels and higher interconnect resistivity, you can expect the internal supply levels of a chip to droop below the values supplied at the pins. Knowing the actual internal Vdd levels can be quite critical.

To meet stringent power consumption requirements many approaches have been taken. A popular technique is called Dynamic Voltage and Frequency Scaling (DVFS), where the chip has the ability to change local Vdd values in order to throttle or speed up the frequency of operation. Reducing the Vdd value quickly reduces power consumption because dynamic power is proportional to the square of the Vdd value, so lowering the Vdd value locally allows you to tune for power consumption. Adding DVFS does increase the logic overhead and requires even more simulation during the design phase. Turning local Vdd lines on causes a rush of current, triggering an IR drop issue which can cause transient errors in the silicon logic behavior.
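
The square-law relationship can be sketched with the standard dynamic power estimate, P = a × C × Vdd² × f. The effective capacitance, activity factor and the three operating points below are illustrative assumptions, not values from any real chip or from Moortec.

```python
def dynamic_power_w(c_eff_f: float, vdd_v: float, freq_hz: float,
                    activity: float = 1.0) -> float:
    """Estimate dynamic switching power: activity * C * Vdd^2 * f."""
    return activity * c_eff_f * vdd_v ** 2 * freq_hz


# Assumed effective switched capacitance (hypothetical value).
C_EFF_F = 1e-9

# Hypothetical DVFS operating points: name -> (Vdd in volts, clock in Hz).
OPERATING_POINTS = {
    "turbo": (1.0, 2.0e9),
    "nominal": (0.8, 1.5e9),
    "low_power": (0.6, 0.8e9),
}

baseline_w = dynamic_power_w(C_EFF_F, *OPERATING_POINTS["turbo"])
for name, (vdd_v, freq_hz) in OPERATING_POINTS.items():
    power_w = dynamic_power_w(C_EFF_F, vdd_v, freq_hz)
    print(f"{name:>9}: {vdd_v:.1f} V, {freq_hz / 1e9:.1f} GHz, "
          f"{power_w:.3f} W ({power_w / baseline_w:.0%} of turbo)")
```

Under these assumptions, dropping Vdd from 1.0V to 0.8V at a fixed clock already cuts dynamic power to 64% of the original, before any frequency reduction is counted.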

IC designers can deal with all of these effects through a couple of approaches. The earliest approach was to design for worst-case conditions, although at the expense of leaving margin on the table. A newer approach is to actually embed in each chip some specialized IP for three tasks:

- Temperature sensing

- Voltage monitoring

- Process monitoring

Knowing your actual PVT (Process, Voltage, Temperature) corner in silicon is incredibly useful for controlling your chip for maximum performance. With an embedded PVT monitor you can quickly perform speed binning without having to run extensive functional, full-chip testing. Aging effects on Vt can also be measured with an embedded PVT methodology.
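
As a rough sketch of how speed binning from a process monitor might look in test software, assume the monitor reading has already been converted to a ratio against nominal transistor speed (1.0 = nominal). The bin edges, bin names and function name are hypothetical and do not describe any vendor's actual interface.

```python
import bisect

# Hypothetical bin boundaries, as a ratio of measured speed to nominal speed.
BIN_EDGES = [0.95, 1.05]
BIN_NAMES = ["slow", "typical", "fast"]


def speed_bin(speed_ratio: float) -> str:
    """Map a process-monitor speed ratio to a speed bin name."""
    # bisect_right counts how many edges are <= speed_ratio, which is
    # exactly the index of the bin the reading falls into.
    return BIN_NAMES[bisect.bisect_right(BIN_EDGES, speed_ratio)]


for ratio in (0.90, 1.00, 1.08):
    print(f"speed ratio {ratio:.2f} -> {speed_bin(ratio)} bin")
```

The point of the sketch is that binning becomes a table lookup on one on-chip measurement instead of a long functional test run.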

Temperature sensors placed at strategic locations on an SoC can dynamically measure how hot each region is, letting the chip decide to alter the Vdd values to keep operating reliably while still meeting timing, even as the chip ages over time. A multi-core SoC with temperature sensors can dynamically assign new instructions to the core with the lowest temperature value, balancing the work load so as to not over-heat any one core with too many sustained operations. The mean time to failure for ICs falls as operating temperature rises, so with embedded PVT you can control the aging effects.
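
The coolest-core policy described above can be sketched in a few lines. This is a simplified illustration: the core ids, temperature readings and the 100°C limit are assumed values, and a real scheduler would weigh many more factors than temperature alone.

```python
from typing import Mapping, Optional


def pick_coolest_core(core_temps_c: Mapping[int, float],
                      max_temp_c: float = 100.0) -> Optional[int]:
    """Return the id of the coolest core below max_temp_c.

    Returns None when every core is at or above the limit, so the caller
    can throttle instead of dispatching more work.
    """
    eligible = {core: temp for core, temp in core_temps_c.items()
                if temp < max_temp_c}
    if not eligible:
        return None
    return min(eligible, key=eligible.get)


# Hypothetical per-core sensor readings in degrees Celsius.
readings_c = {0: 71.5, 1: 64.0, 2: 88.2, 3: 66.3}
print("Dispatch next task to core", pick_coolest_core(readings_c))  # core 1
```

Returning `None` rather than the least-hot core is a deliberate choice here: when everything is over the limit, the safer response is to slow down, not to keep loading the chip.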

Moortec is an IP vendor that has designed PVT monitoring for popular process nodes, and they have internally created the sensors and controllers to interpret the data on-chip. Yes, you have to use a tiny amount of silicon area to implement the PVT monitoring, but the benefits far outweigh the die size impact. The process monitor can tell you the speed of your transistors to let you know how close they are to nominal values. Benefits of using these monitors include:

- Tuning on-chip parameters at product test

- Real-time management of processor cores

- Avoiding localized aging effects

- Maximizing clock performance at a specific voltage and temperature

You can use PVT monitors to measure whether your specific silicon will meet timing goals, or program local Vdd levels to achieve a certain clock speed. It also can make sense to have multiple PVT monitors spread out across a single die in order to collect regional data. For example, you could place a PVT monitor in each corner of a die, then one in the center, in order to measure process variability. For multi-core SoCs you would place PVT monitors in each core, next to critical blocks.
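
For the five-monitor layout mentioned above (four corners plus the center), a minimal sketch of turning regional readings into an across-die variation figure could look like this. The readings are made-up speed ratios relative to nominal, and the function name is hypothetical.

```python
from statistics import mean
from typing import Mapping, Tuple


def regional_spread(readings: Mapping[str, float]) -> Tuple[float, float, str]:
    """Summarize across-die variation from per-region monitor readings.

    Returns (average reading, max-min spread as a percent of the average,
    name of the slowest region).
    """
    values = list(readings.values())
    average = mean(values)
    spread_pct = (max(values) - min(values)) / average * 100.0
    slowest_region = min(readings, key=readings.get)
    return average, spread_pct, slowest_region


# Hypothetical process-monitor readings (1.0 = nominal transistor speed).
die_readings = {
    "top_left": 1.02,
    "top_right": 0.98,
    "bottom_left": 1.01,
    "bottom_right": 0.95,
    "center": 1.00,
}

average, spread_pct, slowest = regional_spread(die_readings)
print(f"average {average:.3f}, spread {spread_pct:.1f}%, slowest: {slowest}")
```

The slowest region is the one that limits the clock, so it is the natural place to raise a local Vdd or to check timing first.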

Engineers at Moortec have designed PVT monitors across a wide range of process nodes starting at 40nm and extending through 7nm, so you don’t need to be a PVT expert to use their IP. You get to consult with their experts about which PVT monitors to use and where to place them on your specific chip design. To really get the most performance out of advanced FinFET nodes you should consider adding PVT monitors into your next design. Even battery-powered IoT designs benefit from the data gathered by PVT monitors, because it helps reduce power consumption.

There’s an 11-page white paper available at Moortec for download after a brief sign-up process.