Poorly handled design validation can easily turn into a debugging exercise. In a mainstream RTL2GDS flow, design implementation involves top-level integration and lower-level block development. The lower-level components, comprising macros, IPs and standard cells, are subject to frequent abstraction captures, as required by the many cross-domain development and analysis tools. As a result, validation without automation is becoming a complex debugging feat.

Checklist

When dealing with numerous design view formats, such as netlist or layout, ambiguity may be present at the interface. Port directionality and labeling are critical. Although some attributes or design entities might later be implicitly defined at the top level (for example, interface signals that become internal nets at the top level), correct assignment is still needed for completeness of the subdesign or of the model’s boundary conditions. For example, a default I/O loading condition or input slew rate may be needed to avoid an unrealistic, ideal scenario.
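A port consistency check of this kind can be sketched in a few lines. The example below is only an illustration: the view names, pin names and the `compare_pins` helper are hypothetical and do not represent Crossfire's data model or API. It assumes each view has already been parsed into a mapping from pin name to direction.

```python
# Hypothetical parsed data: pin name -> direction for each view of one cell.
# None of these names come from a real tool; they are assumptions for illustration.
REFERENCE_VIEW = {"A": "input", "B": "input", "Y": "output", "VDD": "inout"}
OTHER_VIEWS = {
    "layout": {"A": "input", "B": "input", "Y": "output", "VDD": "inout"},
    "timing": {"A": "input", "B": "output", "Y": "output"},
}


def compare_pins(reference, views):
    """Return (view, pin, problem) tuples for every mismatch against the reference."""
    issues = []
    for view_name, pins in sorted(views.items()):
        # Pins the reference defines must exist in the view with the same direction.
        for pin, direction in sorted(reference.items()):
            if pin not in pins:
                issues.append((view_name, pin, "missing"))
            elif pins[pin] != direction:
                issues.append((view_name, pin, f"direction {pins[pin]} != {direction}"))
        # Pins that appear only in the view are also reported.
        for pin in sorted(set(pins) - set(reference)):
            issues.append((view_name, pin, "not in reference"))
    return issues


issues = compare_pins(REFERENCE_VIEW, OTHER_VIEWS)
for view_name, pin, problem in issues:
    print(f"{view_name}: pin {pin}: {problem}")
```

With the sample data, the timing view is flagged twice: pin B has the wrong direction and pin VDD is missing.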

Many approaches are available for capturing a checklist in a design flow. Static-verification-driven processes such as code linting, design rule checks and optimization-constraint checks have been quite effective in shaping design implementation, and they rely on a comprehensive checklist (rule set). Such a checklist is normally aggregated over several project cycles and can be overwhelmingly long as well as complex to automate.

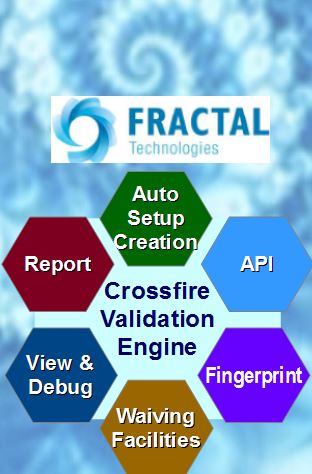

To address this need for validating IP blocks and libraries across data formats, Fractal Technologies provides the Crossfire software. It performs the following two types of checks:

- Implementation completeness of a design entity. For example, it compares each parsed item against its equivalent items from a reference. Such checks are applied to all object types in the Crossfire data model, such as cells, pins, nets, timing arcs and power domains.

- Intrinsic sanity checks of model databases. For example, it uses the various process corners described in library files to check whether delays increase with rising substrate temperature and decrease with increasing supply voltage.
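The delay trend check in the second item can be illustrated with a small sketch. This is not how Crossfire implements it: the corner table, the delay values and the `trend_violations` helper are invented for illustration, and the sketch assumes a strictly monotonic trend is expected between every pair of corners.

```python
# Hypothetical characterization data: (temperature in C, supply in V) -> delay in ns.
# Values are made up; the last entry deliberately breaks the temperature trend.
DELAYS = {
    (-40, 0.9): 0.120, (25, 0.9): 0.135, (125, 0.9): 0.160,
    (-40, 1.1): 0.095, (25, 1.1): 0.110, (125, 1.1): 0.105,
}


def trend_violations(delays):
    """Flag corner pairs where delay does not rise with temperature
    (at fixed voltage) or does not fall with voltage (at fixed temperature)."""
    violations = []
    corners = sorted(delays)
    for (t1, v1) in corners:
        for (t2, v2) in corners:
            # Same voltage, higher temperature: delay should be larger.
            if v1 == v2 and t1 < t2 and delays[(t2, v2)] <= delays[(t1, v1)]:
                violations.append(("temperature", (t1, v1), (t2, v2)))
            # Same temperature, higher voltage: delay should be smaller.
            if t1 == t2 and v1 < v2 and delays[(t2, v2)] >= delays[(t1, v1)]:
                violations.append(("voltage", (t1, v1), (t2, v2)))
    return violations


violations = trend_violations(DELAYS)
for kind, low, high in violations:
    print(f"{kind} trend broken between corners {low} and {high}")
```

Here the only flagged pair is the 1.1 V corner at 25 C versus 125 C, where the delay drops instead of rising.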

In general, there are three usage categories for Crossfire: as a sign-off tool for libraries and hard IP, as a sanity checkpoint during library/IP development, and as a facility for inspecting the quality of incoming libraries and hard IP.

View Formats

Over the last few years, Fractal has enhanced Crossfire validation to expand coverage of the settings and databases used by many well-known third-party implementation and analysis tools. More than 40 formats and databases are now supported.

Bottleneck and Root Cause Analysis

Design rule checks can number in the hundreds or thousands. Multiple occurrences of each can translate into tens of thousands of DRC errors or more, depending on the design size. Similarly, for database consistency checks, finding the root cause of a downstream error is tricky and requires both traceability and an understanding of the data flow.

Fractal Crossfire provides a mechanism for quick traceability through color-coded connected graphs, called fingerprint analysis and visualization (as shown in figure 2). For example, a designer could view the flagged errors and filter down to a cluster of interest through color-aided selection or by way of controlled waivers. This can be done up and down the linked design entities. Errors can also be binned by rule pivot or format pivot.
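Binning by pivot, with waivers applied first, amounts to a simple group-by. The sketch below is a hypothetical illustration only: the error records, rule names and the `bin_errors` helper are assumptions and not part of any Fractal tool.

```python
from collections import defaultdict

# Hypothetical error records; rule, format and cell names are illustrative only.
ERRORS = [
    {"rule": "pin_direction", "format": "LEF", "cell": "NAND2"},
    {"rule": "pin_direction", "format": "Liberty", "cell": "NAND2"},
    {"rule": "missing_cell", "format": "LEF", "cell": "INVX1"},
    {"rule": "pin_direction", "format": "LEF", "cell": "DFFX1"},
]


def bin_errors(errors, pivot, waived_cells=()):
    """Group the cells of non-waived errors by the chosen pivot key."""
    bins = defaultdict(list)
    for err in errors:
        if err["cell"] in waived_cells:
            continue  # a waiver suppresses every error reported on that cell
        bins[err[pivot]].append(err["cell"])
    return dict(bins)


by_rule = bin_errors(ERRORS, "rule")
by_format = bin_errors(ERRORS, "format", waived_cells={"DFFX1"})
print(by_rule)
print(by_format)
```

Pivoting on the rule shows which checks dominate the report, while pivoting on the format points to the view that is most likely out of sync.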

Another way to use Crossfire is debug visualization through the reporting dashboard. For example, if an error occurs after a LEF vs DEF check run, the designer can click the analyze button to open a message window. Then, on selecting the layout view, the portion of the layout contributing to the error is highlighted in a pop-up view.

Library and hard IP validation is key to ensuring smooth deployment for downstream tools in the design flow. Fractal’s Crossfire provides the facility to bridge many cross-domain design views and rule-based checklists to confirm the integrity of the lower-level design implementation. The GUI-driven dashboard and fingerprint diagram simplify diagnostic reporting, visualization and debugging.

Native checks can be combined with externally produced validation results (such as from DRC runs) and viewed through the navigation dashboard. Currently, more than 250 checks have been incorporated into Fractal Crossfire.

For more information on Fractal tools, including whitepapers on use cases and features, check this website LINK.