At advanced nodes, the clock is no longer just another signal. It is the most critical and sensitive electrical network on the chip, and the difference between meeting performance targets and missing the tape-out often comes down to a few picoseconds, buried deep inside the clock distribution network. Yet many design teams still rely on verification methods built for a world where margin was abundant and physics was forgiving. That world no longer exists.

ClockEdge delivers the SPICE-level precision, visibility and control that advanced node clock networks now require. Let’s examine how the company meets advanced node challenges and opens new innovation opportunities.

What ClockEdge Delivers and Why

At 28 nm, wide guard bands and coarse approximations could cover up hidden clock behavior, and a full design cost less than $50M. At 3 nm and 2 nm, margins have collapsed, variability dominates, and a single tape-out can exceed $700M. With stakes this high, any inaccuracy in clock analysis becomes an unacceptable risk.

Modern clock networks run so close to physical limits that even small inaccuracies in timing, jitter, power, or aging analysis can trigger cascading failures in silicon. These interactions are invisible to traditional flows: a design may appear to close timing and meet its power and reliability targets, yet still fail in silicon. The underlying problem is a lack of accuracy, visibility and control over the all-important clock network. The traditional approach applies static timing analysis across the design and reserves SPICE for critical paths only, because of SPICE's capacity and runtime limitations.

This approach misses subtle but critical interactions that cause the previously mentioned cascading failures.

ClockEdge tames this problem with a family of SPICE-accurate analysis engines for timing, power, jitter, and aging analysis of clock circuits. A patented SPICE-accurate digital simulation engine delivers full SPICE precision without the capacity and speed limitations that make traditional SPICE impractical for full-clock analysis.

ClockEdge’s Veridian Suite delivers sign-off precision at real-world scale and speed, applying SPICE-accurate truth across the entire clock network. It uncovers interactions that conventional flows miss and exposes how nanometer effects directly shape clock performance and reliability.

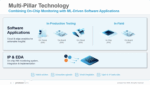

Components of the Veridian suite include:

- vTiming: Delivers SPICE-accurate, full-clock visibility from PLL to flop, exposing rail-to-rail failures, duty-cycle distortion, and hidden timing risks that define silicon performance.

- vPower: Pinpoints and reduces clock tree power using SPICE-accurate, power-aware analysis, enabling targeted optimization and fast, iterative design refinement.

- vAging: Models NBTI, HCI, and other stress effects to predict how clock paths degrade over time, exposing aging-induced timing drift, duty-cycle distortion and reliability loss.

- vJitter: Analyzes power-supply-induced noise with SPICE-level precision, revealing sub-picosecond timing variation and clock instability long before silicon.
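
To make one of the effects named above concrete, here is a minimal first-order sketch of how duty-cycle distortion accumulates along a clock path when each stage delays rising and falling edges by slightly different amounts. It is an illustration only, not ClockEdge's method or API: the `Stage` class, the `duty_cycle_after_chain` function, and all delay values are hypothetical, and real analysis would account for waveform shape, loading, and supply noise rather than fixed per-edge delays.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Stage:
    """Hypothetical clock buffer/inverter with fixed per-edge delays."""
    rise_delay_ps: float   # delay to the stage's rising output edge
    fall_delay_ps: float   # delay to the stage's falling output edge
    inverting: bool = False


def duty_cycle_after_chain(period_ps: float, input_duty: float,
                           stages: Iterable[Stage]) -> float:
    """Return the fraction of the period the clock is high at the chain output.

    First-order model: each stage shifts the rising and falling output
    edges independently, so the high pulse width changes by
    (fall_delay - rise_delay) per stage.
    """
    high_ps = period_ps * input_duty
    for stage in stages:
        if stage.inverting:
            # An inverter's output is high while its input is low.
            high_ps = period_ps - high_ps
        high_ps += stage.fall_delay_ps - stage.rise_delay_ps
        if not 0.0 < high_ps < period_ps:
            # The pulse has been swallowed: the clock no longer toggles.
            raise ValueError("clock pulse collapsed inside the chain")
    return high_ps / period_ps


if __name__ == "__main__":
    # Ten buffers, each 2 ps slower on the falling edge (assumed values).
    buffers = [Stage(rise_delay_ps=20.0, fall_delay_ps=22.0) for _ in range(10)]
    print(duty_cycle_after_chain(1000.0, 0.5, buffers))

    # The same mismatch in an inverter pair cancels to first order.
    inverters = [Stage(20.0, 22.0, inverting=True) for _ in range(2)]
    print(duty_cycle_after_chain(1000.0, 0.5, inverters))
```

Under these assumed numbers, a 2 ps per-stage mismatch that looks negligible in isolation grows to 20 ps of extra high time over ten buffers, which is the kind of accumulated effect that checking only a few critical paths can miss.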

Completing the picture is vHelm, the designer’s command center. vHelm provides instant visibility into how every clock decision affects timing, power, jitter, and aging, all at once.

Clock design is a system of tight interdependencies, where a single change that improves timing can degrade power, jitter, or aging unless these effects are evaluated together. vHelm exposes these interactions so designers can explore what-if scenarios, apply virtual ECO adjustments, and see waveform-accurate results in real time.

vHelm provides a unified workspace where designers can resize a buffer, adjust a constraint, change a gating strategy, or test a topology change, and then see how the entire clock network responds. Timing margins, power consumption, edge quality, and long-term reliability are all updated side by side, making design trade-offs clear before decisions are committed.

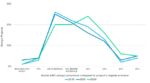

Together, the Veridian suite and vHelm deliver the breakthrough accuracy, visibility and control that advanced node clock networks can no longer function without. Thanks to ClockEdge, optimized clocking is now within reach for all design teams. There are many benefits. Some are illustrated in the graphic below.

To Learn More

I’ve just scratched the surface of what ClockEdge has to offer and how it will impact the quality and robustness of your next design. If qualities such as better design performance and longer device lifetimes appeal to you, check out ClockEdge here. If you’d like to see in more detail how the tool can help you, you can reach out to set up a discussion here. And that’s how ClockEdge delivers the precision, visibility and control that advanced node clock networks now demand.