Building next-generation systems is a real balancing act. The high-performance computing demands created by the growing AI and ML content in systems mean mounting challenges for power consumption, thermal load, and the never-ending appetite for faster data communications. Power, performance, and cooling are all top of mind for system designers. These topics were discussed at a lively webinar held in association with the AI Hardware Summit this year. Several points of view were presented, and the impact of better data channels was explored in some detail. If you’re interested in adding AI/ML to your next design (and who isn’t), don’t be dismayed if you missed this webinar. A replay link is coming so you can see how much impact flexible, scalable interconnect for AI HW system architectures can have.

Introduction by Jean Bozman, President Cloud Architects LLC

Jean moderated the webinar and provided some introductory remarks. She discussed the need to balance power, thermal load and performance in systems that transfer terabytes of data and deliver zettaflops of computing power across hundreds to thousands of AI computing units. 112G PAM4 transceiver technology was also discussed. She touched on the importance of system interconnects in achieving the balance these new systems need. Jean then introduced Matt Burns, who set the stage for what is available and what is possible for system interconnects.

Matt Burns, Technical Marketing Manager at Samtec

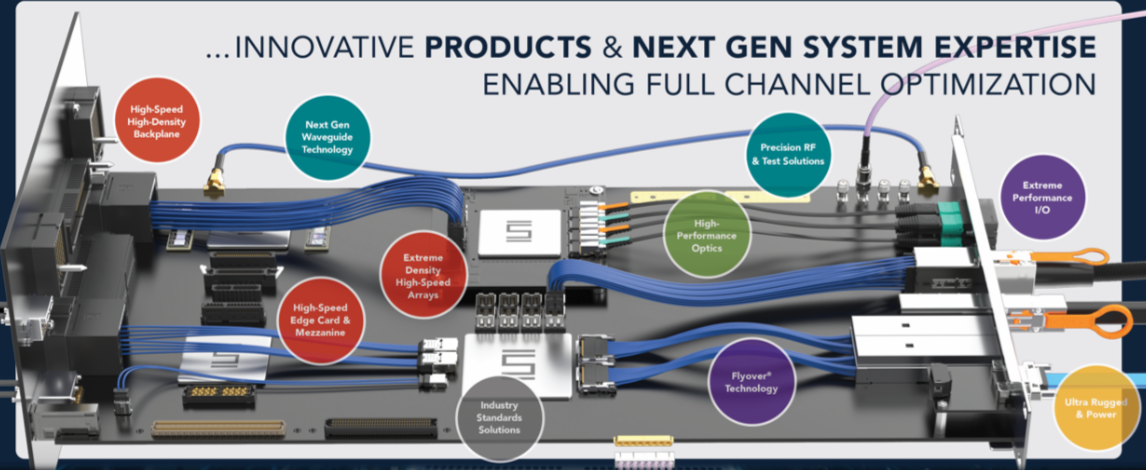

Matt observed that system and semiconductor products all need faster data communication channels to achieve their goals. Whether the problem is electrical, mechanical, optical or RF, the challenges are ever-present. Matt presented Samtec’s Silicon-to-Silicon™ connectivity portfolio and the wide variety of interconnect solutions the company offers. You really need to hear them all, but the accompanying graphic gives you a sense of the breadth of Samtec’s capabilities.

Next, the system integrator perspective was discussed.

Cédric Bourrasset, Head of High-Performance AI Computing at Atos

Atos develops large-scale computing systems. Cédric focuses on large AI model training, which brings many challenges. He integrates thousands of computing units, in collaboration with a broad ecosystem, to address those challenges. Scalability is a main challenge, and the ability to implement efficient, fast data communication is a big part of meeting it.

Dawei Huang, Director of Engineering at SambaNova Systems

SambaNova Systems builds an AI platform to help quickly deploy state-of-the-art AI and deep learning capabilities. Their focus is to bring enterprise-scale AI system benefits to businesses of all sizes, so that customers get the benefits of AI without massive investment. They are a provider of the technology used by system integrators.

Panel Discussion

What followed these introductory remarks was a very spirited and informative panel discussion moderated by Jean Bozman. You need to hear the detailed responses, but I’ll provide a sample of the questions that were discussed:

- What is driving the dramatic increase in size and power of compute systems? Is it the workloads, the size of the data or something else?

- What are foundation models and what are their unique requirements?

- What are the new requirements to support AI being seen by ODMs and OEMs – what do they need?

- Energy, power, compute – what is changing here with the new pressures seen? Where does liquid cooling fit?

- What are the new bandwidth requirements for different parts of the technology stack?

- How do the communication and power requirements change between enterprise, edge, cloud and multi-cloud environments?

To Learn More

This is just a sample of the very detailed and insightful discussions that were captured during this webinar. If you are considering adding AI/ML to your next project, I highly recommend you check out this webinar. You’ll learn a lot from folks who are in the middle of enabling these transitions.

You can access the webinar replay here. You can learn how much impact flexible, scalable interconnect for AI HW system architectures can have.
