Calibre was a game changer for DRC users when it first came out. Its hierarchical approach dramatically shortened runtimes while matching the accuracy of the existing, slower flat tools. One unsung part of this story, however, is that getting Calibre up and running required minimal effort from users. Two things are required for people to abandon what they are doing and adopt a new approach: the advantages of the change must be extremely compelling, and the effort required to make the change must be small. Otherwise, people will gladly keep doing what they are used to. Mentor knew this then, and they are apparently still keenly aware of it now.

Calibre in the Cloud is Mentor's name for their recent announcement about running Calibre in a cloud environment. A technical brief written by Omar El-Sewefy discusses several advantages of doing so. The main and most obvious one is scalability: cloud server offerings can usually scale up to impressively large numbers of processors. With this scalability comes the potential for higher throughput and the ability to handle peak loads without building massive infrastructure in-house. For many organizations, DRC runs are infrequent but place heavy demands on server resources, which makes cloud computing an attractive option.

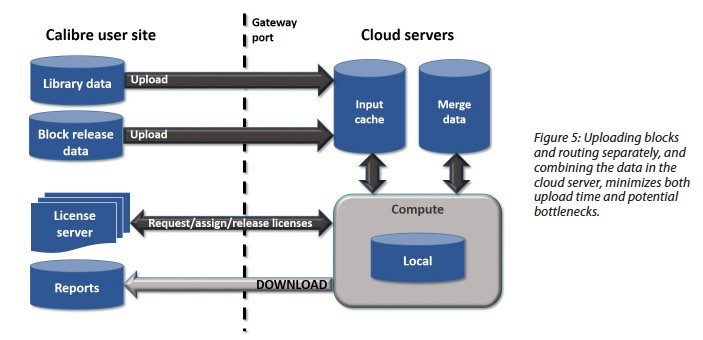

However, users do not want to spend excessive time configuring and setting up for cloud usage. Mentor laid the foundation for Calibre in the Cloud back in 2006, when they introduced the Calibre hyper-remote capability. This let users run on very large numbers of processors to get a significant performance and capacity boost. The process for running in the cloud is very similar to that of a non-cloud run, which minimizes the effort required to set up and run.

The technical brief covers three topics that make cloud runs fast and efficient. Mentor has worked closely with foundries to make sure that the most recent rule decks make the best use of Calibre’s advanced features. As a result, even with increasing rule complexity and data set size, runtimes and memory utilization have remained steady or decreased.

Transporting the data to the cloud is optimized by moving the cells individually rather than the flattened design, in what Mentor calls a hierarchical filing methodology. Of course, Calibre needs to assemble the entire design in order to work on it in the cloud. This step is called hierarchical construction mode, and it is where the hierarchical database (HDB) is created. Prior versions of Calibre allocated and started the worker processes up front and had them wait while the HDB was constructed. In a cloud environment it is more efficient to allocate processes only when they are needed. So one of the key changes in Calibre, called MTFlex, optimizes CPU utilization so that processors are not held idle when they are not needed.
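To see why deferring worker allocation matters when cores are billed by the hour, here is a rough back-of-the-envelope sketch in Python. The function names, the two-phase model (HDB construction followed by a parallel phase), and every number are hypothetical illustrations. They are not taken from the technical brief and do not represent Calibre's actual scheduling or any MTFlex interface.

```python
def core_hours_upfront(workers, hdb_hours, parallel_hours):
    """Core-hours consumed when all workers are allocated at the start
    and sit idle while the HDB is being constructed (hypothetical model)."""
    return workers * (hdb_hours + parallel_hours)


def core_hours_on_demand(workers, hdb_hours, parallel_hours, hdb_cores=1):
    """Core-hours consumed when only the cores building the HDB run first
    and the workers are allocated afterwards (hypothetical model)."""
    return hdb_cores * hdb_hours + workers * parallel_hours


def idle_core_hours_saved(workers, hdb_hours, parallel_hours, hdb_cores=1):
    """Difference between the two allocation strategies."""
    return (core_hours_upfront(workers, hdb_hours, parallel_hours)
            - core_hours_on_demand(workers, hdb_hours, parallel_hours, hdb_cores))


if __name__ == "__main__":
    # Illustrative numbers only: 1000 workers, 0.5 h of HDB construction
    # on a single core, then 2 h of parallel checking.
    saved = idle_core_hours_saved(workers=1000, hdb_hours=0.5, parallel_hours=2.0)
    print(f"Idle core-hours avoided: {saved}")
```

Under this simplified model the saving is the number of workers that would otherwise sit idle multiplied by the HDB construction time, so it grows with both the worker count and the length of the construction step.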

Calibre in the Cloud uses regular licenses, so there are no complications from that perspective. Results and reports can also be brought back for viewing in the same way as for local runs. Overall, Mentor has endeavored to make the entire operation as efficient and smooth as possible. Users can run locally if they want, and then quickly transition to the cloud when production load warrants it.

The technical brief, entitled "Calibre in the Cloud: Unlocking Massive Scaling and Cost Efficiencies," is interesting reading. It makes the point that close collaboration between customers, foundries, cloud providers and Mentor was necessary to deliver a robust solution for scaling by moving to the cloud. In addition, at the recent TSMC OIP Forum in Santa Clara, Mentor, Microsoft, TSMC and AMD jointly presented the results of using Calibre in the Cloud on a 500M gate design. The presentation on this case study is viewable on demand.

Interestingly, running Calibre in the cloud can be an effective solution for large and small companies alike. Each faces its own obstacles when resource needs peak. The technical brief can be downloaded from the Mentor website for a full reading.
