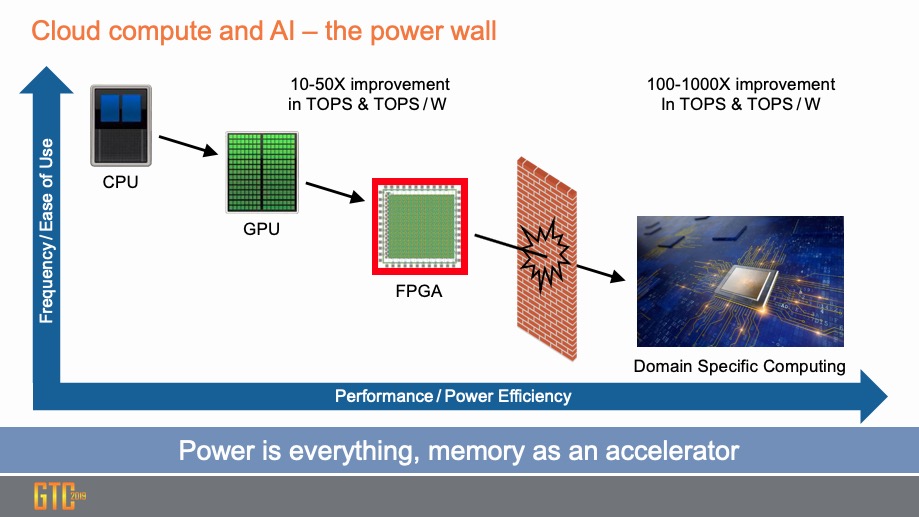

The use of machine learning (ML) to solve complex problems that traditional computing could not previously address is expanding at an accelerating rate. Even with advances in neural network design, ML’s efficiency and accuracy depend heavily on the training process. Training has moved from CPU-based software to GPUs and FPGAs, which offer big advantages because of their parallelism. However, specially designed, domain-specific computing solutions offer further significant advantages.

Because training is so compute intensive, total performance and performance per watt are both extremely important. Domain-specific hardware has been shown to offer improvements of several orders of magnitude over GPUs and FPGAs when running training workloads.

On December 12th, GLOBALFOUNDRIES (GF) and Enflame Technology announced a deep learning accelerator solution for training in data centers. The Enflame CloudBlazer T10 uses a Deep Thinking Unit (DTU) built on GF’s 12LP FinFET platform with 2.5D packaging. The T10 has more than 14 billion transistors and uses PCIe 4.0 and Enflame Smart Link for communication. The AI accelerator supports a wide range of data types, including FP32, FP16, BF16, Int8, Int16, Int32 and others.
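Of the formats listed, BF16 is probably the least familiar. It keeps FP32’s sign bit and 8-bit exponent but only 7 mantissa bits, so an FP32 value can be turned into BF16 by keeping its upper 16 bits. The Python sketch below illustrates that relationship using plain truncation. It is not a description of how the T10 converts between formats; hardware commonly rounds to nearest even rather than truncating, and the helper names are hypothetical.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision bit pattern of x."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def to_bf16_bits(x: float) -> int:
    """Convert x to BF16 by truncation: keep the upper 16 bits of its FP32
    pattern (1 sign, 8 exponent, 7 mantissa bits). Illustrative only; real
    hardware usually rounds to nearest even instead."""
    return fp32_bits(x) >> 16


def bf16_to_float(bits: int) -> float:
    """Widen a 16-bit BF16 pattern back to FP32 by zero-filling the low 16 bits."""
    return struct.unpack(">f", struct.pack(">I", bits << 16))[0]


for value in (1.0, 3.14159265, 65504.0, 1e38):
    b = to_bf16_bits(value)
    print(f"{value!r:>12} -> bf16 0x{b:04x} -> {bf16_to_float(b)!r}")
```

Because BF16 keeps FP32’s exponent range, a value as large as 1e38 survives the conversion with only a small loss of precision, whereas FP16 cannot represent anything above 65504. That wide range at half the storage cost is one reason BF16 is popular for training.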

The Enflame DTU core features 32 scalable intelligent processors (SIPs), grouped into 4 scalable intelligent clusters (SICs) of 8 SIPs each. HBM2 provides high-speed memory for the processing elements, and the DTU and HBM2 are integrated using 2.5D packaging.
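The 4 × 8 hierarchy can be modeled as a mapping from a flat SIP index to a (cluster, local index) pair. This is a minimal Python sketch assuming contiguous numbering (SIPs 0 to 7 in cluster 0, SIPs 8 to 15 in cluster 1, and so on); only the counts come from the announcement, while the numbering scheme and the names `SipAddress`, `locate` and `sips_in_cluster` are hypothetical.

```python
from dataclasses import dataclass

# Counts from the announcement: 4 SICs x 8 SIPs = 32 SIPs per DTU.
NUM_SICS = 4
SIPS_PER_SIC = 8


@dataclass(frozen=True)
class SipAddress:
    sic: int        # cluster index, 0 .. NUM_SICS - 1
    local_sip: int  # SIP index within its cluster, 0 .. SIPS_PER_SIC - 1


def locate(global_sip: int) -> SipAddress:
    """Map a flat SIP index (0..31) to its (cluster, local) position.
    Assumes hypothetical contiguous numbering, not Enflame's documented scheme."""
    if not 0 <= global_sip < NUM_SICS * SIPS_PER_SIC:
        raise ValueError(f"SIP index out of range: {global_sip}")
    sic, local = divmod(global_sip, SIPS_PER_SIC)
    return SipAddress(sic, local)


def sips_in_cluster(sic: int) -> list[int]:
    """Return the flat indices of every SIP in cluster `sic`."""
    if not 0 <= sic < NUM_SICS:
        raise ValueError(f"SIC index out of range: {sic}")
    start = sic * SIPS_PER_SIC
    return list(range(start, start + SIPS_PER_SIC))


print(locate(13))          # SIP 13 sits in cluster 1 at local position 5
print(sips_in_cluster(3))  # the last cluster holds SIPs 24 through 31
```

A two-level address like this is one way software might express locality: work placed on SIPs within the same cluster can share cluster-level resources before traffic has to cross between clusters.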

This design highlights some interesting advantages of GF’s 12LP FinFET process. Because ML training makes heavy use of SRAM, SRAM power consumption plays a major role in overall power efficiency, and GF’s 12LP low-voltage SRAM offers a big power reduction for this design. Another advantage of 12LP is a much higher level of interconnect efficiency compared to 28nm or 7nm. While 7nm offers a smaller feature size, it brings no commensurate improvement in routing density for the higher-level metal layers. For a highly connected design like the DTU, this makes 12LP a uniquely efficient process node. Enflame is also taking advantage of GF’s comprehensive selection of IP libraries for this project. The Enflame T10 has been sampled and is scheduled for production in early 2020 at GF’s Fab 8 in Malta, New York.
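Low-voltage SRAM pays off so strongly because first-order CMOS dynamic (switching) power scales with the square of the supply voltage, P = αCV²f. The Python sketch below assumes illustrative values (a 0.8 V nominal supply, a 0.7 V low-voltage supply, and made-up activity, capacitance and frequency); none of these are published 12LP SRAM figures. It also ignores leakage, which generally falls at lower voltage as well.

```python
def dynamic_power(alpha: float, capacitance_f: float, vdd_v: float, freq_hz: float) -> float:
    """First-order CMOS dynamic power in watts: P = alpha * C * V**2 * f."""
    return alpha * capacitance_f * vdd_v ** 2 * freq_hz


def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """Dynamic power at v_new as a fraction of power at v_old,
    holding activity, capacitance and frequency fixed."""
    return (v_new / v_old) ** 2


# Illustrative, assumed values; not published 12LP SRAM parameters.
ALPHA = 0.1         # switching activity factor
CAPACITANCE = 1e-9  # effective switched capacitance, farads
FREQ = 1e9          # clock frequency, hertz

p_nominal = dynamic_power(ALPHA, CAPACITANCE, 0.8, FREQ)
p_low_v = dynamic_power(ALPHA, CAPACITANCE, 0.7, FREQ)
print(f"nominal: {p_nominal:.4f} W, low-voltage: {p_low_v:.4f} W")
print(f"ratio: {relative_dynamic_power(0.7, 0.8):.3f}")
```

With these assumed numbers, a 12.5% drop in supply voltage cuts dynamic power to roughly 77% of its original level, a saving of about 23%, without any change in activity, capacitance or clock frequency. In a design where SRAM accounts for much of the switching, that quadratic effect adds up quickly.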

A company like Enflame has to walk a very fine line when designing an accelerator like the T10. The specific requirements of machine learning drive many of the architectural decisions, and on-chip communication and reconfigurability are essential elements. The T10 excels in this area with its on-chip reconfiguration algorithm. Choosing 12LP gives Enflame strong performance without the risk and expense of moving to a more advanced node. GF can also offer HBM2 and 2.5D packaging as an integrated solution, further reducing risk and complexity for the project.

It is widely understood that larger training data sets improve the accuracy and performance of ML applications. The only way to handle these growing workloads is with fast, efficient accelerators designed specifically for the task, and the CloudBlazer T10 looks like an attractive solution. The full announcement and more information about both companies are available on the GLOBALFOUNDRIES website.
