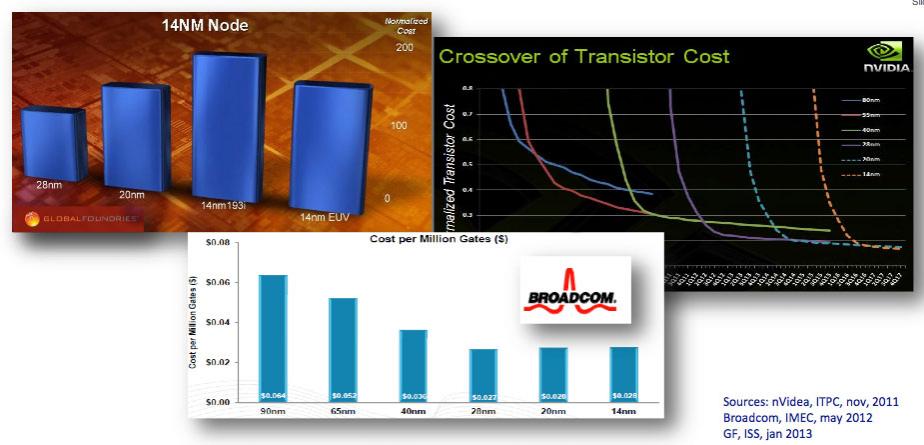

It is beginning to look as if 28nm transistors, which are cheaper per million gates than those of any earlier process such as 45nm, may also turn out to be cheaper per million gates than those of any later process such as 20nm.

What we know so far: FinFET seems to be a difficult technology, because the 3D structure requires novel manufacturing, but it seems to be stable once mastered. Intel ramped it at 22nm and TSMC says they are on track to have it at 16nm. What Intel doesn’t have at 22nm is double patterning, which TSMC does have at 20nm. Double patterning seems to have severe variability problems even when mastered, and TSMC have not yet ramped 20nm to high-volume manufacturing (HVM), so there is still an element of wait-and-see there.

The cheap form of double patterning is non-self-aligned, meaning that the alignment of the two patterns on a layer depends entirely on the stepper repeatability, which is apparently of the order of 4nm. This means there is huge variation in any sidewall effects (such as sidewall capacitance), since the distance between the “plates” of the capacitor may vary by up to 4nm. This variability is very hard to remove (the stepper people are, of course, trying to tighten up repeatability, which will be needed in any case for later processes). Instead, EDA tools need to analyze it and designers have to live with it, but the margins to live with are getting vanishingly small.
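To put a rough number on this, here is a first-order sketch of non-self-aligned (litho-etch-litho-etch) overlay error. It assumes a simple parallel-plate model (capacitance inversely proportional to spacing, fringing ignored) and a hypothetical 32nm nominal spacing between a wire printed by the second exposure and its two neighbours printed by the first. An overlay shift moves the wire toward one neighbour and away from the other by the same amount. The function names are illustrative only.

```python
# First-order sketch: effect of a double-patterning overlay shift on the
# sidewall (coupling) capacitance between a wire and its two neighbours.
# Assumes a parallel-plate model, C proportional to 1/gap, fringing ignored.
# The 32nm nominal gap is a hypothetical value chosen for illustration.

def plate_capacitance_ratio(nominal_gap_nm: float, gap_nm: float) -> float:
    """Capacitance relative to the nominal case, with C proportional to 1/gap."""
    return nominal_gap_nm / gap_nm


def neighbour_capacitances(nominal_gap_nm: float, overlay_shift_nm: float):
    """A wire from the second exposure shifts toward one neighbour (from the
    first exposure) and away from the other by the same overlay error."""
    closer_gap = nominal_gap_nm - overlay_shift_nm
    farther_gap = nominal_gap_nm + overlay_shift_nm
    return (plate_capacitance_ratio(nominal_gap_nm, closer_gap),
            plate_capacitance_ratio(nominal_gap_nm, farther_gap))


if __name__ == "__main__":
    NOMINAL_GAP_NM = 32.0  # hypothetical spacing, for illustration only
    for shift in (0.0, 2.0, 4.0):
        closer, farther = neighbour_capacitances(NOMINAL_GAP_NM, shift)
        print(f"shift {shift:.0f}nm: closer C x{closer:.3f}, "
              f"farther C x{farther:.3f}")
```

With a 4nm shift in this model, the closer neighbour’s coupling capacitance rises by about 14% and the farther one’s falls by about 11%, and it is this kind of spread that the EDA tools have to analyze.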

There is a more expensive form of double patterning that is self-aligned, using spacers formed on a mandrel. The material to be patterned is laid down on the wafer. A sacrificial mandrel is patterned on top of it, a spacer film is deposited over the mandrel and etched back so that it remains only on the mandrel’s sidewalls, and then the mandrel is removed. The remaining sidewall spacers form the pattern, which is then used to etch the underlying material. This involves a lot more process steps and is a lot more expensive, but it does have less variability, since the two sidewall lines formed on each mandrel are aligned to it by construction rather than placed by a second exposure. It looks like we will need to use this approach to construct the FinFET transistors and their gates at 10nm and below.
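Why the spacer approach is self-aligned shows up in a one-dimensional sketch. This is a simplified geometric model with hypothetical dimensions in nanometres: each mandrel printed in a single exposure leaves one spacer line on each sidewall, so the line positions are set by the mandrel edges and the deposited spacer thickness rather than by a second exposure’s overlay. The function names are illustrative.

```python
# Simplified 1-D sketch of self-aligned (spacer/mandrel) double patterning.
# Mandrels are printed in one exposure; a spacer of fixed thickness remains on
# both sidewalls of each mandrel; the mandrels are then removed and the
# spacers become the pattern. All dimensions here are hypothetical.

def sadp_lines(mandrel_pitch: float, mandrel_width: float,
               spacer_thickness: float, n_mandrels: int):
    """Return (left, right) edges of the spacer lines left after mandrel removal."""
    lines = []
    for k in range(n_mandrels):
        m_left = k * mandrel_pitch
        m_right = m_left + mandrel_width
        lines.append((m_left - spacer_thickness, m_left))    # left sidewall spacer
        lines.append((m_right, m_right + spacer_thickness))  # right sidewall spacer
    return lines


def pitches(lines):
    """Distance between the left edges of consecutive lines."""
    return [b[0] - a[0] for a, b in zip(lines, lines[1:])]


if __name__ == "__main__":
    # mandrel_width + spacer_thickness == mandrel_pitch / 2 gives a uniform
    # line pitch equal to half the printed mandrel pitch.
    lines = sadp_lines(mandrel_pitch=80.0, mandrel_width=20.0,
                       spacer_thickness=20.0, n_mandrels=3)
    print(lines)
    print(pitches(lines))
```

With an 80nm mandrel pitch, 20nm mandrels and 20nm spacers, the six resulting lines sit on a uniform 40nm pitch, half the printed pitch. The variability does not vanish entirely: it moves into the mandrel width and spacer thickness, which is why the sketch picks the mandrel width plus spacer thickness to equal half the mandrel pitch.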

A general rule in fabrication is to touch the wafer as few times as possible, and double patterning inevitably drives the number of steps up. One way to offset the extra cost is to use bigger wafers and, of course, there is a big push towards 450mm wafers. These provide about the same reduction in cost per million transistors as we used to get from a process generation (where the rule of thumb was twice as many transistors for a 15% increase in wafer cost, leaving the cost per million transistors at about 1.15/2 ≈ 58% of the previous node’s, a reduction of a little over 40%). But 450mm reduces the cost of all processes, and so probably the only thing that will ever be cheaper than 28nm on 300mm wafers will be 28nm on 450mm wafers. Or perhaps 28nm on 300mm wafers running in a fully-depreciated fab.
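The cost arithmetic can be written out directly. This sketch takes the rule-of-thumb figures from the paragraph (twice as many transistors per wafer, 15% higher wafer cost) and, for 450mm, uses only the wafer-area ratio plus a hypothetical wafer-cost premium of 30%. That premium is an assumption for illustration, not a published figure, and edge loss and partial dies are ignored.

```python
# Sketch of the rule-of-thumb arithmetic for cost per million transistors.
# The 2x density and 15% wafer-cost figures come from the text; the 450mm
# wafer-cost premium below is a hypothetical input, not real data.

def relative_cost_per_transistor(wafer_cost_factor: float,
                                 transistors_per_wafer_factor: float) -> float:
    """New cost per transistor as a fraction of the old cost per transistor."""
    return wafer_cost_factor / transistors_per_wafer_factor


def wafer_area_ratio(new_diameter_mm: float, old_diameter_mm: float) -> float:
    """Ratio of wafer areas (ignores edge exclusion and partial dies)."""
    return (new_diameter_mm / old_diameter_mm) ** 2


if __name__ == "__main__":
    node = relative_cost_per_transistor(1.15, 2.0)
    print(f"new node: {node:.3f} of previous cost ({1 - node:.1%} cheaper)")

    area = wafer_area_ratio(450.0, 300.0)
    HYPOTHETICAL_450MM_COST_FACTOR = 1.3  # assumption for illustration only
    wafer = relative_cost_per_transistor(HYPOTHETICAL_450MM_COST_FACTOR, area)
    print(f"450mm: {area:.2f}x area, {wafer:.3f} of previous cost")
```

The node step works out to 0.575 of the previous cost per transistor. A 450mm wafer has 2.25 times the area of a 300mm one, so under the assumed 30% wafer-cost premium it would land at about 0.578, roughly the same saving as a process generation.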

The other hope for cost reduction is EUV lithography. I’m skeptical about it, as you know if you’ve read my other blogs about it. Even if it works, people appear to be planning to do 10nm without it (except for pilot work). EUV is almost comical if you describe it to someone. Droplets of molten tin are shaped with a small laser. Then a gigawatt-sized power plant blasts the molten tin with half a dozen huge lasers, vaporizing it and producing a little bit of EUV light. But everything absorbs EUV light, including air, so the whole light path has to be in a vacuum. Then the light is bounced off half a dozen mirrors and a reflective mask. And I use “reflective” in a relative way, since only about 70% of the light is reflected by each mirror, so roughly 30% is lost at every bounce. These are multilayer mirrors that work by interference (Bragg reflection), because a regular polished metal mirror would simply absorb the EUV. So only a few percent of the light reaches the photoresist. And if that isn’t enough, the masks cannot be made defect-free. Contamination on the mask will print too, since we (probably) can’t put a pellicle on it to keep contamination out of the focal plane: the pellicle would absorb the EUV as well. But maybe it will all come good. After all, when you first hear about immersion lithography or CMP, they sound like pretty unlikely ways to make electronics too.
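The compounding of mirror losses is easy to check. This sketch assumes roughly 70% reflectivity per multilayer surface and treats the mirrors and the mask as a simple chain of reflections; the surface counts are illustrative, since real scanners differ.

```python
# Sketch: compounding loss through a chain of EUV multilayer reflectors.
# Assumes ~70% reflectivity per surface; the surface counts are illustrative
# ("half a dozen mirrors and a reflective mask" is about seven surfaces).

def fraction_delivered(reflectivity: float, n_surfaces: int) -> float:
    """Fraction of the light remaining after n reflections."""
    return reflectivity ** n_surfaces


if __name__ == "__main__":
    for n in (7, 9, 11):
        print(f"{n} surfaces at 70%: {fraction_delivered(0.70, n):.1%} delivered")
```

Six mirrors plus the mask (seven surfaces) leave about 8% of the light; nine surfaces bring it to about 4%, and eleven to about 2%.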

If this scenario is true, there are a couple of big problems. The first is that electronics will stop getting cheaper. You can have faster processors, lower power, more cores or whatever, but it will cost you. In the past we have always had a virtuous cycle where costs went down, performance and power improved, and design size increased. So even if you didn’t want to move from 90nm to 65nm for performance, power or size reasons, the cost reduction made you do it anyway. That will no longer be true. Yes, Apple’s Ax chips for high-end smartphones will move even if the chips cost twice as much: in a $600 phone you won’t notice. But the mainstream smartphone market, and the segment where high growth is predicted, is sub-$100. Those chips will all have to be made at 28nm for cost reasons and make do without what 20nm and below offers. Products that can support a premium for improved performance will benefit, of course, but we’ve never been in an era where next year’s quad-core chip costs twice what this year’s dual-core chip did.

The other big problem is this: if only a few designs move to these later nodes, the bleeding-edge designs that really need the performance, will that be enough to justify the multi-billion-dollar investment in developing the processes and building the fabs? Those leading-edge smartphone, router and microprocessor chips can go to 22/20nm for a year, then move to 16/14nm. But then…crickets. All the other designs can’t afford to pay the premium and will stay at 28nm. Chip design will be like other industries, batteries say, improving by at most a few percent per year and no longer with any exponential component.

To be fair, Intel have said publicly that they see costs continuing to come down. Various theories are around as to why this is. It seems likely that they believe it rather than just posturing. Maybe they know something nobody else does. I know equipment people who say that they no longer get any access to Intel fabs so don’t really know everything their equipment is being used for. Maybe they are mistaken. Or maybe it is true for Intel who are transitioning from a very high-margin microprocessor business that is not very cost-sensitive to a foundry/SoC business that is very cost-sensitive, and so are also transitioning from not having good wafer costs to being forced to be competitive with everyone else. I’ve said before that managers at Intel often think that they are better than they are since there is so much margin bleedthrough from microprocessors that everyone else looks good. Maybe this is just another facet of that phenomenon.

See also my report on EUV from Semicon West in July.

See also my take on Intel’s cost-reduction statements.
