With the advancement of semiconductor technologies, ever-larger SoCs bank on higher design densities rather than on any leeway to increase chip area and package size, a phenomenon often overlooked. The result is larger designs with fewer pins bonded out of ever-shrinking packages;…

Enabling RISC-V & AI Innovations with Andes AX45MPV Running Live on S2C Prodigy S8-100 Prototyping System

Qualifying an AI-class RISC-V SoC demands proving that…

DAC News – A New Era of Electronic Design Begins with Siemens EDA AI

AI is the centerpiece of DAC this year.…

Arteris at the 2025 Design Automation Conference #62DAC

Key Takeaways: Expanded Multi-Die Solution: Arteris showcases its…

Synopsys VC VIP for Memory

Synopsys has been gradually broadening its portfolio of verification IP (VIP). The VIP is 100% native SystemVerilog, with native debug in Verdi (acquired with SpringSoft last year and now fully integrated into Verification Compiler), and it runs with native performance on VCS. Going forward, there are source-code test suites.…

A couple of misconceptions about FD-SOI

We have discussed FD-SOI technology extensively on Semiwiki, explaining its main advantages (Faster, Cooler, Simpler), sometimes leading to very deep technical discussions thanks to Semiwiki readers and their posts. I recently came across an article, “Samsung & ST Team Up on 28nm FD-SOI”. This article includes many…

Quicklogic Delivers First Wearable Sensor Hub with Under 150 µW Standby

I have talked before about how the Internet of Things (IoT) doesn’t require enormous, power-hungry SoCs. We all accept, or at least put up with, having to recharge our phones daily. But smart pedometers (or whatever a good name for Fitbit-like products is) had better last a week or two between charges.

Today, Quicklogic…
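To put a standby figure like this in perspective, it helps to convert power into runtime. The sketch below is ideal arithmetic only: the 100 mAh, 3.7 V cell is a hypothetical wearable battery, the 150 µW load counts only the hub's standby power (no sensors, radio or active bursts), and `battery_life_days` is an illustrative helper, not anything from Quicklogic.

```python
def battery_life_days(capacity_mah: float, voltage_v: float, load_uw: float) -> float:
    """Ideal runtime in days: stored energy divided by a constant load power.

    Ignores converter losses, self-discharge and any active-mode bursts.
    """
    energy_uwh = capacity_mah * voltage_v * 1000.0  # mAh * V = mWh; x1000 -> uWh
    hours = energy_uwh / load_uw                    # uWh / uW = hours
    return hours / 24.0

# Hypothetical cell: 100 mAh at 3.7 V, with the hub idling at 150 uW.
print(round(battery_life_days(100, 3.7, 150), 1))  # 102.8
```

Even with generous margins for real-world losses, a standby budget in this range leaves most of the battery for the sensors and radio that actually set the "week or two" figure.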

Momentum Builds For 64-bit ARMv8-A

No doubt about it: the summer break has ended and it’s time for big announcements, like this one from ARM, “Momentum Builds For the Next Generation of ARM Processors”. The key information is about ARMv8-A market adoption: a total of 27 companies have signed agreements for the company’s ARMv8-A technology as…

Google Glass with purpose, not just another smart wear

When I first heard of Google Glass, last year or earlier while it was still in the making, I thought it was yet another device that puts a screen in front of your eyes: wearable glasses through which you see a virtual extension of the reality you are interacting with (here is a demo), a technology called Augmented Reality (…

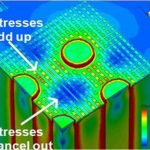

Managing Stress in 3D

A new publication on mechanical stress in integrated circuits, co-edited by Valeriy Sukharev, Principal Engineer for Calibre R&D at Mentor Graphics, has just been released by AIP Publishing. “Stress-Induced Phenomena and Reliability in 3D Microelectronics” includes 14 key papers from four international workshops…

Design Collaboration across Multiple Sites

Any SoC or IC design project, whether implemented at a single design site or across multiple sites, requires data management tools for things such as a central data repository and file revision management, so that work is coordinated effectively among team members. Given the challenge of meeting the shrinking…

MIPS 64-bit CPU Architecture

Imagination Technologies has just launched the 5th generation of the MIPS CPU core, the 64-bit Warrior I6400 family, offering full compatibility with the previous 32-bit architecture. MIPS Warrior I-class processor cores offer 64-bit processing in applications including embedded, mobile, digital consumer,…

How to detect weak nodes in a power-off analog circuit?

Most analog cells have a power-off mode intended to reduce power consumption. In this mode, all the circuit branches between the supply lines are set to a high-impedance state by driving MOS gates to a blocking voltage. The situation is similar to that of tri-state digital circuits.

When a branch is set in that high impedance…
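One way to flag candidate weak nodes is a reachability search over the transistor netlist. This assumes a "weak" node is one left with no conducting channel path to either supply once every blocked device is treated as an open circuit. The `Mos` record, `find_floating_nodes` and the example netlist are hypothetical, and the sketch ignores leakage, gate-terminal connections and passive devices, all of which a real checker would need to model.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Mos:
    """Hypothetical MOS model: channel terminals plus power-off gate state."""
    name: str
    drain: str
    source: str
    conducting: bool  # False when the gate is driven to its blocking voltage

def find_floating_nodes(devices, rails=("VDD", "VSS")):
    """Return nodes with no conducting channel path to any supply rail."""
    adjacency = {}
    nodes = set(rails)
    for d in devices:
        nodes.update((d.drain, d.source))
        if d.conducting:  # only conducting channels connect their terminals
            adjacency.setdefault(d.drain, []).append(d.source)
            adjacency.setdefault(d.source, []).append(d.drain)
    # Breadth-first search outward from the rails through conducting channels.
    reached = set(rails)
    queue = deque(rails)
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt not in reached:
                reached.add(nxt)
                queue.append(nxt)
    return sorted(nodes - reached)

# A fully blocked three-device stack plus one pull-down that stays on.
devices = [
    Mos("MP1", drain="n1", source="VDD", conducting=False),
    Mos("MN1", drain="n1", source="n2", conducting=False),
    Mos("MN2", drain="n2", source="VSS", conducting=False),
    Mos("MN3", drain="out", source="VSS", conducting=True),  # power-down clamp
]
print(find_floating_nodes(devices))  # ['n1', 'n2']
```

Here the internal nodes of the blocked stack are reported as floating, while `out` is held by the clamp device. A graph search keeps the check linear in the size of the netlist, which matters for full-chip runs.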

Flynn Was Right: How a 2003 Warning Foretold Today’s Architectural Pivot