Since power has become a critical factor in semiconductor chip design, the emphasis is on lowering the supply voltage to reduce power consumption. However, the threshold voltage needed to switch devices cannot go below a certain limit, and this results in an extremely narrow noise margin between the two. And that gets further…

WEBINAR: Edge AI Optimization: How to Design Future-Proof Architectures for Next-Gen Intelligent DevicesEdge AI is rapidly transforming how intelligent solutions…Read More

WEBINAR: Edge AI Optimization: How to Design Future-Proof Architectures for Next-Gen Intelligent DevicesEdge AI is rapidly transforming how intelligent solutions…Read More WEBINAR Unpacking System Performance: Supercharge Your Systems with Lossless Compression IPsIn today's data-driven systems—from cloud storage and AI…Read More

WEBINAR Unpacking System Performance: Supercharge Your Systems with Lossless Compression IPsIn today's data-driven systems—from cloud storage and AI…Read More ChipAgents Tackles Debug. This is ImportantInnovation is never ending in verification, for performance,…Read More

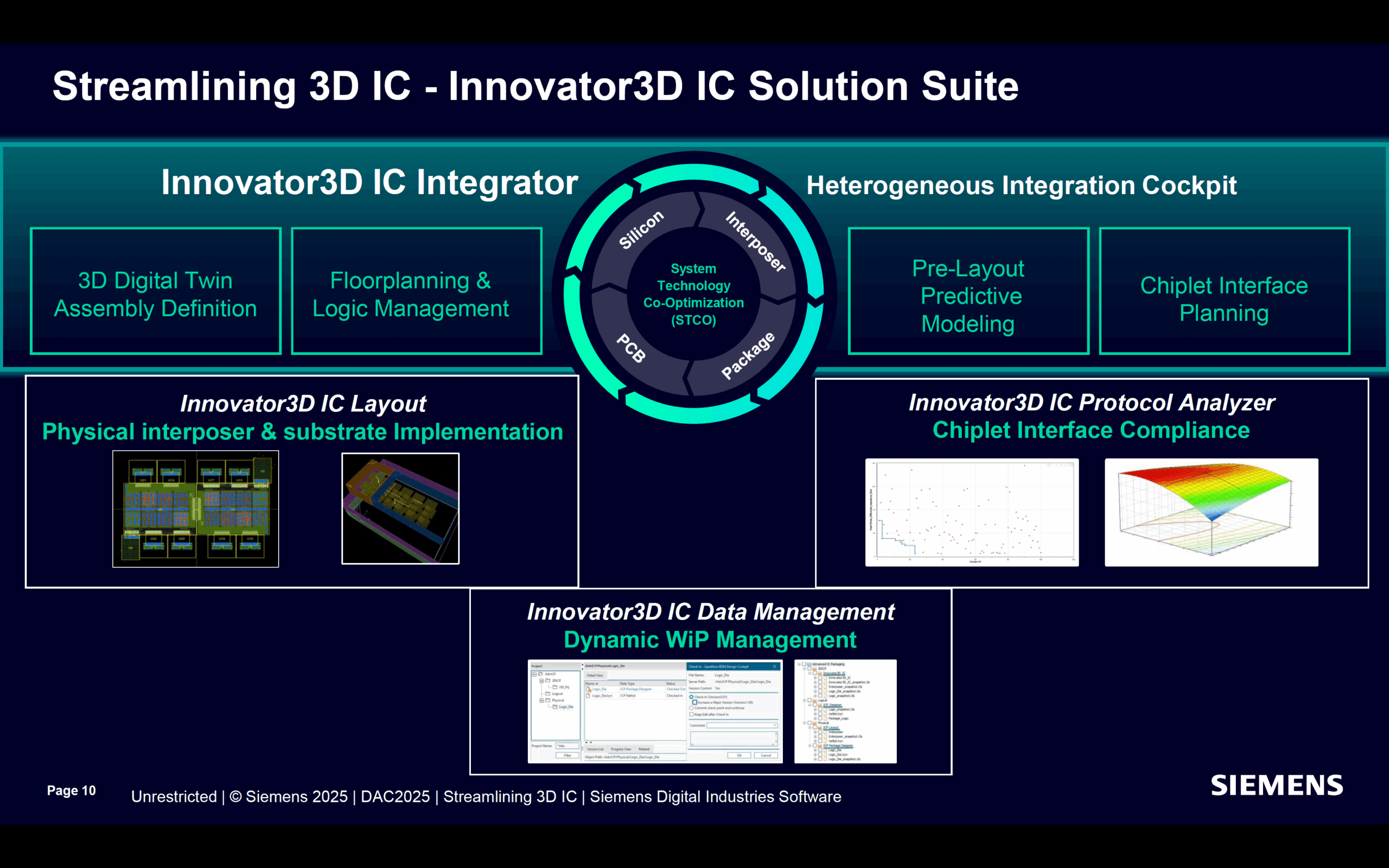

ChipAgents Tackles Debug. This is ImportantInnovation is never ending in verification, for performance,…Read More Siemens EDA Unveils Groundbreaking Tools to Simplify 3D IC Design and AnalysisIn a major announcement at the 2025 Design…Read More

Siemens EDA Unveils Groundbreaking Tools to Simplify 3D IC Design and AnalysisIn a major announcement at the 2025 Design…Read More CEO Interview with Faraj Aalaei of CognichipFaraj Aalaei is a successful visionary entrepreneur with…Read More

CEO Interview with Faraj Aalaei of CognichipFaraj Aalaei is a successful visionary entrepreneur with…Read MoreWhat Does Intel Look Like 10 Years From Now?

Intel (INTC) CEO Brian Krzanich keynoted the Citi Global Technology Conference last week. This was a precursor to the Intel Developer Forum in San Francisco this week. Normally these types of events are scripted dog-and-pony shows, but sometimes interesting information comes out. Take the first question, for example:

What does Intel…

Design & EDA Collaboration Advances Mixed-Signal Verification through VCS AMS

Last week I had the rare opportunity to attend a webinar in which an SoC design house, a leading IP provider, and a leading EDA tool provider jointly presented how the tool’s capabilities are being used to simulate large mixed-signal designs faster without sacrificing accuracy. It’s always been a struggle to combine design…

EDA Plus ARM Equals Big Views!

Looking at the SemiWiki analytics, one of the top search terms bringing traffic to our site is ARM, just about anything ARM. In fact, that’s what the next SemiWiki book will be about. Yes, ARM is that interesting. While EDA is also one of our top search terms, EDA+ARM will get the most views, absolutely. And let’s face it, bloggers…

TSMC OIP: Registration Open

It’s that time of year again! The 4th TSMC Open Innovation Platform Ecosystem Forum is coming up on September 30th. As usual, it will be held at the San Jose conference center. The TSMC OIP Ecosystem Forum brings together TSMC’s design ecosystem companies and their customers to share real-world solutions to today’s design challenges.…

Kilimanjaro

For several weeks I have been trying to put out one blog per day rather than being like London buses, where there are none for ages and then three come along at once. Or SFMuni buses, for that matter. This week has been more of the same. Well, OK, I skipped Labor Day because… Labor Day. All you IC types will be busy eating chips, not designing…

SmartScan Addresses Test Challenges of SoCs

As semiconductor technology advances, ever-larger SoCs rely on higher design density rather than on any increase in chip area or package size, a phenomenon that is often overlooked. The result is larger designs with fewer pins bonded out of ever-shrinking packages;…

Synopsys VC VIP for Memory

Synopsys have been gradually broadening their portfolio of verification IP (VIP). It is 100% native SystemVerilog, with native debug using Verdi (acquired from SpringSoft last year and now fully integrated into Verification Compiler), and it runs with native performance in VCS. Going forward, there are source code test suites…

A couple of misconceptions about FD-SOI

We have discussed FD-SOI technology extensively on SemiWiki, explaining its main advantages (Faster, Cooler, Simpler), sometimes leading to very deep technical discussions thanks to SemiWiki readers and their posts. I recently came across an article, “Samsung & ST Team Up on 28nm FD-SOI”. This article includes many…

Quicklogic Delivers First Wearable Sensor Hub with Under 150 µW Standby

I have talked before about how the Internet of Things (IoT) doesn’t require enormous, power-hungry SoCs. We all accept, or at least put up with, having to recharge our phones daily. But smart pedometers (or whatever the right name for Fitbit-like products is) had better last a week or two between charges.

Today, Quicklogic…

Facing the Quantum Nature of EUV Lithography