“If a tree falls in a forest and no one is around to hear it, does it make a sound?” is a philosophical thought experiment that raises questions regarding observation and perception [Source: Wikipedia]. Setting aside the philosophical aspects, if no one was present where a sound was generated, the sound was lost forever. That was true until the advent of audio-related technologies, starting with microphones and loudspeakers. With them, one can be seated in a far corner of a very large auditorium and still hear a speech being delivered from the podium. Audio technology has advanced a lot since its early days. Loudspeakers have progressed through stereo, quad, 5.1, 7.1, large speaker arrays, Ambisonics, and Dolby Atmos. Headphones have advanced through stereo and multi-driver to binaural.

While audio technologies have progressively brought the listener closer to aural immersion, they do not fully mimic the experience of the natural world. There is more to that experience than just hearing a sound, and the phrase “you had to be there” has some truth to it. This experience is termed the 3D or spatial audio experience. To deliver it, the rendering technology must also sense the listener’s movement relative to the assumed location of the sound source and continuously update what the listener hears. Gaming, augmented reality and virtual reality have introduced further challenges to achieving a realistic aural experience.

So, how can audio electronics mimic the aural experience of the natural world and the virtual world?

Recently, CEVA and VisiSonics co-hosted a webinar titled “Spatial Audio: How to Overcome Its Unique Challenges to Provide A Complete Solution.” The presenters were Bryan Cook, Senior Team Leader, Algorithm Software, CEVA, Inc. and Ramani Duraiswami, CEO and Founder, VisiSonics, Inc. Bryan and Ramani explained spatial audio, how it works, the importance of head tracking, the challenges faced and their companies’ respective offerings for a complete solution.

The following are some excerpts based on what I gathered from the webinar.

Spatial/3D Audio

While surround sound technology renders a good listening experience, the sound itself is mixed for a given sweet spot. When the listener looks in any direction other than straight ahead, the immersion of surround sound breaks. Audiovisual media lose realism. Video games lose location information that is crucial to virtual survival.

Sound in a real-world scenario comes from all directions: up, down, left, right, rear and front. The sound source typically stays fixed while the listener may be moving. A spatial/3D audio experience reproduces the realistic aural experience of the real world or the virtual world, as the case may be. Spatial audio technology is being deployed in music, gaming, audio/visual presentations, automotive and defense applications. The technology is delivered primarily via headphones/TWS earbuds, but also through smart speakers/sound bars, and AR/VR/XR devices.

How does Spatial Audio Work?

Experiencing spatial sound relies on some primary and secondary cues.

The primary cues are the interaural time difference (ITD) and the interaural level difference (ILD), both of which arise because a sound travels a different path from the source to each ear. ITD is the difference in arrival time of a sound at each ear. ILD is the difference in volume of a sound at each ear.

The secondary cues are the position-dependent frequency changes to the sound. The shape of the listener’s head, ears and shoulders amplifies or attenuates sounds at different frequencies. Low-frequency sounds are either not affected or affected consistently. Mid-frequency transformations depend on the head and body shape, while high-frequency transformations depend on the ear shape. The overall effect of these transformations can be on the order of tens of dB.
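To give a feel for the size of the ITD cue, here is a minimal sketch using Woodworth's classic spherical-head approximation, ITD = (a/c)(θ + sin θ). This formula was not part of the webinar; the head radius and speed of sound are assumed typical values, and the function name is purely illustrative.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average head radius (not from the webinar)
SPEED_OF_SOUND_M_S = 343.0   # approximate speed of sound in air at room temperature


def woodworth_itd_seconds(azimuth_deg):
    """Approximate ITD for a distant source at 0..90 degrees azimuth.

    0 degrees is straight ahead and 90 degrees is directly to one side.
    Uses the spherical-head model: ITD = (a / c) * (theta + sin(theta)).
    """
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        itd_us = woodworth_itd_seconds(az) * 1e6
        print(f"azimuth {az:2d} deg -> ITD {itd_us:6.1f} microseconds")
```

Under these assumptions the ITD is zero for a source straight ahead and grows to roughly two thirds of a millisecond for a source directly to one side, which is why the cue is so sensitive to small head rotations.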

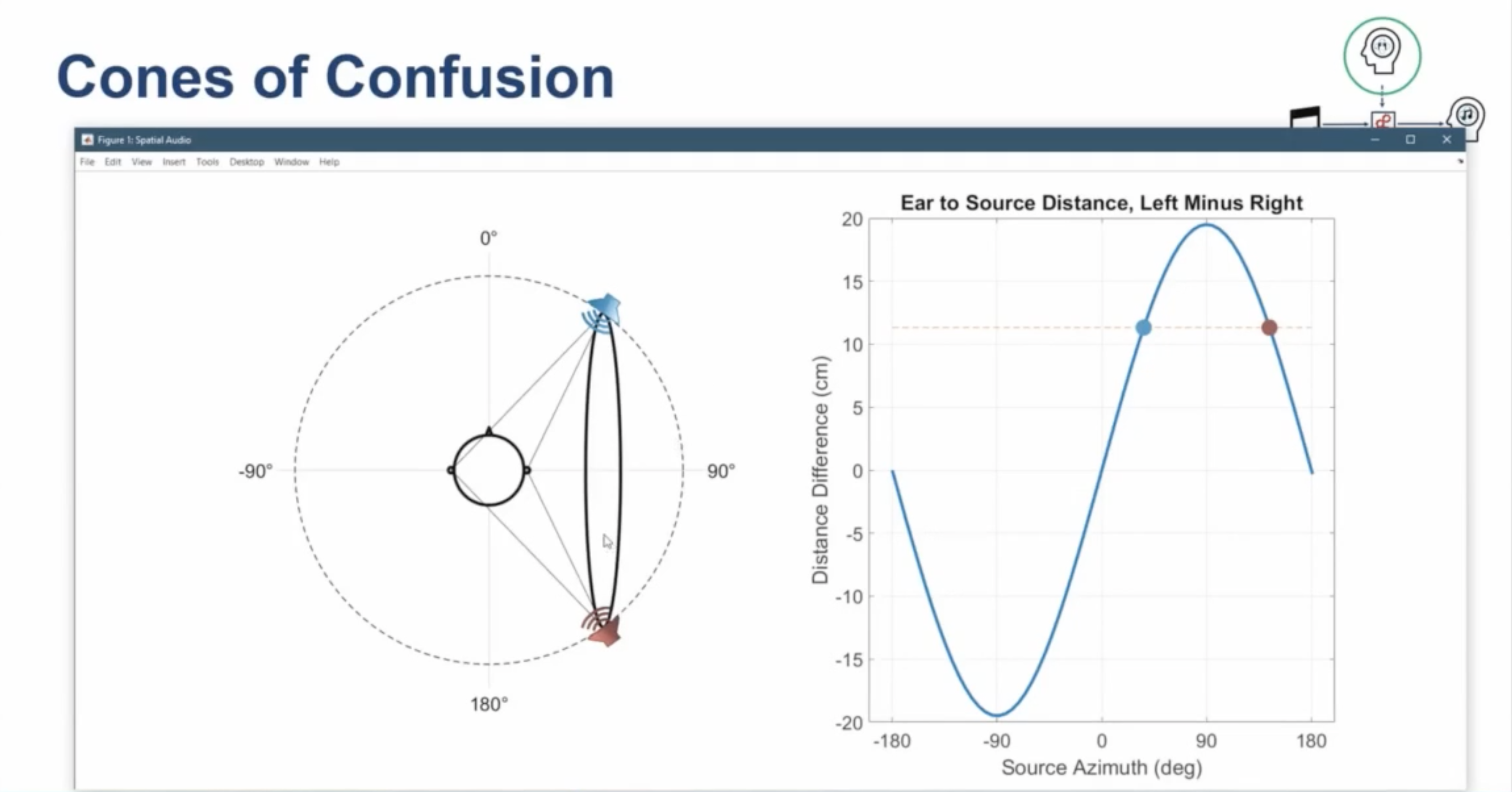

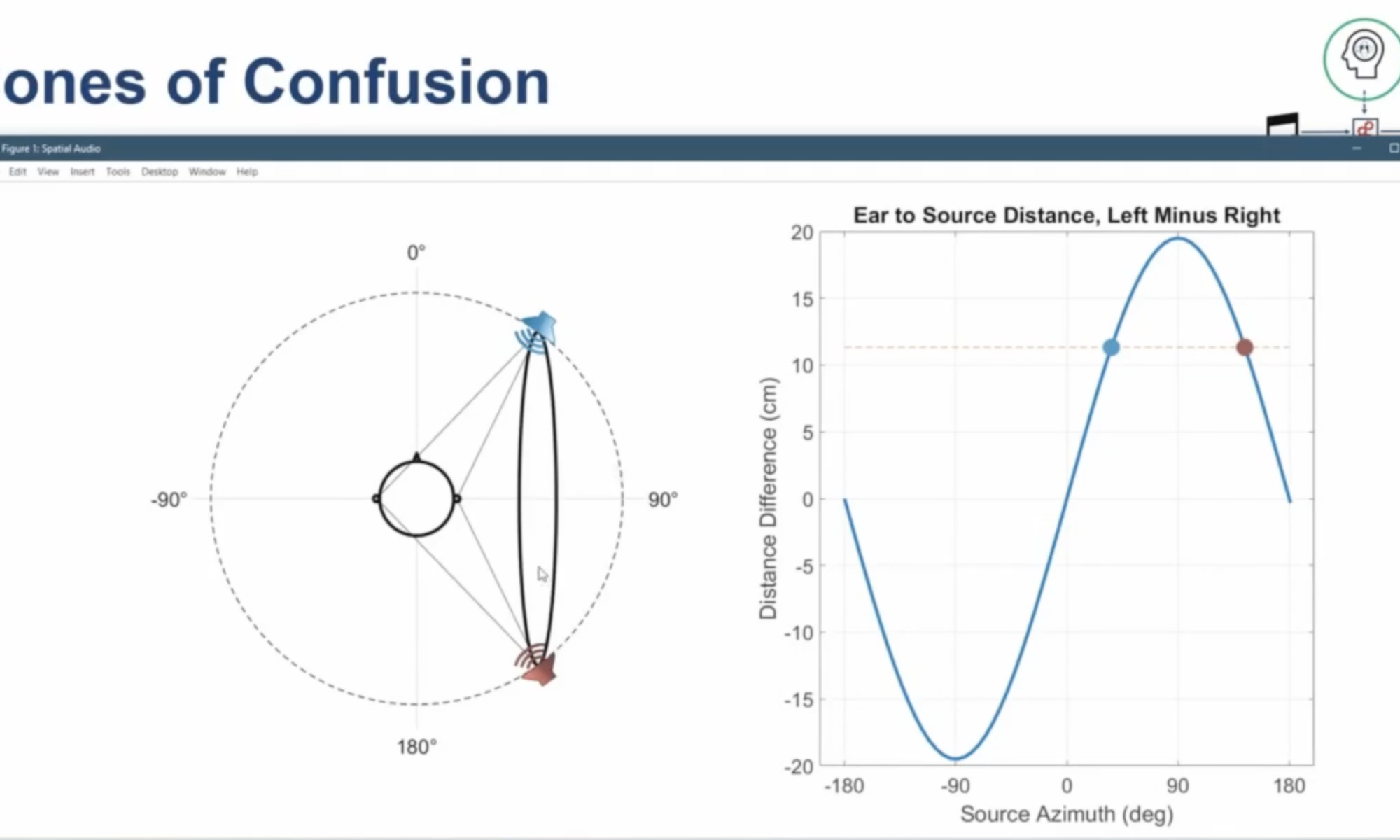

These effects are captured and modeled as Head Related Transfer Functions (HRTFs). Because the primary and secondary cues change as the head moves, head tracking becomes essential for delivering a realistic spatial audio experience. Without head tracking, the sound sources move with the head, so the spatial audio cues remain unchanged as the listener turns. With head tracking, the sound sources are held stationary in the digital world. This recreates the real-world situation and improves the effectiveness of the rendered spatial audio experience. Head tracking also helps with disambiguating the location of a sound source when multiple locations can produce the same primary spatial audio cues. This ambiguity is referred to as the cones of confusion effect.
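The effect of head tracking on the cones of confusion can be illustrated with a small sketch. It assumes a deliberately simplified cue that is proportional to the sine of the source azimuth, so a front source and its mirrored rear source look identical until the head turns. The function names and the sine model are illustrative assumptions, not the method presented in the webinar.

```python
import math


def wrap_degrees(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def relative_azimuth(source_world_deg, head_yaw_deg):
    """Azimuth of a world-fixed source as seen from the rotated head."""
    return wrap_degrees(source_world_deg - head_yaw_deg)


def lateral_cue(relative_deg):
    """Simplified ITD-like cue (assumption): proportional to sin(azimuth)."""
    return math.sin(math.radians(relative_deg))


if __name__ == "__main__":
    front_source, rear_source = 30.0, 150.0  # mirror images about the ear axis
    for yaw in (0.0, 10.0):
        front = lateral_cue(relative_azimuth(front_source, yaw))
        rear = lateral_cue(relative_azimuth(rear_source, yaw))
        print(f"head yaw {yaw:4.1f} deg: front cue {front:.3f}, rear cue {rear:.3f}")
```

With the head still, both sources yield the same cue. After a small turn toward them, the cue from the front source shrinks while the cue from the rear source grows, and that opposite change is what lets a tracked system (and the listener) tell the two apart.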

Technical Challenges to Implementing an Effective Spatial Audio System

Two latencies get in the way of delivering an effective spatial audio experience. The first is the audio output latency, the time it takes for the audio playback to be sent to the headphones. For pre-recorded music, audio output latency doesn’t matter. For movies and games, a large audio output latency can lead to lip-sync issues. The second is the head tracking latency, the time that passes between a head movement and the moment the audio changes to reflect it.

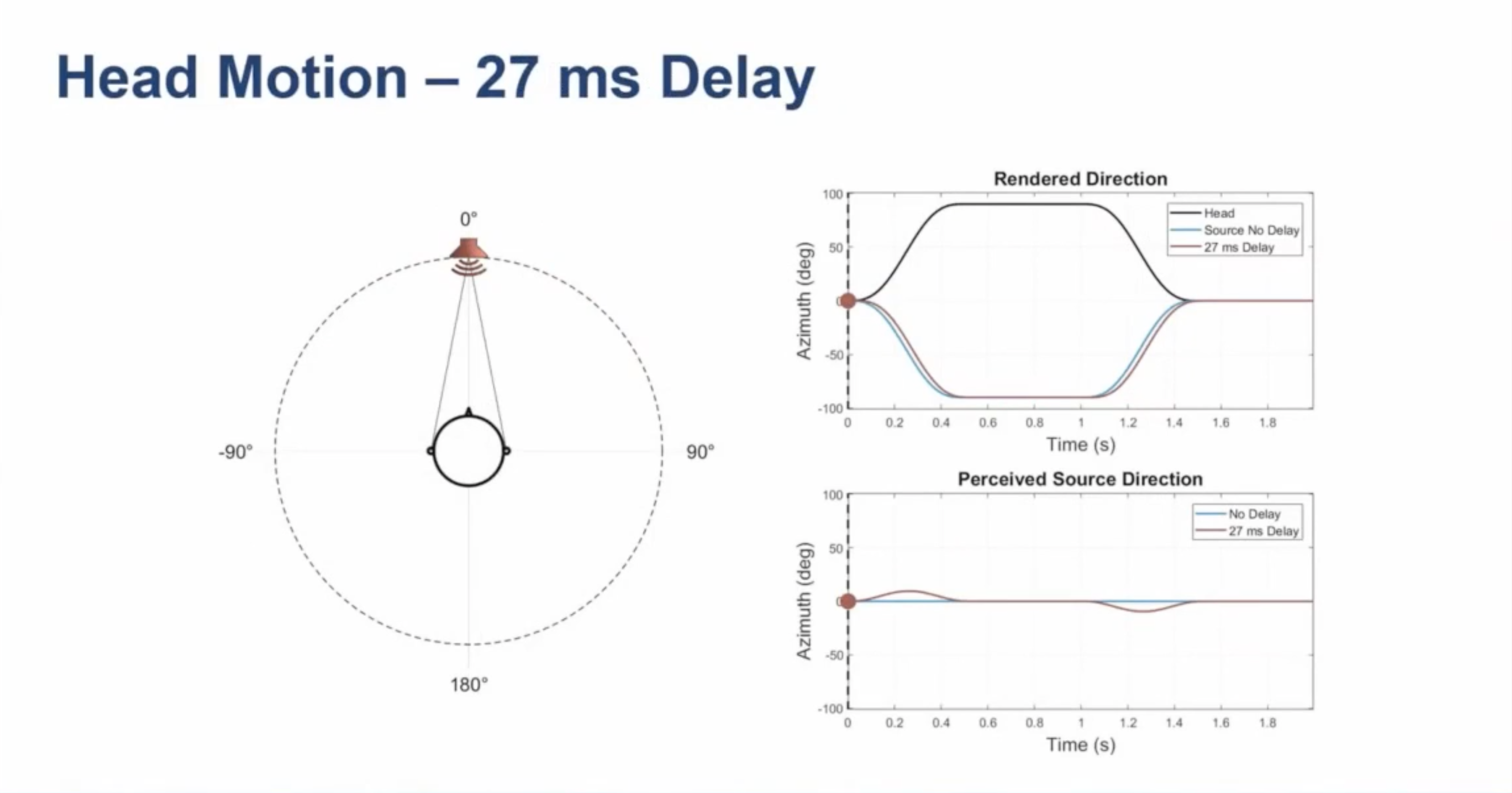

When head tracking is not processed locally on the headphone device itself, a large latency can be introduced. For example, according to the presenters, Apple AirPods Pro head tracking latency is more than 200 ms because sensor information is transferred to the phone for processing. During those 200 ms, the head motion has not yet been applied to correct the perceived source direction. This makes it hard to localize the source and can lead to over 60 degrees of error in the perceived direction. The result is an erratic spatial audio experience, particularly during large or frequent head movements.

Addressing the Technical Challenges For a Robust, Complete Solution

A better spatial audio experience can be delivered with a low head tracking latency. Low latencies can be achieved with local audio processing on the headphones. This approach eliminates wireless transmissions in the head tracking processing path. CEVA’s reference design delivers less than 27 ms of head tracking latency through the effective use of the CEVA-X2 DSP for local spatial processing.

With a 27 ms latency, an error of less than ten degrees in the perceived source direction is achievable. A low latency also helps with disambiguating the location of the sound source with respect to the cones of confusion discussed earlier.
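The relationship between the two latency figures and the quoted direction errors can be sketched with simple arithmetic: while the tracker lags, the rendered source is off by roughly the head's angular speed multiplied by the latency. The 300 degrees per second head speed below is an assumption chosen because it reproduces the 60 degree figure at 200 ms; the webinar's actual test conditions are not stated in this article.

```python
ASSUMED_HEAD_SPEED_DEG_PER_S = 300.0  # assumption: a fast head turn, not a webinar figure


def lag_error_degrees(head_speed_deg_per_s, latency_ms):
    """Angular error accumulated while head tracking lags behind the head."""
    return head_speed_deg_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    for latency_ms in (200.0, 27.0):
        error = lag_error_degrees(ASSUMED_HEAD_SPEED_DEG_PER_S, latency_ms)
        print(f"{latency_ms:5.1f} ms latency -> about {error:.1f} degrees of error")
```

Under that assumption, 200 ms of latency corresponds to about 60 degrees of error and 27 ms to about 8 degrees, consistent with the less-than-ten-degree figure.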

The figure below shows the inertial measurement unit (IMU) sensors useful for head tracking and the reasons each one is useful.

![IMU sensors useful for head tracking and the corresponding reasons]()

Complete Solution from CEVA and VisiSonics

A spatial audio system takes in the audio input, the head tracking input, and the head related transfer function (HRTF), and processes them into the spatial audio output.

CEVA’s MotionEngine® software enables high accuracy head tracking. The CEVA-X2 DSP core enables low latency head tracking. The head tracking sensing itself is enabled by CEVA’s IMU sensors, sensor fusion software and algorithms, and activity detectors.
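As a rough illustration of how those three inputs combine, the sketch below picks a pair of head related impulse responses (the time-domain form of an HRTF) based on the source direction relative to the tracked head, then convolves the mono input with each. The impulse responses in `TOY_HRIRS` are made-up toy values, not measured data, and every name here is hypothetical. It is not CEVA's or VisiSonics' implementation.

```python
def wrap_degrees(angle_deg):
    """Wrap an angle to the range [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def convolve(signal, kernel):
    """Direct-form convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out


# Toy impulse responses keyed by azimuth in degrees: (left ear, right ear).
# Made-up values: the far ear simply hears a delayed, quieter copy.
TOY_HRIRS = {
    0.0: ([1.0], [1.0]),
    90.0: ([0.0, 0.0, 0.5], [1.0]),    # source to the right
    -90.0: ([1.0], [0.0, 0.0, 0.5]),   # source to the left
    180.0: ([0.8], [0.8]),
}


def render_binaural(mono, source_world_deg, head_yaw_deg, hrirs=TOY_HRIRS):
    """Return (left, right) sample lists for a world-fixed source."""
    relative = wrap_degrees(source_world_deg - head_yaw_deg)
    # Pick the table entry closest in angle to the relative direction.
    nearest = min(hrirs, key=lambda az: abs(wrap_degrees(az - relative)))
    left_ir, right_ir = hrirs[nearest]
    return convolve(mono, left_ir), convolve(mono, right_ir)


if __name__ == "__main__":
    click = [1.0, 0.0]
    print(render_binaural(click, source_world_deg=90.0, head_yaw_deg=0.0))
    print(render_binaural(click, source_world_deg=90.0, head_yaw_deg=90.0))
```

A production renderer would interpolate between measured, ideally personalized, HRTFs and process audio in blocks, but the data flow is the same: head orientation selects the filter, and the filter shapes the audio for each ear.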

VisiSonics’ RealSpace® 3D Spatial Audio technology easily integrates into headphones for personalizing HRTFs for mobile devices and VR/AR/XR applications.

For all the details from the webinar, you can listen to it in its entirety. If you are looking to add spatial audio capabilities to your audio electronics products, you may want to have deeper discussions with CEVA and VisiSonics.
