AI accelerators, as engines for object or speech recognition (among many possibilities), are becoming increasingly popular for inference in mobile and power-constrained applications. Today much of this inference runs in software on CPUs or GPUs, thanks to the sheer size of the smartphone market, but that will shift as IoT volumes quickly overtake these familiar devices. IoT applications are generally very cost- and power-sensitive, yet they also demand higher performance from the inference engine to recognize more objects or phrases in real time, so that they can deliver a competitive user experience.

Cost, power and performance are generally critical to differentiation for these devices, and standard hardware platforms can’t rise to competitive expectations; this is driving the popularity of custom AI accelerators. However, there is no standard architecture for these engines. Certainly there’s a general approach, convolutional neural nets (CNNs) or similar networks, but implementation details can vary widely: in the numbers and types of layers, window sizes, word sizes within layers and even in temporal versus spatial architectures.

So how does a system architect go about building differentiation into her CNN engine when she’s not really a hardware expert? One obvious choice is to start the design in an FPGA, at least for prototyping. This defers ASIC complexities to a later stage, but RTL-based design for the FPGA can still be a huge challenge. A much more system-friendly starting point is C++.

Suppose, for example, you want to build a spatial accelerator: a grid of processing elements (PEs) which can process sliding windows on an image in parallel (this approach is getting a lot of press; see for example Wave Computing). You’ll first want to define your base PE design, then interconnect these in a grid structure. The PE needs to read in image data and weights, then compute partial sums. In addition, depending on how you choose to implement communication through the grid, you may forward weight and image data through the PE or perhaps around the PE. Next you’ll array and interconnect these elements to build up your grid.

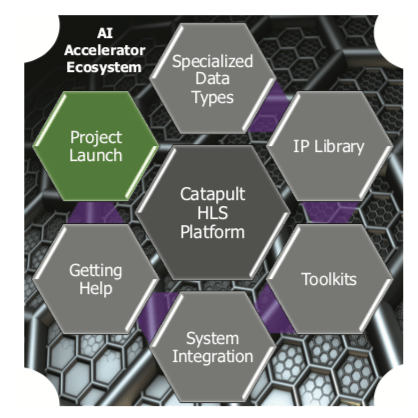

All of this can be expressed in a C++ description of the grid, with instances of classes for the various components. There are some limitations in coding to ensure this can be mapped to hardware; for example, word widths are going to have to map to real hardware, and you’ll want to experiment with these widths to optimize your design. This is where the Catapult ecosystem helps out.
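To make the PE-and-grid idea concrete, here is a minimal sketch in plain C++. The names `PE`, `PEGrid`, `Tile` and `window_at` are hypothetical, invented for illustration, and the 16-bit/32-bit word widths are arbitrary assumptions. The nested loops model in software what a spatial grid would do in parallel; nothing here is taken from the Catapult toolkits.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical processing element (PE): stores one weight, multiplies it by
// the incoming pixel and adds the product to the partial sum it receives.
struct PE {
    std::int16_t weight = 0;

    std::int32_t step(std::int16_t pixel, std::int32_t psum_in) const {
        return psum_in + static_cast<std::int32_t>(weight) * pixel;
    }
};

// A K x K tile of 16-bit values (weights or image pixels).
template <std::size_t K>
using Tile = std::array<std::array<std::int16_t, K>, K>;

// Hypothetical K x K grid of PEs that evaluates one convolution window.
template <std::size_t K>
class PEGrid {
public:
    void load_weights(const Tile<K>& weights) {
        for (std::size_t r = 0; r < K; ++r)
            for (std::size_t c = 0; c < K; ++c)
                pes_[r][c].weight = weights[r][c];
    }

    // The partial sum is chained from PE to PE, row by row. In hardware the
    // multiplies could all happen in parallel; this loop is a software model.
    std::int32_t compute(const Tile<K>& window) const {
        std::int32_t psum = 0;
        for (std::size_t r = 0; r < K; ++r)
            for (std::size_t c = 0; c < K; ++c)
                psum = pes_[r][c].step(window[r][c], psum);
        return psum;
    }

private:
    std::array<std::array<PE, K>, K> pes_{};
};

// Applies the grid to the K x K window whose top-left corner is (row, col).
template <std::size_t K, std::size_t H, std::size_t W>
std::int32_t window_at(const PEGrid<K>& grid,
                       const std::array<std::array<std::int16_t, W>, H>& image,
                       std::size_t row, std::size_t col) {
    assert(row + K <= H && col + K <= W);  // window must lie inside the image
    Tile<K> window{};
    for (std::size_t r = 0; r < K; ++r)
        for (std::size_t c = 0; c < K; ++c)
            window[r][c] = image[row + r][col + c];
    return grid.compute(window);
}
```

Calling `window_at` for each valid `(row, col)` position slides the grid across the image. How data is forwarded between PEs (through or around them) is deliberately left out of this sketch.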

You don’t want to start with basic C++ datatypes and functions because these can’t always be optimally mapped; for example, basic C++ doesn’t support words of arbitrary width, and general-purpose packages that do won’t natively connect to hardware. The AI ecosystem instead provides predefined HLS (high-level synthesis) datatypes as C++ classes with overloaded operator functions to map your C++ description to a hardware equivalent, while also allowing you to tune parameters consistent with that mapping.
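As an illustration of what a width-parameterized datatype buys you, the sketch below defines a toy signed integer class whose bit width is a template parameter. `SInt` and `mac3` are hypothetical stand-ins written for this example, not the classes the ecosystem ships; the wrap-on-overflow behavior is an assumption chosen to keep the example short.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for an HLS integer type: a signed value held in W
// bits, with two's-complement wrap-around on overflow. Not a library class.
template <int W>
class SInt {
    static_assert(W >= 2 && W <= 32, "width must be between 2 and 32 bits");

public:
    SInt(std::int64_t v = 0) : value_(wrap(v)) {}

    std::int64_t to_int() const { return value_; }

    // Overloaded operators keep every result within W bits.
    friend SInt operator+(SInt a, SInt b) { return SInt(a.value_ + b.value_); }
    friend SInt operator*(SInt a, SInt b) { return SInt(a.value_ * b.value_); }

private:
    // Keep the low W bits, then sign-extend back to 64 bits.
    static std::int64_t wrap(std::int64_t v) {
        const std::uint64_t mask = (std::uint64_t{1} << W) - 1;
        const std::uint64_t sign = std::uint64_t{1} << (W - 1);
        const std::uint64_t u = static_cast<std::uint64_t>(v) & mask;
        if (u & sign)
            return static_cast<std::int64_t>(u) - (std::int64_t{1} << W);
        return static_cast<std::int64_t>(u);
    }

    std::int64_t value_;
};

// Three-tap multiply-accumulate evaluated entirely at width W, so the same
// source can be re-run at different widths to see where precision is lost.
template <int W>
std::int64_t mac3(const int (&pixels)[3], const int (&weights)[3]) {
    SInt<W> acc(0);
    for (int i = 0; i < 3; ++i)
        acc = acc + SInt<W>(pixels[i]) * SInt<W>(weights[i]);
    return acc.to_int();
}
```

With pixels `{100, 50, 20}` and weights `{3, 2, 1}`, `mac3<16>` returns the exact result 420, while `mac3<8>` overflows and wraps to -92. Changing one template argument is all it takes to try another width.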

It also provides a math library, including not only the usual math functions for those datatypes but also matrix and linear algebra functions common in neural net computation. Such functions can come in different implementation options, such as fast with some small error or a little slower with higher accuracy. As you’re running your C++ trials you can easily experiment with tradeoffs like this. Functions provided cover the usual list for neural nets, including piecewise-linear (PWL) functions for absolute value, log, square root and trig; activation functions for tanh, sigmoid and leaky ReLU; and linear algebra functions like matrix multiply and Cholesky decomposition. A lot of these functions also have MATLAB reference models, which you will probably find useful during your architectural analysis.
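The speed-versus-accuracy tradeoff can be explored with a few lines of C++. The sketch below is an assumption-laden illustration, not the library's implementation: it approximates a sigmoid with `N` equal-width linear segments over [-8, 8] and measures the worst-case error against `std::exp`. The function names, the range and the segment counts are all hypothetical choices.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>

// Reference sigmoid using the standard library exponential.
double sigmoid_exact(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Piecewise-linear sigmoid with N equal segments over [-8, 8], saturating to
// 0 or 1 outside that range. Hardware would read the segment endpoints from a
// small table; they are recomputed here only to keep the sketch short.
template <std::size_t N>
double sigmoid_pwl(double x) {
    constexpr double lo = -8.0;
    constexpr double hi = 8.0;
    if (x <= lo) return 0.0;
    if (x >= hi) return 1.0;
    const double step = (hi - lo) / N;
    std::size_t i = static_cast<std::size_t>((x - lo) / step);
    if (i >= N) i = N - 1;  // guard against rounding at the upper edge
    const double x0 = lo + i * step;
    const double y0 = sigmoid_exact(x0);
    const double y1 = sigmoid_exact(x0 + step);
    return y0 + (y1 - y0) * (x - x0) / step;  // linear interpolation
}

// Largest absolute error of the N-segment version, sampled on [-10, 10].
template <std::size_t N>
double max_abs_error() {
    double worst = 0.0;
    for (int k = -1000; k <= 1000; ++k) {
        const double x = k / 100.0;
        worst = std::max(worst,
                         std::fabs(sigmoid_pwl<N>(x) - sigmoid_exact(x)));
    }
    return worst;
}
```

Comparing `max_abs_error<16>()` with `max_abs_error<64>()` shows the cost of the cheaper option: fewer segments mean a smaller table but a larger worst-case error.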

You also get a parameterized DSP library for functions like filters and Fourier transforms, and an image processing library, configurable for common pixel formats and providing functions you are likely to need, like color conversion, image scaling and windowing classes for 2D convolution.
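A windowing class for 2D convolution typically turns a raster-order pixel stream into a sequence of K x K windows using line buffers. The sketch below shows one way that could look; `SlidingWindow` and `window_sums` are hypothetical names, and the design (K-1 line buffers plus a shifting window register, no border padding) is an assumption rather than a description of the library's classes.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical streaming window: accepts pixels in raster order and keeps the
// most recent K x K neighborhood, using K-1 line buffers of image width.
template <std::size_t K>
class SlidingWindow {
    static_assert(K >= 2, "window must be at least 2 x 2");

public:
    explicit SlidingWindow(std::size_t width)
        : width_(width), lines_(K - 1, std::vector<int>(width, 0)) {
        assert(width >= K);
    }

    // Pushes one pixel. Returns true when the window lies fully inside the
    // image, i.e. at least K rows and K columns of this row have been seen.
    bool push(int pixel) {
        // Gather the column at x_: K-1 older rows from the line buffers,
        // oldest first, plus the new pixel at the bottom.
        std::array<int, K> column{};
        for (std::size_t r = 0; r + 1 < K; ++r) column[r] = lines_[r][x_];
        column[K - 1] = pixel;

        // Age the line buffers at this column and store the new pixel.
        for (std::size_t r = 0; r + 2 < K; ++r) lines_[r][x_] = lines_[r + 1][x_];
        lines_[K - 2][x_] = pixel;

        // Shift the window left by one and insert the column on the right.
        for (std::size_t r = 0; r < K; ++r) {
            for (std::size_t c = 0; c + 1 < K; ++c) win_[r][c] = win_[r][c + 1];
            win_[r][K - 1] = column[r];
        }

        const bool valid = (y_ >= K - 1) && (x_ >= K - 1);
        if (++x_ == width_) { x_ = 0; ++y_; }
        return valid;
    }

    int at(std::size_t r, std::size_t c) const { return win_[r][c]; }

private:
    std::size_t width_;
    std::size_t x_ = 0;
    std::size_t y_ = 0;
    std::vector<std::vector<int>> lines_;
    std::array<std::array<int, K>, K> win_{};
};

// Example consumer: sums every valid K x K window of a row-major image.
template <std::size_t K>
std::vector<int> window_sums(const std::vector<int>& image, std::size_t width) {
    SlidingWindow<K> window(width);
    std::vector<int> sums;
    for (int pixel : image) {
        if (!window.push(pixel)) continue;
        int sum = 0;
        for (std::size_t r = 0; r < K; ++r)
            for (std::size_t c = 0; c < K; ++c) sum += window.at(r, c);
        sums.push_back(sum);
    }
    return sums;
}
```

Replacing the plain sum in `window_sums` with a weighted multiply-accumulate gives a 2D convolution. The line buffers mean each pixel is read from the input stream only once.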

So you’ve got pretty much everything you need to take you from an input image (or speech segment) through windowing and all the CNN functions you’re going to need, through to identification. It’s all in C++, alongside which you can do initial tuning in MATLAB. You can experiment with and verify functionality and performance at this level (way faster than simulation at RTL) and, when you’re happy, you can synthesize directly into an RTL implementation where you can characterize power and area.

Since your accelerator will sit in a larger system (an FPGA or an SoC), you need to connect with that system through standard interfaces like AXI. Catapult HLS takes care of you here through interface synthesis. Ultimately, at least for your prototype, you can then map your design to that FPGA implementation so you can check performance and accuracy at real-time speeds.

To round this out, the ecosystem provides a number of predefined toolkits/reference designs: for pixel-pipe video processing, for 2D convolution based on the spatial accelerator grid structure I mentioned earlier, and for tinyYOLO object classification. No need to build these from scratch; you can start with the toolkits and tweak to get to the architecture you want.

This is a pretty complete design solution to help bridge the gap between the needs of AI system design experts and the hardware implementation team. You should check it out HERE.
