I attend a lot of events on machine learning and write about the topic regularly. Recently, however, I learned something exciting and new about machine learning in a very surprising place. For the last few years I have attended the annual HSPICE SIG dinner hosted by Synopsys in Santa Clara. The event starts with a vendor fair featuring companies that offer useful interfaces and integrations with HSPICE. After a mixer in the vendor fair area, there is a dinner and several talks pertinent to the HSPICE user community.

Usually there are interesting talks by Synopsys customers on how they used HSPICE features in their flow to solve a vexing design problem. This time, however, Synopsys threw us a curve ball. The main thrust of this year's talks was the application of machine learning in EDA, specifically to HSPICE-related issues. Naturally, EDA is not the first thing one thinks of when machine learning is mentioned. Yet machine learning is increasingly being applied to difficult problems in electronics design.

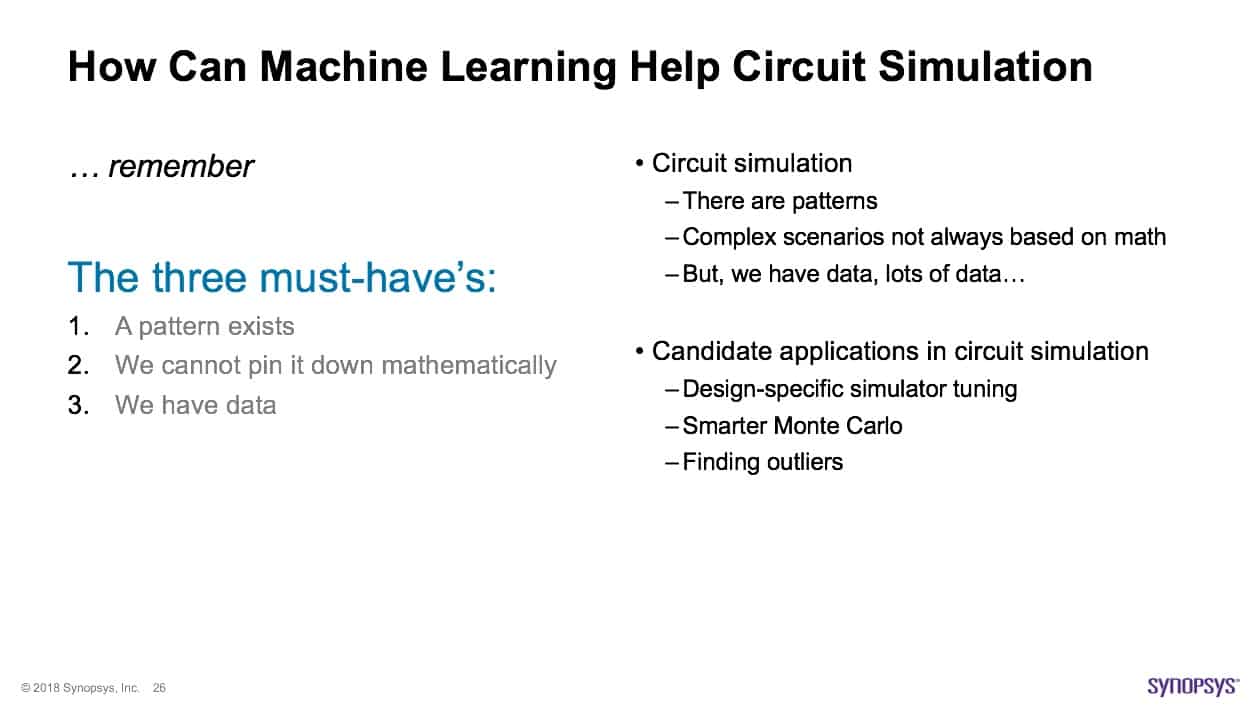

It’s important to point out that machine learning is as good at numerical problems as it is at visual problems. During the panel portion of the dinner, Synopsys Scientist Dr. Mayukh Bhattacharya clearly laid out the ideal scenario for applying machine learning to a numerical problem. He cited a very broad definition of machine learning: “using a set of observations to uncover an underlying process”. There are three must-haves: a pattern exists, we cannot pin it down mathematically, and we have data. These three conditions are met in many SPICE-related design problems.

A good example was the talk that evening by Chintan Shah, a VLSI engineer at Nvidia, where he has been working on clock methodology and timing. It’s interesting that Nvidia, a leader in machine learning technology, is applying machine learning to its own design methodology. In particular, Chintan used machine learning to predict Joule self-heating of clock cells in their designs. Self-heating has become a much bigger issue with the advent of FinFET devices: FinFET self-heating can lead to device failure, and it can also contribute to electromigration issues.

Chintan’s problem is that a huge number of clock cells need to be simulated based on their parameters and their specific instantiation in the design. For a small number of such cells, direct simulation is an adequate solution, but at this scale simulating every cell becomes impractical. Chintan reasoned that machine learning could help determine which cells are at risk and need detailed simulation. Nvidia has plenty of historical data that can be used to train and validate a deep learning model. They chose deep learning because single-layer neural networks do not handle nonlinear systems well.
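The talk did not describe Nvidia’s model or features, but the screening idea itself can be sketched. Below is a minimal sketch, assuming a trained model is already available as a plain `predict_delta_t` function that returns a predicted temperature rise per cell. The `ClockCell` fields, the `limit_c` threshold, the `relative_margin` safety factor, and the `toy_model` stand-in are all hypothetical illustrations, not Nvidia’s method.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple


@dataclass
class ClockCell:
    # Hypothetical per-instance features; the real feature set was not described.
    name: str
    drive_strength: float
    load_cap_ff: float
    toggle_rate_ghz: float


def screen_cells(
    cells: Iterable[ClockCell],
    predict_delta_t: Callable[[ClockCell], float],
    limit_c: float,
    relative_margin: float,
) -> Tuple[List[ClockCell], List[ClockCell]]:
    """Split cells into (needs_simulation, cleared) using a surrogate model.

    A cell goes to detailed simulation if its predicted temperature rise,
    inflated by a safety margin meant to cover model error, reaches the limit.
    Everything else is cleared without simulation.
    """
    needs_sim: List[ClockCell] = []
    cleared: List[ClockCell] = []
    for cell in cells:
        predicted = predict_delta_t(cell)
        if predicted * (1.0 + relative_margin) >= limit_c:
            needs_sim.append(cell)
        else:
            cleared.append(cell)
    return needs_sim, cleared


def toy_model(cell: ClockCell) -> float:
    # Hypothetical stand-in for a trained deep learning model; coefficients are made up.
    return 0.02 * cell.load_cap_ff * cell.toggle_rate_ghz / cell.drive_strength


cells = [
    ClockCell("ck_a", drive_strength=4.0, load_cap_ff=20.0, toggle_rate_ghz=2.0),
    ClockCell("ck_b", drive_strength=1.0, load_cap_ff=150.0, toggle_rate_ghz=3.0),
]
needs_sim, cleared = screen_cells(cells, toy_model, limit_c=5.0, relative_margin=0.10)
print("simulate:", [c.name for c in needs_sim])
print("cleared:", [c.name for c in cleared])
```

Here the weakly loaded `ck_a` is cleared and the heavily loaded `ck_b` is flagged for simulation. The margin is a design choice: an average model error, such as the 6.5% figure quoted in the talk, does not bound the error on any individual cell, so in practice the margin would have to be validated against held-out simulation data.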

The resulting model had an average error of around 6.5% and a mean squared error of 0.05. This enabled them to clear 99% of the cells without any simulation, leaving the remaining 1% as a tractable simulation problem. Because only one cell in a hundred still needs simulation, this represents a 100X runtime improvement.
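The arithmetic behind these figures can be made explicit. This sketch assumes “average error” means mean absolute percentage error (the write-up does not state the exact definition), and it assumes every detailed simulation costs the same. The `inference_cost_ratio` parameter is a hypothetical knob expressing model inference time as a fraction of one simulation; the 100X figure corresponds to treating that cost as negligible.

```python
from typing import Sequence


def mean_abs_pct_error(pred: Sequence[float], actual: Sequence[float]) -> float:
    """Average of |pred - actual| / |actual|, expressed as a percentage."""
    return 100.0 * sum(abs(p - a) / abs(a) for p, a in zip(pred, actual)) / len(actual)


def mean_squared_error(pred: Sequence[float], actual: Sequence[float]) -> float:
    """Average of the squared differences between predictions and reference values."""
    return sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(actual)


def screening_speedup(
    total_cells: int, simulated_cells: int, inference_cost_ratio: float = 0.0
) -> float:
    """Runtime of simulating every cell divided by runtime of screen-then-simulate.

    Assumes each simulation costs 1 unit and each model inference costs
    inference_cost_ratio units (0.0 means inference time is negligible).
    """
    baseline = float(total_cells)
    screened = simulated_cells + total_cells * inference_cost_ratio
    return baseline / screened


# 1% of one million cells still simulated, inference treated as free.
print(screening_speedup(1_000_000, 10_000))
# Same split, but each inference costs a thousandth of a simulation.
print(screening_speedup(1_000_000, 10_000, inference_cost_ratio=0.001))
```

The first call gives exactly 100.0, matching the reported 100X. With inference costing a thousandth of a simulation, the estimate drops to about 91X, which shows why the speedup claim depends on model inference being far cheaper than a SPICE run.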

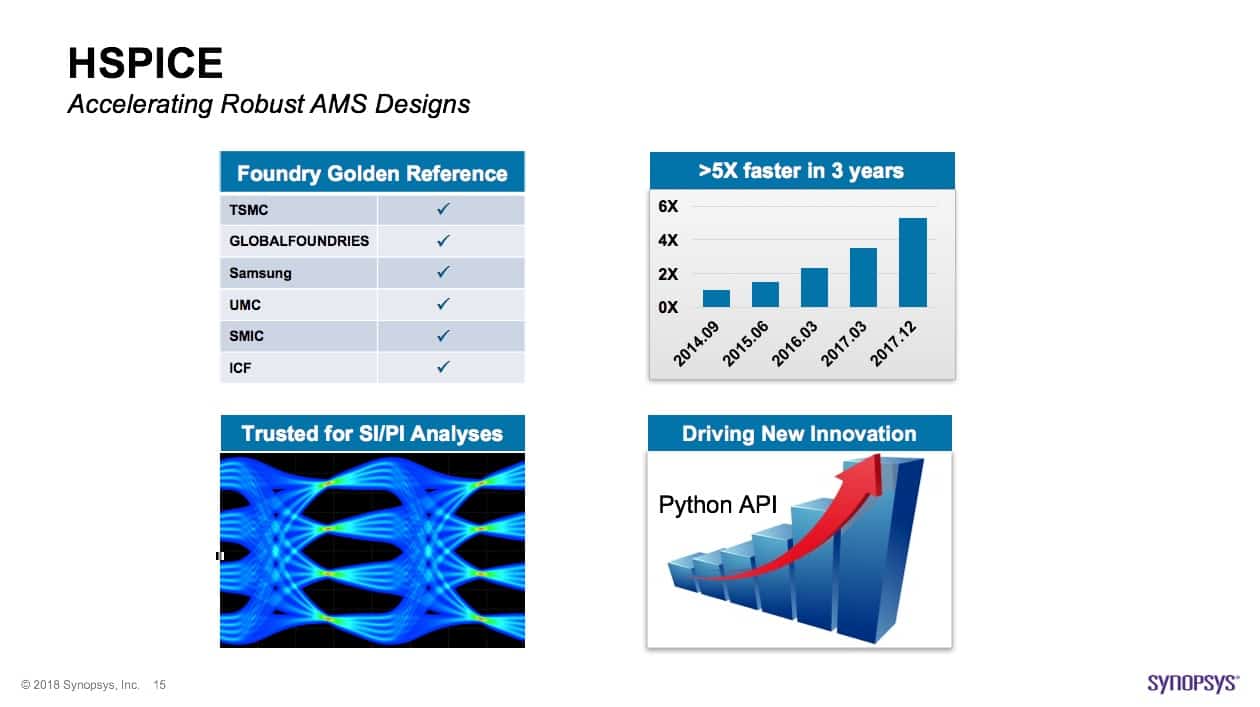

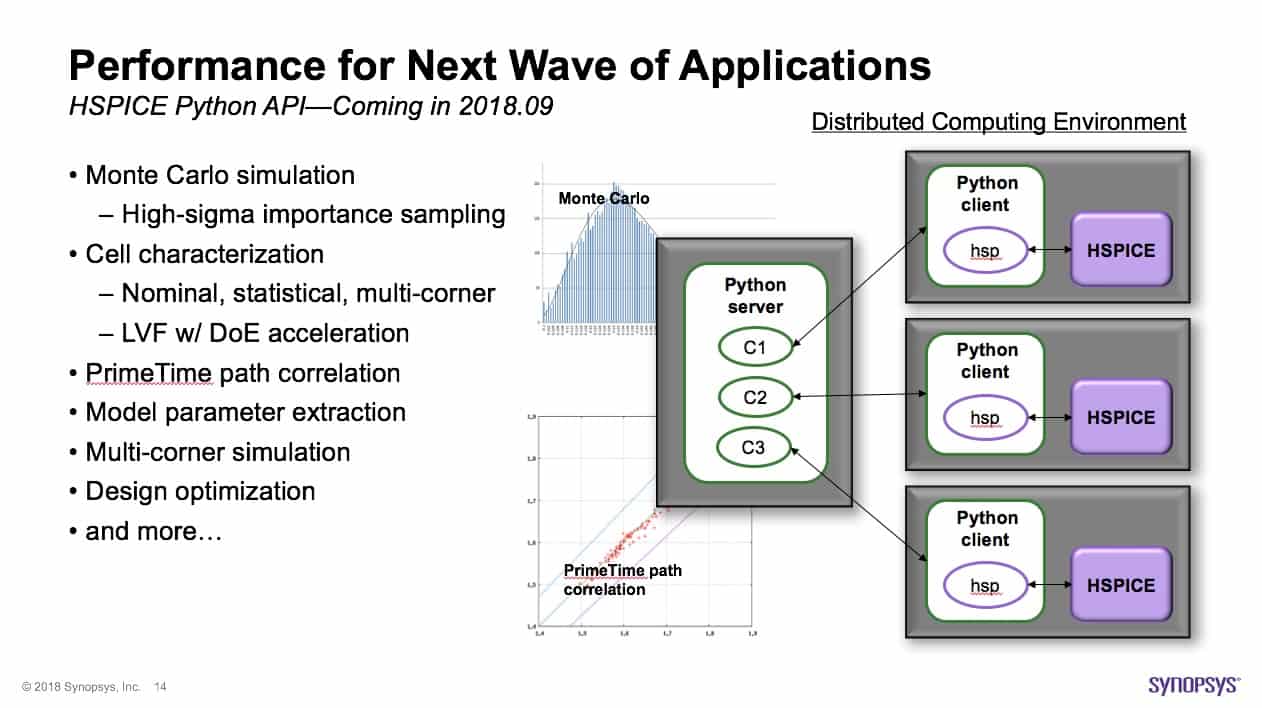

Of course, as is traditional, the evening began with a talk on HSPICE by Synopsys’ Dr. Scott Wedge. He highlighted the latest developments and the relentless progress on HSPICE performance. He aptly pointed out that if designs grow at the rate predicted by Moore’s law, design tools need to improve at a similar or better rate. Synopsys has managed to do this with HSPICE while also reducing its memory footprint. One of the most interesting features Scott mentioned is the Python API, which opens the door for customers to conceive and implement advanced applications that use HSPICE as a core engine. This includes support for distributed computing environments.

Jason Ferrell of AMD also gave a talk on AMD’s use of HSPICE and MATLAB for global clock tree closure. By the end of the evening it was clear that EDA will be increasingly affected by the growth of machine learning, and that this will happen in places that initially seem unexpected. Machine learning is something that everyone involved in EDA needs to pay attention to. Synopsys has made a video of the entire dinner session available online, and I’d suggest viewing it to glean much more specific information on all the topics covered.
