There’s always a lot of activity in EDA to innovate and refine specialized algorithms in functional modeling,  implementation, verification and many other aspects of design automation. But when Google, Facebook, Amazon, IBM and Microsoft are pushing AI, deep learning, Big Data and cloud technologies, it can be hard not to see EDA as something of a backwater compared to the more dynamic (and more widely relevant) world of big software. Which makes you wonder if we also could benefit from some of those methods and whether that might also catalyze new directions in EDA, attracting new (and young) talent and ideas to a domain that seems to have been largely indifferent to the software mainstream.

implementation, verification and many other aspects of design automation. But when Google, Facebook, Amazon, IBM and Microsoft are pushing AI, deep learning, Big Data and cloud technologies, it can be hard not to see EDA as something of a backwater compared to the more dynamic (and more widely relevant) world of big software. Which makes you wonder if we also could benefit from some of those methods and whether that might also catalyze new directions in EDA, attracting new (and young) talent and ideas to a domain that seems to have been largely indifferent to the software mainstream.

I now believe this is changing because I see forward-thinkers in EDA working on ways they can leverage hot ideas from mainstream computing. I mentioned in earlier posts Ansys’ big data analytics around power integrity and reliability analysis. In this post I want to talk about some very interesting work Synopsys has been doing around machine learning (ML), particularly in application to enhancing ease of use in formal verification. Manish Pandey (Synopsys) introduced this area in the first tutorial at the Formal Methods for CAD (FMCAD) conference a couple of weeks ago.

I should be clear up-front that the tutorial and this blog are on directions, not products. Don’t bother calling your Synopsys sales rep; there is nothing you can buy, yet, but this isn’t whiteboard stuff either. In my discussion, Manish was understandably cagey about how far they have got but it sounded like they are in active prototyping. So it seems timely to talk about why Manish and Synopsys feel ML is important for formal verification and what it can enable.

Why ML is Interesting for Formal

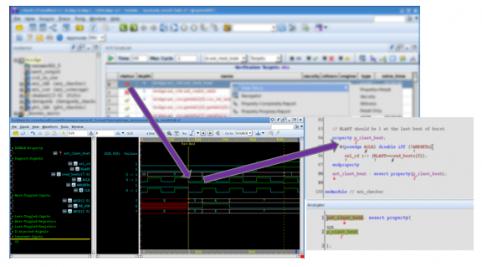

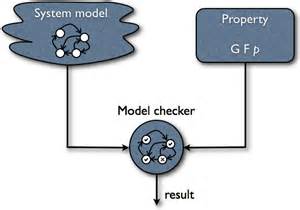

Formal has become a lot more accessible through pre-packaged apps requiring little specialized understanding to use. And a growing group of verification engineers are becoming more comfortable with some level of custom property and constraint definition, enabling more definition of architecture-specific checks without need for a team of formal PhDs. But some of what needs to be checked is getting harder: Cache-coherent interconnects, security management, safety management and power management dynamics are some messily inter-dependent examples. The properties that need to be defined are complex in their own right but even more challenging can be defining constraints which will reasonably bound run-times yet not mask real problems. ML methods could potentially help both in assisting engineers to debug why properties they expect to pass are failing, and in helping them build more reliable constraint sets.

Machine Learning – a quick review

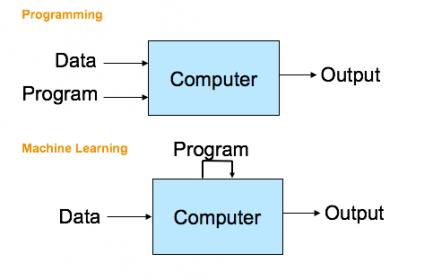

Given the press we see on neural nets/deep learning, you could be forgiven for thinking that all AI is now encompassed in that topic. Actually it is a subset technique in the broader scope of machine learning. All such methods use training data to effectively self-program recognition of complex scenarios in images or other datasets.

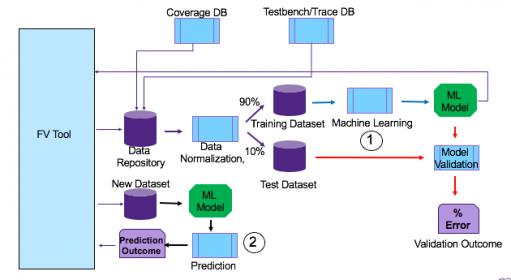

Machine learning architectures also place significant emphasis on methods to access rich and distributed data sources. Which leads naturally to a need for Big Data methods; in verification you might want to harvest training data from many different designs sites and in different formats: simulation, emulation and formal verification. Hadoop is probably the best-known Big Data platform, but it doesn’t seem to be a hot choice in EDA, primarily thanks to specialized needs in access to EDA datasets. One such need is to do very fast iterative analysis on data, not a particular strength for Hadoop. Manish mentioned Apache Spark as an alternative headed in the right direction, but reserved judgment on whether it was the best possible platform for Synopsys needs. Whichever way this goes, the solution will require a world-class SW stack providing in-memory analysis and computation on distributed data.

ML and Formal Applications

So if you could setup a powerful ML system with efficient access to a rich, distributed set of functional analysis data, what kinds of analysis might that enable? One example is in helping do root-cause analysis on a failed assertion. Manish illustrated this through an imagined dialog between a trained ML system and an engineer, where the engineer identifies an unexpected behavior, the ML asks a few clarifying questions, does a root-cause assessment then suggests a change in constraints that would lead to expected behavior (and launches a re-run with the new constraints).

Another promising area is in specification mining – searching though prior verification datasets to find likely reasonable properties and constraints. Like all machine-learning methods, this is a probabilistic exercise – what you find is not guaranteed to be absolutely true in a mathematical sense. But given a sufficiently rich learning dataset, it may be true enough to represent all reasonable use-cases. And what you find as exceptions may be sufficiently revealing to prompt you to add safeguard logic to block those cases, or to add a strongly-worded warning to the documentation, or perhaps to lead you to rethink the property you are checking.

Manish mentioned other areas that he sees as promising though further out, of which I’ll touch on just one here: using ML to aid in theorem-proving. This task is generally required in proving that a design (or subsystem) meets predefined system specifications through a series of proof steps. Since these often span significant amounts of logic (and perhaps time), they must be guided by heuristics to minimize human effort in decomposing the problem into tractable sub-components. Developing these heuristics typically requires a great deal of expertise, but relatively recent work has shown that there is a functional relationship between the conjecture to be proved (plus axioms) and the best heuristics to use for the proof search, which makes ML a very suitable assistant in guiding proofs. That could make ML+formal even more useful in proving things like compliance safety and security specifications.

You can learn more about the Synopsys presentation HERE and more background information on the theorem-proving topic HERE.

Share this post via:

Comments

There are no comments yet.

You must register or log in to view/post comments.