AI-based applications are advancing quickly as neural network (NN) models evolve, pushing performance requirements ever higher. Just a few years ago, NN-driven applications needed 1 TOPS or less. Current and future applications in augmented reality (AR), surveillance, high-end smartphones, ADAS vision/LiDAR/RADAR, high-end gaming and more call for 50 TOPS to 1000+ TOPS. This trend is driving the development of neural processing units (NPUs) to meet the demand.

Pierre Paulin, Director of R&D, Embedded Vision at Synopsys, gave a talk on NPUs at the Linley Spring Conference in April 2022. His presentation was titled “Bigger, Faster and Better AI: Synopsys NPUs” and covered the company's recently announced ARC NPX6 and ARC NPX6FS processors. This post summarizes the salient points from his talk.

Embedded Neural Network Trends

Four factors contribute to the rising performance requirements of artificial intelligence (AI) applications.

- AI research is evolving and new neural network models are emerging. Solutions must be able to handle older models such as AlexNet from 2012 as well as the latest ones such as transformers and recommender graphs.

- With the automotive market being a big adopter of AI, applications need to meet functional safety standards. This market requires mature and stable solutions.

- Applications are leveraging higher-definition sensors, multiple camera arrays and more complex algorithms. This calls for parallel processing of data from multiple types of sensors.

- All of the above place more demands on the SoCs that implement and support AI applications. Hardware and software solutions should enable ever faster time to market.

Synopsys’ New Neural Processing Units (NPUs)

Synopsys recently introduced its new NPX series of NPUs to deliver the performance, flexibility and efficiency demanded by the latest NN trends.

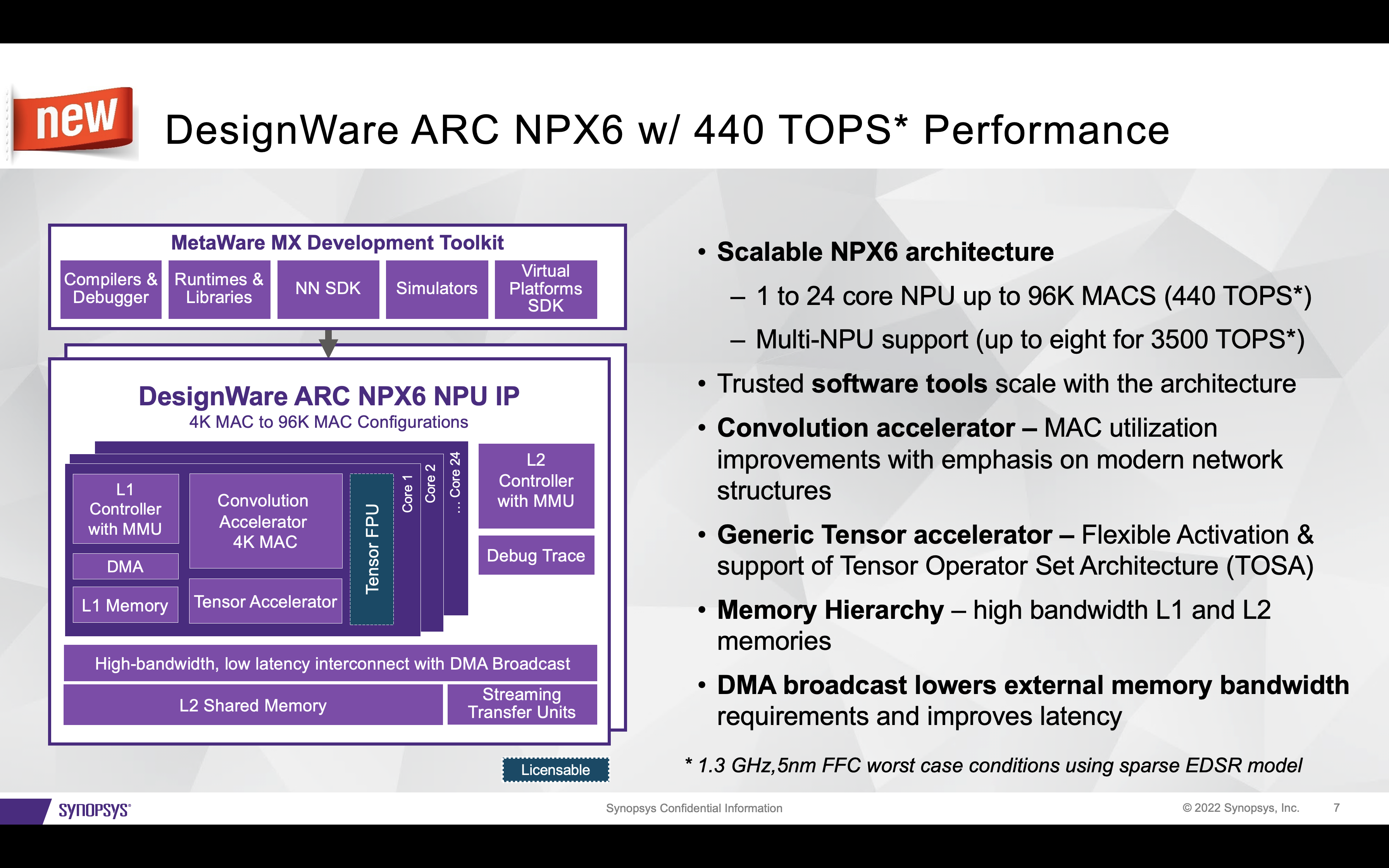

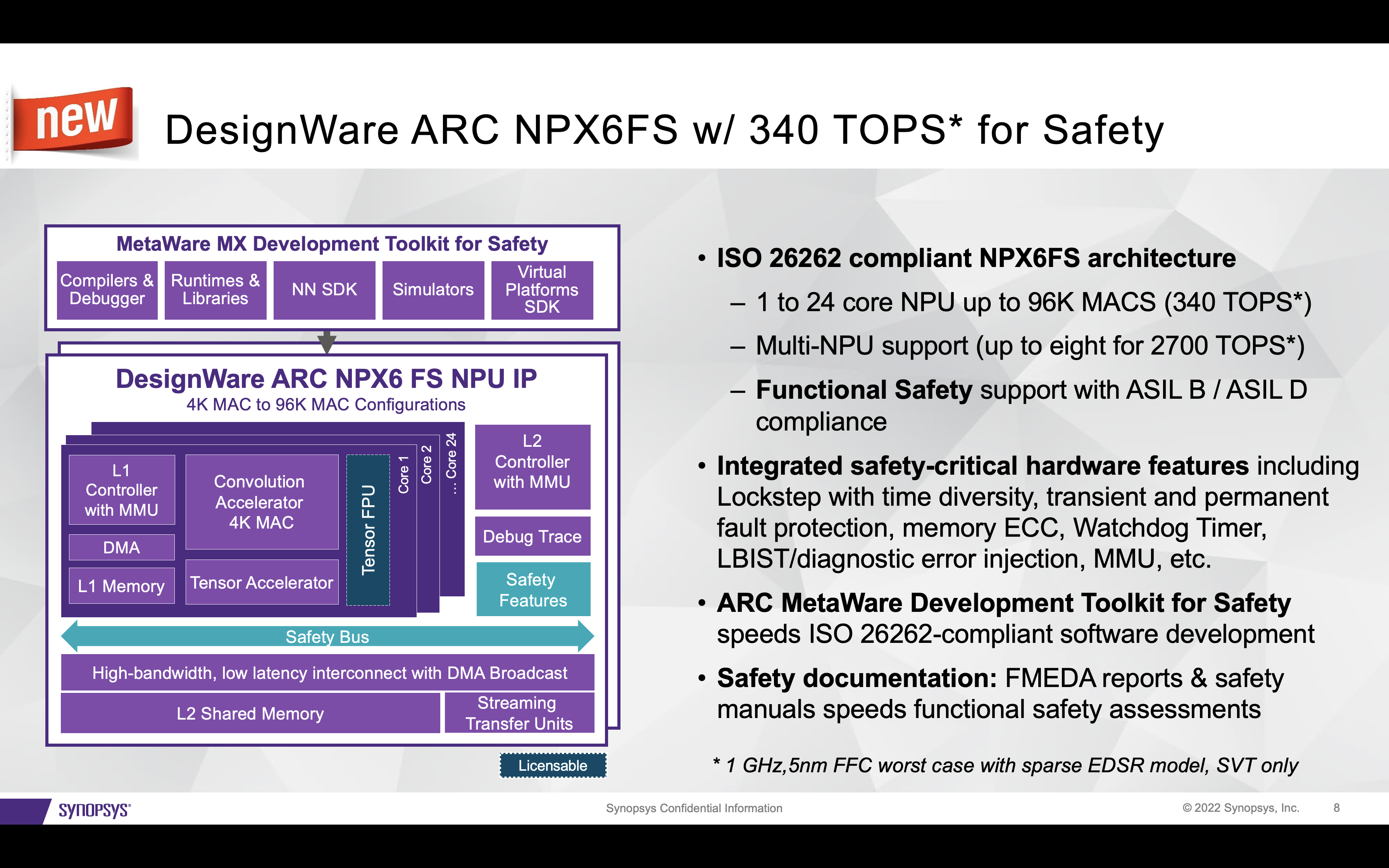

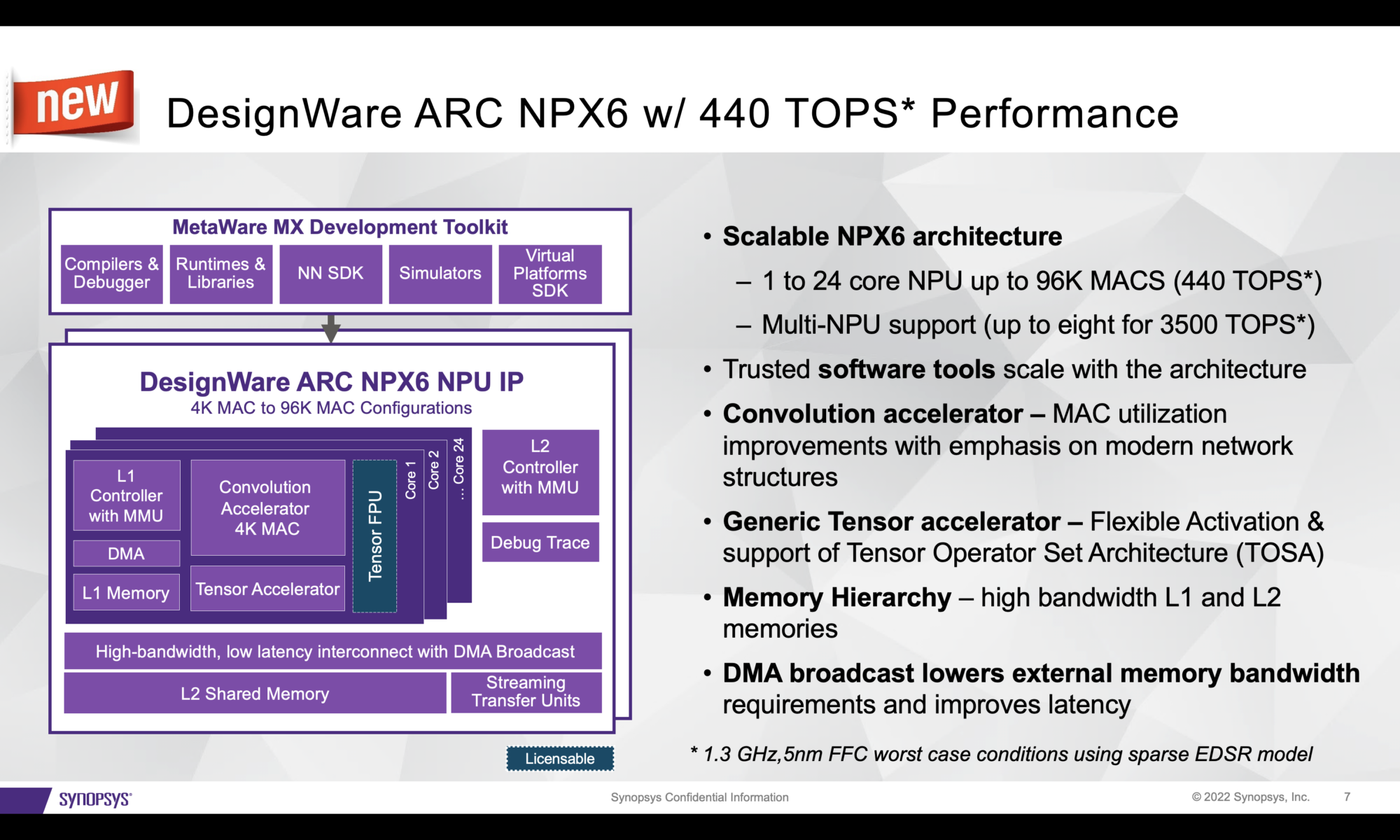

The NPU core of the NPX6 offering is based on a scalable architecture built from 4K-MAC building blocks. A single NPU instance can be built from 1 to 24 NPU cores. A multi-NPU configuration can include up to 8 NPU instances. Synopsys also offers the NPX6FS to support the automotive market. Refer to the figures above for the corresponding block diagrams.
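As a rough back-of-the-envelope illustration of how this scaling translates into peak throughput, the sketch below multiplies out the MAC counts quoted above. The clock frequency is an assumed placeholder (this summary does not state one), the function name `peak_tops` is hypothetical, and counting one MAC as two operations is a common convention rather than something the source confirms.

```python
MACS_PER_CORE = 4096        # "4K MACs" building block, from the article
MAX_CORES_PER_NPU = 24      # from the article
MAX_NPU_INSTANCES = 8       # from the article


def peak_tops(cores, instances=1, clock_ghz=1.0):
    """Theoretical peak throughput in TOPS.

    clock_ghz is an ASSUMED value, not a figure from the talk.
    One MAC is counted as two operations (multiply + accumulate).
    """
    if not 1 <= cores <= MAX_CORES_PER_NPU:
        raise ValueError("cores must be between 1 and 24")
    if not 1 <= instances <= MAX_NPU_INSTANCES:
        raise ValueError("instances must be between 1 and 8")
    total_macs = MACS_PER_CORE * cores * instances
    ops_per_second = total_macs * 2 * clock_ghz * 1e9
    return ops_per_second / 1e12


single_core = peak_tops(1)          # one 4K-MAC core
full_instance = peak_tops(24)       # one fully populated NPU instance
largest_config = peak_tops(24, 8)   # eight fully populated instances
```

With the assumed 1 GHz clock, a single core works out to roughly 8.2 TOPS and a 24-core instance to roughly 197 TOPS. Delivered performance depends on the actual clock and on MAC utilization.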

The key building block within the NPU core is the Convolution Accelerator. Synopsys’ main focus was on MAC utilization when handling the most modern graphs, such as EfficientNet. The NPX6/NPX6FS contain a generic tensor accelerator to handle the non-convolution parts, and they fully support the Tensor Operator Set Architecture (TOSA).

A high-bandwidth, low-latency interconnect is included within the NPU core and is coupled with high-bandwidth L1 and L2 memories. The NPX6 also includes an intelligent broadcast feature, which works as follows. Any time a feature map or coefficient is read from external memory, it is read only once and reused as much as possible within the core. The data is broadcast only when it is used by more than one core.
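The effect of reading once and broadcasting can be illustrated with a toy traffic model. This is only a sketch of the idea as described in the talk, not Synopsys' implementation; the function name and the byte counts are hypothetical.

```python
def external_read_bytes(tensor_bytes, consumer_cores, broadcast=True):
    """Bytes fetched from external memory for one tensor.

    Toy model: with broadcast, the tensor is fetched once and shared on chip;
    without it, every consuming core fetches its own copy.
    """
    if tensor_bytes < 0 or consumer_cores < 1:
        raise ValueError("need a non-negative size and at least one core")
    return tensor_bytes if broadcast else tensor_bytes * consumer_cores


# Hypothetical example: a 2 MiB coefficient block needed by 8 cores.
size = 2 * 1024 * 1024
without_broadcast = external_read_bytes(size, 8, broadcast=False)
with_broadcast = external_read_bytes(size, 8, broadcast=True)
saved = without_broadcast - with_broadcast
```

In this model the saving grows linearly with the number of cores that consume the same data, which is why the feature matters most for large multi-core configurations.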

Of course, the hardware is only half the story. The other half is software, and Synopsys has been working on the entire effort for many years to deliver a solution that is fully automatic. Some of the key features are described below.

Flexibility

With every new NN model comes a new activation function. The NPX6/NPX6FS cores support all activation functions (old, new and ones yet to come) using a programmable lookup table approach.
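A lookup-table approach to activation functions can be sketched generically as follows. The table size, input range, clamping behavior and linear interpolation are all assumptions chosen for illustration; the source does not describe the NPX6 table format, and `build_lut` and `lut_eval` are hypothetical names.

```python
import math


def build_lut(fn, lo, hi, entries):
    """Sample fn at evenly spaced points across [lo, hi]."""
    step = (hi - lo) / (entries - 1)
    return [fn(lo + i * step) for i in range(entries)]


def lut_eval(table, lo, hi, x):
    """Approximate fn(x) by linear interpolation between table entries.

    Inputs outside [lo, hi] are clamped to the range, a simplification
    that is only accurate for functions that are flat outside it.
    """
    x = min(max(x, lo), hi)
    pos = (x - lo) / (hi - lo) * (len(table) - 1)
    i = min(int(pos), len(table) - 2)   # index of the left-hand entry
    frac = pos - i
    return table[i] + frac * (table[i + 1] - table[i])


def swish(x):
    """Example activation: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


# Reprogramming the table is all it takes to switch activation functions.
swish_table = build_lut(swish, -8.0, 8.0, 257)
tanh_table = build_lut(math.tanh, -4.0, 4.0, 257)
approx = lut_eval(swish_table, -8.0, 8.0, 0.3)
```

The appeal of this design is that supporting a new activation function means loading new table contents rather than changing hardware.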

Enhanced Datatype Support

Though the industry is moving toward 8-bit datatype support, there are still cases where a mix of datatypes is appropriate. Synopsys provides a tool that automatically explores hybrid configurations in which a couple of layers run in 16-bit and all other layers run in 8-bit. The NPX6 supports FP16 and BF16 (as options) with very low overhead. Customers are taking this option to move quickly from a GPU-oriented, power-hungry solution to an embedded, low-power, small-form-factor solution.
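One simple way to picture such an exploration is to rank layers by how much accuracy they lose at 8-bit and keep only the most sensitive few at 16-bit. The sketch below is a hypothetical heuristic for illustration only; the source does not describe how the Synopsys tool actually searches, and the layer names and sensitivity scores are made up.

```python
def assign_precision(sensitivity, max_16bit_layers=2):
    """Map each layer name to a bit width (8 or 16).

    sensitivity: dict of layer name -> accuracy loss when run in 8-bit
                 (hypothetical scores; higher means more sensitive).
    The max_16bit_layers most sensitive layers stay at 16-bit.
    """
    ranked = sorted(sensitivity, key=sensitivity.get, reverse=True)
    keep_16bit = set(ranked[:max_16bit_layers])
    return {name: 16 if name in keep_16bit else 8 for name in sensitivity}


# Made-up sensitivity scores for a four-layer network.
scores = {"conv1": 0.9, "conv2": 0.1, "conv3": 0.05, "fc": 0.6}
plan = assign_precision(scores)
```

Here `plan` keeps `conv1` and `fc` in 16-bit and runs the remaining layers in 8-bit.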

Latency Reduction

Instead of pipelining layers across cores, the NPX architecture parallelizes each convolutional layer across multiple cores to deliver both higher throughput and lower latency.
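The difference between the two strategies can be seen in an idealized cycle-count model. The sketch assumes perfect load balancing, no communication overhead, and one pipeline stage per layer; the cycle counts are hypothetical, and real behavior on the NPX architecture will differ.

```python
def pipelined(layer_cycles):
    """Each layer runs on its own core; frames flow through the stages.

    Returns (latency, interval): cycles for one frame to pass through all
    stages, and cycles between finished frames (set by the slowest stage).
    """
    return sum(layer_cycles), max(layer_cycles)


def layer_parallel(layer_cycles, cores):
    """Every layer is split evenly across all cores, one layer at a time.

    Returns (latency, interval); they are equal because each frame
    occupies the whole machine until it is finished.
    """
    latency = sum(cycles / cores for cycles in layer_cycles)
    return latency, latency


# Hypothetical per-layer cost on a single core, in cycles.
layers = [400, 800, 400, 400]
pipe_latency, pipe_interval = pipelined(layers)
par_latency, par_interval = layer_parallel(layers, cores=4)
```

With these numbers and four cores, the pipelined mapping needs 2000 cycles per frame and finishes a frame every 800 cycles, while the layer-parallel mapping needs 500 cycles per frame and finishes a frame every 500 cycles. The unbalanced second layer limits the pipeline but not the parallel mapping.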

Power Efficiency

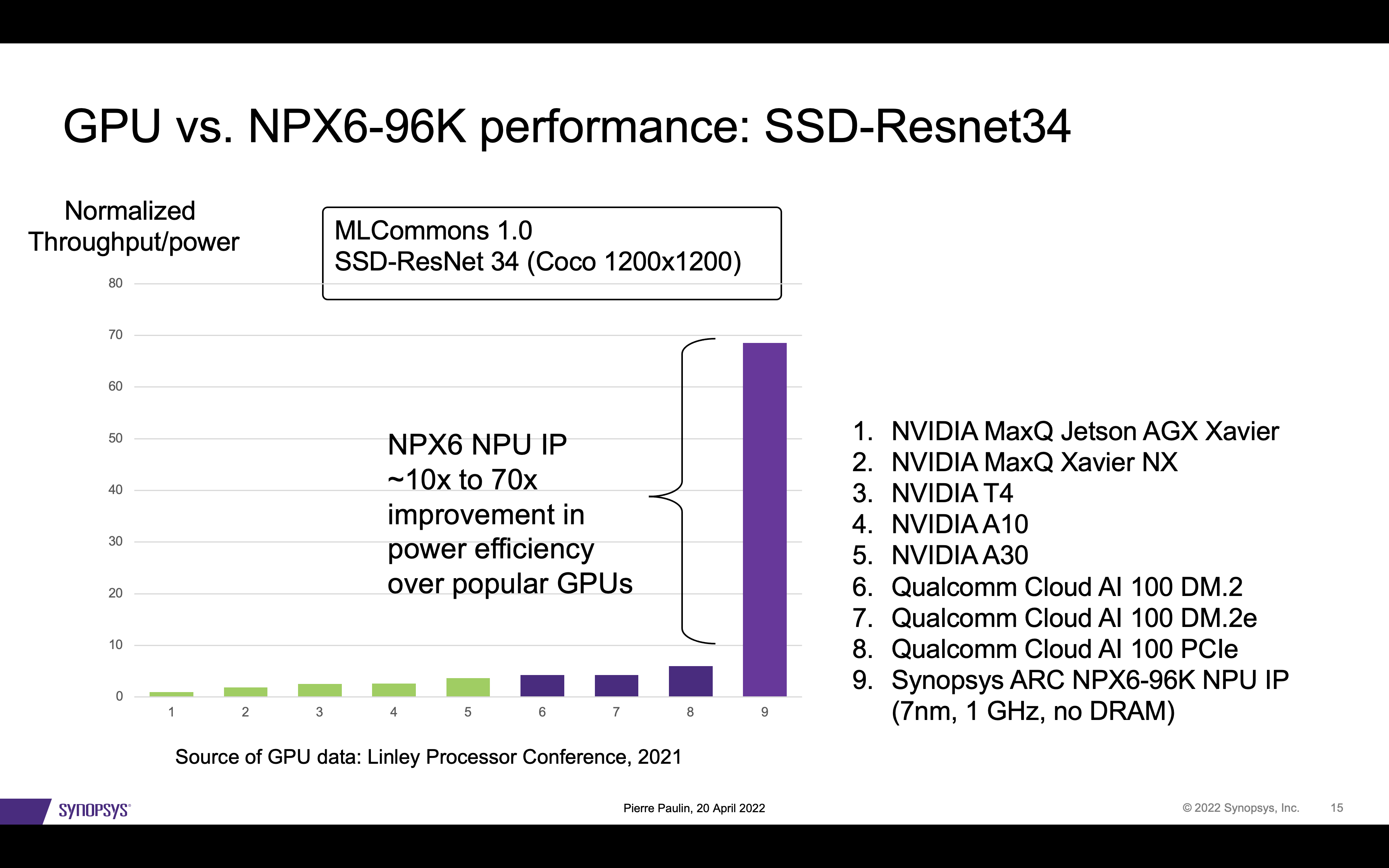

The NPX6 is able to achieve 30 TOPS/W in 5nm, which is an order of magnitude better than many solutions out there today.

Bandwidth Reduction

Even when running at over 100 TOPS, the NPX6 is able to meet its bandwidth requirement with an LPDDR4/LPDDR5-class memory interface.

Benchmark Results

Refer to the accompanying figure for performance benchmark results, which use frames per second per watt as the metric.

On-Demand Access to Pierre’s entire talk and presentation

You can listen to Pierre’s talk from here, under “Keynote and Session 1.” You will find his presentation slides here, under “Day 1 – Keynote – AM Sessions.”

