Cadence held a well-attended Automotive Summit at which it presented an overview of its automotive solutions and system enablement, alongside industry experts and established and startup companies sharing their perspectives and product features on autonomous driving, LiDAR, radar, thermal imaging, sensor imaging, and AI.

This post examines the first two sessions; the other sessions will be covered in more detail in subsequent blog posts. The Summit opened with Raja Tabet, Cadence Corporate VP of Emerging Technologies, outlining the automotive market dynamics.

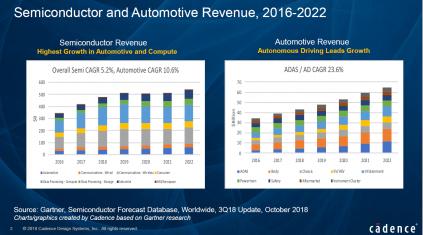

Figure 1: Semiconductor and Automotive Revenue

Tabet compared overall semiconductor revenue, growing at a 5.2% CAGR (topping $500B in 2019), with automotive semiconductor revenue, growing at a 10.6% CAGR (approaching $50B in 2019). ADAS/AD, at a 23.6% CAGR, shows the highest growth of any segment or sub-application. Tabet added that the semiconductor content per car is expected to double from 2015 to 2022 (to an expected $600 per vehicle), and that by the time 5G and Level 4 and Level 5 autonomous driving are deployed, semiconductor content will triple to $1300. This number refers to the semiconductor value, not the value of all electronic components in the car. The gap between the two is large: the cost of the electronic components is 4x to 5x the semiconductor value. Electronic components account for 30% of a car’s cost, and Tabet believes this share will keep growing at a healthy pace.
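The growth rates Tabet quoted are easier to compare as doubling times. The sketch below is a minimal illustration, assuming the standard compound-growth formula applies to each quoted CAGR; the function name `doubling_time` is hypothetical, and the output is plain arithmetic on the quoted rates, not a forecast.

```python
import math

def doubling_time(cagr: float) -> float:
    """Years for a quantity growing at a constant annual rate `cagr` to double.

    Solves (1 + cagr) ** t == 2 for t.
    """
    return math.log(2) / math.log(1 + cagr)

# CAGRs quoted in the talk
rates = {
    "Semiconductor overall": 0.052,
    "Automotive": 0.106,
    "ADAS/AD": 0.236,
}

for segment, rate in rates.items():
    print(f"{segment:>22}: {rate:.1%} CAGR doubles in ~{doubling_time(rate):.1f} years")
```

At these rates, overall semiconductor revenue doubles in roughly 13.7 years, automotive in roughly 6.9 years, and ADAS/AD in roughly 3.3 years.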

The key trends shaping the automotive industry are:

- Autonomous driving (AD/ADAS)

- Electrification

- Connectivity

- E-mobility

Security and safety span all of these trends. Electrification is the best answer to emissions and environmental concerns, while ADAS is the answer for safety, reducing fatalities and managing traffic going forward. Connectivity is a must: one cannot deliver the promise of autonomous driving without it, and all of these trends are required. Individual car ownership will diminish, replaced by specialty vehicles, e-tailing, and robo-taxis. The trends are connected and reinforce each other.

One important element is the realization that the car’s differentiation has shifted from mechanical to software. OEMs and semiconductor companies are trying to figure out what to do in software and what to do in hardware. Standards and innovation are key to the safety and security needed to fulfill the promise of mobility.

An interesting contrast exists between China’s intelligent connected vehicle, which relies on a distributed ecosystem with sensors and intelligence in the road feeding information to the car, and the US/European focus on autonomous, self-sufficient, self-contained smart cars. Tabet pointed out that although the approaches differ, the technology drivers are the same. Automotive is becoming one of the most challenging and demanding technology platforms.

The value chain is shifting. Traditionally, the OEM dealt with a Tier 1, which in turn dealt with the semiconductor supplier; for autonomous driving solutions, OEMs now also work directly with semiconductor companies, in parallel with their Tier 1s.

Currently, some backpedaling is occurring on the very aggressive Level 4 and Level 5 goals, due to initial difficulties and practical considerations: hardware with the required performance is not yet available, and software maturity and regulations still need to be in place. Today we are talking about proofs of concept (POCs). True deployment of Level 4 or 5 may not occur until 2030.

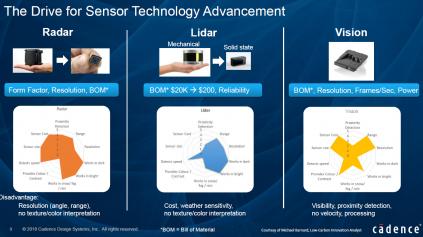

Sensor technologies can be grouped into radar, lidar, and vision categories.

The drivers are disruptions in the value chain, sensors, software, and standardization, along with optimization of form factor, cost, resolution, and power. Meeting them requires innovation across architecture, design, IP, AI, sensors, process technology, EDA tools, and flows.

Cadence’s automotive enablement consists of automotive-specific consulting and services and automotive domain-specific IP infrastructure, complemented with Cadence IP and EDA tools that help develop SoCs with multiple cores, safety islands, vision CNN subsystems, and high-speed/low-speed memory interfaces.

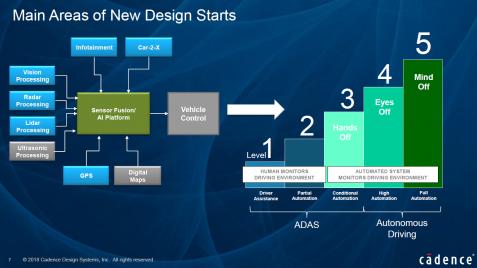

In the second session, Robert Schweiger, Director of Automotive Solutions, outlined Cadence’s system enablement. As autonomy increases, the driver becomes a passenger and passes control to a machine. Evolutionary steps are needed to enable Level 5 autonomous driving, such as improving sensor technology and the compute platform and adding more network bandwidth. Trends in transportation can be organized as follows:

- Cars/Robo-taxis: These face difficult traffic situations along with limited power and space. Today’s cars range from Level 2 to Level 3, with robo-taxis (Uber) reaching Level 4. Cars are expected to achieve L5 around or after 2027.

- Buses/Trains/Delivery Vans: These operate in restricted areas (such as hospital campuses or dedicated rail tracks). Van L5 testing is ongoing now, with train L5 testing targeted for 2023 and production around 2025.

- Trucks/Tractors: These drive in restricted areas, with little or no power/space limitations. They achieve Level 5 today; example companies include Einride, Embark, Otto, and Schenker.

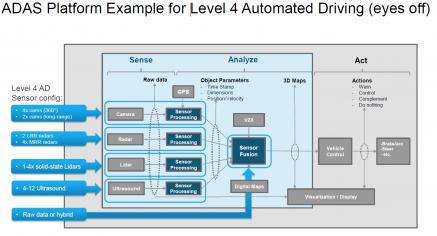

An ADAS platform example for Level 4 configuration can be illustrated as follows:

Clearly, more advanced sensor technology will be needed, such as longer-range cameras, higher resolution, and faster processing, to analyze position, velocity, and visualization for improved reaction time and accuracy.


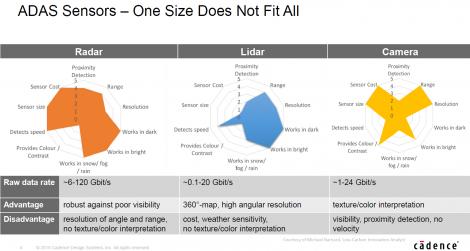

Schweiger outlined three different types of sensors along with their advantages and drawbacks. Each sensor type is scored from 0 to 5 on ten criteria according to how well it performs.

The sensor types are radar, lidar, and camera, and the criteria are: Proximity Detection, Work in Snow/Fog/Rain, Range, Color/Contrast, Resolution, Detect Speed, Work in Dark, Sensor Size, Work in Bright, and Sensor Cost.

The evolution of radar shows higher MMIC integration, progressing to on-chip antennas, digital modulation, depth, 3D (x, y, z) plus Doppler, and finally imaging and AI. Architecturally, radar processing consists of a front end, which includes antennas and MIMO arrays with FFT and beamforming hardware, and a back end, which handles image mapping with detection, estimation, tracking, and classification to create an object list with position, heading, velocity, and type.
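To make the front-end FFT stage concrete, here is a minimal sketch of a range-Doppler map, assuming an FMCW-style radar and an ideal, noise-free beat signal from a single point target. It uses a naive DFT in pure Python instead of an FFT and omits windowing, MIMO virtual arrays, beamforming, and CFAR detection; all function names are hypothetical.

```python
import cmath
import math

def dft(x):
    """Naive O(N^2) discrete Fourier transform (a real front end uses an FFT)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * k * m / n) for k in range(n))
            for m in range(n)]

def range_doppler_map(cube):
    """cube[chirp][sample] -> magnitude map rd[doppler_bin][range_bin]."""
    num_chirps, num_samples = len(cube), len(cube[0])
    # 1) Range FFT: transform the samples within each chirp (fast time).
    range_profiles = [dft(chirp) for chirp in cube]
    # 2) Doppler FFT: transform each range bin across chirps (slow time).
    rd = [[0.0] * num_samples for _ in range(num_chirps)]
    for r in range(num_samples):
        spectrum = dft([range_profiles[c][r] for c in range(num_chirps)])
        for d in range(num_chirps):
            rd[d][r] = abs(spectrum[d])
    return rd

def synthetic_target(num_chirps, num_samples, range_bin, doppler_bin):
    """Ideal beat signal of one point target: the tone frequency within a chirp
    encodes range, the phase rotation from chirp to chirp encodes radial velocity."""
    return [[cmath.exp(2j * math.pi * (range_bin * s / num_samples
                                       + doppler_bin * c / num_chirps))
             for s in range(num_samples)]
            for c in range(num_chirps)]

def strongest_cell(rd):
    """Return (doppler_bin, range_bin) of the largest magnitude in the map."""
    _, d, r = max((val, d, r) for d, row in enumerate(rd) for r, val in enumerate(row))
    return d, r

cube = synthetic_target(num_chirps=8, num_samples=16, range_bin=5, doppler_bin=3)
print(strongest_cell(range_doppler_map(cube)))  # (3, 5)
```

Replacing `dft` with an FFT (or a hardware FFT block) changes the cost, not the result; the peak cell in the map is what the back end turns into a detection.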

Some considerations:

- The front end is compute-intensive, and customers often have their own hardware. Raw data rates range from 28 to 120 GB/sec with 16-bit fixed point (FXP).

- The detection of multiple moving objects is compute intensive.

- The estimation, tracking, and classification are where most customers need programmability

- Linear algebra is required in estimation/back-end and optimized libraries such as BLAS or LAPACK are available

- Tracking and classification often demand the most MACs/cycle, and may run on a separate processor that also performs sensor fusion with other inputs.

- Classification is now turning to neural networks in ADAS

- Consumer radar for gesture/occupancy detection often uses a single DSP for both front and back ends, as data rates and accuracy requirements are often lower. The object data rate varies from 10 to 100 kB/sec.

Cadence has a smart radar sensor implementation for 16- and 32-bit floating point (FLP), as well as an AI processing implementation for tracking and classification.
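The estimation and tracking stage is where the linear algebra mentioned above comes in. As a minimal sketch, assuming a single object moving along one axis at constant velocity with position-only measurements, here is a textbook 1-D Kalman filter written out in plain Python. A production radar tracker would track multi-dimensional states with matrix libraries (such as BLAS/LAPACK-backed routines), handle many objects, and associate detections with tracks; the function name and noise parameters are hypothetical.

```python
def kalman_cv_1d(measurements, dt=1.0, meas_var=1.0, proc_var=1e-4):
    """1-D constant-velocity Kalman filter.

    State x = [position, velocity]; only position is measured.
    Returns the list of (position, velocity) estimates after each update.
    """
    p, v = 0.0, 0.0                      # initial state guess
    P = [[100.0, 0.0], [0.0, 100.0]]     # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict: x = F x, P = F P F^T + Q, with F = [[1, dt], [0, 1]], Q = proc_var * I
        p = p + dt * v
        a, b, d = P[0][0], P[0][1], P[1][1]
        P = [[a + 2 * dt * b + dt * dt * d + proc_var, b + dt * d],
             [b + dt * d, d + proc_var]]
        # Update with H = [1, 0]
        s = P[0][0] + meas_var               # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
        y = z - p                            # innovation (measurement residual)
        p, v = p + k0 * y, v + k1 * y
        # P = (I - K H) P
        P = [[P[0][0] - k0 * P[0][0], P[0][1] - k0 * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
        estimates.append((p, v))
    return estimates

# Target moving 2 m per time step; the filter starts by assuming it is stationary
track = kalman_cv_1d([2.0 * k for k in range(50)])
print(track[-1])
```

Even though the filter starts with a velocity guess of zero, its estimate converges to about 2 m per step within a few updates, illustrating how tracking recovers velocity from position measurements alone.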

Another trend in radar is the up-integration of expensive MMICs, reducing them to either a single-chip CMOS solution or a SiP solution using SiGe technology for RF and CMOS for the digital part.

Cadence now has a new Virtuoso RF Solution for RFIC and RF module design, with the following features to address the increasing complexity of RF modules containing multiple IC technologies (CMOS, SOI, SiGe, GaAs, GaN):

- RF module and RFIC co-design environment

- Multi-PDK support and simultaneous editing across multiple technologies

- Single ‘golden’ schematic for implementation, verification, and electro-magnetic analysis

- Seamless integration of electro-magnetic extraction and analysis solutions

- Interoperability with Cadence SiP

Moving to lidar technology, there are more than 80 companies with lidar sensors. Because current solutions are bulky, the only viable approach will be solid-state lidar, which is where most design activity is occurring. There are two popular solutions: flash lidar, which illuminates the scene in front of the vehicle with multiple beams at once, and scanning lidar, which steers the laser beam with MEMS mirrors to detect the surroundings. Lidar still faces challenges: 3D resolution is quite coarse, and the faster the vehicle goes, the higher the resolution and frame rate it needs, which drives up peak power consumption (flash lidar power is also high). The Audi A8 is the first production car with a single lidar sensor, but eventually people will implement a 480-degree net of lidar sensors.
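The link between vehicle speed, frame rate, and resolution is simple geometry. The helper functions below are hypothetical, and the speeds, frame rate, and angular resolution are illustrative assumptions rather than figures from the talk; they show how far the car travels between frames and how sparse the point cloud becomes at long range.

```python
import math

def metres_per_frame(speed_kmh: float, frame_rate_hz: float) -> float:
    """Distance the vehicle travels between two consecutive lidar frames."""
    return speed_kmh / 3.6 / frame_rate_hz

def point_spacing(range_m: float, angular_res_deg: float) -> float:
    """Approximate gap between adjacent lidar points at a given range
    (arc length = range * angle in radians, valid for small angles)."""
    return range_m * math.radians(angular_res_deg)

# Hypothetical operating points, for illustration only
for speed in (50, 130):
    print(f"{speed} km/h at 10 Hz: {metres_per_frame(speed, 10):.2f} m per frame")
for rng in (50, 200):
    print(f"0.2 deg resolution at {rng} m: {point_spacing(rng, 0.2):.2f} m between points")
```

At 130 km/h the car moves about 3.6 m between 10 Hz frames, and at 200 m a 0.2 degree resolution leaves about 0.7 m between points, so a pedestrian-sized object gets at most one point per scan line. Higher speeds therefore push toward both higher frame rates and finer resolution.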

The Virtuoso System Design Platform merges chip, package, and board analysis into a unified design tool and provides a central cockpit to the designer for system integration. In addition, Virtuoso provides interfaces to other tools to enable silicon photonics and MEMS co-design.

The Virtuoso System Design Platform can be used as a single cockpit for multi-technology simulations, package design with Allegro, and Sigrity power integrity analysis of package and board.

Following the lidar outline, Schweiger moved to vision, including the real-time embedded vision AI pipeline where intelligence and programmability meet, with strides in recognition already occurring.

Today’s camera-based ADAS systems in production cars mainly use classic vision algorithms, for example, for object detection. In the future, however, we will see more AI-based ADAS systems, which require much higher performance. A DSP with a limited instruction set and VLIW processing capabilities is well suited to pixel processing and sensor processing using classic imaging or AI methods. Highly energy-efficient vision DSPs offer 4X to 100X the performance of traditional mobile CPU-plus-GPU systems for a variety of pixel operations, at a fraction of the power/energy, enabling in-camera computer vision.

Tensilica processors for AI provide scalable, high-performance, low-power multi-core solutions, with the application-specific Tensilica Vision P6 DSP and the Tensilica AI DSPs, including the Tensilica C5 DSP and the DNA 100 processor (DNA stands for Deep Neural-network Accelerator).

ADAS performance requirements range from 0.25 to 4 TMAC/s (*), which can be addressed by both Tensilica application-specific DSPs and Tensilica AI DSPs.

As we move from ADAS to autonomous cars with on-device neural network inference, performance requirements range from tens to hundreds of TMAC/s, met with scalable DSP clusters. In vision, the same task (for instance, pedestrian detection) can be performed in two different ways, using classic imaging algorithms or an AI-based approach, and the results compared. Currently, verifying an AI-based system is an unsolved problem: complete verification is not possible because the system’s behavior depends heavily on the dataset the network was trained on.

Back in September, Cadence announced the Tensilica DNA 100, a deep neural network accelerator whose performance scales from 0.5 up to 12 TMAC/s. A scalable, low-power, high-performance processor is exactly what is needed, because these devices are placed behind mirrors and must be low power. Cadence also has an automated neural network flow: the Xtensa Neural Network Compiler (XNNC) can take input directly from a Caffe- or TensorFlow-based environment and optimize and map it to the Tensilica hardware platform.
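To see where TMAC/s figures like these come from, the MAC count of a convolutional network can be estimated layer by layer and multiplied by the frame rate and the number of cameras. The sketch below assumes standard dense convolutions and 100% hardware utilization; the function names, the layer shape, and the 5 GMAC-per-frame network are hypothetical examples, not workloads from the talk.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulates for one standard k x k convolution layer
    (each output element needs c_in * k * k MACs; bias and activation ignored)."""
    return h_out * w_out * c_out * c_in * k * k

def required_tmacs(macs_per_frame, fps, num_cameras=1):
    """Sustained throughput in TMAC/s needed to run the network on every frame."""
    return macs_per_frame * fps * num_cameras / 1e12

# One 3x3 layer on a 56x56x64 feature map producing 64 channels
layer = conv2d_macs(56, 56, 64, 64, 3)
print(f"{layer / 1e6:.1f} M MACs")                 # 115.6 M MACs

# Hypothetical network: 5 GMAC per frame, 30 fps, 4 cameras
print(f"{required_tmacs(5e9, 30, 4):.2f} TMAC/s")  # 0.60 TMAC/s
```

Because real hardware rarely sustains its peak rate, a deployment needs headroom above an estimate like this one.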

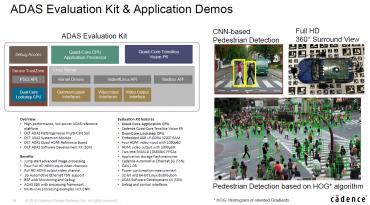

Schweiger described a next-generation ADAS vision development platform and an ADAS evaluation kit with application demos, shown above. RTL code can be licensed and modified as needed to reduce time-to-market. A pedestrian detector based on histograms of oriented gradients (HOG) was illustrated. He also described Autoware, an open-source autonomous driving software stack from Nagoya University that provides:

- Recognition that includes object recognition, sensor fusion, and location estimation

- Decision that includes path generation, behavior decision, and navigation

- Control of motors and actuators
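The HOG pedestrian detector mentioned above is a classic, non-AI vision pipeline. Its core step can be sketched as follows: compute image gradients and accumulate a magnitude-weighted histogram of unsigned gradient orientations for one cell. This is a simplified illustration, assuming a grayscale image given as a list of rows, 9 orientation bins over 0 to 180 degrees, and hard binning. The full method of Dalal and Triggs also interpolates votes between bins, normalizes histograms over overlapping blocks, and feeds the descriptor to a linear SVM. The function name is hypothetical.

```python
import math

def cell_orientation_histogram(img, num_bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 deg)
    over the interior pixels of a grayscale cell given as a list of rows."""
    hist = [0.0] * num_bins
    bin_width = 180.0 / num_bins
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            # Centered differences, the [-1, 0, 1] kernel used by HOG
            gx = img[y][x + 1] - img[y][x - 1]
            gy = img[y + 1][x] - img[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned orientation
            hist[int(angle // bin_width) % num_bins] += magnitude
    return hist

# An 8x8 cell whose brightness increases left to right (a vertical edge)
ramp = [[10 * x for x in range(8)] for _ in range(8)]
print(cell_orientation_histogram(ramp))  # all 720.0 of the weight lands in bin 0
```

This kind of regular per-pixel arithmetic over small neighborhoods is the workload that vision DSPs are designed to accelerate.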

Regarding functional safety, Cadence has spent a lot of effort to achieve ISO 26262 safety compliance for its EDA tools and IP. Safety manuals have been created that design teams can use for customer products. For example, the Tensilica development environment is safety certified up to ASIL-D. To verify the safety level of a chip, Cadence provides a safety verification solution based on Incisive, in which you can inject faults and measure the diagnostic coverage of the system. All of these were certified by TÜV SÜD or SGS-TÜV Saar, the leading German assessors.

In summary, the revolution in automotive is ongoing, with Cadence being a broad-based partner for automotive system design enablement and offering a strong ADAS portfolio for high-performance, low-power SoCs through:

- Tensilica, the common acceleration platform for ADAS

- Dedicated neural network processor cores including a neural network compiler

- ADAS rapid prototyping system including quad-core Tensilica Vision P6 and SDK

ISO 26262 safety compliance can be accomplished with:

- Tensilica processor certified ASIL B(D)-ready as safety element out of context (SEooC)

- Available hardware safety kit for Tensilica

- Validated design flows, tools, and IP for ISO 26262 compliant product design and certification
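The fault-injection approach described above boils down to a simple ratio: of the faults injected, what fraction does the safety mechanism detect? The toy campaign below illustrates that ratio, assuming a data word protected by a single even-parity bit as the safety mechanism and exhaustive injection of bit flips. It is a hypothetical sketch, not the Incisive-based flow, and a real ISO 26262 analysis uses more detailed metrics such as the single-point and latent fault metrics.

```python
from itertools import combinations

def parity(bits):
    """Even-parity bit: 1 if the number of ones is odd."""
    return sum(bits) % 2

def encode(data):
    """Append a parity bit so the protected word has an even number of ones."""
    return data + [parity(data)]

def error_detected(word):
    """Safety mechanism: flag the word if its overall parity is odd."""
    return parity(word) == 1

def diagnostic_coverage(data, max_flips):
    """Inject every combination of 1..max_flips bit flips and return the fraction
    of injected faults that the parity check detects."""
    word = encode(data)
    injected = detected = 0
    for n in range(1, max_flips + 1):
        for positions in combinations(range(len(word)), n):
            faulty = list(word)
            for pos in positions:
                faulty[pos] ^= 1          # flip one bit
            injected += 1
            detected += error_detected(faulty)
    return detected / injected

data = [1, 0, 1, 1, 0, 0, 1, 0]
print(diagnostic_coverage(data, max_flips=1))  # 1.0: every single-bit flip is caught
print(diagnostic_coverage(data, max_flips=2))  # 0.2: no double-bit flip is caught
```

Parity catches all 9 single-bit faults but none of the 36 double-bit faults, so the measured coverage depends heavily on the fault model used in the campaign.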

---

Note (*): This is the range Cadence believes to be required for ADAS applications, not the range of their DSPs. A single DNA 100 scales up to 12 effective TMAC/s.

Read more here: Automotive Summit 2018 Proceedings
