Custom AI acceleration continues to gather steam. In the cloud, Alibaba has launched its own custom accelerator, following Amazon and Google. Facebook is in the game too, and Microsoft has a significant stake in Graphcore. Intel/Mobileye have a strong lock on edge AI in cars, and wireless infrastructure builders are adding AI capabilities to small cells and base stations for 5G. All of these applications depend on a lot of flexibility and future-proofing to stay relevant in rapidly evolving environments.

But there are many applications, probably accounting for the great majority of units, for which power, cost or transparent use models are much more important metrics. Think of an agricultural monitor in a field in the middle of nowhere, a microwave voice controller, or traffic sensors distributed across a large city. For these, a general-purpose solution, even a general-purpose AI solution, may be overkill. An application-specific AI function would be much more compelling.

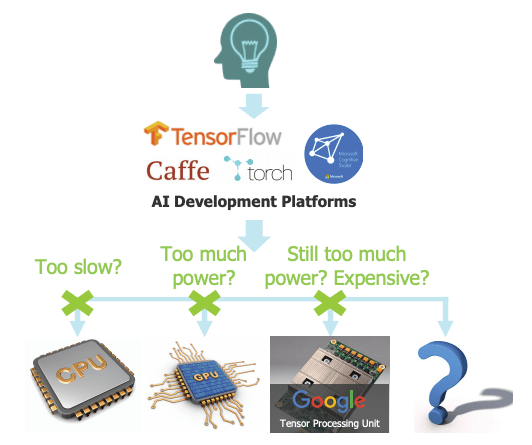

In pre-AI times, you would immediately think of a hardware accelerator – some function that would do whatever it had to do, but much faster than a software equivalent running on the CPU. That’s pretty much what an AI accelerator does. It may still be software driven, but not in the same way as a general-purpose CPU. Software is developed in Python on a big platform such as TensorFlow or Torch, then compiled through multiple steps onto the target accelerator.

Therein lies the magic. That accelerator can be as wild as you want it to be, as long as it stays within the general bounds of a neural net architecture. It may support multiple convolution engines, backed by SRAM shared across the accelerator as a whole, along with local memories to optimize access for a preferred ordering of operations.

It may support specialized functions for common operations such as pooling. For speed and power, it will commonly support different word widths at different stages of inference, along with specialized optimizations for handling sparse arrays. Both are hot areas of innovation in neural net architectures, with some architects even experimenting with single-bit weights – if a weight can only be 1 or 0, you don’t need multiplication in convolution and sparseness increases!
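To make the single-bit point concrete, here is a minimal sketch in plain Python. It is an illustration only, not taken from any particular accelerator: the function names, the 1-D signal and the weights are all hypothetical. It compares a conventional multiply-accumulate convolution with a version that, for 0/1 weights, only adds the inputs under the active taps and skips zero taps entirely.

```python
def conv1d_multiply(signal, weights):
    """Reference 1-D convolution (valid mode, no kernel flip) using multiplies."""
    k = len(weights)
    return [
        sum(signal[i + j] * weights[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def conv1d_binary(signal, weights):
    """Same result for 0/1 weights, using additions only."""
    if any(w not in (0, 1) for w in weights):
        raise ValueError("weights must be 0 or 1")
    # Only taps with weight 1 contribute; zero taps are skipped (sparsity).
    active_taps = [j for j, w in enumerate(weights) if w == 1]
    k = len(weights)
    return [
        sum(signal[i + j] for j in active_taps)
        for i in range(len(signal) - k + 1)
    ]


# Hypothetical example data, chosen only for illustration.
signal = [3, 1, 4, 1, 5, 9, 2, 6]
weights = [1, 0, 1]

reference = conv1d_multiply(signal, weights)
binary = conv1d_binary(signal, weights)
print(reference)  # [7, 2, 9, 10, 7, 15]
print(binary == reference)  # True
```

In hardware terms, the second version needs adders but no multipliers, and the zero tap costs neither a memory read nor an arithmetic operation.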

The challenge in all of this is that you have so many knobs to turn that it becomes difficult to know where to start, or whether you have really explored the full space of possibilities when you want to commit to a final architecture. Compounding the problem, you need to test and characterize over a wide range of large test cases – big images, speech samples and so on.
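A rough sense of how quickly the space grows can be had by simply enumerating it. The sketch below is illustrative only: the knob names and their candidate values are assumptions made up for this example, not figures from any real design.

```python
from itertools import product

# Hypothetical knobs and candidate values, chosen only to show the combinatorics.
knobs = {
    "conv_engines": [1, 2, 4, 8],
    "local_mem_kib": [4, 8, 16, 32],
    "weight_bits": [1, 2, 4, 8, 16],
    "activation_bits": [4, 8, 16],
    "sparse_skip": [False, True],
}


def enumerate_configs(knobs):
    """Yield every combination of knob settings as a dict."""
    names = list(knobs)
    for values in product(*(knobs[name] for name in names)):
        yield dict(zip(names, values))


configs = list(enumerate_configs(knobs))
total = len(configs)
print(total)  # 480 candidate architectures from just five knobs

# Assumed size of a characterization suite (images, speech samples, ...).
test_cases = 1000
print(total * test_cases)  # 480000 simulation runs for an exhaustive sweep
```

Even this small, made-up set of options yields hundreds of candidate architectures, each of which would have to be run against the full test suite.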

Running the majority of your testing in C rather than RTL is just common sense since it will run orders of magnitude faster and it’s easier to tune than the RTL. Also, neural net algorithms map well through high-level synthesis (HLS), so your C model can be more than a model – it can be the implementation from which you generate the RTL. You can explore the power, performance and area implications of choices you are considering – multiple convolution processors, local memories, word widths, broadcast updates. All with a fast turn-around time, allowing you to more fully explore the range of possible optimizations.
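As a flavor of the kind of experiment a fast high-level model makes cheap, the sketch below sweeps weight word width and measures the error against a full-precision reference. It is written in Python purely for brevity; an HLS flow would express the same idea in C or C++ with bit-accurate types. The fixed-point format, the helper names and the data are all assumptions for illustration.

```python
def quantize(value, bits, frac_bits):
    """Round to signed fixed point with `bits` total bits, saturating on overflow."""
    scale = 1 << frac_bits
    lo = -(1 << (bits - 1))
    hi = (1 << (bits - 1)) - 1
    code = max(lo, min(hi, round(value * scale)))
    return code / scale


def dot(a, b):
    """Plain dot product, standing in for one output of a convolution."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical weights and inputs, chosen only for illustration.
weights = [0.5, -0.375, 0.125, 0.78125]
inputs = [1.0, 2.0, -1.0, 0.5]
reference = dot(weights, inputs)

errors = {}
for bits in (2, 4, 8):
    frac_bits = bits - 1  # assumed format: one sign bit, the rest fractional
    q_weights = [quantize(w, bits, frac_bits) for w in weights]
    errors[bits] = abs(dot(q_weights, inputs) - reference)

for bits, err in errors.items():
    print(bits, err)
# 2 0.265625
# 4 0.015625
# 8 0.0
```

Narrower words save area and power but cost accuracy; a sweep like this, repeated over realistic test data, is how you would find the narrowest width that still meets your accuracy target.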

Mentor has just released a white paper with a nice intro on some of the architectural tradeoffs in building such accelerators. You can register to get the paper HERE.
