Block floating point (BFP) has been around for a while but is only now starting to be seen as a very useful technique for performing machine learning operations. It’s worth pointing out up front that bfloat is not the same thing. BFP combines the efficiency of fixed-point operations with the dynamic range of full floating… Read More

Thermal Reliability Challenges in Automotive and Data Center Applications – A Xilinx Perspective

I wrote recently on ANSYS and TSMC’s joint work on thermal reliability workflows, as these become much more important in advanced processes and packaging. Xilinx provided their own perspective on thermal reliability analysis for their unquestionably large systems – SoC, memory, SERDES and high-speed I/O – stacked within a … Read More

WEBINAR: Prototyping With Intel’s New 80M Gate FPGA

The next generation FPGAs have been announced, and they are BIG! Intel is shipping its Stratix 10 GX 10M FPGA, and Xilinx has announced its VU19P FPGA for general availability in the Fall of next year. The former is expected to support about 80M ASIC gates, and the latter about 50M ASIC gates. And, to bring this mind-boggling gate… Read More

Network on Chip Brings Big Benefits to FPGAs

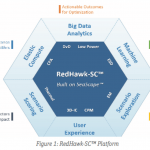

The conventional thinking about programmable solutions such as FPGAs is that you have to be willing to make a lot of trade-offs for their flexibility. This has certainly been the case in many instances. Even just getting data across the chip can eat up valuable routing resources and add a lot of overhead. These problems are exacerbated… Read More

New Generation of FPGA Based Distributed Accelerator Cards Offer High Performance and Adaptability

We have learned from nature that two characteristics are helpful for success: diversity and adaptability. The same has been shown to be true for computing systems. Things have come a long way from when CPU-centric computing was the only choice. Much heavy lifting these days is done by GPUs, ASICs, and FPGAs, with CPUs in a support … Read More

Achronix Announces New Accelerator Card at Linley Fall Processor Conference – VectorPath

This is my third blog from this year’s Linley Fall Processor Conference. The first two blogs focused on edge inference solutions. Achronix’s discussion was much broader than just AI/ML; it was about where FPGAs have been going and culminated with a product announcement preview. I’ll get to the announcement in a moment, … Read More

Design Perspectives on Intermittent Faults

Bugs are an inescapable reality in any but the most trivial designs and usually trace back to very deterministic causes – a misunderstanding of the intended spec or an incompletely thought-through implementation of some feature, either way leading to reliably reproducible failure under the right circumstances. You run diagnostics,… Read More

Acceleration in a Heterogeneous Compute Environment

Heterogeneous compute isn’t a new concept. We’ve had it in phones and datacenters for quite a while – CPUs complemented by GPUs, DSPs and perhaps other specialized processors. But each of these compute engines has a very specific role, each driven by its own software (or training in the case of AI accelerators). You write software… Read More

Xilinx on ANSYS Elastic Compute for Timing and EM/IR

I’m a fan of getting customer reality checks on advanced design technologies. This is not so much because vendors put the best possible spin on their product capabilities; of course they do (within reason), as does every other company aiming to stay in business. But application by customers on real designs often shows lower performance,… Read More

Tortuga Webinar: Ensuring System Level Security Through HW/SW Verification

We all know (I hope) that security is important, so we’re willing to invest time and money in this area, but there are a couple of problems. First, there’s no point in making your design secure if it’s not competitive, and making it competitive is hard enough, so the great majority of resource and investment is going to go into that objective.… Read More

The Name Changes but the Vision Remains the Same – ESD Alliance Through the Years